Review of Multimodal Technologies: ViT Series (ViT, Pix2Struct, FlexiViT, NaViT)

Last Updated on September 17, 2025 by Editorial Team

Author(s): tangbasky

Originally published on Towards AI.

In the computer vision domain, CNNs have long been the dominant framework for understanding visual features. In contrast, the transformer framework has achieved great success in the NLP domain, which has encouraged some researchers to explore its potential in computer vision tasks. The emergence of ViT marks a remarkable leap for the application of transformers in the CV domain. Currently, the mainstream framework for multimodal models adopts a Large Language Model as its backbone: non-text data is converted into tokens and fed into the LLM alongside text tokens. Given that ViT is naturally capable of processing visual data, it has widespread application in multimodal domains.

ViT, which adopts the transformer framework, primarily functions as a visual feature encoder — analogous to the tokenizer in the NLP domain — and it converts images into token sequences.

ViT (Vision Transformer)

Simply put, ViT divides an image into multiple patches, and each patch is then converted into a token through a linear projection. Next, all these tokens are concatenated into a sequence, and thus the image is transformed from the (H, W, C) format into the (S, D) sequence format, where S denotes the number of tokens (sequence length) and D denotes the dimension of each token.

For an image, it will go through 4 stages to be converted into a ViT feature representation:

(1). The image is resized to a specified resolution.

(2). The image is split into multiple patches according to the pre-defined patch size.

(3). These patch blocks are mapped to token embeddings through a linear transformation layer.

(4). Positional encodings are added to the token embeddings, and the result is fed into the Transformer to generate the image feature representation sequence.

Note: an animated diagram of this flow is available at: https://github.com/lucidrains/vit-pytorch/blob/main/images/vit.gif

The code implementation is detailed below:

# Source code address:
# https://github.com/lucidrains/vit-pytorch/blob/main/vit_pytorch/vit.py
# Core code block (excerpt; `pair` and `Transformer` are defined in the same source file)
import torch
from torch import nn
from einops import repeat
from einops.layers.torch import Rearrange

class ViT(nn.Module):
    def __init__(self, *, image_size, patch_size, num_classes, dim, depth, heads, mlp_dim, pool = 'cls', channels = 3, dim_head = 64, dropout = 0., emb_dropout = 0.):
        super().__init__()
        image_height, image_width = pair(image_size)
        patch_height, patch_width = pair(patch_size)
        num_patches = (image_height // patch_height) * (image_width // patch_width)
        patch_dim = channels * patch_height * patch_width

        self.to_patch_embedding = nn.Sequential(
            Rearrange('b c (h p1) (w p2) -> b (h w) (p1 p2 c)', p1 = patch_height, p2 = patch_width),
            nn.LayerNorm(patch_dim),
            nn.Linear(patch_dim, dim),
            nn.LayerNorm(dim),
        )
        # One extra position is reserved for the class token
        self.pos_embedding = nn.Parameter(torch.randn(1, num_patches + 1, dim))
        self.cls_token = nn.Parameter(torch.randn(1, 1, dim))
        self.transformer = Transformer(dim, depth, heads, dim_head, mlp_dim, dropout)

    def forward(self, img):
        ########
        # 1. Patchify the image: rearrange it to (b, h*w, p1*p2*c), then project it
        #    into text-like sequence data with dimensions (B, S, D) = (b, h*w, dim)
        ########
        x = self.to_patch_embedding(img)
        b, n, _ = x.shape

        # Prepend the class token, so the sequence length becomes n + 1
        cls_tokens = repeat(self.cls_token, '1 1 d -> b 1 d', b = b)
        x = torch.cat((cls_tokens, x), dim=1)

        ########
        # 2. Add the learnable 1D positional embedding
        ########
        x += self.pos_embedding[:, :(n + 1)]

        ########
        # 3. Input to Transformer to generate the final sequence representation
        ########
        x = self.transformer(x)
        ......

From the above code, each patch is mapped to a vector by the patch embedding layer: the pixels of a patch are flattened, normalized, and passed through a single linear layer followed by another LayerNorm. The details are shown in Figure 2.

In other words, “AN IMAGE IS WORTH 16X16 WORDS”: each patch can be regarded as a word of the image. However, flattening all pixels of a patch (16 × 16 × 3 = 768 values) into a token vector is straightforward but oversimplified, as it does not explicitly capture the spatial structure inside the patch.

By examining the source code of multimodal models, we find that most ViT implementations use a convolution to extract token embeddings instead of explicitly flattening patches. With the kernel size and stride both equal to the patch size, the convolution applies one learned filter per output channel to each patch, which keeps the 2D layout of the patch explicit in the weights, as illustrated in the implementation of Qwen-VL:

class VisionTransformer(nn.Module):
    def __init__(...):
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=width, kernel_size=patch_size, stride=patch_size, bias=False)

    def forward(self, x: torch.Tensor):
        ########
        # 1: Map an image from [H, W, C] = [448, 448, 3] to [width, grid, grid] = [1664, 32, 32] using convolution kernels
        ########
        x = self.conv1(x)  # shape = [*, width, grid, grid]

        ########
        # 2: Flatten the image in row-major order, mapping [width, grid, grid] to a 2D sequence [grid * grid, width]
        ########
        x = x.reshape(x.shape[0], x.shape[1], -1)  # shape = [*, width, grid ** 2]
        x = x.permute(0, 2, 1)  # shape = [*, grid ** 2, width]

        ########
        # 3: Add positional encoding and feed into the transformer model
        ########
        x = x + get_abs_pos(self.positional_embedding, x.size(1))
        x = self.transformer(x)
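To make the shapes in the convolutional patch embedding concrete, here is a minimal NumPy sketch (not the Qwen-VL code). It writes the strided convolution as explicit loops and compares it with flattening each patch and applying one linear projection. The tiny sizes and the variable names are assumptions chosen for illustration; under the stated setting (kernel size equal to stride, no padding, no bias) the two routes should produce the same token sequence.

```python
import numpy as np

rng = np.random.default_rng(0)
c, size, p, width = 3, 8, 4, 5                  # tiny illustrative sizes (assumed)
grid = size // p
img = rng.standard_normal((c, size, size))      # channels-first, like a Conv2d input
kernel = rng.standard_normal((width, c, p, p))  # Conv2d weight layout: (out, in, kh, kw)

# (a) Strided convolution written as explicit loops (kernel_size = stride = p, no bias).
conv_out = np.zeros((width, grid, grid))
for i in range(grid):
    for j in range(grid):
        window = img[:, i * p:(i + 1) * p, j * p:(j + 1) * p]     # (c, p, p)
        conv_out[:, i, j] = np.tensordot(kernel, window, axes=3)  # (width,)
tokens_conv = conv_out.reshape(width, -1).T     # (grid * grid, width), row-major order

# (b) Flatten each patch, then apply one linear projection with the same weights.
patches = img.reshape(c, grid, p, grid, p).transpose(1, 3, 0, 2, 4)  # (grid, grid, c, p, p)
patches = patches.reshape(grid * grid, c * p * p)
tokens_linear = patches @ kernel.reshape(width, -1).T

print(tokens_conv.shape)                         # (4, 5)
print(np.allclose(tokens_conv, tokens_linear))   # True
```

In practice the convolutional form is a convenient way to run this per-patch projection over the whole image in a single call.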

Now, we assume an image (x) is loaded into a computer with its shape being (h, w, c) where h denotes the height, w denotes the width and c denotes the number of channels.

- Patchification

Firstly, ViT processes x \in R^{h×w×c} into a patch sequence of length s. Each element of this sequence is a patch x_{i} \in R^{p×p×c}, where p is the patch size and s = ⌊h/p⌋ × ⌊w/p⌋ is the sequence length.

- Patch embedding

For each patch x_{i} \in R^{p×p×c}, we first flatten it into a 1D vector using vec(·), typically via row-major flattening. Next, we map this flattened vector to a d-dimensional embedding vector using a linear transformation. Specifically, let W \in R^{d×p×p×c} be the learnable weights, viewed as a d × (p·p·c) matrix; x_{i} is mapped to a d-dimensional vector via the matrix-vector multiplication e_{i} = W vec(x_{i}).

- Position vector

Finally, a positional vector π_{i} is added to each patch embedding (denoted e_{i} here) to obtain the token t_{i} = e_{i} + π_{i}, which is fed into the Transformer.

Here π_{i} is the learnable positional encoding, typically a 2D positional encoding because it represents the spatial layout better.
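The three steps above (patchification, patch embedding, position vector) can be sketched in a few lines of NumPy. This is only an illustration under assumed sizes (a 224 × 224 × 3 image, p = 16, d = 768); the random arrays `W` and `pos` stand in for parameters that would be learned in a real model, and `patchify` is a hypothetical helper name.

```python
import numpy as np

def patchify(image: np.ndarray, p: int) -> np.ndarray:
    """Split an (h, w, c) image into row-major patches, each flattened to p*p*c values."""
    h, w, c = image.shape
    gh, gw = h // p, w // p                      # number of patches along height / width
    image = image[: gh * p, : gw * p]            # drop remainder pixels, if any
    patches = image.reshape(gh, p, gw, p, c)     # (gh, p, gw, p, c)
    patches = patches.transpose(0, 2, 1, 3, 4)   # (gh, gw, p, p, c)
    return patches.reshape(gh * gw, p * p * c)   # (s, p*p*c)

rng = np.random.default_rng(0)
h, w, c, p, d = 224, 224, 3, 16, 768             # illustrative sizes (assumed)
image = rng.standard_normal((h, w, c))

patches = patchify(image, p)                              # vec(x_i) for every patch
W = rng.standard_normal((d, p * p * c)) * 0.02            # stand-in for the learnable projection
pos = rng.standard_normal((patches.shape[0], d)) * 0.02   # stand-in for the learnable pi_i

tokens = patches @ W.T + pos                     # t_i = W vec(x_i) + pi_i
print(patches.shape, tokens.shape)               # (196, 768) (196, 768)
```

Here s = (224 / 16)² = 196, and each flattened patch happens to have 16 · 16 · 3 = 768 values, the same as the chosen embedding dimension.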

Advantages of ViT

- Scalability

Because ViT adopts the Transformer framework, it exhibits remarkable scalability. This means that as the number of model parameters and the data size increase, the model’s capacity improves accordingly.

- Visual tokenization

ViT tokenizes visual features and integrates them with text features into the model.

- Mature utilization ecosystem

The ViT model series has developed a mature utilization ecosystem. Many well-performing models built upon ViT are ready-to-use.

Disadvantages of ViT

- Fixed resolution images

The standard ViT requires every input image to be brought to one unified resolution. This necessitates resizing the image in advance, which may distort the image (with subsequent information loss) and lead the model to form a misleading perception of it. For example, an image with 200×800 resolution is resized to the pre-defined resolution 224×224: a common implementation simply forces the aspect ratio to 1:1, producing a square image in which the content is stretched by different factors along the two axes.

- Single patch size-based patching processing

It has been observed that the smaller the patch size, the better the model performance, but the slower the processing speed. In contrast, the larger the patch size, the worse the model performance, but the faster the processing speed. The flexibility in patch size configuration is important for the model efficiency.

In Figure 4, we observe that a standard ViT trained with a single patch size (e.g., ViT-B/16 or ViT-B/30) performs noticeably worse when it is evaluated at a patch size other than the one it was trained with. Therefore, the patch size of a standard ViT cannot be flexibly chosen to trade off model performance against efficiency.

- Others

Some researchers have also proposed other shortcomings of ViT:

(1). The modeling approach of ViT seriously destroys the original spatial structure of images (i.e., flattening pixels within a patch and expanding feature sequences in row-major order)

Fortunately, these issues have been addressed through convolution within a patch and 2D positional encoding.

(2). ViT requires more computational resources than CNNs.

However, in the era of LLMs, computational resources are sufficient to support the training for ViT.

In conclusion, ViT currently still faces two key limitations:

(1). Fixed image resolution.

(2). Fixed patch size.

To address these two issues, we introduce three improved algorithms: Pix2Struct, FlexiViT and NaViT.

Pix2Struct

The main contribution of Pix2Struct is that, based on ViT, it introduces an adjustable-resolution input method, which preserves the original aspect ratio of images and avoids the distortion caused by resizing to a fixed shape.

Pix2Struct scales images while maintaining the original aspect ratio, and extracts the maximum number of fixed-size patches that fit within a pre-defined sequence length. In addition, to enable models to learn the spatial distribution corresponding to the image’s aspect ratio, it introduces a learnable 2D positional encoding.

As shown in Figure 5, the left side demonstrates the process of Pix2Struct, where the image is divided into multiple patches while maintaining its original aspect ratio.

The main code of Pix2Struct is as follows:

# Source Code: https://github.com/huggingface/transformers/blob/main/src/transformers/models/pix2struct/image_processing_pix2struct.py#L260C1-L277C1
class Pix2StructImageProcessor(BaseImageProcessor):
    def extract_flattened_patches(self, image, max_patches, patch_size):
        ...
        ### 3. Get patch height, patch width, image height, and image width
        patch_height, patch_width = patch_size["height"], patch_size["width"]
        image_height, image_width = get_image_size(image, ChannelDimension.FIRST)

        ### 4. Calculate new resizable height and width based on the max_patches setting and the original image dimensions
        scale = math.sqrt(max_patches * (patch_height / image_height) * (patch_width / image_width))
        num_feasible_rows = max(min(math.floor(scale * image_height / patch_height), max_patches), 1)
        num_feasible_cols = max(min(math.floor(scale * image_width / patch_width), max_patches), 1)
        resized_height = max(num_feasible_rows * patch_height, 1)
        resized_width = max(num_feasible_cols * patch_width, 1)

        ### 5. Resize the image to the new height and width using bilinear interpolation
        image = torch.nn.functional.interpolate(image, size=(resized_height, resized_width), mode="bilinear", ...)

        ### 6. Split the image into patches, shape: [rows * columns, patch_height * patch_width * image_channels]
        patches = torch_extract_patches(image, patch_height, patch_width)
        patches = patches.reshape([rows * columns, depth])

        ### 7. If the patch sequence length is less than max_patches, pad the sequence to unify its length to max_patches
        # (rows, columns, depth and result are defined in lines elided from this excerpt)
        result = torch.nn.functional.pad(result, [0, 0, 0, max_patches - (rows * columns)])
        return result

    # Main entry function for image data processing
    def preprocess(self, ..., images: ImageInput) -> ImageInput:
        ...
        ### 1. Set two important parameters: max_patches (fixed patch sequence length processable by the model) and patch_size (patch dimension)
        max_patches = xxx
        patch_size = yyy

        ### 2. Call extract_flattened_patches (core method for image resizing) on each image
        images = [
            self.extract_flattened_patches(image=image,
                                           max_patches=max_patches, patch_size=patch_size, ...)
            for image in images
        ]
        return images

Let us take an example to understand the workflow of the above code.

Assume max_patches = 10 and patch_size = 32, with an input image of 100 × 200 resolution.

The workflow for code annotations 3–4 is:

step1: calculate the scale factor

step2: calculate the number of patches in rows and columns, respectively

step3: calculate the resized height and width as whole multiples of the patch size
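The arithmetic of these three steps can be checked with a short script that mirrors the formulas in code annotations 3 and 4. It is a sketch rather than the library code: `feasible_grid` is a hypothetical helper name, and reading 100 × 200 as height 100 and width 200 is an assumption.

```python
import math

def feasible_grid(image_height, image_width, patch_size, max_patches):
    """Mirror the scale / rows / cols / resized-size arithmetic of the excerpt above."""
    # step1: scale factor that makes the patch count approach max_patches
    scale = math.sqrt(max_patches * (patch_size / image_height) * (patch_size / image_width))
    # step2: number of whole patches along each axis
    rows = max(min(math.floor(scale * image_height / patch_size), max_patches), 1)
    cols = max(min(math.floor(scale * image_width / patch_size), max_patches), 1)
    # step3: resized height and width are whole multiples of the patch size
    return scale, rows, cols, rows * patch_size, cols * patch_size

# Assumption: the 100 x 200 image has height 100 and width 200.
scale, rows, cols, new_h, new_w = feasible_grid(100, 200, 32, 10)
print(round(scale, 4), rows, cols, new_h, new_w)   # 0.7155 2 4 64 128
print(rows * cols, 10 - rows * cols)               # 8 2
```

Under that assumption the image is resized to 64 × 128, which keeps the 1:2 aspect ratio, yields 2 × 4 = 8 patches, and leaves 2 padding slots to reach max_patches = 10.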

The process of code annotations 5–7 is demonstrated in Figure 6.

Through the operation in Figure 6, the processed token embeddings are combined with the learnable 2D positional encoding, and the result is fed into the ViT model.

In summary, the key optimization of Pix2Struct is changing the fixed input resolution to an adjustable one.

FlexiViT

FlexiViT proposes an adaptive approach to adjust the patch size. Simply put, a random patch size is selected in each training iteration. Since the sampled patch size directly affects both the patch shape and the sequence length, the patch embedding weights and the positional encodings need to be resized accordingly. This method enables FlexiViT to function as a ViT model with a dynamic patch size. The details are outlined in the following steps.

Patchification

FlexiViT defines a set of patch sizes and uniformly samples one patch size in each training iteration before patchifying the image. In the FlexiViT paper, the input image resolution is 240×240 and the patch size set is {48, 40, 30, 24, 20, 16, 15, 12, 10, 8}, where each patch size divides 240 evenly.
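To see how strongly the sampled patch size changes the sequence length, the following small sketch lists the number of patch tokens for each size in the set (class token excluded). The variable names are illustrative.

```python
image_size = 240
patch_sizes = [48, 40, 30, 24, 20, 16, 15, 12, 10, 8]

# Every patch size divides the image side, so no pixels are left over.
assert all(image_size % p == 0 for p in patch_sizes)

# Sequence length = (patches per side) squared.
seq_lens = {p: (image_size // p) ** 2 for p in patch_sizes}
for p, s in seq_lens.items():
    print(f"patch {p:>2} -> {image_size // p:>2} x {image_size // p:<2} grid = {s} tokens")
```

The sequence length ranges from 25 tokens (p = 48) to 900 tokens (p = 8), which is why the patch size is such an effective knob for trading accuracy against compute.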

Patch embedding

A single patch (with shape p × p × c) is mapped to a d-dimensional embedding using d trainable weight tensors w, each with the same shape as the patch. However, since p changes dynamically, how should these weights w be defined?

A straightforward approach is to assign a unique w to each p in the predefined set, but this approach has obvious shortcomings. On one hand, the weights for different patch sizes cannot share parameters, resulting in insufficient training; on the other hand, using multiple separate w results in an inelegant model design.

The solution of FlexiViT is as follows. First, a fixed-size weight tensor W (with shape d × p × p × c, where p is set to 32) is initialized. This W can also be regarded as d × c individual matrices w, each with shape p × p. Next, a projection matrix P transforms each w into \bar{w} with shape p* × p*, where p* is the sampled patch size. We thereby obtain d new weight tensors, each with shape p* × p* × c. Finally, the input patch of shape p* × p* × c is flattened into a vector of length (p*)^2 · c and multiplied by the resized weights to produce the d-dimensional patch embedding.

How do we obtain P? FlexiViT makes an assumption: if a patch x is transformed into \bar{x} without information loss, we want a P such that the inner product of the transformed \bar{w} and \bar{x} equals the inner product of the original w and x. The corresponding equation is as follows:

<x, w> = <\bar{x}, \bar{w}> = <Bx, Pw>

where \bar{w} = Pw and \bar{x} = Bx. Here, \bar{x} denotes the result of an information-lossless transformation, and \bar{x} has the same shape as \bar{w}.

The assumption is reasonable. Since the information of x is preserved, the final patch embedding should be identical regardless of how the shape of x is transformed.

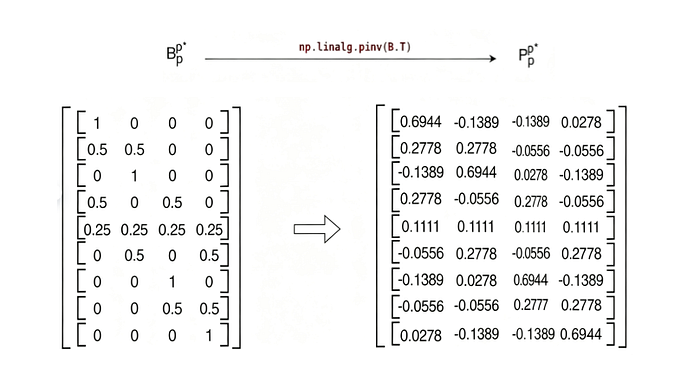

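The matrix B can be derived via bilinear interpolation. P can be defined as the pseudoinverse of B^{T}, i.e., P = (B^{T})^{+} = B(B^{T}B)^{-1} (this closed form holds when B has full column rank, as in upsampling). Substituting B and P into the aforementioned equation:

<Bx, Pw> = x^{T}B^{T}B(B^{T}B)^{-1}w = x^{T}w = <x, w>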

The calculation process of B is as follows:

# Source code address: https://github.com/google-research/big_vision/blob/main/big_vision/models/proj/flexi/vit.py#L60
import numpy as np
import tensorflow as tf

def resize(x_np, new_shape):
    # Add batch and channel axes, resize bilinearly, then strip the extra axes again
    x_tf = tf.constant(x_np)[None, ..., None]
    x_upsampled = tf.image.resize(
        x_tf, new_shape, method="bilinear")[0, ..., 0].numpy()
    return x_upsampled

### Function to obtain matrix B
def get_resize_mat(old_shape, new_shape):
    mat = []
    for i in range(np.prod(old_shape)):
        # i-th basis vector (one pixel set to 1), in the shape of the old patch
        basis_vec = np.zeros(old_shape)
        basis_vec[np.unravel_index(i, old_shape)] = 1.
        ### Bilinear interpolation
        mat.append(resize(basis_vec, new_shape).reshape(-1))
    return np.stack(mat).T

To clearly understand the above code, we take old_shape=2 and new_shape=3 as an example:
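As a hedged illustration, the sketch below builds B for a one-dimensional signal resized from length 2 to length 3, using the same basis-vector trick as get_resize_mat but with a small NumPy interpolation routine instead of tf.image.resize. The half-pixel-center convention with edge clamping in `linear_resize_1d` is an assumption, and the function names are hypothetical; results from tf.image.resize may differ if its convention differs.

```python
import numpy as np

def linear_resize_1d(v, new_len):
    """Linear interpolation with half-pixel centers and edge clamping (assumed convention)."""
    old_len = len(v)
    # Location of each output sample, expressed in input-index coordinates.
    src = (np.arange(new_len) + 0.5) * old_len / new_len - 0.5
    src = np.clip(src, 0, old_len - 1)
    return np.interp(src, np.arange(old_len), v)

def get_resize_mat_1d(old_len, new_len):
    # Row i of the stacked list is the resized i-th basis vector;
    # after the transpose it becomes column i of B.
    resized = [linear_resize_1d(np.eye(old_len)[i], new_len) for i in range(old_len)]
    return np.stack(resized).T

B = get_resize_mat_1d(2, 3)
print(B.shape)   # (3, 2)
print(B)
# [[1.  0. ]
#  [0.5 0.5]
#  [0.  1. ]]
```

Each row of B says how one output pixel is mixed from the input pixels. Since bilinear interpolation is separable, the matrix for a 2 × 2 patch resized to 3 × 3 under row-major flattening would be the Kronecker product np.kron(B, B), of shape 9 × 4.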

Once B is calculated, the pseudoinverse of B^{T} can be calculated as well.

# https://github.com/google-research/big_vision/blob/main/big_vision/models/proj/flexi/vit.py#L69C3-L69C49

resize_mat_pinv = np.linalg.pinv(resize_mat.T)

After obtaining P, we use it to transform W into \bar{W}. Finally, the inner product of \bar{W} and the patch gives the patch embedding for the randomly sampled patch size.

To summarize, the process of calculating the patch embedding in FlexiViT is:

(1). Initialize fixed-size weights W with shape d × p × p × c.

(2). For a randomly selected patch size p*, construct a matrix B that maps p × p -> p* × p* via bilinear interpolation.

(3). Calculate P, the pseudoinverse of B^{T}.

(4). Transform W into \bar{W} using P.

(5). Compute the d-dimensional embedding by taking the inner product of \bar{W} and each patch with shape c × p* × p*.

Now, we use a simple example to validate the relationship that <x,w>=<\bar{x},\bar{w}>.

Assume

The Bx and Pw are calculated as the following equations.

The <x,w> and <Bx, Pw> are derived:

It is clear from the above equation that <x,w> equals <Bx, Pw>.
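The same relationship can also be checked numerically. The sketch below uses an assumed 3 × 2 linear-interpolation matrix B (resizing a length-2 signal to length 3) and arbitrary illustrative values for x and w; none of these numbers come from the original example.

```python
import numpy as np

# Assumed 2 -> 3 linear-interpolation matrix (illustrative, not taken from the original figures).
B = np.array([[1.0, 0.0],
              [0.5, 0.5],
              [0.0, 1.0]])
P = np.linalg.pinv(B.T)        # P = (B^T)^+, shape (3, 2)

x = np.array([1.0, 3.0])       # illustrative patch values
w = np.array([2.0, -1.0])      # illustrative embedding weights

x_bar = B @ x                  # resized patch
w_bar = P @ w                  # resized weights

print(x_bar)                                  # [1. 2. 3.]
print(np.allclose(B.T @ P, np.eye(2)))        # True: B^T P = I
print(np.isclose(x @ w, x_bar @ w_bar))       # True: <x, w> = <Bx, Pw>
```

The key identity is B^T P = I, which holds here because B has full column rank; it is what makes the inner product invariant under the resize.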

Positional vector

FlexiViT initializes the positional encoding on a fixed grid (e.g., 7×7). This grid is then resized to match the sequence length determined by the randomly sampled patch size.

....
### Initialize pos_emb on the initial (7, 7) grid
pos_emb = vit.get_posemb(self, self.posemb, self.posemb_size, c, "pos_embedding", x.dtype)

### Perform linear interpolation on the initialized pos_emb to resize it to length h*w
if pos_emb.shape[1] != h * w:
    pos_emb = jnp.reshape(pos_emb, (1, *self.posemb_size, c))
    pos_emb = jax.image.resize(pos_emb, (1, h, w, c), "linear")
    pos_emb = jnp.reshape(pos_emb, (1, h * w, c))

In the FlexiViT experiments, the model delivers remarkable performance across various patch sizes. As shown in Figure 7, a smaller patch size yields better model performance but slower inference. Conversely, a larger patch size enables faster inference at the cost of relatively lower model performance.

NaViT

The approaches introduced above all require scaling images to a target size: standard ViT and FlexiViT only accept fixed-size images as input, while Pix2Struct can process images with different aspect ratios but still requires resizing.

Is there an approach that preserves the original image resolution without any resizing operations? NaViT is such an approach which preserves both the original image resolution and aspect ratio.

NaViT relies on packing techniques from the NLP field to process patches extracted at the original image resolution. Patches from images of varying resolutions are concatenated into a single sequence, enabling uniform processing of images with various resolutions. This method is called Patch n’ Pack.
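As a rough illustration of packing, the sketch below assigns images, represented only by their patch counts, to fixed-length sequences with a first-fit greedy rule. The greedy rule, the function name `greedy_pack`, and the example numbers are assumptions for illustration; the actual NaViT packing procedure may differ in its details.

```python
def greedy_pack(token_counts, max_len):
    """First-fit packing: put each image into the first sequence that still has room."""
    sequences = []   # list of lists of image indices
    free = []        # remaining capacity of each sequence
    for idx, n in enumerate(token_counts):
        if n > max_len:
            raise ValueError(f"image {idx} has {n} tokens, more than max_len={max_len}")
        for s in range(len(sequences)):
            if free[s] >= n:
                sequences[s].append(idx)
                free[s] -= n
                break
        else:
            # No existing sequence has room: open a new one.
            sequences.append([idx])
            free.append(max_len - n)
    return sequences, free

# Patch counts of five hypothetical images and a sequence length of 17 tokens.
sequences, free = greedy_pack([4, 6, 5, 9, 3], max_len=17)
print(sequences)   # [[0, 1, 2], [3, 4]]
print(free)        # [2, 5]  -> number of padding tokens per sequence
```

The leftover capacity of each sequence is filled with padding tokens so that all sequences in a batch share the same length.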

Model modifications

- Masked self attention and masked pooling

When multiple examples are packed into a sequence, each transformer layer performs self-attention over that sequence during the forward pass. An additional self-attention mask is introduced to eliminate cross-interaction between different examples. In addition, in CV tasks the whole image is aggregated into a feature representation for loss computation, so the model’s top layer pools the token representations of each individual example. This pooling therefore also requires a mask to separate computations across examples. As demonstrated in Figure 8, one sequence has three examples with token lengths of 4, 6 and 5, respectively. Finally, 2 padding tokens are appended to align sequence lengths within a batch.

In Figure 8, self-attention and pooled representations are calculated only over tokens belonging to the same example. The corresponding mask matrix is shown in the following figure.
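A minimal NumPy sketch of such masks, for the packed sequence described above (three examples of 4, 6 and 5 tokens plus 2 padding tokens), might look as follows. The variable names, the use of -1 as the padding id, and mean pooling are assumptions made for illustration.

```python
import numpy as np

lengths = [4, 6, 5]   # tokens per example, as in the description above
num_pad = 2           # padding tokens at the end of the sequence

# Example id of every token; padding tokens get the id -1.
example_ids = np.concatenate(
    [np.full(n, i) for i, n in enumerate(lengths)] + [np.full(num_pad, -1)]
)

# Attention mask: token q may attend to token k only if both are real tokens of the same example.
is_real = example_ids >= 0
attn_mask = (example_ids[:, None] == example_ids[None, :]) & is_real[:, None] & is_real[None, :]

# Pooling mask: row e selects the tokens of example e.
pool_mask = example_ids[None, :] == np.arange(len(lengths))[:, None]   # (3, 17)

# Masked mean pooling over stand-in token features of dimension 8.
rng = np.random.default_rng(0)
tokens = rng.standard_normal((len(example_ids), 8))
pooled = (pool_mask.astype(float) @ tokens) / np.array(lengths)[:, None]

print(attn_mask.shape, int(attn_mask.sum()))   # (17, 17) 77
print(pooled.shape)                            # (3, 8)
```

The attention mask is block-diagonal, with 4² + 6² + 5² = 77 allowed pairs, and the rows and columns of the padding tokens are entirely masked out.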

- Token drop

Images carry low information density, and spatially adjacent pixels and patches often contain similar information, so a moderate share of patches can be dropped via sampling during training to improve training throughput and, in some cases, performance. Traditional ViT models use one fixed drop rate for all images. Because NaViT packs multiple images into one sequence, the drop rate can instead vary per image, which provides flexible sampling for images of varying resolutions. In particular, for large images, the drop rate can be increased to compress the sequence length.

Meanwhile, to control the total sequence length, the last image in a pack is specially processed (i.e., sampling strategies are set built on the remaining token positions) to concatenate multiple images into a fixed-length sequence. Different preprocessing steps of various images are shown in the bottom part of Figure 10.

Decomposed positional encoding

To process images with arbitrary resolutions and aspect ratios, NaViT introduces decomposed positional encodings, which are added to patch tokens to represent their spatial information. Positional embeddings for each dimension are initialized as max_len d-dimensional vectors. The positional vectors of a patch are looked up from its absolute row and column indices, and the two vectors (one for each dimension) are added to the patch token vector. Compared with Pix2Struct, which learns positional embeddings over a [max_len, max_len] grid, decomposed positional encoding learns weights for each dimension separately. This method offers two advantages: (1). The number of positional encoding parameters is significantly reduced. (2). It generalizes better to unseen image resolutions. For example, even if the model has not been trained on images of resolution (a, b), it may still have been trained on images with height a or width b.
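The idea can be sketched with two small embedding tables, one indexed by row and one by column. In the sketch below the random tables stand in for learned parameters, and max_len = 64 and d = 8 are arbitrary illustrative sizes; `factorized_pos` is a hypothetical helper name.

```python
import numpy as np

rng = np.random.default_rng(0)
max_len, d = 64, 8                                   # illustrative sizes (assumed)
row_emb = rng.standard_normal((max_len, d)) * 0.02   # stand-in for learned row embeddings
col_emb = rng.standard_normal((max_len, d)) * 0.02   # stand-in for learned column embeddings

def factorized_pos(rows, cols):
    """Positional vectors for a rows x cols patch grid, in row-major order."""
    r = np.repeat(np.arange(rows), cols)   # row index of every patch
    c = np.tile(np.arange(cols), rows)     # column index of every patch
    return row_emb[r] + col_emb[c]         # (rows * cols, d)

pos = factorized_pos(3, 5)                 # a 3 x 5 grid, e.g. a wide image
factorized_params = 2 * max_len * d        # two tables of max_len vectors
joint_params = max_len * max_len * d       # one vector per (row, column) pair

print(pos.shape)                           # (15, 8)
print(factorized_params, joint_params)     # 1024 32768
```

With these sizes the decomposed tables need 1,024 parameters instead of 32,768, and any grid up to 64 × 64 can be encoded, including shapes never seen during training.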

Alignment of Multiple Examples Within a Batch

Packing organizes sequences into new batches, where each sequence in a batch contains a varying number of examples. In CV tasks, the final loss computation requires pooling for each example, so each sequence in a batch yields a different number of pooled representations. This variability hinders efficient parallel training. To address this issue, NaViT takes the following approach:

For a batch of size B, at most B × E_{max} pooled representations are extracted in total (i.e., at most E_{max} per sequence). If a sequence has more than E_{max} pooled representations, the excess is discarded. If it has fewer, fake representations are used to fill the remaining slots.
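A minimal sketch of this alignment step is shown below. Using zero vectors as the fake representations and returning a validity mask are assumptions for illustration, as is the helper name `fix_num_pooled`.

```python
import numpy as np

def fix_num_pooled(pooled, e_max):
    """Truncate or zero-pad (k, d) pooled representations to exactly (e_max, d)."""
    k, d = pooled.shape
    out = np.zeros((e_max, d), dtype=pooled.dtype)   # zeros act as fake representations (assumed)
    keep = min(k, e_max)
    out[:keep] = pooled[:keep]                       # anything beyond e_max is discarded
    valid = np.arange(e_max) < keep                  # marks real (non-fake) slots
    return out, valid

rng = np.random.default_rng(0)
d, e_max = 4, 3
examples_per_sequence = [2, 3, 5]                    # three packed sequences in one batch
fixed = [fix_num_pooled(rng.standard_normal((k, d)), e_max) for k in examples_per_sequence]

batch = np.stack([f[0] for f in fixed])              # (B, E_max, d)
valid = np.stack([f[1] for f in fixed])              # (B, E_max)
print(batch.shape, valid.sum(axis=1))                # (3, 3, 4) [2 3 3]
```

The validity mask lets the loss ignore the fake slots, so every sequence contributes a tensor of the same shape.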

Performance and efficiency

NaViT significantly improves training efficiency by leveraging packing. Compared with processing one image per sequence, this allows the model to process more samples. As shown in Figure 10, NaViT requires only 1/4 of the computation cost of traditional ViT models. In addition, given the same computation resources, NaViT can achieve better performance than ViT.

Moreover, the sequence length of NaViT after packing is longer than that of traditional ViT, so in theory its attention mechanism needs more computation. However, the NaViT experiments show that as the model’s hidden size increases, self-attention accounts for a decreasing share of the total computational cost. As shown in Figure 11, the longer sequences caused by packing therefore add little overhead for larger models.
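A back-of-the-envelope FLOP count gives some intuition for this trend. The sketch below is a rough estimate for one transformer layer, counting only matrix multiplications and assuming an MLP expansion ratio of 4; it is not a measurement from the NaViT paper, and `attention_share` is a hypothetical helper.

```python
def attention_share(n, d, mlp_ratio=4):
    """Rough share of per-layer matmul FLOPs spent on the n x n attention maps."""
    # QK^T and the attention-weighted sum over V: 2 * n * n * d FLOPs each.
    attn = 4 * n * n * d
    # Q, K, V and output projections (4 matmuls of d x d) plus two MLP matmuls (d x mlp_ratio*d).
    dense = 2 * n * d * d * (4 + 2 * mlp_ratio)
    return attn / (attn + dense)

n = 1024   # packed sequence length (illustrative)
for d in (768, 1536, 4096):
    print(d, round(attention_share(n, d), 3))
# 768 0.182
# 1536 0.1
# 4096 0.04
```

With mlp_ratio = 4 the share simplifies to n / (n + 6d), so for a fixed sequence length it shrinks as the hidden size d grows.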

Conclusion

ViT is widely used in the CV and multimodal domains due to its simplicity, flexibility and scalability, and has become an alternative to CNNs. The standard ViT first divides an image into multiple patches that are mapped to token embeddings. Generally, the input image is resized to a fixed resolution and then segmented into a fixed number of patches. Pix2Struct introduces a method that retains the original image aspect ratio. FlexiViT supports multiple patch sizes for a single image, allowing smooth adjustment of the sequence length and computational cost. NaViT packs images of varying sizes into a single sequence via Patch n’ Pack, enabling images to maintain their original resolution and aspect ratio while avoiding increased computational cost. Notably, Qwen2-VL also adopts a NaViT-style approach to process its input images.

References

- [1] FlexiViT: One Model for All Patch Sizes

- [2] Pix2Struct: Screenshot Parsing as Pretraining for Visual Language Understanding

- [3] Patch n’ Pack: NaViT, a Vision Transformer for any Aspect Ratio and Resolution

Note: Content contains the views of the contributing authors and not Towards AI.