Mastering AI Agents: Components, Frameworks, and RAG

Last Updated on May 16, 2025 by Editorial Team

Author(s): Sunil Rao

Originally published on Towards AI.

Agents are advanced AI systems that use LLMs as their core “brain” to interpret language, reason through problems, plan solutions, and autonomously execute tasks — often by interacting with external tools or APIs.

Unlike basic LLMs that simply generate text in response to prompts, LLM agents are designed to break down complex tasks into subtasks, remember past interactions, and adapt their responses or actions based on context and goals.

Key Components of LLM Agents in Detail

LLM agents are sophisticated AI systems structured around several core components that enable them to perform complex, context-aware tasks. Here’s a detailed breakdown of these components:

1. Agent/Brain

The LLM serves as the core reasoning engine of the agent. It understands user instructions, plans the sequence of actions, decides which tools to use, and generates the final response. The capabilities of the chosen LLM (e.g., reasoning, instruction following, code generation) directly impact the agent’s overall performance.

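from langchain.llms import OpenAI

# Initialize an OpenAI model (requires an API key, e.g. in OPENAI_API_KEY).
# The completion-style OpenAI wrapper is paired with a completion model here;
# chat models such as "gpt-3.5-turbo" are normally used through ChatOpenAI.
llm = OpenAI(temperature=0, model_name="gpt-3.5-turbo-instruct")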

Popular choices include OpenAI models, Anthropic’s Claude, Google’s Gemini and open-source models accessible via Hugging Face.

2. Memory

For agents that need to maintain context over multiple interactions or remember past observations, a memory module is essential. It lets the agent track past actions, recall previous interactions, and build on earlier steps in a task, which makes conversations more natural and coherent. Memory is generally divided into:

- Short-term Memory: Functions as a temporary workspace, holding recent conversation history or task context. It allows the agent to respond coherently within an ongoing session but is cleared after the session ends.

- Long-term Memory: Stores insights and information from past interactions, sometimes over weeks or months. This memory allows the agent to recognize patterns, learn user preferences, and improve future performance.

Advanced memory systems may dynamically organize and interlink memories, supporting adaptive, context-aware behavior across diverse tasks.

from langchain.memory import ConversationBufferMemory
from langchain.chains import ConversationChain

# Initialize memory
memory = ConversationBufferMemory(memory_key="chat_history")

Types of Memory Structure:

The way an agent’s memory is organized significantly impacts how efficiently and effectively it can store and retrieve information. Two common structural approaches are:

- Unified Memory:

In a unified memory architecture, all types of information (conversational history, factual knowledge, learned experiences, task-specific data) are stored within a single, integrated memory system. This often involves a common format or a way to link different types of information within the same structure.

Analogy: A human brain where different types of memories (episodic, semantic, procedural) are interconnected and can influence each other.

Potential Implementation: A large knowledge graph where nodes represent entities and concepts, and edges represent relationships, regardless of whether the information came from a conversation, a document, or a sensor reading.

Another approach could be a single vector database storing embeddings of all types of information.

- Hybrid Memory:

A hybrid memory architecture utilizes multiple, distinct memory components, each optimized for storing and retrieving specific types of information. These components can then be accessed and combined as needed.

Analogy: A computer system with different types of memory (RAM for active processes, hard drive for long-term storage, cache for frequently accessed data).

Potential Implementation: An agent might have a short-term conversational buffer (list of recent turns), a long-term knowledge graph for factual information, and a separate vector database for storing embeddings of documents or past experiences.
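To make the hybrid idea concrete, here is a minimal sketch in plain Python. The class name `HybridMemory`, its methods, and the sample data are hypothetical and only for illustration; a real agent would likely back the long-term part with a database or vector store.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class HybridMemory:
    """Hypothetical hybrid memory: one store per kind of information."""
    max_turns: int = 3
    facts: dict = field(default_factory=dict)  # long-term key-value facts

    def __post_init__(self):
        # Short-term buffer: only the most recent turns are kept.
        self.recent_turns = deque(maxlen=self.max_turns)

    def add_turn(self, speaker: str, text: str) -> None:
        self.recent_turns.append((speaker, text))

    def remember_fact(self, key: str, value: str) -> None:
        self.facts[key] = value

    def build_context(self) -> str:
        """Combine both stores into one text block for a prompt."""
        fact_lines = [f"{k}: {v}" for k, v in self.facts.items()]
        turn_lines = [f"{s}: {t}" for s, t in self.recent_turns]
        return "\n".join(["Known facts:"] + fact_lines + ["Recent turns:"] + turn_lines)


memory = HybridMemory(max_turns=2)
memory.remember_fact("favorite_airline", "Lufthansa")
memory.add_turn("user", "Hi")
memory.add_turn("agent", "Hello!")
memory.add_turn("user", "Book me a flight to London")
print(memory.build_context())
```

The oldest turn falls out of the short-term buffer once the limit is reached, while the stored fact persists, which is the separation the hybrid design is after.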

Types of Memory Format:

The format in which information is stored in the agent’s memory determines how it can be accessed and processed. Common formats include:

- Languages (Textual): Storing information as raw text or structured text (e.g., JSON, XML). This is natural for LLM-based agents as they primarily process language. Ex: Storing the history of a conversation as a list of turns, saving key facts extracted from documents as sentences, maintaining task-specific instructions in a textual format.

- Embeddings (Vector Representations): Converting information (text, images, audio, etc.) into dense numerical vectors that capture their semantic meaning. These embeddings can then be stored in vector databases for efficient similarity search. Ex: Storing embeddings of past user queries, document chunks, image features, or even the agent’s internal states.

- Databases (Structured Data): Storing information in a structured format within relational databases (SQL) or NoSQL databases. This is suitable for organizing and querying large amounts of structured data.

Ex: Storing user profiles, product catalogs, event logs, or the results of tool calls in a structured way.

- Lists (Sequential Data): Storing information in a sequential order, often used for maintaining the history of events or interactions. Ex: A list of messages in a conversation, a sequence of actions taken by the agent, a timeline of events.

Types of Memory Operation:

To be useful, an agent must be able to perform operations on its memory:

- Memory Reading (Retrieval):

Accessing and retrieving relevant information from the memory based on the current context, user query, or the agent’s internal needs.

Methods:

- Keyword Search: Finding information based on specific keywords.

- Semantic Search (using embeddings): Retrieving information that is semantically similar to a query embedding.

- Structured Queries (for databases): Using query languages like SQL to retrieve specific data.

- Sequential Access (for lists): Iterating through the list to find relevant items.

Ex: An agent receiving the query “What did I say about the weather yesterday?” needs to read its conversational history to find the relevant turn.
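The difference between keyword and semantic retrieval can be sketched as follows. This is a toy illustration: the `embed` function is a bag-of-words counter standing in for a real embedding model, and the memory entries are invented.

```python
import math
from collections import Counter

memory = [
    "User said the weather in Paris was rainy yesterday",
    "User prefers window seats on flights",
    "Agent booked a table for two at 7pm",
]


def keyword_search(keyword, items):
    """Return items that contain the keyword (case-insensitive)."""
    return [m for m in items if keyword.lower() in m.lower()]


def embed(text):
    """Toy 'embedding': word counts. An assumption, not a real model."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_search(query, items, k=1):
    """Rank items by similarity to the query and return the top k."""
    q = embed(query)
    return sorted(items, key=lambda m: cosine(q, embed(m)), reverse=True)[:k]


print(keyword_search("weather", memory))
print(semantic_search("what did the user say about the weather yesterday", memory))
```

With a real embedding model, the semantic variant would also match paraphrases that share no words with the stored text, which the word-count stand-in cannot do.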

- Memory Writing (Storage/Updating):

Storing new information into the memory or updating existing information based on new observations, actions, or learning.

Methods:

- Appending: Adding new items to a list or database.

- Key-Value Storage: Storing information with a specific key for later retrieval.

- Graph Updates: Adding new nodes and edges to a knowledge graph.

- Vector Insertion: Adding new embeddings to a vector database.

Ex: After the user tells the agent their favorite color, the agent needs to write this information to its long-term memory (e.g., a user profile in a database).
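The four write methods can be sketched with plain Python containers. The store names and helper functions below are hypothetical stand-ins for a real database, graph store, or vector database.

```python
conversation_log = []   # appending
user_profile = {}       # key-value storage
knowledge_graph = {}    # node -> list of (relation, node)
vector_store = []       # list of (vector, text) pairs


def write_turn(speaker, text):
    conversation_log.append({"speaker": speaker, "text": text})


def write_fact(key, value):
    user_profile[key] = value  # overwrites any earlier value for this key


def write_edge(subject, relation, obj):
    knowledge_graph.setdefault(subject, []).append((relation, obj))
    knowledge_graph.setdefault(obj, [])  # make sure the target node exists


def write_vector(vector, text):
    vector_store.append((tuple(vector), text))


write_turn("user", "My favorite color is blue")
write_fact("favorite_color", "blue")
write_edge("user", "likes_color", "blue")
write_vector([0.1, 0.9], "My favorite color is blue")
print(user_profile, knowledge_graph)
```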

- Memory Reflection (Processing and Learning):

Analyzing and processing the information stored in memory to extract insights, identify patterns, learn new relationships, or refine the agent’s knowledge and strategies.

Methods:

- Summarization: Condensing large amounts of information in memory.

- Pattern Recognition: Identifying recurring sequences or correlations in past experiences.

- Knowledge Extraction: Deriving new facts or relationships from existing data.

- Reinforcement Learning Updates: Adjusting the agent’s policies based on rewards and past actions stored in memory (e.g., experience replay).

- Self-Correction: Reviewing past reasoning steps and identifying errors.

Ex: An agent that failed to complete a task might reflect on its memory of the steps it took and the outcomes to identify where it went wrong and improve its planning for similar tasks in the future.
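A very reduced form of self-correction might look like the sketch below. The episode log and the `reflect` helper are invented for illustration; in practice the reflection step would usually be an LLM call over the stored trajectory rather than a rule.

```python
episode = [
    {"step": "search_flights", "ok": True, "note": "found 3 flights"},
    {"step": "select_flight", "ok": True, "note": "picked cheapest"},
    {"step": "enter_passenger_details", "ok": False, "note": "passport number missing"},
    {"step": "confirm_booking", "ok": False, "note": "skipped"},
]


def reflect(steps):
    """Return a short lesson describing the first failed step, if any."""
    for record in steps:
        if not record["ok"]:
            return f"Step '{record['step']}' failed: {record['note']}. Check this before retrying."
    return "No failures found."


lessons = []  # hypothetical long-term store for reflections
lessons.append(reflect(episode))
print(lessons[0])
```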

3. Planning

The planning component is the core of an LLM agent’s ability to solve complex tasks. It determines the sequence of actions the agent needs to take to achieve a given goal. It involves:

- Prompting Strategy: Crafting prompts that guide the LLM to analyze the user’s request, decide on the next action (use a tool or provide a final answer), and format its output.

- Agent Type: Different agent types in Langchain (e.g., zero-shot-react-description, conversational-react-description, chat-zero-shot-react-description) employ different prompting strategies and workflows to manage the interaction between the LLM and the tools.

- Looping and Iteration: The agent might need to go through multiple cycles of planning, tool use, and observation to reach the final solution.

from langchain.agents import initialize_agent, AgentType

# Initialize a zero-shot ReAct agent.
# `llm` is the model from the Agent/Brain section; `available_tools` is built in the Tools section.
agent = initialize_agent(
    llm=llm,
    tools=available_tools,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,  # See the agent's thought process
)

# Run the agent with a user query
response = agent.run("What is the capital of France and what is 5 multiplied by 7?")
print(response)

There are two main categories of planning techniques:

1. Planning Without Feedback:

In this approach, the agent formulates a complete plan of action upfront, before executing any steps. It relies solely on its initial understanding of the task and its knowledge of the available tools.

a) Single-Path Planning:

The agent generates a linear sequence of actions that it believes will lead to the goal. It follows this plan step-by-step without deviating unless an error occurs that prevents further execution.

Popular Methods:

- Chain of Thought (CoT) Prompting (Implicit Planning): While not strictly a separate planning module, CoT encourages the LLM to explicitly reason through the problem step-by-step in its output. This implicitly creates a plan that the agent (or the user interpreting the output) can follow. Ex: Langchain – Implicit Planning

from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

llm = OpenAI(temperature=0)

prompt = PromptTemplate.from_template(
    """Solve this problem by explicitly reasoning step by step:

{problem}

Let's think step by step:"""
)

chain = LLMChain(llm=llm, prompt=prompt)
response = chain.run(problem="If I have 10 apples and I give 3 to John and then buy 5 more, how many apples do I have?")
print(response)

The LLM’s output will ideally be a step-by-step thought process leading to the final answer, forming a single path plan.

- Fixed Action Sequences: For well-defined tasks, the agent might follow a pre-determined sequence of tool calls. This is less flexible but can be efficient for routine operations.

Ex: An agent designed to create a blog post might always follow the sequence: Research -> Outline Generation -> Draft Writing -> Review -> Publish. The planning is hardcoded.
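A hardcoded plan of this kind can be sketched as a list of functions run in order. The step functions below are placeholders invented for illustration; in a real agent each would call an LLM or a tool.

```python
def research(state):
    state["notes"] = f"notes about {state['topic']}"
    return state


def generate_outline(state):
    state["outline"] = ["intro", "body", "conclusion"]
    return state


def write_draft(state):
    state["draft"] = " / ".join(state["outline"])
    return state


def review(state):
    state["approved"] = bool(state["draft"])
    return state


def publish(state):
    state["published"] = state["approved"]
    return state


# The plan is fixed up front: the order never changes at run time.
PIPELINE = [research, generate_outline, write_draft, review, publish]


def run_fixed_plan(topic):
    state = {"topic": topic, "trace": []}
    for step in PIPELINE:
        state = step(state)
        state["trace"].append(step.__name__)
    return state


result = run_fixed_plan("AI agents")
print(result["trace"])
```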

b) Multi-Path Planning:

The agent might generate multiple potential plans or explore different branches of actions simultaneously or sequentially. This allows for more robust problem-solving by considering alternatives.

Popular Methods:

- Tree of Thoughts (ToT): This method extends CoT by allowing the LLM to explore multiple reasoning paths at each step. The agent can maintain a tree of potential thoughts and evaluate them based on certain criteria (e.g., coherence, relevance to the goal). It can backtrack and explore alternative paths if a current path seems unproductive.

Ex: Imagine an agent trying to solve a complex puzzle. At each step, instead of just one thought, it generates a few possible next moves. It evaluates each move (perhaps using a scoring function based on how close it gets to the solution). The agent might pursue the most promising moves further, creating branches in its “thought tree.” If a branch leads to a dead end, it can backtrack and explore other branches.

- Graph-Based Planning: The agent can represent the problem space and potential actions as a graph. Nodes represent states, and edges represent actions or transitions. The agent can then search this graph for a path from the initial state to the goal state using algorithms like A* search, guided by heuristics derived from the LLM’s understanding.

Ex: An agent planning a trip might represent different locations as nodes and possible travel methods (driving, flying, train) as edges with associated costs and times. The LLM could provide heuristic estimates of the “distance” to the final destination, guiding the graph search algorithm.
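The trip-planning idea can be sketched with a small A* search. The graph, the travel times, and the heuristic table are made up for illustration; the heuristic values stand in for estimates an LLM might supply, and A* only guarantees the cheapest path when those estimates never overestimate the remaining cost.

```python
import heapq

# Hypothetical travel graph: node -> list of (neighbor, cost_in_hours)
GRAPH = {
    "Home": [("Airport", 1), ("Station", 0.5)],
    "Airport": [("London", 2)],
    "Station": [("Paris", 3)],
    "Paris": [("London", 2.5)],
    "London": [],
}

# Stand-in for LLM-provided estimates of the remaining hours to London.
HEURISTIC = {"Home": 2.5, "Airport": 2, "Station": 4, "Paris": 2, "London": 0}


def a_star(start, goal):
    # Each frontier entry: (estimated total cost, cost so far, node, path)
    frontier = [(HEURISTIC[start], 0, start, [start])]
    best_cost = {start: 0}
    while frontier:
        _, cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path, cost
        for neighbor, step_cost in GRAPH[node]:
            new_cost = cost + step_cost
            if new_cost < best_cost.get(neighbor, float("inf")):
                best_cost[neighbor] = new_cost
                estimate = new_cost + HEURISTIC[neighbor]
                heapq.heappush(frontier, (estimate, new_cost, neighbor, path + [neighbor]))
    return None, float("inf")


path, hours = a_star("Home", "London")
print(path, hours)
```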

2. Planning With Feedback:

This approach involves the agent executing actions and then using feedback from the environment, humans, or its own internal models to refine its plan dynamically. This allows for adaptation to unforeseen circumstances and improved problem-solving over time.

a) Environmental Feedback: The agent observes the state of the environment after executing an action. This feedback informs its subsequent planning steps.

Popular Methods:

- ReAct (Reason + Act): This framework explicitly structures the agent’s interaction as a cycle of reasoning about the current situation, deciding on an action (which can be using a tool or providing a final answer), and observing the result of that action. The observation from the environment (the tool’s output) is then used to inform the next reasoning step. Ex: Langchain — ReAct Agent:

from langchain.agents import initialize_agent, AgentType
from langchain.llms import OpenAI
from langchain.tools import DuckDuckGoSearchRun

llm = OpenAI(temperature=0)

search_tool = DuckDuckGoSearchRun()
tools = [search_tool]

agent = initialize_agent(
    llm=llm,
    tools=tools,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
)

response = agent.run("What is the current temperature in San Jose, California?")
print(response)

The agent will “think” about needing the current temperature, “act” by using the search tool, “observe” the search results, and then “reason” about the answer to provide the final response.
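To show what the framework does under the hood, here is a framework-free sketch of the same think, act, observe cycle. The `fake_llm` function is a scripted stand-in for a real model call and the `weather` tool returns a canned value, so this is an illustration of the control flow rather than Langchain's actual implementation.

```python
import re


def fake_llm(prompt):
    """Scripted stand-in for a real LLM call (assumption for the demo)."""
    if "Observation:" not in prompt:
        return "Thought: I need the temperature.\nAction: weather\nAction Input: San Jose"
    return "Thought: I have what I need.\nFinal Answer: It is 21 degrees C in San Jose."


# Hypothetical tool registry with a canned result, no network access.
TOOLS = {"weather": lambda city: f"21 degrees C in {city}"}


def react_loop(question, max_steps=3):
    prompt = f"Question: {question}\n"
    for _ in range(max_steps):
        output = fake_llm(prompt)
        final = re.search(r"Final Answer:\s*(.*)", output)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(.*)\nAction Input:\s*(.*)", output)
        if action is None:
            return "Could not parse the model output."
        tool_name, tool_input = action.group(1).strip(), action.group(2).strip()
        observation = TOOLS[tool_name](tool_input)
        # Feed the observation back so the next reasoning step can use it.
        prompt += f"{output}\nObservation: {observation}\n"
    return "Stopped: step limit reached."


answer = react_loop("What is the current temperature in San Jose, California?")
print(answer)
```

The step limit matters in practice: without it, a model that never produces a final answer would loop indefinitely.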

b) Human Feedback: Humans can provide explicit feedback on the agent’s plan or the results of its actions. The agent can use this feedback to correct its course or learn better strategies for the future.

Popular Methods:

- Interactive Agents: Systems where the agent presents its plan or intermediate results to the user for approval or modification. The user’s input directly guides the agent’s next steps.

- Ex: An agent designing a marketing campaign might present a draft email to a human marketer for review. The marketer can provide feedback like “Make the tone more persuasive” or “Add a call to action at the end.” The agent then revises its plan based on this human input.

- Reinforcement Learning from Human Feedback (RLHF) for Agent Policies: While more focused on training the underlying LLM, RLHF principles can be applied to fine-tune the agent’s planning policy based on human preferences for how tasks should be solved.

c) Model Feedback (Self-Reflection): The agent uses its own internal models (often the LLM itself or auxiliary models) to evaluate its plan, the results of its actions, or its reasoning process. This self-reflection can help identify errors or areas for improvement.

Popular Methods:

- Self-Critique and Improvement: The LLM can be prompted to critique its own generated plan or answer. Based on this self-critique, it can then revise its approach or refine its output.

Ex: An agent generating a summary of a research paper might be prompted to first generate the summary and then critique it: “Is this summary comprehensive? Does it accurately capture the main findings? Is the language clear and concise?” Based on its self-critique, it can then generate an improved version of the summary.

- Using Auxiliary Models for Evaluation: A separate, potentially smaller or specialized model can be used to evaluate the agent’s intermediate steps or final output. For example, a factual correctness model could verify claims made by the agent.
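The generate, critique, revise cycle can be sketched as a small loop. Both `generate` and `critique` below are stubs invented for illustration; in a real agent they would be LLM calls (or, for the critic, an auxiliary evaluation model).

```python
def generate(task, feedback=None):
    """Stub generator: a real agent would call an LLM here."""
    draft = f"Summary of {task}."
    if feedback:
        draft += " Main finding: accuracy improved."
    return draft


def critique(draft):
    """Stub critic: returns a list of problems (empty means acceptable)."""
    return [] if "Main finding" in draft else ["Missing the main finding."]


def refine(task, max_rounds=3):
    draft = generate(task)
    for _ in range(max_rounds):
        problems = critique(draft)
        if not problems:
            break  # the critic is satisfied, stop revising
        draft = generate(task, feedback=problems)
    return draft


print(refine("the paper"))
```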

4. Tools

Tools are external functions or systems that the agent can invoke to interact with the environment or perform specific tasks that the LLM itself cannot handle directly. They extend the agent’s capabilities beyond pure language processing. Ex:

- Search Engine: To retrieve up-to-date information from the web.

- Calculator: To perform mathematical calculations.

- APIs: To interact with external services (e.g., sending emails, checking weather).

- Code Interpreter: To execute Python code.

- Database Connectors: To query and retrieve data from databases.

from langchain.agents import load_tools
from langchain.tools import Tool
import requests

# Load the built-in DuckDuckGo search tool (load_tools returns a list of tools)
search_tool = load_tools(["ddg-search"], llm=llm)

# Define a custom tool to get the current weather using an API
def get_weather(city: str) -> str:
    """Useful for getting the current weather in a given city."""
    base_url = "http://api.openweathermap.org/data/2.5/weather"
    # Replace with your actual API key
    params = {"q": city, "appid": "YOUR_OPENWEATHERMAP_API_KEY", "units": "metric"}
    response = requests.get(base_url, params=params, timeout=10)
    if response.status_code == 200:
        # Parse the body only after a successful response
        data = response.json()
        description = data["weather"][0]["description"]
        temperature = data["main"]["temp"]
        return f"The current weather in {city} is {description} with a temperature of {temperature}°C."
    else:
        return f"Could not retrieve weather information for {city}."

weather_tool = Tool(
    name="Get Weather",
    func=get_weather,
    description="Useful for getting the current weather in a given city.",
)

available_tools = search_tool + [weather_tool]

5. Output Parser

The LLM’s output when using tools is often in a specific format that indicates the tool to use and its parameters. The output parser is responsible for taking this unstructured LLM output and extracting the structured information needed to execute the tool. It also handles the LLM’s final answer.

Ex (Langchain Output Parser — Implicit): Langchain’s agent implementations have built-in output parsers that are specific to the AgentType being used. For example, the ZERO_SHOT_REACT_DESCRIPTION agent expects the LLM to output in a specific "Thought… Action… Observation…" format, and its internal parser extracts the tool name and parameters from the "Action" part.

For more complex scenarios or custom agent logic, you might need to define custom output parsers using Langchain’s BaseOutputParser class. This involves implementing logic to reliably extract the intended information from the LLM's text output.
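As a rough idea of what such parsing logic involves, here is a standalone sketch that uses regular expressions on ReAct-style text. It is not Langchain's built-in parser and does not subclass BaseOutputParser; the `ParsedAction` and `ParsedFinish` types are hypothetical names chosen for this example.

```python
import re
from dataclasses import dataclass


@dataclass
class ParsedAction:
    tool: str
    tool_input: str


@dataclass
class ParsedFinish:
    answer: str


def parse_react_output(text):
    """Turn raw LLM text into either a tool call or a final answer."""
    final = re.search(r"Final Answer:\s*(.+)", text, re.DOTALL)
    if final:
        return ParsedFinish(answer=final.group(1).strip())
    match = re.search(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)", text)
    if match:
        return ParsedAction(
            tool=match.group(1).strip(),
            tool_input=match.group(2).strip().strip('"'),
        )
    raise ValueError(f"Could not parse LLM output: {text!r}")


print(parse_react_output('Thought: need weather\nAction: Get Weather\nAction Input: "Paris"'))
print(parse_react_output("Thought: done\nFinal Answer: Paris is the capital."))
```

Raising on unparseable text, rather than guessing, lets the surrounding loop decide whether to retry the model or stop.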

6. Action Execution

This component takes the structured output from the Planning Module (via the Output Parser) and executes the intended action. This involves calling the selected tool with the specified parameters and observing the result.

- Example (Langchain, implicit): The agent.run() method handles the execution of the chosen tool. When the LLM decides to use the "Get Weather" tool, the Langchain agent framework extracts the city name from the LLM's output and calls the get_weather function. The result of this function call is then fed back to the LLM as an "Observation".
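Outside a framework, the execution step amounts to looking up the chosen tool and turning its result (or its failure) into an observation string. The sketch below assumes a simple dictionary registry; the tool functions are offline stubs and the names are illustrative only.

```python
def get_weather(city):
    return f"Sunny in {city}"  # stub, no network call


def add(expression):
    a, b = expression.split("+")
    return str(float(a) + float(b))


TOOL_REGISTRY = {"Get Weather": get_weather, "Add": add}


def execute_action(tool_name, tool_input):
    """Run the named tool and return an observation string for the LLM."""
    tool = TOOL_REGISTRY.get(tool_name)
    if tool is None:
        return f"Error: unknown tool '{tool_name}'. Available: {sorted(TOOL_REGISTRY)}"
    try:
        return str(tool(tool_input))
    except Exception as exc:
        # Report the failure as an observation instead of crashing the loop.
        return f"Error while running '{tool_name}': {exc}"


print(execute_action("Get Weather", "Paris"))
print(execute_action("Add", "2+3"))
print(execute_action("Translate", "hello"))
```

Returning errors as observations gives the LLM a chance to correct its next action, for example by choosing a different tool.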

The “Action” step is where the agent interacts with its environment (real or digital) to achieve its goals. It’s the execution of the plans formulated by the Planning component, leveraging the Memory and the agent’s Profile (which includes its capabilities and constraints).

a. Action Target: Why the Agent Acts

The target of an action defines the primary objective the agent aims to achieve by performing that specific action. These targets can be broadly categorized as:

- Task Completion: Actions taken directly to fulfill the user’s request or the agent’s defined goal. This is the most direct and common target.

Ex: If the user asks to “book a flight to London,” the agent’s actions (searching for flights, selecting one, confirming booking) are targeted towards completing this task.

The agent uses tools (like an airline booking API) to perform these actions, following the plan it generated.

- Communication: Actions aimed at exchanging information with the user, other agents, or the environment itself. This is crucial for clarifying requirements, providing updates, seeking feedback, or coordinating efforts.

Ex: The agent might ask the user “What date would you like to travel?” to gather necessary information for task completion. It might also inform the user “Booking confirmed. Your flight details have been sent to your email.”

The agent uses its language generation capabilities to formulate these communicative actions, often drawing on its memory of the conversation.

- Exploration: Actions taken to gather more information about the environment or to test hypotheses when the optimal course of action is uncertain. This is important for learning and adapting in novel situations.

Ex: If the agent encounters an unfamiliar API endpoint, it might perform a “test” call with safe parameters to understand its functionality and the expected response format.

The agent might use tools like web search or code interpreters in an exploratory manner to understand a new concept or data source before incorporating it into its plan.

b. Action Production: How the Agent Decides What to Do

Action production is the process by which the agent determines the specific action to take at a given point. This process relies heavily on:

- Memory Recollection: The agent retrieves relevant information from its memory (both short-term and long-term) to inform its decision. This includes past interactions, learned knowledge, and the current state of the task.

Ex: If the user has previously expressed a preference for a particular airline, the agent might recall this from its memory when booking a flight.

The LLM accesses its memory (e.g., conversation history) to understand the context and user preferences, influencing its choice of the next action.

- Plan Following: The agent executes the steps outlined in its current plan. The planning component has already determined the sequence of actions believed to lead to the goal.

Ex: If the plan to book a flight involves “Search flights -> Select flight -> Enter passenger details -> Confirm booking,” the agent will execute these actions in order.

The LLM, guided by its internal planning logic or the structure of the agent framework, will generate the instructions to use specific tools according to the plan.

c. Action Space: The Agent’s Available Choices

The action space defines the set of all possible actions the agent can take in its environment. This is constrained by the tools and knowledge the agent possesses:

- Tools: These are the external functions or capabilities the agent can invoke. The available tools define the agent’s ability to interact with the world. Ex: Tools can include search engines, calculators, APIs for various services (email, calendar, booking), code interpreters, and more.

The LLM selects which tool to use based on the user’s request and the tool descriptions.

- Self-Knowledge: This encompasses the agent’s understanding of its own capabilities, limitations, and internal state. It influences which actions the agent deems feasible or appropriate.

Ex: An agent aware that it doesn’t have access to a specific API won’t attempt to use it. An agent with limited computational resources might choose a simpler planning algorithm.

The LLM’s inherent knowledge about its abilities and the descriptions of the tools it has access to shape its action choices.

d. Action Impact: The Consequences of the Agent’s Deeds

Every action taken by the agent has consequences that affect its environment and its own internal state:

- Environments: Actions directly modify the external world the agent operates in. This could be a physical environment (for a robot) or a digital one (for a software agent).

Ex: Booking a flight changes the state of the airline’s reservation system. Sending an email affects the recipient’s inbox.

Tool calls modify external systems. Communicative actions change the user’s understanding or provide them with information.

- Internal States: Actions can also change the agent’s internal state, including its memory, beliefs, and even its goals or plans.

Ex: After a successful search, the agent’s memory is updated with the retrieved information. If a plan fails, the agent might revise its goals or create a new plan.

The agent’s memory is updated with the results of tool calls and its interactions with the user. The planning component might be triggered to revise the plan based on new information or failures.

Simple AI agent

from langchain.llms import OpenAI
from langchain.agents import initialize_agent, AgentType, load_tools
from langchain.tools import Tool
from langchain.memory import ConversationBufferMemory
import requests

# LLM (a completion model, to match the completion-style OpenAI wrapper)
llm = OpenAI(temperature=0, model_name="gpt-3.5-turbo-instruct")

# Tools
search_tool = load_tools(["ddg-search"], llm=llm)

def get_weather(city: str) -> str:
    """Useful for getting the current weather in a given city."""
    base_url = "http://api.openweathermap.org/data/2.5/weather"
    # Replace with your actual API key
    params = {"q": city, "appid": "YOUR_OPENWEATHERMAP_API_KEY", "units": "metric"}
    response = requests.get(base_url, params=params, timeout=10)
    if response.status_code == 200:
        # Parse the body only after a successful response
        data = response.json()
        description = data["weather"][0]["description"]
        temperature = data["main"]["temp"]
        return f"The current weather in {city} is {description} with a temperature of {temperature}°C."
    else:
        return f"Could not retrieve weather information for {city}."

weather_tool = Tool(
    name="Get Weather",
    func=get_weather,
    description="Useful for getting the current weather in a given city.",
)

available_tools = search_tool + [weather_tool]

# Memory
memory = ConversationBufferMemory(memory_key="chat_history")

# Planning Module & Output Parser (implicitly handled by initialize_agent).
# The conversational ReAct agent is used because its prompt includes chat_history;
# the zero-shot ReAct prompt has no slot for the memory contents.
agent = initialize_agent(
    llm=llm,
    tools=available_tools,
    agent=AgentType.CONVERSATIONAL_REACT_DESCRIPTION,
    memory=memory,
    verbose=True,
)

# Action Execution (handled within agent.run())
user_query = "What is the capital of France and what is the current weather in San Jose?"
response = agent.run(user_query)
print(response)

# Subsequent turn demonstrating memory
response_2 = agent.run("What about the weather there tomorrow?")
print(response_2)

Agentic frameworks

Agentic frameworks are software platforms designed to build, deploy, and manage AI agents: autonomous systems capable of making decisions, managing workflows, and executing tasks independently.

These frameworks provide the foundational architecture, communication protocols, and integration tools necessary for agents to interact with data sources, APIs, external tools, and even other agents.

Key features of agentic frameworks include:

- Pre-built components and abstractions for rapid agent development

- Tool and API integration for access to real-time data and external services

- Memory management for context retention and long-term task execution

- Multi-agent collaboration allowing agents to work together on complex problems

- Planning and reasoning capabilities for goal-setting, sequencing actions, and adapting to obstacles

- Monitoring, debugging, and governance tools to ensure reliability, safety, and compliance

Agentic frameworks streamline the process of creating AI agents by providing reusable patterns, workflow orchestration, and utilities for autonomous operation.

Some of the top AI Agent Frameworks are:

- Langchain: A comprehensive framework providing modular components for building various LLM-powered applications, including agents with tools, memory, and planning capabilities, making it a foundational choice for many agent developers. Its flexibility allows for the creation of diverse agent architectures and workflows.

- LangGraph: Built on top of Langchain, it’s designed for creating stateful, multi-actor applications, enabling the development of more complex and conversational agents with defined lifecycles and interactions between different components. It facilitates building robust and dynamic agentic workflows.

- CrewAI: Focuses on building autonomous AI agents that work collaboratively as a “crew” with defined roles and responsibilities to tackle complex goals through coordinated actions and communication, emphasizing team-based AI problem-solving. It streamlines the creation of multi-agent systems with specific agent functions.

- Swarm (OpenAI): An experimental, lightweight framework for multi-agent orchestration. It is built around two primitives, agents and handoffs, so that control can pass from one agent to another during a conversation. It is geared towards small, easily inspected multi-agent workflows rather than large production systems.

- AutoGen (Microsoft): Enables the creation of multi-agent conversational AI applications, facilitating collaboration and communication between different agents with defined roles to solve tasks, often incorporating human intervention in the workflow. Its strength lies in orchestrating interactions between multiple AI entities.

- LlamaIndex: Primarily focused on connecting LLMs to external data sources, enabling Retrieval-Augmented Generation (RAG) capabilities that are crucial for grounding agents in specific knowledge and allowing them to access and utilize information beyond their internal training. It excels at making external data accessible to LLM-powered agents.

- Semantic Kernel (Microsoft): Integrates LLMs with traditional programming by allowing developers to define “Skills” (called plugins in current versions, both native and LLM-powered) that agents can utilize, along with planners to automate step-by-step execution using these skills. It aims to bridge the gap between natural language AI and code-based functionalities within agent development.

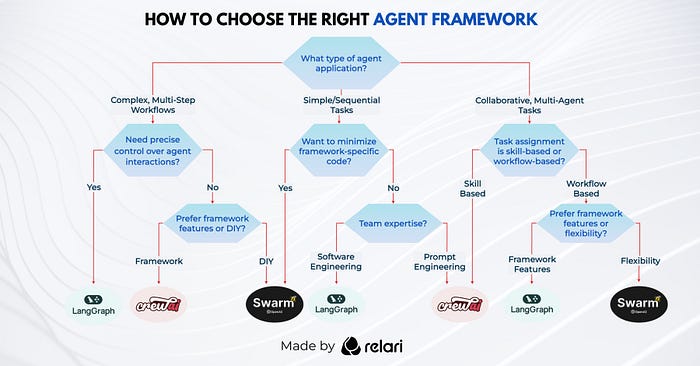

How to choose the right agent framework

Choosing the right agent framework boils down to understanding your project’s core needs and your team’s strengths. First, define the complexity and collaboration requirements of your agent application: is it simple and sequential, intricate with precise control, or a multi-agent system? Second, consider your development style and team expertise: do you prefer leveraging built-in framework features for speed, or a more DIY approach for maximum flexibility, and what is your team’s strength (software engineering or prompt engineering)? The flowchart guides you through these questions to suggest frameworks like LangGraph, CrewAI, or Swarm based on your specific answers.

AI Agents Infrastructure

This image provides a layered view of the ecosystem and key players involved in building and deploying AI Agents. It categorizes different aspects of the infrastructure, from the foundational models to the end-user applications.

Types of Agents

The concept of “agents” has evolved from simple, rule-based systems to sophisticated, adaptive AI entities. Early agents, such as simple reflex agents, were not truly considered AI: they operated purely on predefined rules and immediate inputs, lacking memory, learning, or reasoning capabilities. As technology advanced, agents began incorporating internal models, goal orientation, utility functions, and learning abilities, enabling them to operate in complex, partially observable, and dynamic environments. Here’s a breakdown of common types of AI Agents:

- Simple Reflex Agents:

These are the most basic type. They react directly to the current percept (what they sense) based on a predefined set of condition-action rules. They have no memory of past states and don’t consider the future consequences of their actions.

Ex: A thermostat that turns the heater on when the temperature drops below a threshold and off when it rises above.

Limitations: Limited intelligence, cannot handle situations not explicitly programmed, no learning or adaptation.
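The thermostat example can be written as a table of condition-action rules. The 20-degree threshold and the action names are arbitrary values chosen for this sketch.

```python
THRESHOLD_C = 20.0  # arbitrary threshold for the example

# Condition-action rules: the first rule whose condition holds decides the action.
RULES = [
    (lambda temp: temp < THRESHOLD_C, "heater_on"),
    (lambda temp: temp >= THRESHOLD_C, "heater_off"),
]


def simple_reflex_agent(percept):
    """Map the current percept directly to an action; no memory, no lookahead."""
    for condition, action in RULES:
        if condition(percept):
            return action


print(simple_reflex_agent(18.5))
print(simple_reflex_agent(22.0))
```

The function keeps no state between calls, which is exactly why such an agent cannot handle anything its rules do not cover.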

- Model-Based Reflex Agents:

These agents maintain an internal “model” of the world, representing how the world evolves independently of the agent’s actions and how the agent’s actions affect the world. This model allows them to make decisions based on the current percept and their understanding of the world’s state.

They are generally considered the starting point of AI agents, because they use internal models to handle partially observable environments, adapt to some changes, and make decisions that go beyond simple rule-following.

Ex: A robot vacuum cleaner that has a map of the house. It uses its sensors to determine its current location and uses the map to decide where to go next, even if it hasn’t directly sensed an obstacle in front of it.

Improvements over Simple Reflex: Can handle partially observable environments by reasoning about unobserved aspects of the world.
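The robot vacuum example could be sketched roughly as follows. The class name, the grid representation, and the percept format are all hypothetical; the point is only that the agent keeps an internal model (remembered obstacles) and uses it even when the current percept reports nothing.

```python
class ModelBasedVacuum:
    """Keeps an internal map of obstacles and consults it when choosing a move."""

    def __init__(self, width: int, height: int):
        self.width, self.height = width, height
        self.obstacles = set()      # internal model: cells believed to be blocked
        self.position = (0, 0)

    def update_model(self, percept: dict) -> None:
        # The percept format is an assumption: current position plus obstacles seen now.
        self.position = percept["position"]
        self.obstacles.update(percept["obstacles_seen"])

    def choose_move(self) -> tuple:
        x, y = self.position
        for dx, dy in [(1, 0), (0, 1), (-1, 0), (0, -1)]:
            nxt = (x + dx, y + dy)
            in_bounds = 0 <= nxt[0] < self.width and 0 <= nxt[1] < self.height
            if in_bounds and nxt not in self.obstacles:
                return nxt
        return self.position        # nowhere to go: stay put


vacuum = ModelBasedVacuum(3, 3)
vacuum.update_model({"position": (0, 0), "obstacles_seen": [(1, 0)]})
first_move = vacuum.choose_move()
# Later, the sensors report nothing, but the remembered obstacle still applies.
vacuum.update_model({"position": (0, 0), "obstacles_seen": []})
second_move = vacuum.choose_move()
```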

- Goal-Based Agents:

These agents not only have a model of the world but also have explicit goals they are trying to achieve. Their actions are chosen to reach these goals. They might consider sequences of actions and their potential outcomes.

Ex: A navigation system in a car. It has a map (model) and a destination (goal). It plans a sequence of turns to reach the destination, considering factors like traffic.

Key Feature: Decision-making involves reasoning about future states and choosing actions that lead closer to the goal.
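A goal-based agent can be illustrated with a small route planner. This is a sketch that assumes a toy road graph and uses breadth-first search as the planning method; a real navigation system would also weigh traffic and distance.

```python
from collections import deque


def plan_route(graph: dict, start: str, goal: str):
    """Breadth-first search: returns a path with the fewest hops, or None."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                frontier.append(path + [neighbor])
    return None


# Hypothetical road map (the model) and destination (the goal).
ROADS = {
    "home": ["a", "b"],
    "a": ["c"],
    "b": ["c", "office"],
    "c": ["office"],
    "office": [],
}
route = plan_route(ROADS, "home", "office")
```

The agent reasons about future states (where each turn leads) before committing to the first action.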

- Utility-Based Agents:

These agents go beyond simply having goals; they also consider a “utility function” that assigns a value (a measure of happiness or desirability) to different states. They aim to maximize their expected utility. This allows them to make more nuanced decisions when multiple goals conflict or when there are trade-offs involved.

Ex: A self-driving car that needs to reach a destination quickly (goal) but also wants to ensure passenger comfort and safety (utility). It might choose a slightly longer route with smoother traffic over a faster but more congested one.

Key Feature: Decision-making involves evaluating different action sequences based on their potential to lead to states with higher utility.
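The trade-off in the self-driving example can be sketched with a simple utility function. The weights and route attributes below are invented for illustration, and the sketch scores known outcomes rather than computing an expectation over uncertain ones.

```python
def utility(route: dict, w_time: float = 1.0, w_comfort: float = 0.5, w_risk: float = 2.0) -> float:
    """Higher is better: penalize travel time and risk, reward comfort."""
    return -w_time * route["minutes"] + w_comfort * route["comfort"] - w_risk * route["risk"]


# Hypothetical candidate routes; all numbers are made up.
routes = [
    {"name": "highway", "minutes": 25, "comfort": 4, "risk": 3},
    {"name": "scenic", "minutes": 30, "comfort": 9, "risk": 1},
]
best = max(routes, key=utility)
```

With these assumed weights the slightly longer route scores higher, which mirrors the choice described in the example above.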

- Learning Agents:

These are the most advanced type in this hierarchy. They can improve their performance over time by learning from their experiences. A learning agent typically has a “performance element” (which acts like one of the agents above), a “learning element” (which modifies the performance element based on feedback), a “critic” (which evaluates the agent’s performance), and a “problem generator” (which suggests new actions to explore).

Ex: A chess-playing AI that learns from playing games, identifying successful strategies and mistakes to improve its future moves.

Key Feature: Ability to adapt and improve their behavior based on data and feedback.
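One possible way to map the four parts of a learning agent onto code is an epsilon-greedy learner. This is only a sketch under that assumption: the class and method names are hypothetical, and the reward passed to `learn` stands in for the critic's evaluation.

```python
import random


class LearningAgent:
    def __init__(self, actions, epsilon: float = 0.1, seed: int = 0):
        self.values = {a: 0.0 for a in actions}   # estimated value of each action
        self.counts = {a: 0 for a in actions}
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def act(self):
        # Problem generator: occasionally try something other than the current best.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        # Performance element: pick the action with the highest estimated value.
        return max(self.values, key=self.values.get)

    def learn(self, action, reward: float) -> None:
        # Learning element: running average of the critic's feedback (the reward).
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


agent = LearningAgent(["opening_a", "opening_b"], epsilon=0.0)
agent.learn("opening_b", 1.0)   # the critic reports a win after opening_b
choice = agent.act()
```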

- Multi-Agent Systems (MAS):

A Multi-Agent System is not a single agent but rather a collection of multiple autonomous agents that interact with each other within a shared environment to solve a problem or achieve a common goal. These agents can have varying degrees of autonomy, intelligence, and individual goals, and their interactions can range from cooperation to competition. The complexity arises from managing the interactions, communication, coordination, and potential conflicts between these individual agents.

Ex: A team of robots working together in a warehouse to sort packages. Each robot might have its own specific tasks and sensors, but they need to communicate and coordinate their movements to avoid collisions and efficiently complete the overall sorting process.

Another example could be a swarm of drones working together for search and rescue.

Key Features: Decentralized control, interaction (communication, negotiation, cooperation, competition), emergence of complex behaviors from individual agent interactions, challenges in coordination and conflict resolution.

Let’s look at multi-agent systems in detail:

Multi-Agent Systems

Single-agent architectures, while suitable for many tasks, can face limitations when dealing with complexity, scale, and the need for specialized expertise. Key drawbacks include:

- A single agent can become overwhelmed trying to manage intricate tasks with numerous sub-goals, diverse information sources, and complex decision-making processes.

- A single agent might be forced to handle tasks requiring different skill sets or knowledge domains, leading to suboptimal performance in certain areas.

- A single agent typically operates sequentially, limiting the potential for parallel processing and slowing down the completion of tasks that could be broken down and handled concurrently.

- If the single agent encounters an unexpected situation or fails, the entire system’s progress can be halted. There’s no redundancy or ability for other entities to take over.

- Representing and interacting with inherently multi-faceted environments or simulating the behavior of multiple interacting entities can be challenging for a single agent.

Multi-agent architectures became necessary to overcome these limitations and address more complex real-world problems.

A Multi-Agent System (MAS) is a computational system composed of multiple autonomous agents that interact with each other within a shared environment to achieve individual or collective goals. These agents are typically capable of perceiving their environment, making decisions, and acting upon it.

The complexity of a MAS arises from the need to manage the interactions, communication, coordination, and potential conflicts between these individual agents.

Ex: A customer service center can be effectively modeled as a Multi-Agent System (MAS), where different software components and even human agents operate as autonomous entities interacting to serve customer needs.

- AI Chatbots (Level 1 Support): These are autonomous software agents designed to handle common and simple customer inquiries. They can understand natural language, access basic knowledge bases (FAQs, product information), and provide immediate responses or guide customers through basic troubleshooting steps.

- Specialized AI Agents (Tiered Support): More sophisticated AI agents can be designed to handle specific types of complex issues (e.g., billing inquiries, technical support for a particular product line). They might have access to more specialized knowledge bases and diagnostic tools.

- Human Customer Service Representatives: These are also agents within the system, possessing higher-level reasoning, emotional intelligence, and the ability to handle unique or escalated issues. They rely on their knowledge, experience, and various software tools.

- Knowledge Base Systems: While not always considered active agents, the knowledge bases themselves can be seen as passive information agents that provide data to the active agents.

- Routing and Escalation Systems: These software components act as coordination agents, directing customer inquiries to the most appropriate agent (human or AI) based on the nature of the problem, agent availability, and service level agreements.

- Ticketing Systems: These systems can be viewed as memory agents, storing the history of customer interactions, problem resolutions, and agent assignments, allowing all agents to have context.

Core Components of a Multi-Agent System

While individual agents within a MAS can have their own internal architectures (as discussed previously), a MAS as a whole typically comprises the following core components:

1. A Set of Autonomous Agents: As described above, these are the fundamental building blocks of the system, each with its own capabilities and goals.

2. A Shared Environment: The space in which the agents operate, perceive, and act. The environment can be:

- Discrete or Continuous: Based on how time and space are represented.

- Static or Dynamic: Based on whether the environment changes independently of the agents’ actions.

- Deterministic or Stochastic: Based on the predictability of the environment’s changes.

- Fully or Partially Observable: Based on how much information each agent can access about the environment’s state.

3. Interaction Mechanisms: The ways in which agents communicate and influence each other. These can include:

- Communication Languages and Protocols: Defining the format and rules for exchanging messages.

- Direct Communication: Agents explicitly sending messages to each other.

- Indirect Communication: Agents modifying the environment, which is then perceived by other agents, influencing their behavior.

- Negotiation Protocols: Rules for reaching agreements on shared goals or resource allocation.

- Coordination Mechanisms: Explicit or implicit methods for aligning agent behaviors to achieve system-level goals (e.g., planning, task allocation, social conventions).

4. Organizational Structure (Optional but Common): The relationships and hierarchies between agents, which can influence their interactions and responsibilities. Examples include teams, coalitions, hierarchies, or market-like structures.

5. Goals (Individual and/or Collective): The objectives that drive the agents’ behavior and the overall system’s purpose.

Multi-agent architectures

Each approach offers different levels of control, communication patterns, and complexity:

1. Network:

Agents are connected through a communication network, allowing them to directly exchange messages with each other. This is often a peer-to-peer or distributed communication model.

- Control: Agents have a high degree of autonomy in deciding when and with whom to communicate. Coordination emerges from these direct interactions.

- Flexibility: Highly flexible, allowing for complex and dynamic interaction patterns. Agents can form ad-hoc teams or collaborations.

- Complexity: Can be complex to manage and ensure coherent behavior, especially with a large number of agents. Requires robust communication protocols and potentially mechanisms for conflict resolution.

Use Cases: Distributed problem solving, sensor networks, social simulations, robotic swarms.

2. Supervisor:

A designated “supervisor” agent acts as a central coordinator, directing the activities of other agents. The supervisor typically assigns tasks, monitors progress, and facilitates communication.

Agents report to the supervisor, receive instructions, and potentially share results through it.

- Control: The supervisor has significant control over the actions of the other agents.

- Flexibility: Can be less flexible than a pure network model, as agents primarily follow the supervisor’s directives.

- Complexity: Can simplify coordination but introduces a single point of failure. The supervisor’s logic can become complex for large systems.

Use Cases: Task allocation in manufacturing robots, centralized workflow management, hierarchical control systems.
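The supervisor pattern can be sketched in a few lines. Everything here is hypothetical (worker names, task strings, the `Supervisor` class); it only illustrates a central coordinator that assigns tasks, records progress, and collects results.

```python
from typing import Callable, Dict, List, Tuple


def research_worker(task: str) -> str:
    return f"notes on {task}"


def summary_worker(task: str) -> str:
    return f"summary of {task}"


class Supervisor:
    """Central coordinator: assigns each task to a named worker and collects results."""

    def __init__(self, workers: Dict[str, Callable[[str], str]]):
        self.workers = workers
        self.log: List[Tuple[str, str, str]] = []   # (worker, task, result) for monitoring

    def run(self, assignments: List[Tuple[str, str]]) -> List[str]:
        results = []
        for worker_name, task in assignments:
            result = self.workers[worker_name](task)   # workers act only when instructed
            self.log.append((worker_name, task, result))
            results.append(result)
        return results


supervisor = Supervisor({"research": research_worker, "summary": summary_worker})
results = supervisor.run([("research", "warehouse robots"), ("summary", "sensor networks")])
```

If the `Supervisor` object fails, no worker receives instructions, which is the single point of failure noted above.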

3. Supervisor (Tool-Calling):

This is a specialized form of the supervisor model, particularly relevant in the context of LLM Agents. The supervisor agent orchestrates other agents by instructing them to use specific “tools” or functions.

The supervisor analyzes the overall goal and breaks it down into steps, then calls upon other agents (or itself) to execute those steps using their designated tools. The output of one tool call can be the input for another.

- Control: Relatively centralized, with the supervisor determining the sequence and purpose of tool calls. However, the agents executing the tools might have autonomy within that specific tool’s operation.

- Flexibility: Offers a structured way to manage complex tasks by leveraging the specific capabilities of different agents (represented by their tools).

- Complexity: Depends on the sophistication of the supervisor’s planning and tool-calling logic.

Use Cases: Complex task automation where different agents have specialized skills or access to specific APIs, orchestrated workflows involving LLMs and external tools (as seen in LangChain’s AgentExecutor).

4. Hierarchical:

Agents are organized in a tree-like structure with different levels of authority and responsibility. Higher-level agents manage and coordinate the activities of agents below them.

Communication and control flow up and down the hierarchy. Higher-level agents might decompose tasks for lower-level agents and aggregate their results.

- Control: Distributed across the hierarchy, with higher levels exerting more influence over lower levels.

- Flexibility: Offers a balance between centralized control and distributed autonomy. The hierarchy can be designed to reflect the structure of the problem or organization.

- Complexity: Designing and managing the hierarchical structure and communication protocols can be intricate.

Use Cases: Organizational structures in virtual teams, complex robotic systems with task delegation, multi-layered control systems.

5. Custom Multi-Agent Workflow:

Developers design a specific, tailored workflow that dictates how different agents interact and collaborate to achieve a particular goal. This approach can combine elements of the other methods or introduce entirely new interaction patterns.

The workflow is often explicitly programmed, defining the sequence of agent actions, communication steps, and data flow.

- Control: Can range from highly centralized (if the workflow is rigidly defined) to more decentralized (if agents have some autonomy within the workflow).

- Flexibility: Highly flexible, allowing developers to optimize the interaction pattern for a specific problem.

- Complexity: Can be complex to design and implement correctly, requiring a deep understanding of the task and the capabilities of the agents.

Use Cases: Specialized applications with well-defined collaboration patterns, custom automation processes, complex simulations requiring specific interaction sequences.

How AI Agents Communicate

AI agents in multi-agent systems can communicate and coordinate using several architectural patterns and protocols.

Graph State vs Tool Calls

Graph State Communication:

Agents maintain and update a shared or partially shared graph representing the current state of the problem, environment, or the relationships between agents. Communication happens implicitly through these state updates. Agents observe changes in the graph and react accordingly.

Agents read from and write to the shared graph structure. Changes made by one agent become visible to others based on their access and monitoring of relevant parts of the graph.

Ex: A team of robotic agents collaborating to clean a room. The shared graph could represent the map of the room, the cleanliness status of different areas, and the current locations of the robots.

- Agent 1 cleans a section and updates the graph node for that area to “clean.”

- Agent 2, observing the graph, identifies an adjacent “unclean” area and moves to clean it.

- Agent 3, needing to navigate, queries the graph for the locations of other agents to avoid collisions.
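The room-cleaning example can be approximated with a shared state object that every robot reads from and writes to. In this sketch a plain dictionary stands in for the shared graph, and the area names, robot IDs, and helper functions are assumptions.

```python
# Shared state: cleanliness per area plus the last known location of each robot.
shared_state = {
    "areas": {"kitchen": "unclean", "hall": "unclean", "bedroom": "unclean"},
    "robots": {},
}


def clean_next_area(robot_id: str, state: dict):
    """Read the shared state, pick the first unclean area, and write the update back."""
    for area, status in state["areas"].items():
        if status == "unclean":
            state["robots"][robot_id] = area
            state["areas"][area] = "clean"
            return area
    return None   # nothing left to clean


def other_robot_locations(robot_id: str, state: dict) -> dict:
    """What a navigating robot would query to avoid collisions."""
    return {rid: loc for rid, loc in state["robots"].items() if rid != robot_id}


assignments = [clean_next_area(r, shared_state) for r in ["robot_1", "robot_2", "robot_1", "robot_2"]]
```

No robot sends a message to another; coordination happens only through the updates each one makes to the shared state.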

Tool Calls:

Agents communicate by explicitly invoking “tools” or functions provided by other agents or the system. One agent requests another to perform a specific action or provide specific information by calling its tool.

Agent A sends a structured request to Agent B, specifying the tool to use and any necessary parameters. Agent B executes the tool and returns the result to Agent A.

Ex: A multi-agent system for booking travel:

- User Agent receives a request to book a flight and hotel.

- User Agent calls the “Flight Booking Agent”’s find_flights(destination, date, ...) tool.

- Flight Booking Agent executes the search and returns a list of available flights.

- User Agent then calls the “Hotel Booking Agent”’s find_hotels(location, check_in, check_out, ...) tool.

- Hotel Booking Agent returns a list of available hotels.

- User Agent presents the flight and hotel options to the user.
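The travel-booking exchange above can be sketched as structured requests dispatched to registered tools. The tool bodies return stubbed data, and the request format (`tool` plus `arguments`) is an assumption rather than any particular framework's schema.

```python
def find_flights(destination: str, date: str) -> list:
    # Stub: a real Flight Booking Agent would query an airline or aggregator API.
    return [{"flight": "XY123", "destination": destination, "date": date}]


def find_hotels(location: str, check_in: str, check_out: str) -> list:
    # Stub: a real Hotel Booking Agent would query a hotel inventory.
    return [{"hotel": "Example Inn", "location": location,
             "check_in": check_in, "check_out": check_out}]


TOOLS = {"find_flights": find_flights, "find_hotels": find_hotels}


def call_tool(request: dict):
    """Execute the tool named in a structured request and return its result."""
    return TOOLS[request["tool"]](**request["arguments"])


# The User Agent issues two explicit tool calls and then combines the results.
flights = call_tool({"tool": "find_flights",
                     "arguments": {"destination": "Lisbon", "date": "2025-07-01"}})
hotels = call_tool({"tool": "find_hotels",
                    "arguments": {"location": "Lisbon", "check_in": "2025-07-01",
                                  "check_out": "2025-07-05"}})
options = {"flights": flights, "hotels": hotels}
```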

Different State Schemas

This refers to how each agent internally represents and communicates its own state, and the need for interoperability.

Agents might have different ways of structuring and encoding their internal state (e.g., different data structures, ontologies, or even natural language descriptions). Effective communication requires mechanisms to translate or align these different schemas.

Agents might use:

- Agreed-upon standard schemas: All agents adhere to a common way of representing certain types of information.

- Translation mechanisms: Agents have the ability to convert their internal state into a format understandable by other agents (and vice versa).

- Mediator agents: A dedicated agent acts as an intermediary, responsible for understanding and translating between different state schemas.

Example: A system with a weather analysis agent and a plant watering agent:

- The Weather Agent might represent temperature as a numerical value with units (“temperature: 25.5 °C”).

- The Plant Watering Agent might represent temperature as a categorical variable based on plant needs (“temperature_level: warm”).

- For the Watering Agent to understand the Weather Agent’s report, a translation mechanism (e.g., a set of rules mapping numerical ranges to categorical levels) would be needed.
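A translation mechanism for the weather and watering agents might look like the sketch below. The temperature ranges and the field names are assumptions chosen for illustration; the source only specifies that numerical values are mapped to categorical levels.

```python
def to_temperature_level(celsius: float) -> str:
    """Map a numeric reading to the categories the Plant Watering Agent understands."""
    if celsius < 10:
        return "cold"
    if celsius < 20:
        return "mild"
    if celsius < 30:
        return "warm"
    return "hot"


def translate_weather_report(report: dict) -> dict:
    """Convert the Weather Agent's schema into the Watering Agent's schema."""
    return {"temperature_level": to_temperature_level(report["temperature"])}


weather_report = {"temperature": 25.5, "unit": "C"}   # Weather Agent's representation
watering_input = translate_weather_report(weather_report)
```

The same function could live inside either agent or inside a dedicated mediator agent, depending on which of the three strategies above is used.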

Shared Message List

Agents communicate by posting and reading messages from a shared, ordered list or queue. This acts as a central bulletin board or communication channel where agents can asynchronously exchange information.

Agents write messages containing relevant information or requests to the shared list. Other agents monitor the list and process messages that are relevant to them.

Ex: A distributed task allocation system:

- A Task Dispatcher Agent posts new tasks to the shared message list, including a task ID and description.

- Available Worker Agents monitor the list for new tasks.

- A Worker Agent claims a task by posting a message indicating its intent to work on that specific task ID.

- The Task Dispatcher Agent (or other interested agents) can then track the progress of the task through further messages on the list.

- Completed tasks are also reported via messages on the list.
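The task allocation flow can be sketched with an in-memory list acting as the shared message channel. The message fields (`sender`, `kind`, `task_id`, `body`) are hypothetical; a production system would more likely use a message queue or a database.

```python
messages = []   # the shared, ordered message list


def post(sender: str, kind: str, task_id: int, body: str = "") -> None:
    messages.append({"sender": sender, "kind": kind, "task_id": task_id, "body": body})


def unclaimed_tasks() -> list:
    """Tasks that have been posted but not yet claimed by any worker."""
    claimed = {m["task_id"] for m in messages if m["kind"] == "claim"}
    return [m for m in messages if m["kind"] == "task" and m["task_id"] not in claimed]


post("dispatcher", "task", 1, "resize images")
post("dispatcher", "task", 2, "send report")
post("worker_a", "claim", 1)           # worker_a announces it will handle task 1
open_ids = [m["task_id"] for m in unclaimed_tasks()]
post("worker_a", "done", 1)            # completion is reported on the same list
```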

RAG vs Agents

A crucial decision when building intelligent applications with LLMs is when to use Retrieval-Augmented Generation (RAG) and when to use AI agents.

They are not mutually exclusive and can even be combined, but they address different core needs.

When to Use RAG:

- Need to ground LLM responses in specific, up-to-date, or proprietary knowledge: When the LLM’s internal knowledge is insufficient, outdated, or doesn’t cover your specific domain.

- Focus on factual accuracy and reducing hallucinations: By providing the LLM with relevant context, RAG helps it generate more truthful and grounded answers.

- Question answering over a known corpus: When the primary task is to answer user questions based on a defined set of documents or data.

- Summarization of specific content: When you need the LLM to summarize information from a given set of documents or web pages.

- Accessing and utilizing information from structured or unstructured data sources: RAG can be used to retrieve relevant chunks from PDFs, websites, databases, etc.

- Maintaining control over the information source: You explicitly define the knowledge base the LLM can access.

Best Scenarios for RAG:

- Customer Support Chatbots: Answering customer inquiries based on company knowledge bases, product manuals, and FAQs.

- Internal Knowledge Bases: Allowing employees to ask questions about company policies, procedures, and internal documentation.

- Research Assistance: Providing researchers with relevant papers, articles, and data based on their queries.

- Legal Document Analysis: Answering questions and summarizing information from legal contracts and case files.

- Educational Tools: Providing students with information and answers based on course materials.

- News and Article Summarization: Summarizing specific news articles or collections of articles.

Why RAG is Best in These Scenarios:

- Factual Grounding: Ensures responses are based on reliable sources you control.

- Reduced Hallucinations: Limits the LLM’s tendency to generate incorrect or nonsensical information.

- Up-to-Date Information: Allows access to the latest information without retraining the entire LLM.

- Domain Specificity: Enables the LLM to become an expert in a specific domain by providing it with relevant knowledge.

When to Use AI Agents:

- Need to perform complex tasks that require multiple steps and interactions with external systems: When the task goes beyond simple question answering and involves taking actions.

- Automation of workflows: When you need an LLM to orchestrate a series of tool calls to achieve a goal.

- Dynamic and unpredictable environments: Agents can observe their environment and adjust their plan based on the outcomes of their actions.

- Tasks requiring planning, decision-making, and potentially iteration: Agents can break down complex problems into smaller steps and decide on the best course of action.

- Conversational interactions where the goal evolves over time: Agents can maintain state and adapt their behavior based on the ongoing conversation.

- Integration with various tools and APIs: Agents can leverage external functionalities to perform actions in the real or digital world.

Best Scenarios for AI Agents:

- Personal Assistants: Booking appointments, setting reminders, sending emails, controlling smart home devices.

- Software Development Automation: Writing and executing code, managing projects.

- Data Analysis and Reporting: Gathering data from multiple sources, performing analysis, and generating reports.

- Complex Problem Solving: Tackling tasks that require reasoning, planning, and multiple steps (e.g., debugging code, planning a trip).

- Autonomous Systems: Controlling robots or other autonomous entities to achieve goals in complex environments.

- Multi-turn Conversational Applications with Actions: Guiding users through multi-step processes or troubleshooting.

Why AI Agents are Best in These Scenarios:

- Actionable Intelligence: Enables the LLM to go beyond providing information and actually do things.

- Task Automation: Automates complex workflows that would otherwise require human intervention.

- Adaptability: Can adjust behavior based on environmental feedback and the results of actions.

- Orchestration: Manages the interaction between different tools and systems.

- Stateful Interactions: Can maintain context and build upon previous interactions.

Agentic RAG

Agentic RAG is an advanced approach that combines the benefits of RAG with the autonomous decision-making capabilities of AI agents. Unlike traditional RAG, where retrieval is often a fixed initial step, Agentic RAG lets the large language model (LLM) decide when, what, and how to retrieve information from external knowledge sources as part of a dynamic and iterative problem-solving process. The LLM acts as an intelligent agent, using retrieval as one tool within its broader toolkit.

How Does Agentic RAG Work?

Scenario: A user in San Jose, California, asks: “What’s the weather like here right now and are there any ongoing research projects in AI at Stanford University I should know about?”

1. User Query: “What’s the weather like here right now and are there any ongoing research projects in AI at Stanford University I should know about?”

2. Planning & Reasoning (LLM Agent):

The LLM agent analyzes the query and identifies two distinct information needs:

- Sub-task 1: Get the current weather in San Jose, California. This requires a real-time data source.

- Sub-task 2: Find information about ongoing AI research projects at Stanford University. This requires accessing information about academic research.

The agent formulates a plan:

a. Use a “Weather Tool” to get the current weather in the user’s location (which it knows is San Jose based on context).

b. Use a “Research Project Search Tool” to find ongoing AI research at Stanford.

c. Synthesize the weather information and relevant research projects into a coherent response.

3. Strategic Retrieval & Tool Usage:

- Step 1: Getting the Weather

The agent’s internal Router (or planning logic) determines that the “Weather Tool” is appropriate for the first sub-task.

The agent invokes the “Weather Tool” with the parameter: location="San Jose, California".

Data Source (for Weather Tool): This tool likely interacts with a real-time weather API (e.g., OpenWeatherMap, WeatherAPI).

The “Weather Tool” makes an API call and retrieves the current weather conditions (temperature, description, humidity, etc.).

- Step 2: Finding AI Research Projects

The agent’s plan moves to the second sub-task. The Router identifies the “Research Project Search Tool” as suitable.

The agent formulates a query for this tool: institution="Stanford University", research_area="Artificial Intelligence", status="ongoing".

Data Sources (for Research Project Search Tool): This tool might interact with:

a. University Websites (via web scraping or APIs if available): Specifically Stanford’s AI labs or research pages.

b. Research Databases (via APIs): Possibly querying for projects affiliated with Stanford’s AI departments on platforms like NSF Research.gov or similar academic project databases.

c. Pre-indexed Vector Database of University Research: A database containing embeddings of project descriptions and faculty research interests from Stanford’s AI domain.

4. Observation & Integration:

The LLM agent receives the retrieved information:

- From “Weather Tool”: A structured data object or text string containing the current weather information for San Jose. Example: "The current temperature in San Jose is 22°C with clear skies and low humidity."

- From “Research Project Search Tool”: A list of ongoing AI research projects at Stanford, potentially including project titles, brief descriptions, involved faculty, and links to project pages. Example:

[

{"title": "Advancing Natural Language Understanding with Large Models", "description": "Researching techniques to improve the reasoning and comprehension abilities of large language models.", "faculty": "Prof. Christopher Manning"},

{"title": "Robot Learning for Autonomous Navigation", "description": "Developing novel algorithms for robots to learn complex navigation tasks in unstructured environments.", "faculty": "Prof. Fei-Fei Li"},

{"title": "AI for Medical Diagnosis", "description": "Exploring the use of deep learning for early and accurate diagnosis of medical conditions.", "faculty": "Prof. Andrew Ng"}

]

The agent integrates this information into its internal understanding.

5. Iterative Refinement (Optional but Possible):

Depending on the quality and relevance of the initial results, the agent could perform further steps:

- If the research project descriptions are too vague: The agent might use a “Web Browser Tool” to visit the linked project pages for more details on a few promising projects.

- If the user asks for specific sub-areas of AI: The agent could refine its query to the “Research Project Search Tool” (e.g., “ongoing research projects in natural language processing at Stanford”).

6. Response Generation:

The LLM agent synthesizes the gathered information into a coherent and user-friendly response:

“The current weather in San Jose, California is 22°C with clear skies and low humidity. Regarding ongoing AI research at Stanford University, some notable projects include ‘Advancing Natural Language Understanding with Large Models’ led by Professor Christopher Manning, ‘Robot Learning for Autonomous Navigation’ by Professor Fei-Fei Li, and ‘AI for Medical Diagnosis’ with Professor Andrew Ng. You can likely find more detailed information on the websites of Stanford’s AI labs and the faculty involved.”

In summary, Agentic RAG works by:

- Planning: The LLM agent analyzes the user’s request and breaks it down into sub-tasks.

- Routing: It intelligently selects the appropriate tools (like a Weather Tool and a Research Project Search Tool) based on the nature of each sub-task.

- Tool Invocation: It executes these tools with specific queries tailored to the required information.

- Data Sources: Each tool interacts with relevant data sources (weather APIs, university websites, research databases).

- Observation: The agent receives the results from the tools.

- Integration: It combines the retrieved information with its internal knowledge.

- Iteration (Optional): It can refine its plan and perform further retrieval if needed.

- Response Generation: Finally, it generates a comprehensive answer based on all the gathered information.
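The loop summarized above can be sketched end to end with stubbed tools. In this sketch, keyword matching stands in for the LLM's planning and routing, string joining stands in for response generation, and every function name and return value is hypothetical.

```python
def weather_tool(location: str) -> str:
    return f"(stub) weather for {location}"          # a real tool would call a weather API


def research_search_tool(institution: str, research_area: str) -> list:
    return [f"(stub) {research_area} project at {institution}"]


TOOLS = {"weather_tool": weather_tool, "research_search_tool": research_search_tool}


def plan(query: str) -> list:
    """Stand-in for LLM planning and routing: one (tool, arguments) pair per sub-task."""
    steps = []
    text = query.lower()
    if "weather" in text:
        steps.append(("weather_tool", {"location": "San Jose, California"}))
    if "research" in text:
        steps.append(("research_search_tool",
                      {"institution": "Stanford University",
                       "research_area": "Artificial Intelligence"}))
    return steps


def agentic_rag(query: str) -> str:
    observations = []
    for tool_name, arguments in plan(query):                 # planning and routing
        observations.append(TOOLS[tool_name](**arguments))   # tool invocation, observation
    return " | ".join(str(o) for o in observations)          # stand-in for generation


answer = agentic_rag("What's the weather like here and is there AI research at Stanford?")
```

An iterative version would inspect `observations` after each step and add new steps to the plan when a result is too vague.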

Types of Agentic RAG Routers

1. Single Agentic RAG System:

In a single agentic RAG system, the router’s role is to decide which tool or retrieval strategy the single LLM agent should use at a given step to best address the user’s query. This agent orchestrates its own actions, including when and how to retrieve information.

The router within the agent examines the current state of the agent’s reasoning, the user’s query, and potentially the results of previous steps. Based on this, it selects the most appropriate next action, which could be:

a. Using a specific retrieval tool (e.g., a vector database for general knowledge vs. a web search for real-time info).

b. Using a different type of tool (e.g., a calculator, a summarizer).

c. Directly generating a part of the response if enough information is available.

This routing logic can be implemented in several ways:

- Conditional Logic in Prompts: The prompt itself can guide the LLM to decide its next step based on keywords or the current context.

- LLM-Based Decision Making: The LLM can be prompted to explicitly choose the next tool or action from a predefined set.

- Dedicated Routing Modules: Some agentic frameworks provide specific modules designed to route actions based on the agent’s internal state and the user’s input.

Ex: User asks: “Explain attention mechanisms and find recent papers on efficient transformers.”

- The single LLM agent analyzes the query and identifies two parts.

- The router (within the agent’s logic) sees the “explain” keyword and decides to use the “General Knowledge Retrieval Tool” first.

- The agent retrieves information about attention.

- The agent then processes the “recent papers on efficient transformers” part. The router now decides to use the “Research Paper Search Tool” with the query “efficient transformer architectures published after 2023.”

- The agent retrieves a list of papers.

- Finally, the agent synthesizes the explanation and the paper findings.

In this single-agent scenario, the routing happens internally within the LLM’s decision-making process.
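A keyword-based version of this internal router could look like the sketch below. The tool names follow the example above, while the keyword rules and the naive split on " and " are assumptions; an LLM-based router would make the same decision from a prompt instead.

```python
def route(sub_query: str) -> str:
    """Pick the next action for one part of the user's query."""
    text = sub_query.lower()
    if "paper" in text or "recent" in text:
        return "research_paper_search"
    if "explain" in text or "what is" in text:
        return "general_knowledge_retrieval"
    return "generate_directly"


query = "Explain attention mechanisms and find recent papers on efficient transformers."
sub_queries = query.split(" and ")       # naive decomposition, for illustration only
decisions = [(part, route(part)) for part in sub_queries]
```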

2. Multi-Agent RAG System:

In a multi-agent RAG system, the router’s role is to direct the user’s query or specific sub-tasks to the most appropriate agent in a team of specialized agents. Each agent might have its own RAG capabilities and be expert in a particular domain or type of information.

A central router or a distributed routing mechanism analyzes the user’s query and determines which agent or agents are best suited to handle it. This decision is based on the agents’ defined roles, expertise, and the nature of the information required.

- Central Router Agent: A dedicated agent receives the user query and uses its reasoning to dispatch it to other specialized agents.

- Distributed Routing: Each agent might have the ability to assess if it’s the right agent to handle a part of the query and can either process it or forward it to another agent.

- Skill-Based Routing: The router matches the requirements of the query to the defined skills and knowledge domains of the available agents.

Ex: User asks: “What are the common issues users face with deploying large transformer models and are there any community forums discussing solutions?”

1. A “Customer Support Router Agent” receives the user’s query.

2. The router analyzes the query and identifies two aspects: “deployment issues” and “community forums.”

3. Based on its knowledge of the available agents:

- It routes the “deployment issues” part to a “Technical Support Agent (Transformers)” which has RAG capabilities to access documentation and troubleshooting guides for transformer deployment.

- It routes the “community forums” part to a “Community Engagement Agent” which has RAG capabilities to search for and summarize relevant online forums and discussions.

4. The “Technical Support Agent” retrieves information on common deployment issues.

5. The “Community Engagement Agent” retrieves information about relevant forums.

6. A “Response Synthesis Agent” (or the initial router agent) gathers the information from both specialized agents and generates a unified response to the user.

In this multi-agent scenario, the routing happens at a higher level, directing the query to different specialized agents, each potentially performing its own internal RAG process.
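The skill-based routing described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the `Agent` class, the `route` function, and the keyword sets are hypothetical, the `handle` method stands in for each agent's own RAG pipeline, and a real router would usually let an LLM decide rather than count overlapping keywords.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    skills: set  # keywords describing the agent's domain (assumed for this sketch)

    def handle(self, sub_query: str) -> str:
        # Placeholder for the agent's own RAG pipeline (retrieve, then generate).
        return f"[{self.name}] answer for: {sub_query}"


def route(sub_query: str, agents: list) -> Agent:
    """Pick the agent whose skills overlap most with the words in the sub-query."""
    words = set(sub_query.lower().split())
    return max(agents, key=lambda agent: len(agent.skills & words))


agents = [
    Agent("Technical Support Agent", {"deployment", "issues", "troubleshooting"}),
    Agent("Community Engagement Agent", {"community", "forums", "discussions"}),
]

# The two aspects the router identified in the example query.
sub_queries = ["deployment issues", "community forums"]

# Dispatch each part, then merge the partial answers into one response.
answers = [route(q, agents).handle(q) for q in sub_queries]
unified_response = "\n".join(answers)
print(unified_response)
```

Keeping the routing decision in one small function makes it easy to swap the keyword overlap for an LLM call or an embedding similarity score later.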

Function calling

Function calling in large language models (LLMs) is the capability that allows an LLM to interact with external tools, APIs, or predefined functions by producing structured outputs (such as JSON) that specify which function to invoke and with what parameters. Instead of just generating text, the LLM can recognize when a task requires an external action (such as fetching real-time data, querying a database, or triggering a workflow) and output the necessary instructions to perform that action.

Ex: if a user asks, “What’s the weather in Paris?”, the LLM can generate a structured function call like:

{
  "function": "get_current_weather",
  "parameters": {
    "location": "Paris"
  }
}

This output can then be used by an external system to actually call a weather API and return the results to the user.
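To make the "external system" side concrete, here is a minimal sketch of how an application might execute the structured call above. The `AVAILABLE_FUNCTIONS` registry and `execute_function_call` helper are hypothetical names, and `get_current_weather` returns canned data instead of calling a real weather API.

```python
import json


def get_current_weather(location: str) -> dict:
    # Stand-in for a real weather API call; returns canned data for the sketch.
    return {"location": location, "forecast": "sunny", "temperature_c": 21}


# Only functions listed here may be invoked on the model's behalf.
AVAILABLE_FUNCTIONS = {"get_current_weather": get_current_weather}


def execute_function_call(raw_model_output: str) -> dict:
    """Parse the model's JSON output and call the matching Python function."""
    call = json.loads(raw_model_output)
    name = call["function"]
    if name not in AVAILABLE_FUNCTIONS:
        raise ValueError(f"Unknown function: {name}")
    return AVAILABLE_FUNCTIONS[name](**call["parameters"])


# The structured output from the example above, as the LLM would emit it.
model_output = '{"function": "get_current_weather", "parameters": {"location": "Paris"}}'
result = execute_function_call(model_output)
print(result)
```

The explicit registry matters: the model only proposes a call, and the application decides which functions it is actually willing to run.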

Model Context Protocol

Model Context Protocol (MCP) is an open standard introduced by Anthropic in late 2024 that defines a universal, standardized way for AI models, especially large language models (LLMs), to connect with external data sources, tools, and environments. MCP is often described as the “USB-C port for AI applications” because it provides a common interface, allowing any AI assistant or model to seamlessly interact with any data source or tool that supports the protocol, regardless of who built them.

Technically, MCP uses client/server workflows (typically based on JSON-RPC) and defines clear primitives for exchanging context, invoking tools (such as APIs or functions), and accessing resources (like files or database records). This protocol is not a framework or a tool itself, but a set of rules and standards (similar to HTTP for the web or ODBC for databases) that enables interoperability across the AI ecosystem.
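As a rough illustration of what “based on JSON-RPC” means in practice, the sketch below builds two requests a client might send to a server. The `jsonrpc_request` helper is hypothetical; the method names `tools/list` and `tools/call` follow the MCP specification as commonly documented, but the tool name and arguments are made up, so check the current spec before relying on the exact shapes.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def jsonrpc_request(method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request envelope."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": method,
        "params": params,
    })


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list", {})

# Invoke one tool by name with arguments (tool name is an assumption for this sketch).
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_current_weather", "arguments": {"location": "Paris"}},
)

print(list_tools)
print(call_tool)
```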

Before MCP, integrating AI models with external data and tools was highly fragmented and inefficient:

- Custom Integrations for Each Use Case: Every time a developer wanted to connect an AI model to a new database, cloud service, or application, they had to write custom connectors and prompts. This led to a combinatorial “M×N problem” (M models × N tools), where each connection required bespoke code.

- Information Silos: AI models were often “trapped” behind information silos, unable to access fresh or relevant data outside their static training set.

- Lack of Standardization: Each AI provider (e.g., OpenAI, Anthropic, Meta) had their own way of handling tool use (like function calling or plugins), which meant tools and integrations were not portable across platforms.

- Maintenance Nightmares: The ad-hoc, one-off nature of integrations made AI systems brittle, hard to scale, and difficult to maintain or audit.

- Limited Agentic Capabilities: Without a standard way to access context and tools, AI agents struggled to perform useful actions or reason with up-to-date information.

MCP is designed to address these challenges by:

- Standardizing Integration: MCP provides a single, open protocol for connecting AI models to external tools, APIs, and data sources, eliminating the need for custom integrations for each new combination.

- Reducing Complexity: By turning the M×N integration problem into a much simpler N+M setup, MCP allows developers and tool makers to build to a single standard. Once a tool or model supports MCP, it can work with any other MCP-compatible component.

- Enabling Richer, Context-Aware AI: MCP makes it easy for AI models to access real-time context, reference materials, and perform actions, unlocking more capable and agentic AI assistants.

- Fostering an Open Ecosystem: As an open standard, MCP encourages collaboration and the development of shared connectors, benefiting the broader AI community and accelerating innovation.

- Improving Maintainability and Transparency: MCP’s explicit context management makes it easier to audit, update, and understand how AI systems interact with external resources.
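The M×N versus N+M reduction mentioned in this list is easy to check with a quick calculation. The counts below (4 models, 25 tools) are arbitrary numbers chosen for illustration.

```python
def custom_integrations(models: int, tools: int) -> int:
    # Without a shared protocol, every model/tool pair needs its own connector.
    return models * tools


def protocol_implementations(models: int, tools: int) -> int:
    # With a shared protocol, each model and each tool implements it once.
    return models + tools


models, tools = 4, 25
print(custom_integrations(models, tools))       # 100 bespoke connectors
print(protocol_implementations(models, tools))  # 29 protocol implementations
```

The gap widens as either side grows, which is why a shared standard pays off most in large ecosystems.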

In summary, MCP aims to unify and simplify the way AI models interact with the outside world, making AI systems more powerful, maintainable, and interoperable across platforms.

Core Components of MCP

MCP is an open standard designed to connect AI models, such as LLMs, with external data sources and tools in a secure, standardized, and scalable way. Its architecture is inspired by the Language Server Protocol (LSP) and aims to unify how AI systems access and act on external context.

- Host: The AI-powered application or environment where the end-user interacts, such as the Claude desktop app, IDE plugins, or chat interfaces. It is responsible for initializing and managing clients, handling user authorization, and aggregating context from multiple sources.

- MCP Client: Integrated within the host application, the client acts as a bridge between the host and external MCP servers. It manages stateful, one-to-one connections with servers, routes messages, negotiates protocol versions and capabilities, and manages subscriptions to server resources.

- MCP Server: Provides specialized capabilities, tools, and contextual data to the AI application. Each server typically exposes a specific integration (e.g., GitHub, Google Drive, Postgres) and manages access to those resources.

- Transport Layer: Defines how clients and servers communicate. It supports local connections via STDIO (Standard Input/Output) and remote connections via HTTP with Server-Sent Events (SSE).

- Base Protocol: The communication foundation for MCP, built on JSON-RPC 2.0. It standardizes message types (requests, responses, notifications), lifecycle management (connection, capability negotiation), and session control.
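The three message types of the base protocol can be told apart purely by their shape, following JSON-RPC 2.0 rules: a request has a `method` and an `id`, a notification has a `method` but no `id`, and a response has an `id` plus either `result` or `error`. The `classify` helper below is a hypothetical sketch of that rule, and the example method names are illustrative.

```python
def classify(message: dict) -> str:
    """Return 'request', 'notification', or 'response' for a JSON-RPC 2.0 message."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        # Requests expect a reply (they carry an id); notifications do not.
        return "request" if "id" in message else "notification"
    # A response carries exactly one of 'result' or 'error'.
    if "id" in message and ("result" in message) != ("error" in message):
        return "response"
    raise ValueError("malformed message")


examples = [
    {"jsonrpc": "2.0", "id": 1, "method": "initialize", "params": {}},
    {"jsonrpc": "2.0", "id": 1, "result": {"capabilities": {}}},
    {"jsonrpc": "2.0", "method": "notifications/initialized"},
]
kinds = [classify(m) for m in examples]
print(kinds)
```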

Agent Communication Protocol

Agent Communication Protocol (ACP) is an open standard designed to enable seamless communication between AI agents, regardless of their underlying implementation. It aims to solve the problem of fragmented AI systems by providing a common language and set of rules for agents to exchange information, requests, and responses.

Architecture:

1. Basic Single-Agent (REST):

- ACP provides a simple REST interface for connecting a client to a single agent.

- This is ideal for direct communication, lightweight setups, and debugging.

- The ACP Server wraps the agent, translating REST calls into internal logic.

2. Advanced Multi-Agent:

- ACP supports flexible multi-agent architectures for advanced orchestration, specialization, and delegation.

- A common design is the Router Agent model, where a central agent:

- Acts as both server and client.

- Breaks down client requests into sub-tasks.

- Routes tasks to specialized agents.

- Aggregates responses into a single result.

- Can use its own tools and those exposed by downstream agents (potentially via extensions like MCP).
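The Router Agent model can be sketched without any networking to show the flow of decomposing, routing, and aggregating. This is not the ACP SDK or its REST interface: `RouterAgent`, the `"specialty: task"` request format, and the two toy downstream agents are all assumptions made for the example.

```python
from typing import Callable, Dict, List, Tuple

AgentFn = Callable[[str], str]  # a downstream agent: takes a task, returns a result


class RouterAgent:
    def __init__(self, downstream: Dict[str, AgentFn]):
        self.downstream = downstream

    def decompose(self, request: str) -> List[Tuple[str, str]]:
        # Assumed request format: "specialty: task; specialty: task".
        subtasks = []
        for part in request.split(";"):
            specialty, _, task = part.partition(":")
            subtasks.append((specialty.strip(), task.strip()))
        return subtasks

    def handle(self, request: str) -> str:
        # "Server" side: accept a client request.
        results = []
        for specialty, task in self.decompose(request):
            agent = self.downstream.get(specialty)
            if agent is None:
                results.append(f"{specialty}: no agent available")
            else:
                # "Client" side: delegate the sub-task to a specialized agent.
                results.append(f"{specialty}: {agent(task)}")
        # Aggregate all partial results into a single reply.
        return " | ".join(results)


router = RouterAgent({
    "uppercase": lambda text: text.upper(),  # toy stand-ins for real agents
    "truncate": lambda text: text[:20],
})
reply = router.handle("truncate: ACP lets agents talk to each other; uppercase: hello")
print(reply)
```

In a real deployment, each downstream callable would be replaced by a network call to another agent's server, while the router's structure stays the same.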

Agent2Agent Protocol

A2A (Agent2Agent Protocol) is an open standard designed to enable AI agents to communicate, collaborate, and delegate tasks to each other across different platforms, vendors, and frameworks. Its goal is to allow autonomous agents, regardless of how they are built, to interoperate seamlessly, making it possible to build complex, multi-agent systems that can solve problems collaboratively.

A2A follows a client-server (or client-remote agent) model:

- Client Agent: Initiates communication, discovers other agents, and delegates tasks.

- Remote Agent (A2A Server): Receives, processes, and responds to tasks. It exposes its capabilities for discovery and handles task execution.

- Agents do not share memory or tools directly; instead, they interact via structured, protocol-defined messages, ensuring modularity and security.

Core Components

- Agent Card: A machine-readable JSON manifest that describes an agent’s identity, capabilities, supported input/output formats, endpoints, and authentication requirements. It is published at a well-known URL (e.g., .well-known/agent.json) to enable dynamic discovery by other agents.

- A2A Server: The persistent interface for an agent, responsible for receiving, validating, and routing incoming requests, managing long-running tasks, enforcing security, and exposing the Agent Card.

- A2A Client: The component that discovers other agents, initiates task requests, manages secure connections, and handles responses and results.
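To give a feel for what an Agent Card might contain, here is an illustrative manifest built as a Python dictionary. The field names are modeled on the A2A specification as commonly documented, but the exact schema should be checked against the current spec; the agent, URL, and skill are invented for the example, and `supports_skill` is a hypothetical helper a client might use after discovery.

```python
import json

# Illustrative Agent Card; all values are made up for this sketch.
agent_card = {
    "name": "Research Paper Search Agent",
    "description": "Finds and summarizes recent research papers.",
    "url": "https://agents.example.com/a2a",
    "version": "1.0.0",
    "capabilities": {"streaming": True, "pushNotifications": False},
    "authentication": {"schemes": ["bearer"]},
    "defaultInputModes": ["text"],
    "defaultOutputModes": ["text"],
    "skills": [
        {
            "id": "paper-search",
            "name": "Paper search",
            "description": "Search papers by topic and publication date.",
        }
    ],
}


def supports_skill(card: dict, skill_id: str) -> bool:
    """Check whether a discovered agent advertises a given skill."""
    return any(skill["id"] == skill_id for skill in card.get("skills", []))


# What a server would publish as JSON at its well-known URL.
published = json.dumps(agent_card, indent=2)
print(published)
print(supports_skill(agent_card, "paper-search"))
```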

How Agent-to-Agent Communication Happens

- Discovery: The client agent fetches the Agent Card from a remote agent’s well-known endpoint to understand its capabilities and how to interact with it.

- Initiation: The client sends a task request (with a unique Task ID and required data) to the remote agent via standard HTTP endpoints, typically using JSON-RPC 2.0 for message formatting.

- Task Handling: The remote agent processes the request, executes the task, and generates results or artifacts.

- Response: The remote agent returns results, status updates, or notifications to the client. For long-running tasks, updates can be pushed via server-sent events (SSE) or external notification services.

- Completion: The task is marked as completed, failed, or canceled, and the client can retrieve final results.

This structured protocol allows agents to collaborate on both simple and complex workflows, supporting asynchronous operations and secure, scalable integration across organizations.
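The task lifecycle in the steps above can be modeled as a small state machine. This is a simplified sketch: the state names loosely follow the terminal states mentioned in the text (completed, failed, canceled), while the `Task` class and the allowed transitions are assumptions, not the protocol's definitive rules.

```python
from enum import Enum


class TaskState(Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Assumed transitions; terminal states have no outgoing edges.
TRANSITIONS = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.WORKING: {TaskState.COMPLETED, TaskState.FAILED, TaskState.CANCELED},
}


class Task:
    def __init__(self, task_id: str):
        self.task_id = task_id
        self.state = TaskState.SUBMITTED
        self.history = [self.state]

    def advance(self, new_state: TaskState) -> None:
        """Move to a new state, rejecting transitions the table does not allow."""
        if new_state not in TRANSITIONS.get(self.state, set()):
            raise ValueError(f"{self.state.value} -> {new_state.value} is not allowed")
        self.state = new_state
        self.history.append(new_state)


task = Task("task-001")             # Initiation: the client submits a task
task.advance(TaskState.WORKING)     # Task handling: the remote agent starts work
task.advance(TaskState.COMPLETED)   # Completion: final results are available
print([state.value for state in task.history])
```

Tracking the history alongside the current state mirrors the status updates a client would receive for a long-running task.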

When to Use ACP, MCP, and A2A

ACP (Agent Communication Protocol), MCP (Model Context Protocol), and A2A (Agent2Agent Protocol) are emerging standards for connecting AI models, tools, and agents. Each serves a distinct purpose in AI system design:

MCP (Model Context Protocol)

- Use MCP when you want a single large language model (LLM) to access external data, tools, or APIs in a standardized way.

- Ideal for tool-augmented AI (e.g., a chatbot pulling live data from a CRM) or dynamic context scenarios (like an AI analyst querying databases).

- MCP is model-agnostic and works across different LLM providers, making it a strong choice for applications needing flexible, secure, and dynamic tool access.

ACP (Agent Communication Protocol)

- Use ACP if you want a local-first environment to run and coordinate multiple open-source agents, especially when you want fine-grained control over agent interactions within a single environment.

- ACP is best for orchestrating agent communication and collaboration in contained setups, such as local agent frameworks or platforms focused on privacy and autonomy.

A2A (Agent2Agent Protocol)

- Use A2A when you need agents from different vendors or platforms to discover, communicate, and collaborate, especially in multi-agent, cross-vendor, or enterprise automation scenarios.

- A2A is designed for horizontal communication, enabling agents to share tasks, orchestrate workflows, and operate securely across organizational boundaries.

Thank you for taking the time to explore the fascinating world of AI Agents with me. Your feedback and questions are always welcome. Your claps and comments are highly appreciated!

This overview provides a foundational understanding of AI Agents and their key concepts. In upcoming articles, we will be diving into practical implementation strategies and exploring specific use cases.

Stay tuned for more insights into building the next generation of AI Agents!


Published via Towards AI
