Learn Reinforcement Learning from Human Feedback (RLHF): Your 9-Hour Study Plan

Last Updated on January 31, 2025 by Editorial Team

Author(s): Peyman Kor

Originally published on Towards AI.

Introduction

This blog post is for people who have heard the term Reinforcement Learning from Human Feedback (RLHF) and are curious about this method. In this blog, you will learn how RLHF works, why it matters, and how you can master the fundamentals in just 9 hours.

In short, RLHF was developed to turn Large Language Models (LLMs) from AI models into AI assistants. That is the core idea. It lets AI companies (such as OpenAI, Google, or Anthropic) build AI products that are more helpful and useful for their customers. Indeed, that is a crucial aspect of any product development strategy: ultimately, you want to build products that are useful for your human customers.

Background on Foundation Models

Now let’s see how modern Large Language Models (LLMs) are trained and developed. The training process of these models typically involves three phases:

Phase 1: Pre-training

In this phase, the model learns from vast amounts of internet data (typically trillions of tokens) to predict the next word in a sequence. Think of it as learning the statistical patterns of language. For example, given the phrase “My favorite football team in UK is the ___”, the model learns that “Manchester United” or “Liverpool” are more likely completions (higher probability) than “car” or “pizza”. This phase helps the model develop a basic understanding of language structure and meaning. To get an intuition for how the probability of the next word is predicted, I encourage you to play with the Transformer Explainer website (https://transformer.huggingface.co/). You enter a sentence at the top, and on the far right side you can see the probability of each candidate next word.

If you look at the far right of the figure above, you can see the likelihood of each word completing the sentence:

“My favorite football team in UK is the ___”

Here are the probabilities of the top completions:

- Manchester: around 6%

- Rams (surprisingly): around 5%

- and so on
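To make “the probability of the next word” concrete, here is a minimal sketch of how a language model turns raw scores (logits) for candidate tokens into a probability distribution using a softmax. The candidate tokens and logit values are made up for illustration; they are not taken from the Transformer Explainer or from any real model.

```python
import math

def softmax(logits):
    """Convert a dict of token -> logit into a dict of token -> probability."""
    # Subtract the largest logit for numerical stability; the result is unchanged.
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical logits for a few candidate next tokens after
# "My favorite football team in UK is the" (illustrative values only).
logits = {"Manchester": 3.1, "Liverpool": 2.9, "Rams": 2.8, "car": -1.0, "pizza": -2.0}

probs = softmax(logits)
# Print candidates from most to least likely.
for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{tok:>10}: {p:.3f}")
```

In a real model the softmax runs over the entire vocabulary (tens of thousands of tokens), which is why even the top candidates in the figure receive only a few percent each, while in this five-token toy they get much larger shares.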

Phase 2: Supervised Fine-tuning (SFT)

Language is inherently flexible: there are many valid ways to respond to any prompt. For example, if asked “How do I make pasta?”, valid responses could include:

A) You can learn by watching YouTube.

B) Giving you a list of ingredients, explaining the cooking steps, or suggesting serving sizes.

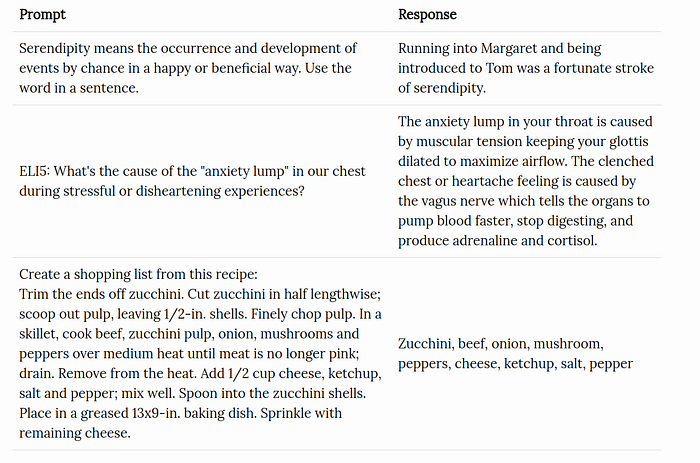

And of course, as a user, you prefer option B. In the Supervised Fine-tuning stage (Phase 2), we show the model good examples of questions and answers to teach it how to respond well. The data used in this phase is typically written by highly educated human workers, so it is high-quality data. Here are some examples of supervised fine-tuning datasets:
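Whatever dataset is used, SFT is commonly trained with the same next-token (cross-entropy) objective as pre-training, but on curated prompt-response pairs, and often only the response tokens count toward the loss. The sketch below assumes we already have the model's log-probability for each target token; the helper name `sft_loss` and the toy numbers are hypothetical.

```python
import math

def sft_loss(token_logprobs, is_response):
    """Average negative log-likelihood over response tokens only.

    token_logprobs: log p(token_t | tokens_<t) for each position (from the model).
    is_response:    True for tokens of the answer, False for prompt tokens.
                    Prompt tokens are masked out, so the model is trained to
                    produce good answers rather than to reproduce the prompt.
    """
    selected = [lp for lp, keep in zip(token_logprobs, is_response) if keep]
    if not selected:
        raise ValueError("no response tokens to train on")
    return -sum(selected) / len(selected)

# Toy example: 3 prompt tokens followed by 2 response tokens.
logprobs = [math.log(0.2), math.log(0.5), math.log(0.1), math.log(0.8), math.log(0.5)]
mask = [False, False, False, True, True]
print(sft_loss(logprobs, mask))
```

Raising the probability the model assigns to the good answer's tokens lowers this loss, which is exactly the “show the model good examples” idea expressed as a number to minimize.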

Phase 3: Reinforcement Learning from Human Feedback (RLHF)

Even after Phase 2, some responses are better than others from a human perspective. In Phase 3, we show humans various responses from the model and ask them to rate them. Essentially, we want to optimize the model so that its outputs get higher ratings from humans. This stage, which brings human feedback into the loop, is called Reinforcement Learning from Human Feedback (RLHF).
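One common way to write down “optimize the model to get higher ratings” is the KL-regularized objective used in InstructGPT-style RLHF: a reward model $r_\phi$ trained on human ratings scores each response $y$ to a prompt $x$, and the policy $\pi_\theta$ is pushed toward high-reward responses while a KL penalty with strength $\beta$ keeps it close to the SFT model $\pi_{\text{SFT}}$. The notation is our own shorthand rather than a verbatim formula from the paper (InstructGPT, for instance, also mixes in a pre-training term).

$$
\max_{\theta}\; \mathbb{E}_{x \sim \mathcal{D},\; y \sim \pi_\theta(\cdot \mid x)} \big[ r_\phi(x, y) \big] \;-\; \beta\, \mathbb{E}_{x \sim \mathcal{D}} \Big[ \mathrm{KL}\big( \pi_\theta(\cdot \mid x) \,\|\, \pi_{\text{SFT}}(\cdot \mid x) \big) \Big]
$$

The first term rewards answers humans would rate highly; the second stops the model from drifting so far from the SFT model that it produces odd text which merely exploits weaknesses of the reward model.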

Here is a simple example. We give the LLM the prompt “Explain the moon landing to a 6-year-old in a few sentences”. The LLM then produces two possible answers, and we ask humans which one they prefer. This preference becomes a “signal” for the LLM, pushing it to produce more of the responses that humans would like to see.
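Pairwise preferences like this are typically used to train a reward model first. A common choice, used in InstructGPT, is a Bradley-Terry style loss, $-\log \sigma(r_{\text{chosen}} - r_{\text{rejected}})$, which is small when the reward model scores the preferred answer higher. The sketch below uses made-up scalar scores in place of a real reward model; `pairwise_reward_loss` is a hypothetical helper name.

```python
import math

def pairwise_reward_loss(r_chosen, r_rejected):
    """Bradley-Terry preference loss: -log(sigmoid(r_chosen - r_rejected))."""
    m = r_chosen - r_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); branch on the sign of m so that
    # math.exp never receives a large positive argument (avoids overflow).
    if m >= 0:
        return math.log1p(math.exp(-m))
    return -m + math.log1p(math.exp(m))

# Hypothetical reward-model scores for the two moon-landing answers,
# where humans preferred the first answer ("chosen").
print(pairwise_reward_loss(r_chosen=1.5, r_rejected=-0.5))  # model agrees with humans: low loss
print(pairwise_reward_loss(r_chosen=-0.5, r_rejected=1.5))  # model disagrees: high loss
```

Averaging this loss over many human comparisons gives the reward model's training objective; once trained, the reward model stands in for human raters during the RL step (for example with PPO).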

Now with this background, let’s see how we can learn about RLHF.

Study Roadmap

To help you navigate this topic, I’ve organized learning resources into five main categories.

- Introductory Tutorials

- Key Research Papers

- Hands-on Codes

- Online Courses

- RLHF Book

Let's get started:

1. Introductory Tutorials

Here the goal is to get a high-level understanding of RLHF. I suggest you start with Chip Huyen’s blog post on RLHF, which is a great introduction to the topic, and then continue with the rest. I should also note that Nathan Lambert is quite active on Twitter about this topic, so I suggest following him if you want to stay up to date with RLHF.

Resources:

- 📚 20m: Introduction to RLHF by Chip Huyen [Read here]

- 📺 40m: RLHF: From Zero to ChatGPT by Nathan Lambert [Watch here]

- 📺 60m: Lecture on RLHF by Hyung Won Chung [See here]

2. Key Research Papers

Here are some important research papers that I think are worth reading to get a deeper understanding of RLHF.

Resources:

- 📚 30m: InstructGPT Paper (applying RLHF to a general language model) [Read here]

- 📚 30m: DPO Paper by Rafael Rafailov [Read here]

- 📚 30m: Artificial Intelligence, Values, and Alignment [Read here]

3. Hands-on Codes

Here I am adding some code that you can run on your local machine (though unfortunately only if you have a lot of GPUs :)) to get hands-on experience with RLHF. I would like to note that TRL seems to be a nice library for RLHF, and it may well be the go-to library when it comes to RLHF implementation.

- 📚 2h: Detoxifying a Language Model using PPO [Read here]

- 📚 2h: RLHF with DPO & Hugging Face [Read here]

- 📚 1h: TRL Library for Fast Implementation: minimal and efficient [Read here]

4. Online Course

If you are looking for a more structured learning experience, I recommend taking an online course. Here is a course that covers RLHF:

- 📚 1h: Reinforcement Learning from Human Feedback by Nikita Namjoshi [Course link]

5. RLHF Book

Finally, if you want to dive deep into RLHF, I recommend reading the book A Little Bit of Reinforcement Learning from Human Feedback (https://rlhfbook.com/book.pdf) by Nathan Lambert. The book is still in progress, and you can read it online for free.

Conclusion

I hope this study guide helps you get started with Reinforcement Learning from Human Feedback (RLHF). It is becoming increasingly evident that reinforcement learning will play an ever-growing role in making LLMs better, especially with the release of DeepSeek, which uses a pure RL framework for training.

I hope you liked this post, and remember that the key to learning any topic is consistency and curiosity!

References:

[1]: Ouyang, Long, et al. “Training language models to follow instructions with human feedback.” Advances in Neural Information Processing Systems 35 (2022): 27730–27744.


Note: Content contains the views of the contributing authors and not Towards AI.