Using AI to improve your literature review

Last Updated on September 2, 2022 by Editorial Team

Author(s): Kevin Berlemont, PhD

Originally published on Towards AI.

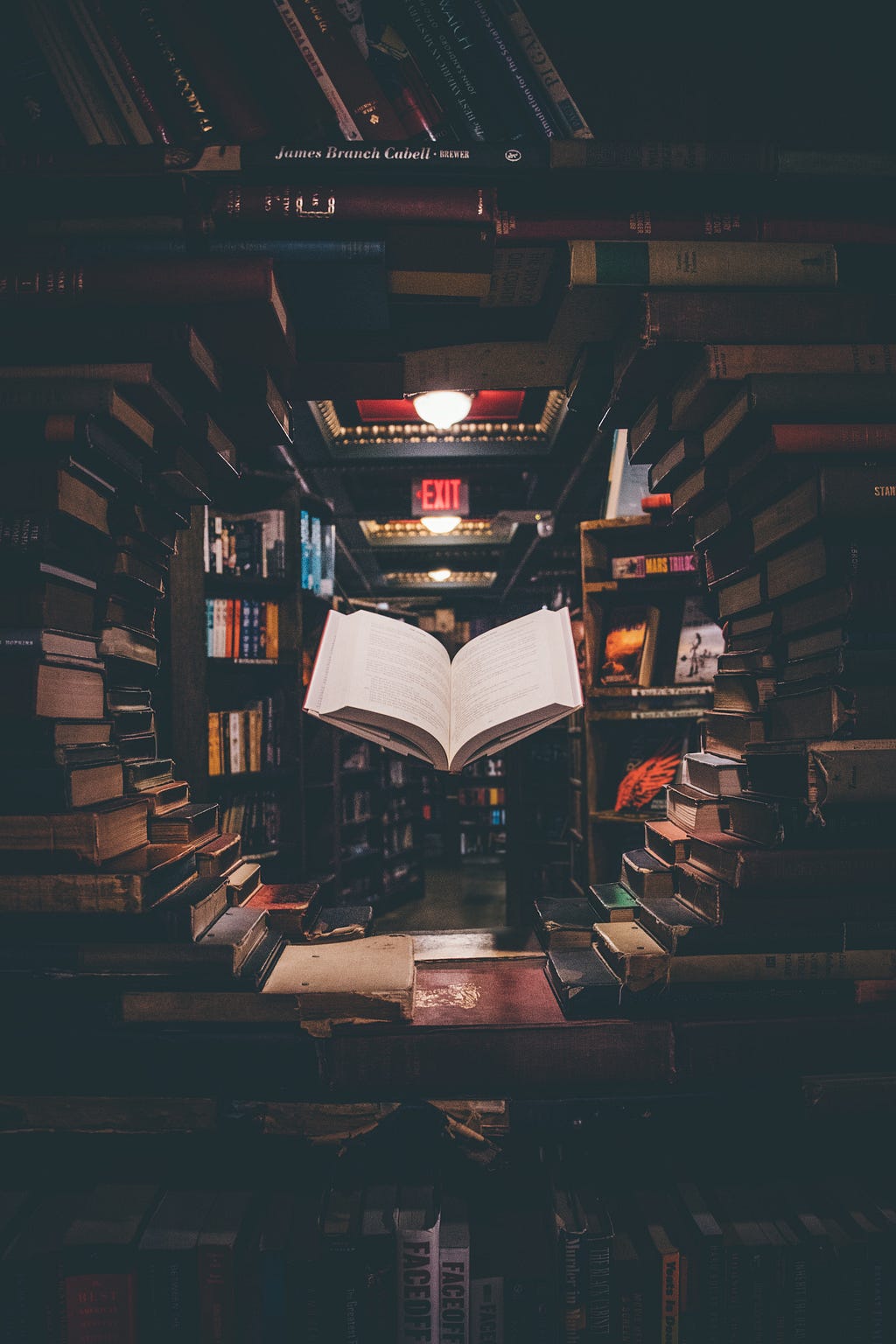

The past twenty years have seen exponential growth in the number of published scientific papers. In 2021 alone, more than 9,000 papers mentioned “machine learning”. Keeping track of relevant publications, or getting up to speed on a new topic, is a real challenge for academic researchers, industry experts, and data science enthusiasts alike.

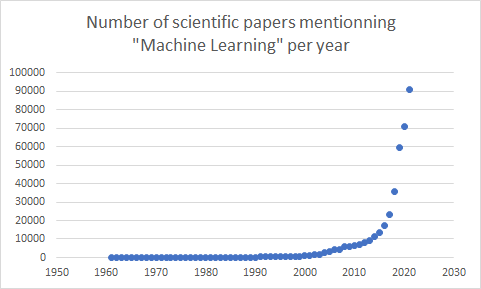

A few months ago, Ought, a non-profit research lab, released free software at https://elicit.org/ that they describe as a research assistant. It is built on language models such as GPT-3 and shows papers relevant to your query. It has been tuned on research questions and academic papers to increase the relevance of the results. I have been using it in my day-to-day academic research for a few months now, and the team has been improving its features regularly. Below, I describe the general workflow and how to use some of the features efficiently.

General workflow of Elicit

The first step is to ask a research question such as this one:

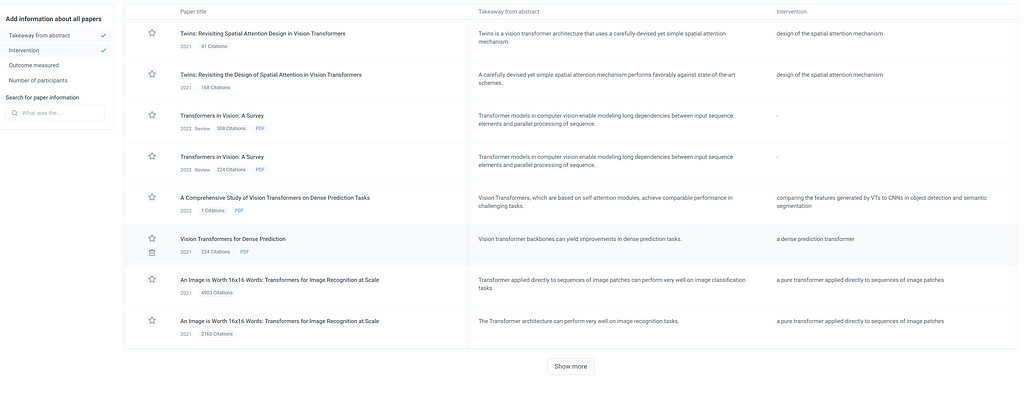

Elicit answers this query by showing the top 8 most relevant papers according to the language model. Because it relies on a semantic similarity measure rather than exact keyword matching, Elicit can find relevant papers even when they don't contain the words of your query. Once the papers have been selected, Elicit provides more information about each of them: a summary of the abstract, a description of the study type, a PDF, and so on. Each paper's abstract is summarized with respect to your question, so you get a quick overview of how relevant the paper is to what you asked.
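To make the idea of similarity-based retrieval concrete, here is a minimal sketch of ranking papers by cosine similarity between vectors and keeping the top k. This is not Elicit's implementation, which the article does not describe. The vectors below are hypothetical, hand-made 3-dimensional "embeddings"; in a real system a language model would produce them. The names `PAPERS`, `cosine`, and `top_k` are illustrative only.

```python
import math

# Hypothetical toy embeddings. A real system would get these from a language
# model; here they are hand-picked so that papers on similar topics point in
# similar directions.
PAPERS = {
    "Deep nets for protein folding": [0.9, 0.1, 0.0],
    "Transformer models predict protein structure": [0.8, 0.2, 0.1],
    "Survey of sleep and memory consolidation": [0.0, 0.1, 0.9],
    "Gradient boosting for credit scoring": [0.1, 0.9, 0.1],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the norms.

    Assumes both vectors are non-zero.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], papers: dict[str, list[float]], k: int = 8) -> list[str]:
    """Return up to k paper titles, most similar to the query first."""
    ranked = sorted(papers, key=lambda title: cosine(query_vec, papers[title]), reverse=True)
    return ranked[:k]


# Hypothetical embedding of the query "Can AI predict how molecules fold?".
# The ranking depends only on the vectors, not on shared words.
query_vec = [0.85, 0.15, 0.05]
print(top_k(query_vec, PAPERS, k=2))
# → ['Deep nets for protein folding', 'Transformer models predict protein structure']
```

The default `k=8` mirrors the eight papers Elicit shows per query; with only four toy papers, the call simply returns all of them in ranked order.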

Finally, opening a paper gives you insight into possible critiques, takeaways, and publications … All of this information is generated by GPT-3-like models that are fine-tuned and prompted with specific instructions.
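As an illustration of "prompted with specific instructions", the sketch below builds one prompt per generated field (summary, takeaway, critiques) from the user's question and a paper's abstract, then passes each prompt to a text-in, text-out model. Elicit's actual prompts are not given in this article: the instructions in `COLUMN_INSTRUCTIONS`, the prompt layout in `build_prompt`, and the `generate` callable are all hypothetical stand-ins.

```python
from typing import Callable

# Hypothetical instructions, one per field shown for a paper.
# These are illustrative, not Elicit's real prompts.
COLUMN_INSTRUCTIONS = {
    "summary": "Summarize the abstract in one sentence, focusing on the question.",
    "takeaway": "State the main takeaway of the paper for the question.",
    "critiques": "List possible critiques of the study.",
}


def build_prompt(instruction: str, question: str, abstract: str) -> str:
    """Combine an instruction, the user's question, and an abstract into one prompt."""
    return (
        f"Instruction: {instruction}\n"
        f"Research question: {question}\n"
        f"Abstract: {abstract}\n"
        "Answer:"
    )


def fill_columns(
    question: str,
    abstract: str,
    generate: Callable[[str], str],
) -> dict[str, str]:
    """Run one prompt per field through `generate`, any text-in, text-out model."""
    return {
        column: generate(build_prompt(instruction, question, abstract)).strip()
        for column, instruction in COLUMN_INSTRUCTIONS.items()
    }


# Usage with a stand-in "model" that just echoes the first prompt line.
# In practice, `generate` would call a real language model.
def echo_model(prompt: str) -> str:
    return prompt.splitlines()[0]


columns = fill_columns("Does caffeine improve memory?", "(placeholder abstract text)", echo_model)
print(columns["takeaway"])
```

Keeping the model behind a plain callable makes the prompt construction testable on its own, independent of whichever model is used.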

Making the most use of Elicit

Getting a brief overview of the literature

Because Elicit uses language models to generate information about each paper, it can filter the results across an impressive number of fields, for example by study type:

- randomized controlled trials

- meta-analyses

- different types of reviews

As a result, the results table shows you at a glance which papers are relevant to what you have in mind, without your having to dig the information out of the text.
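Once each paper carries a generated label such as its study type, filtering the results table reduces to a simple selection. The sketch below assumes each result has a `study_type` string (in Elicit's case, produced by a language model); the `Result` class, the placeholder titles, and `filter_by_type` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class Result:
    title: str
    study_type: str  # hypothetical generated label, e.g. "meta-analysis"


# Placeholder results table; titles and labels are illustrative only.
RESULTS = [
    Result("Paper A", "randomized controlled trial"),
    Result("Paper B", "meta-analysis"),
    Result("Paper C", "systematic review"),
    Result("Paper D", "observational study"),
]


def filter_by_type(results: list[Result], allowed: set[str]) -> list[Result]:
    """Keep results whose study type is in `allowed`, ignoring case and preserving order."""
    allowed_lower = {t.lower() for t in allowed}
    return [r for r in results if r.study_type.lower() in allowed_lower]


# Usage: keep only randomized controlled trials and meta-analyses.
selected = filter_by_type(RESULTS, {"randomized controlled trial", "meta-analysis"})
print([r.title for r in selected])
```

Comparing lowercased labels is a small safeguard, since free-text labels generated by a model may vary in capitalization.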

Community Tasks

So far, I have described Elicit's workflow for the “Literature Review” task, whose goal is to answer a research question using papers. In addition to this predefined task, users can create their own tasks and make them available to everybody. This expands the range of research questions Elicit can answer, making it an essential tool for literature reviews. Here are a couple of my favorite tasks for finding relevant scientific papers:

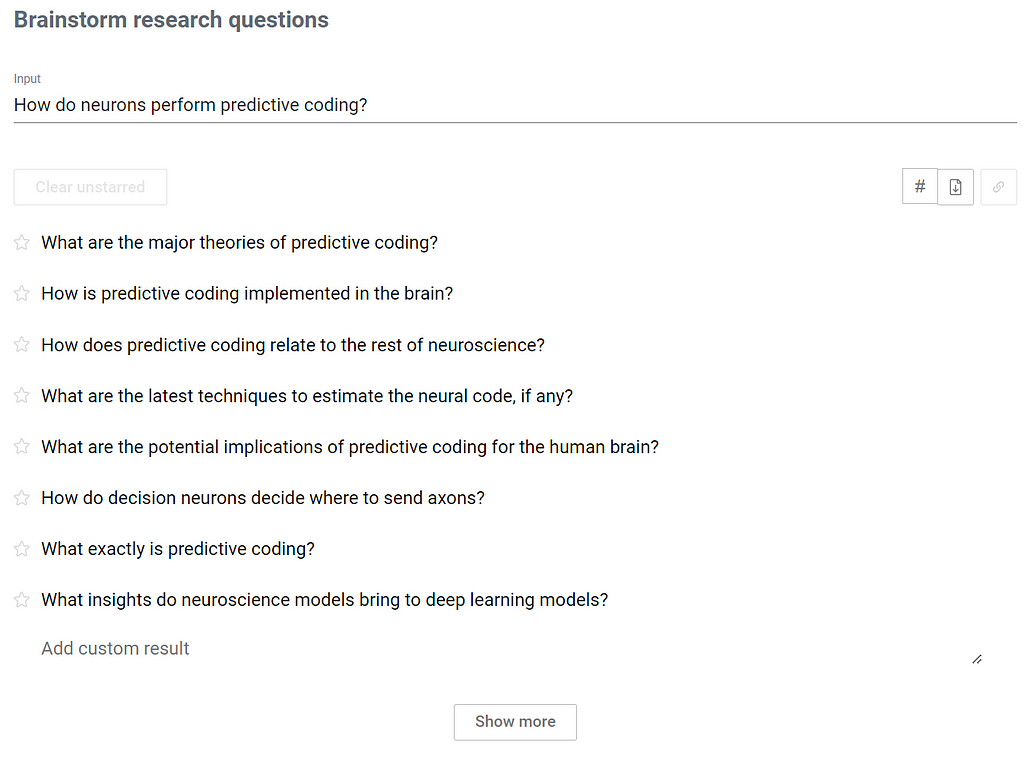

Brainstorm task

Let’s say you have a topic or a research direction in mind. Finding the right question to ask in order to get relevant results is not always easy. The Brainstorm task helps with this: you provide a topic or a research question, and Elicit returns related questions or new ways to formulate your question, as in the example below.

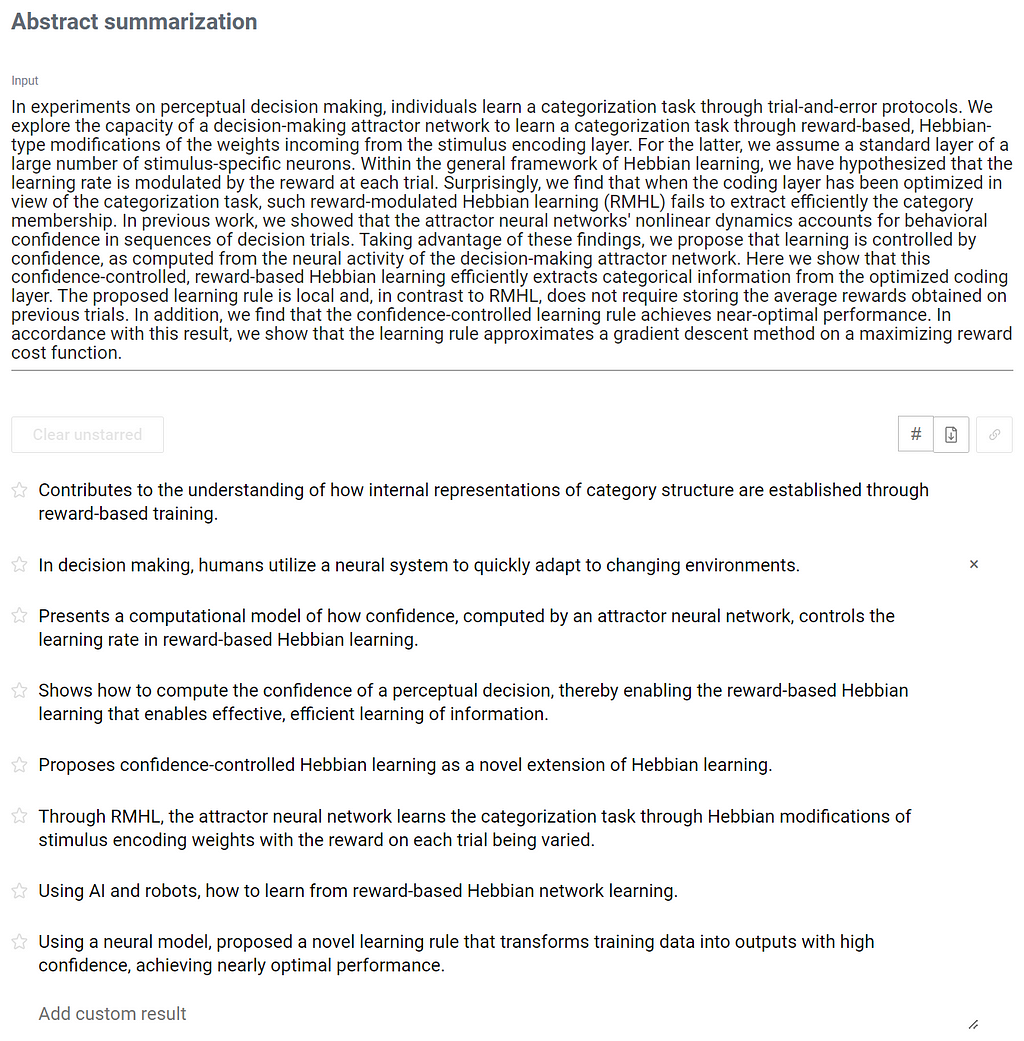
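One common way to express a reusable, user-defined task such as Brainstorm is as an instruction followed by a few worked input/output examples, with the new input appended at the end (few-shot prompting). The article does not say how Elicit stores community tasks, so the `Task` class, its fields, and the example pair below are hypothetical; the sketch only shows how such a prompt could be assembled.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical user-defined task: an instruction plus worked examples."""

    name: str
    instruction: str
    examples: list[tuple[str, str]] = field(default_factory=list)

    def render(self, user_input: str) -> str:
        """Build a few-shot prompt: instruction, then examples, then the new input."""
        parts = [self.instruction, ""]
        for example_input, example_output in self.examples:
            parts.append(f"Input: {example_input}")
            parts.append(f"Output: {example_output}")
            parts.append("")
        parts.append(f"Input: {user_input}")
        parts.append("Output:")
        return "\n".join(parts)


# Illustrative brainstorming task with one made-up example pair.
brainstorm = Task(
    name="Brainstorm research questions",
    instruction="Given a topic, suggest related research questions.",
    examples=[
        ("sleep and memory", "Does sleep deprivation impair memory consolidation in adults?"),
    ],
)

print(brainstorm.render("AI for literature review"))
```

The rendered prompt would then be sent to a language model, whose continuation after the final `Output:` serves as the task's answer.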

Abstract summarization

The model that summarizes abstracts in the Literature Review task is also available on its own as the Abstract Summarization task. Given a scientific abstract, it returns a set of sentences summarizing it (see below).

I like this task for two main reasons:

- You can use it to make sure your abstract delivers the argument you intend.

- You can get a brief overview of any paper just by copying the abstract into the model.

Final Thoughts

In summary, Elicit is an AI-powered research assistant that scans the scientific literature to answer your question. In my experience, whether you ask a very narrow question or explore a general topic, you get relevant results. Abstract summarization is by far my favorite feature, as it lets you efficiently separate relevant from irrelevant papers. And if you can’t find the task you need, you can always design it yourself!

Using AI to improve your literature review was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.
