Stop the Stopwords using Different Python Libraries

Last Updated on July 24, 2023 by Editorial Team

Author(s): Manmohan Singh

Originally published on Towards AI.

Letters of the alphabet are the building blocks of words in the English language. Words group together to form sentences by following grammatical rules, and because of those rules some words occur far more frequently than others. The main role of these frequent words is to connect other words in a sentence. They are known as stopwords. On their own, stopwords generally carry little meaning.
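To see this frequency effect for yourself, you can count tokens with Python's collections.Counter. The sentence below is a made-up example chosen only for illustration. In it, the two most common words are both connecting words rather than content words.

```python
from collections import Counter

# A made-up sentence, used only to illustrate the frequency effect
sample = "the cat sat on the mat and the dog lay on the rug"

# Count how often each whitespace-separated token appears
counts = Counter(sample.split())
print(counts.most_common(2))  # [('the', 4), ('on', 2)]
```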

Stopwords are most often discussed in the context of Natural Language Processing (NLP), where it is common to remove them before processing your data for an NLP task.

Different libraries have a different number of words in their stopword collections. Because there is no standard list of stopwords, each library maintains its own collection.

In this article, we will go through four of these libraries: NLTK, spaCy, Gensim, and scikit-learn.

1. Natural Language Toolkit (NLTK)

NLTK is a leading Python library for text preprocessing. The following code removes stopwords using the NLTK library.

import nltk
from nltk.corpus import stopwords

# Download the stopword corpus (skipped if it is already up to date)
nltk.download('stopwords', quiet=True)
nltk_stopwords = set(stopwords.words('english'))

text = ("The first time I saw Catherine she was wearing a vivid crimson dress and was nervously "
        "leafing through a magazine in my waiting room.")

# Lower-case each token before the lookup, because the NLTK list is all lower case
text_without_stopword = [word for word in text.split() if word.lower() not in nltk_stopwords]
print(f"Original Text : {text}")
print(f"Text without stopwords : {' '.join(text_without_stopword)}")
print(f"Total count of stopwords in NLTK is {len(nltk_stopwords)}")

At the time of writing, the NLTK library has 179 words in its stopword collection. As the output shows, frequent words such as was, the, and I are removed from the sentence.

Note: All the words in the library's default stopword list are in lower case. For better results, convert the words of your documents or sentences to lower case before the lookup. Otherwise, capitalized stopwords such as The and I will not be removed from your data.
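The effect of letter case is easy to demonstrate without any library. The sketch below uses a tiny, hypothetical stopword subset (all lower case, like the library lists) and two hypothetical helper functions: one does an exact-match lookup, the other lower-cases each token before the lookup.

```python
# A tiny, hypothetical stopword subset used only for illustration;
# like the library lists, every entry is lower case.
STOPWORDS = {"the", "i", "she", "was", "a", "and", "in", "my"}

def remove_stopwords_naive(text):
    """Exact-match lookup: capitalized stopwords slip through."""
    return [w for w in text.split() if w not in STOPWORDS]

def remove_stopwords_lower(text):
    """Case-insensitive lookup: compare the lower-cased token."""
    return [w for w in text.split() if w.lower() not in STOPWORDS]

sentence = "The first time I saw Catherine"
print(remove_stopwords_naive(sentence))  # ['The', 'first', 'time', 'I', 'saw', 'Catherine']
print(remove_stopwords_lower(sentence))  # ['first', 'time', 'saw', 'Catherine']
```

Only the lower-cased comparison removes The and I, which is why the NLTK snippet calls word.lower() before checking membership.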

2. spaCy

spaCy is another widely used NLP library. The following code removes stopwords using spaCy.

import spacy

# Requires the model to be installed first: python -m spacy download en_core_web_sm
sp = spacy.load('en_core_web_sm')
spacy_stopwords = sp.Defaults.stop_words

text = ("The first time I saw Catherine she was wearing a vivid crimson dress and was nervously "
        "leafing through a magazine in my waiting room.")

# Lower-case each token, since spaCy's stopwords are stored in lower case
text_without_stopword = [word for word in text.split() if word.lower() not in spacy_stopwords]
print(f"Original Text : {text}")
print(f"Text without stopwords : {' '.join(text_without_stopword)}")
print(f"Total count of stopwords in spaCy is {len(spacy_stopwords)}")

spaCy has 326 words in its stopword collection, nearly double the size of NLTK's list. As a result, spaCy and NLTK produce different output after removing stopwords: each library has its own definition of a stopword, and that definition determines how many words its list contains. spaCy's list includes number words such as first, second, and twelve, as well as frequently occurring verbs such as go and find.
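One way to see exactly where two stopword collections disagree is plain set arithmetic. The helper below, compare_stopword_lists, is a hypothetical name, and the two small sets are illustrative stand-ins rather than the real library lists. The Jaccard similarity (size of the intersection divided by size of the union) condenses the overlap into a single score.

```python
def compare_stopword_lists(a, b):
    """Hypothetical helper: summarize how two stopword collections overlap."""
    a, b = set(a), set(b)
    shared = a & b
    union = a | b
    return {
        "shared": sorted(shared),
        "only_first": sorted(a - b),
        "only_second": sorted(b - a),
        # Jaccard similarity: |intersection| / |union|
        "jaccard": len(shared) / len(union) if union else 1.0,
    }

# Tiny illustrative lists (NOT the real library lists)
list_a = {"the", "i", "was", "my"}
list_b = {"the", "was", "first", "twelve", "go"}

report = compare_stopword_lists(list_a, list_b)
print(report["only_second"])         # ['first', 'go', 'twelve']
print(round(report["jaccard"], 3))   # 0.286
```

With the real collections, you could pass nltk_stopwords and spacy_stopwords from the snippets above in place of the toy sets.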

3. Gensim

The following code removes stopwords using the Gensim library.

from gensim.parsing.preprocessing import STOPWORDS, remove_stopwords

# STOPWORDS is Gensim's built-in frozenset of lower-case stopwords
gensim_stopwords = STOPWORDS

text = ("The first time I saw Catherine she was wearing a vivid crimson dress and was nervously "
        "leafing through a magazine in my waiting room.")

print(f"Original Text : {text}")
# remove_stopwords() takes a string and returns the string with stopwords removed
print(f"Text without stopwords : {remove_stopwords(text.lower())}")
print(f"Total count of stopwords in Gensim is {len(gensim_stopwords)}")

Gensim has 337 words in its stopword collection, which is similar to spaCy's. Its remove_stopwords function works directly on a sentence string and returns the cleaned string, so you do not need to split and rejoin the words yourself.
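The string-in, string-out behaviour of remove_stopwords can be approximated in a few lines of standard Python. This is not Gensim's source code; it is a minimal sketch that assumes simple whitespace tokenization, and the function name and stopword set are hypothetical. As in the Gensim example, the text is lower-cased before filtering.

```python
def remove_stopwords_from_string(text, stopwords):
    """Hypothetical helper: split on whitespace, drop stopwords, rejoin with spaces."""
    return " ".join(word for word in text.split() if word not in stopwords)

# Illustrative lower-case stopword subset (not Gensim's list)
stopwords = {"the", "i", "she", "was", "a", "in", "my"}

sentence = "The first time I saw Catherine she was wearing a vivid crimson dress"
# Lower-case first so that "The" and "I" match the lower-case entries
cleaned = remove_stopwords_from_string(sentence.lower(), stopwords)
print(cleaned)  # first time saw catherine wearing vivid crimson dress
```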

4. Scikit-learn

Scikit-learn is widely used for data modeling. The following code removes stopwords using the scikit-learn library.

from sklearn.feature_extraction.text import ENGLISH_STOP_WORDS

text = ("The first time I saw Catherine she was wearing a vivid crimson dress and was nervously "
        "leafing through a magazine in my waiting room.")

# ENGLISH_STOP_WORDS is a frozenset of lower-case words, so compare lower-cased tokens
text_without_stopword = [word for word in text.split() if word.lower() not in ENGLISH_STOP_WORDS]
print(f"Original Text : {text}")
print(f"Text without stopwords : {' '.join(text_without_stopword)}")
print(f"Total count of stopwords in Sklearn is {len(ENGLISH_STOP_WORDS)}")

Scikit-learn has 318 words in its stopword collection, which is similar to those of spaCy and Gensim.

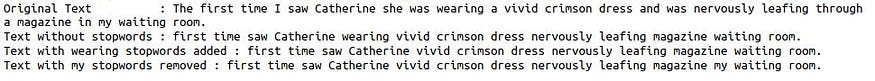

Note: Sometimes words that you would classify as stopwords are missing from a library's default stopword list. You can modify the existing collection to suit your needs. When the collection is a Python list, as with NLTK's stopwords.words('english'), use append to add a word and remove to delete one.

The following code modifies NLTK's default stopword list.

import nltk
from nltk.corpus import stopwords

nltk.download('stopwords', quiet=True)

# Keep the list type so that append() and remove() are available
nltk_stopwords = stopwords.words('english')

text = ("The first time I saw Catherine she was wearing a vivid crimson dress and was nervously "
        "leafing through a magazine in my waiting room.")

text_without_stopword = [word for word in text.split() if word.lower() not in nltk_stopwords]
print(f"Original Text : {text}")
print(f"Text without stopwords : {' '.join(text_without_stopword)}")

# 'wearing' added as a stopword to the NLTK stopword collection
nltk_stopwords.append('wearing')
text_without_stopword = [word for word in text.split() if word.lower() not in nltk_stopwords]
print(f"Text with wearing stopwords added : {' '.join(text_without_stopword)}")

# 'my' removed from the NLTK stopword collection
nltk_stopwords.remove('my')
text_without_stopword = [word for word in text.split() if word.lower() not in nltk_stopwords]
print(f"Text with my stopwords removed : {' '.join(text_without_stopword)}")
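The append and remove approach relies on NLTK returning a plain Python list. scikit-learn's ENGLISH_STOP_WORDS and Gensim's STOPWORDS are frozensets, which have no append or remove methods, so a different approach is needed there. A minimal sketch, assuming you want a new customized collection and leave the library's own object untouched: build it with union and difference. The helper name customize_stopwords and the small frozenset standing in for a library list are hypothetical.

```python
# Stand-in for a library's immutable collection (hypothetical subset; the real
# ENGLISH_STOP_WORDS and STOPWORDS frozensets contain hundreds of words)
library_stopwords = frozenset({"the", "i", "she", "was", "a", "in", "my"})

def customize_stopwords(base, add=(), drop=()):
    """Hypothetical helper: return base + add - drop as a new frozenset.

    Accepts a list, set, or frozenset and never mutates `base`.
    """
    return frozenset(base).union(add).difference(drop)

custom = customize_stopwords(library_stopwords, add={"wearing"}, drop={"my"})

text = "The first time I saw Catherine she was wearing a vivid crimson dress in my room"
filtered = " ".join(w for w in text.split() if w.lower() not in custom)
print(filtered)  # first time saw Catherine vivid crimson dress my room
```

Because the helper returns a new object, the original collection still contains my, which avoids accidentally changing the stopwords used elsewhere in the same program.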

Conclusion

The choice of Python library for stopwords depends on the NLP task. If you use the NLTK library for text processing, using the Gensim library for stopwords is not advisable. Removing stopwords reduces processing time and disk space and can improve accuracy, so consider cleaning your data with stopword removal before training your model.
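As a rough illustration of the space saving, the sketch below counts whitespace tokens in the article's example sentence before and after removal. The helper name stopword_reduction and the small stopword subset are hypothetical, so the exact percentage will differ with a real library list and a real corpus.

```python
def stopword_reduction(text, stopwords):
    """Hypothetical helper: (tokens before, tokens after, fraction removed)."""
    tokens = text.split()
    kept = [t for t in tokens if t.lower() not in stopwords]
    fraction = (len(tokens) - len(kept)) / len(tokens) if tokens else 0.0
    return len(tokens), len(kept), fraction

# Illustrative stopword subset, not any library's full list
stopwords = {"the", "i", "she", "was", "a", "and", "through", "in", "my"}
text = ("The first time I saw Catherine she was wearing a vivid crimson dress and was nervously "
        "leafing through a magazine in my waiting room.")

before, after, fraction = stopword_reduction(text, stopwords)
print(before, after, round(fraction, 3))  # 24 13 0.458
```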

Hopefully, this article helps you with NLP models and problems.

Other Articles by the Author

- First step in EDA : Descriptive Statistic Analysis

- Automate Sentiment Analysis Process for Reddit Post: TextBlob and VADER

- Discover the Sentiment of Reddit Subgroup using RoBERTa Model


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.