Query Your DataFrames with Powerful Large Language Models using LangChain.

Last Updated on August 1, 2023 by Editorial Team

Author(s): Pere Martra

Originally published on Towards AI.

Get ready to use your own data with Large Language Models From Hugging Face Using a Vector Database and LangChain!

In the previous article, I explained how to use a vector database like ChromaDB to store information and how to use that information to build an enriched prompt for querying Large Language Models from Hugging Face.

In this article, we will see how to use LangChain for the same task. LangChain will handle the task of searching through our information stored in ChromaDB and directly passing it to the language model being used.

This way, we can use our data with Large Language Models without the need to perform model fine-tuning.

Since we will be using Hugging Face models, which can be downloaded and hosted on our own servers or private cloud spaces, the information doesn’t have to go through companies like OpenAI.

Let’s describe the steps we’ll follow in this article:

- Install the necessary libraries, such as ChromaDB and LangChain.

- Load the dataset and create a document in LangChain using one of its document loaders.

- Generate embeddings to store in the database.

- Create an index with the information.

- Set up a retriever with the index, which LangChain will use to fetch the information.

- Load the Hugging Face model.

- Create a LangChain pipeline using the language model and retriever.

- Utilize the pipeline to ask questions.

What technologies are we using?

The vector database we will use is ChromaDB, one of the best-known open-source vector databases.

For the models, we have chosen two from the Hugging Face Hub: dolly-v2-3b and flan-t5-large. It’s worth noting that these are not only two different models but also models trained for different tasks.

T5 is a text2text-generation model family: it treats every task as transforming an input text into an output text. These models can be used for text generation, but their responses tend to be short and not very creative.

On the other hand, Dolly is a pure text-generation family. These models tend to produce more creative and extensive responses.

The star library is LangChain, an open-source framework for building natural language applications powered by large language models. It allows us to chain the inputs and outputs of these models with other libraries or products, such as databases or various plugins.

Let’s start the project with LangChain.

The code is available in a notebook on Kaggle. This article and notebook are part of a course on creating applications with large language models, which is available on my GitHub profile.

If you don’t want to miss any lessons or updates to the existing content, it’s best to follow the repository. I will be publishing new lessons in the public repository as I complete them.

Ask your documents with LangChain, VectorDB & HF (Kaggle notebook, www.kaggle.com)

GitHub – peremartra/Large-Language-Model-Notebooks-Course (github.com)

Installing and loading the libraries.

If you are working in your personal environment and have already been testing these technologies, you may not need to install anything. However, if you are using Kaggle or Colab, you will need to install the following libraries:

- langchain: The revolutionary library that enables the creation of applications with large language models.

- sentence_transformers: We will have to generate embeddings of the text we want to store in the vector database, for which we require this library.

- chromadb: The vector database to be used. Notably, ChromaDB stands out for its user-friendly interface.

!pip install chromadb

!pip install langchain

!pip install sentence_transformers

In addition to these libraries, we will also import two of the most widely used Python libraries in data science: pandas and numpy.

import numpy as np

import pandas as pd

Load the Datasets.

The notebook has been prepared to work with two different datasets. These are the same datasets used in the previous RAG (Retrieval Augmented Generation) example.

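RAG means using your own data with large language models: the pieces of data most relevant to a question are retrieved and passed to the model along with it. It is commonly referred to as ‘Interrogating Your Documents’.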

Both datasets are tabular and contain news-related information: topic-labeled-news-dataset and bbc-news, both available on Kaggle.

The content of both datasets is similar, but the column names and the stored information differ. Personally, I believe that using multiple datasets provides good value, allowing you to validate and generalize the results with different data sources.

Once you complete the notebook, a good exercise is to find a third dataset and replicate the functionality with it.

As we are working with limited resources on Kaggle, we need to be mindful of memory constraints. Therefore, we won’t work with the entire dataset, to avoid exceeding Kaggle’s memory limit of 30 GB when not using GPUs.

Working with a smaller subset of the dataset will still allow us to explore and demonstrate the functionality of LangChain effectively while staying within the resource limitations.

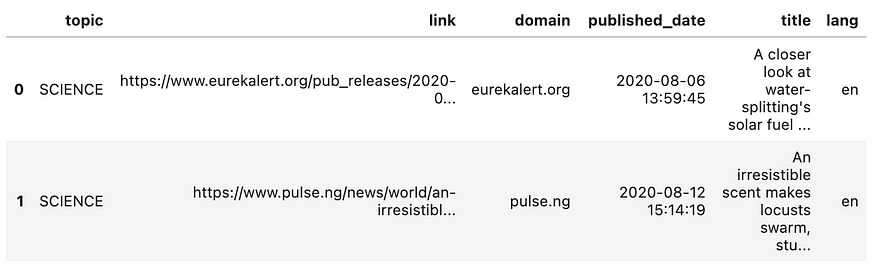

Let’s take a look at the first two records of the topic-labeled-news-dataset.

In the first dataset, we will use the title column as our document. Although the titles are not very long, they serve as a perfect example: we can search the database of articles and find those that discuss a specific topic.

news = pd.read_csv('/kaggle/input/topic-labeled-news-dataset/labelled_newscatcher_dataset.csv', sep=';')

MAX_NEWS = 1000

DOCUMENT="title"

TOPIC="topic"

#news = pd.read_csv('/kaggle/input/bbc-news/bbc_news.csv')

#MAX_NEWS = 500

#DOCUMENT="description"

#TOPIC="title"

# Because this is just a course, we select a small portion of the news.

subset_news = news.head(MAX_NEWS)

We have created the DataFrame subset_news containing a portion of the news from the dataset.

To switch datasets, simply uncomment the lines for the dataset you want and comment out the others. For each dataset, we set the column used as the document content, the topic column, and the number of records in the subset. This approach makes it easy to switch between datasets.
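As an alternative to commenting and uncommenting lines, both configurations could live in a dictionary and be selected by name. This is a minimal sketch, not the notebook’s code: the keys "newscatcher" and "bbc" and the helper select_dataset are hypothetical names, while the paths, separators, column names, and record counts are the ones used in the cell above.

```python
# Hypothetical helper: the keys "newscatcher"/"bbc" and select_dataset() are
# illustrative names; paths, columns and sizes come from the notebook cell above.
DATASETS = {
    "newscatcher": {
        "path": "/kaggle/input/topic-labeled-news-dataset/labelled_newscatcher_dataset.csv",
        "sep": ";",
        "max_news": 1000,
        "document": "title",
        "topic": "topic",
    },
    "bbc": {
        "path": "/kaggle/input/bbc-news/bbc_news.csv",
        "sep": ",",  # pandas' default separator
        "max_news": 500,
        "document": "description",
        "topic": "title",
    },
}


def select_dataset(name: str) -> dict:
    """Return the configuration for the chosen dataset, failing with a clear message."""
    if name not in DATASETS:
        raise ValueError(f"Unknown dataset {name!r}; choose one of {sorted(DATASETS)}")
    return DATASETS[name]


config = select_dataset("newscatcher")
DOCUMENT, TOPIC = config["document"], config["topic"]
```

In the notebook, the selected entry would then feed pd.read_csv(config["path"], sep=config["sep"]) followed by .head(config["max_news"]), so switching datasets means changing a single string.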

Generate the Document from the DataFrame.

To create the document, we will use one of LangChain’s document loaders. For our example, we will use the DataFrameLoader, but there are loaders for a wide range of sources, such as CSV, text, HTML, JSON, and PDF files, and even for products like Confluence.

from langchain.document_loaders import DataFrameLoader

from langchain.vectorstores import Chroma

Once the classes are imported, we need to create the loader. To do this, we specify the DataFrame and the name of the column we want to use as the document’s content. This content will later be stored in the vector database, ChromaDB, and used by the language model when generating its responses.

df_loader = DataFrameLoader(subset_news, page_content_column=DOCUMENT)

To create the document, we simply call the loader’s load method.

df_document = df_loader.load()

display(df_document)

Let’s take a look at the content of the document:

[Document(page_content="A closer look at water-splitting's solar fuel potential", metadata={'topic': 'SCIENCE', 'link': 'https://www.eurekalert.org/pub_releases/2020-08/dbnl-acl080620.php', 'domain': 'eurekalert.org', 'published_date': '2020-08-06 13:59:45', 'lang': 'en'}),

Document(page_content='An irresistible scent makes locusts swarm, study finds', metadata={'topic': 'SCIENCE', 'link': 'https://www.pulse.ng/news/world/an-irresistible-scent-makes-locusts-swarm-study-finds/jy784jw', 'domain': 'pulse.ng', 'published_date': '2020-08-12 15:14:19', 'lang': 'en'}),

As we can see, the loader has created a list of documents, one per record, where the page_content of each document is the value of the specified column. The remaining columns are stored in the ‘metadata’ field, keyed by column name.
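The mapping the loader performs can be pictured with plain Python. The sketch below is a simplified imitation of that behaviour, not DataFrameLoader’s implementation: SimpleDocument and rows_to_documents are hypothetical stand-ins for LangChain’s Document class and loader, and the rows are plain dictionaries instead of DataFrame rows.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleDocument:
    """Hypothetical stand-in for LangChain's Document: text plus a metadata dict."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def rows_to_documents(rows: list[dict], page_content_column: str) -> list[SimpleDocument]:
    """One document per row: the chosen column becomes the content, the rest metadata."""
    documents = []
    for row in rows:
        metadata = {key: value for key, value in row.items() if key != page_content_column}
        documents.append(SimpleDocument(page_content=str(row[page_content_column]), metadata=metadata))
    return documents


rows = [
    {"topic": "SCIENCE", "title": "A closer look at water-splitting's solar fuel potential", "lang": "en"},
    {"topic": "SCIENCE", "title": "An irresistible scent makes locusts swarm, study finds", "lang": "en"},
]
docs = rows_to_documents(rows, page_content_column="title")
print(docs[0].page_content)  # A closer look at water-splitting's solar fuel potential
print(docs[0].metadata)      # {'topic': 'SCIENCE', 'lang': 'en'}
```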

I encourage you to try with the other dataset and see how the data looks.

Creating the Embeddings.

First, in case it’s necessary, let’s explain what an embedding is. It’s simply a numerical representation of any data; in our specific case, the numerical representation of the text to be stored.

This numerical representation takes the form of vectors. A vector is simply a representation of a point in a multidimensional space. In other words, we are not limited to the two- or three-dimensional planes we are used to visualizing: a vector can represent a point in any number of dimensions.

For us, it may seem complicated or hard to imagine, but mathematically, there isn’t much difference between calculating the distance between two points whether they are in two dimensions, three, or any number of dimensions.

These vectors allow us to calculate the differences or similarities between them, making it possible to search for similar information very efficiently.
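The sketch below illustrates that the same formulas work whatever the number of dimensions. It uses plain Python and two common measures, Euclidean distance and cosine similarity; the vectors are made-up toy values, whereas the all-MiniLM-L6-v2 model used later in this article produces 384-dimensional vectors.

```python
import math


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance; the same formula for 2, 3 or 384 dimensions."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means same direction, 0.0 means orthogonal (unrelated)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


# Two dimensions: the familiar 3-4-5 right triangle.
print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
# Five dimensions: the code does not change at all.
print(euclidean_distance([1, 2, 3, 4, 5], [1, 2, 3, 4, 7]))  # 2.0
print(cosine_similarity([1, 0, 0, 0, 0], [0, 1, 0, 0, 0]))  # 0.0
```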

The trick lies in determining which vector we assign to each piece of text, as we want texts with similar meanings to be closer together than texts with different meanings. The pre-trained embedding models available on Hugging Face take care of this, so we don’t have to worry too much. We just need to use the same embedding model for the data we store and for the queries we perform.

Let’s import a couple of classes:

- CharacterTextSplitter: We will use this class to split the information into blocks.

- HuggingFaceEmbeddings or SentenceTransformerEmbeddings: In the notebook, I have used both, and I haven’t found any difference between them. These classes are responsible for loading the model that will generate the embeddings of the data.

from langchain.text_splitter import CharacterTextSplitter

#from langchain.embeddings import HuggingFaceEmbeddings

There is no single correct way to divide the documents into blocks. The key consideration is that larger blocks will provide the model with more context. However, larger blocks also make the prompts we send to the model longer, which can be memory-intensive.

It’s essential to find a balance between context size and memory usage to optimize the performance of our application.

I have decided to use a block size of 250 characters with an overlap of 10, which means that consecutive blocks can share up to 10 characters. It is a relatively small block size, but it is more than sufficient for the type of information we are working with: a news title usually fits in a single block.

text_splitter = CharacterTextSplitter(chunk_size=250, chunk_overlap=10)

texts = text_splitter.split_documents(df_document)
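To make chunk_size and chunk_overlap concrete, here is a simplified fixed-window character chunker. It is an illustration only, not LangChain’s algorithm: CharacterTextSplitter first splits on a separator ("\n\n" by default) and then merges the pieces, so its overlap is measured in whole pieces rather than exact characters. The function name chunk_text is hypothetical.

```python
def chunk_text(text: str, chunk_size: int = 250, chunk_overlap: int = 10) -> list[str]:
    """Cut text into windows of chunk_size characters; consecutive windows share chunk_overlap characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


long_text = "abcdefghij" * 60  # 600 characters
chunks = chunk_text(long_text)
print([len(c) for c in chunks])            # [250, 250, 120]
print(chunks[0][-10:] == chunks[1][:10])   # True
print(len(chunk_text("An irresistible scent makes locusts swarm, study finds")))  # 1
```

The last line shows why the chosen settings barely matter for this dataset: a title shorter than 250 characters stays in one chunk, so no overlap is ever needed.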

Now, we can create embeddings with the text.

from langchain.embeddings.sentence_transformer import SentenceTransformerEmbeddings

embedding_function = SentenceTransformerEmbeddings(model_name="all-MiniLM-L6-v2")

#embedding_function = HuggingFaceEmbeddings(

# model_name="sentence-transformers/all-MiniLM-L6-v2"

#)

As you can see, I used SentenceTransformerEmbeddings instead of HuggingFaceEmbeddings. You can easily switch by uncommenting the HuggingFaceEmbeddings lines, along with its import.

Both classes can load the same pre-trained model for generating embeddings; I’m using all-MiniLM-L6-v2 with both. In the LangChain versions used here, SentenceTransformerEmbeddings is actually an alias of HuggingFaceEmbeddings, and both rely on the sentence_transformers library to run the model.

Therefore, with the same model, the generated embeddings should be identical, which is consistent with finding no difference between the two.

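With the text blocks and the embedding function, we can create the index. Chroma.from_documents computes the embedding of each block and stores it in ChromaDB together with its text and metadata.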

chromadb_index = Chroma.from_documents(

texts, embedding_function, persist_directory='./input'

)

This index is what we will use to ask questions, and it is specially designed to be highly efficient! After all this effort, the last thing we would want is for it to be slow and imprecise :-).

Let’s start using LangChain!

Now comes the fun part: chaining the actions with LangChain to create our first small application using a large language model!

The application is very simple, consisting of only two steps and two components. The first step involves a retriever, a component that fetches information from the documents we provide. In our case, it will perform a similarity search over the embeddings stored in ChromaDB to retrieve the information most relevant to the user’s query.
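The similarity search the retriever performs can be sketched as scoring every stored vector against the query vector and keeping the best k. This is an exact, brute-force illustration using cosine similarity as one common measure, with hand-made 3-dimensional vectors; Chroma itself relies on an approximate nearest-neighbour index (HNSW) to avoid comparing the query against every vector, and real embeddings come from all-MiniLM-L6-v2. The function top_k and the toy vectors are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vector: list[float], store: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exact search: score every stored vector against the query and keep the k most similar."""
    scored = [(text, cosine_similarity(query_vector, vector)) for text, vector in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "embeddings": the three dimensions loosely stand for (technology, science, sport).
store = {
    "Toshiba shuts the lid on laptops after 35 years": [0.9, 0.1, 0.0],
    "An irresistible scent makes locusts swarm, study finds": [0.0, 0.9, 0.1],
    "A closer look at water-splitting's solar fuel potential": [0.2, 0.8, 0.0],
}
query = [0.8, 0.2, 0.0]  # pretend embedding of "Can I buy a Toshiba laptop?"
for text, score in top_k(query, store):
    print(f"{score:.3f}  {text}")
```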

The second and final step will involve our language model, which will receive the information returned by the retriever.

Therefore, we need to import the classes for the question-answering chain and for the Hugging Face pipeline.

from langchain.chains import RetrievalQA

from langchain.llms import HuggingFacePipeline

Now, we can create the retriever, using the embeddings index we created earlier.

retriever = chromadb_index.as_retriever()

We have completed the first step of our chain, or pipeline. Now, let’s move on to the second one: the language model.

In the notebook, I have used two different models available in Hugging Face.

The first model is dolly-v2-3b, the smallest one in the Dolly family. I personally like this model a lot. While it might not be as popular as some others, in my experience the responses it generates are significantly better than those from GPT-2, at times approaching what we could achieve with OpenAI’s GPT-3.5. With 3 billion parameters, it is close to the limit of what we can load in Kaggle’s memory. This model is trained for text generation, resulting in well-crafted responses.
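A back-of-the-envelope calculation shows why 3 billion parameters is already demanding: the weights alone take parameters × bytes per parameter. This sketch assumes 4 bytes per parameter for float32 and 2 for float16/bfloat16, and it ignores activations, the tokenizer, and framework overhead, so real memory usage is higher.

```python
# Bytes needed to store one parameter in each precision.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "bfloat16": 2}


def weights_memory_gb(num_parameters: float, dtype: str = "float32") -> float:
    """Memory needed just to hold the weights, in gigabytes (10**9 bytes)."""
    return num_parameters * BYTES_PER_PARAMETER[dtype] / 1e9


print(weights_memory_gb(3e9, "float32"))  # 12.0
print(weights_memory_gb(3e9, "float16"))  # 6.0
```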

The second model is from the T5 family. Note that this model is specifically designed for text-to-text generation, which results in much more concise and shorter responses.

Make sure to try both models, at least to see how they perform.

model_id = "databricks/dolly-v2-3b" #my favourite textgeneration model for testing

task="text-generation"

#model_id = "google/flan-t5-large" #Nice text2text model

#task="text2text-generation"

Perfect! We have everything we need to create the pipeline! Now let's do it!

hf_llm = HuggingFacePipeline.from_model_id(

model_id=model_id,

task=task,

model_kwargs={

"temperature": 0,

"max_length": 256

},

)

Let’s see what each of the parameters means:

- model_id: The identifier of the model on Hugging Face, in the form owner/model-name (for example, databricks/dolly-v2-3b). You can copy it from the model’s page.

- task: Here, we specify the task for which we want to use the model. Some models are trained for multiple tasks. You can find the supported tasks for a specific model in the model’s Hugging Face documentation.

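- model_kwargs: This parameter allows us to pass additional arguments specific to the model. In this case, I’m providing the temperature (how creative we want the model to be; 0 is the least creative) and max_length, the maximum length of the generated sequence in tokens. For a decoder-only model like Dolly, this limit includes the prompt tokens, so it covers both the retrieved context and the response.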
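To see what temperature does, the sketch below applies it to invented next-token scores (logits): the logits are divided by the temperature before the softmax, which sharpens the distribution when the temperature is low and flattens it when it is high. As the temperature approaches 0, sampling becomes equivalent to always picking the most likely token (greedy decoding); here, as a design choice of the sketch, temperature 0 returns that greedy one-hot distribution directly. This is the textbook formula, not Hugging Face’s code.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; temperature == 0 is treated as greedy (one-hot)."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # invented scores for three candidate tokens
for t in (0, 0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the probability of the top token shrinking as the temperature grows, which is what “more creative” means in practice: less likely tokens get picked more often.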

Now, it’s time to configure the pipeline using the model and the retriever.

document_qa = RetrievalQA.from_chain_type(

llm=hf_llm, chain_type="stuff", retriever=retriever

)

With the chain_type parameter, we indicate how the chain should combine the retrieved documents, and we have four options:

- stuff: The simplest option. It takes all the documents returned by the retriever and inserts (“stuffs”) them into the prompt passed to the model, in a single call.

- refine: It processes the documents one at a time, calling the model with each new document and the previous answer, trying to obtain a more refined response each time. It makes one call per document, so it should be used with caution.

- map_reduce: It first calls the model on each document separately (map) and then combines those partial answers into a single final one (reduce). If the combined results are still too long for the prompt, they can be compressed and collapsed over several iterations.

- map_rerank: It calls the model for each document, asking it to answer and to score its answer, and returns the highest-scoring one. Similar to `refine`, it can be risky depending on the number of calls expected.
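The idea behind the stuff option can be sketched in a few lines: all retrieved texts are concatenated into one context block and inserted into a single prompt, so the model is called once. The template and the build_stuff_prompt function below are hypothetical, written for illustration; RetrievalQA uses its own default prompt, whose exact wording may differ between LangChain versions. The example texts are taken from the Dolly answer shown later in this article.

```python
# Hypothetical template for illustration; not LangChain's exact default prompt.
PROMPT_TEMPLATE = (
    "Use the following pieces of context to answer the question at the end.\n\n"
    "{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_stuff_prompt(retrieved_texts: list[str], question: str) -> str:
    """'Stuff' every retrieved text into one prompt, separated by blank lines."""
    context = "\n\n".join(retrieved_texts)
    return PROMPT_TEMPLATE.format(context=context, question=question)


prompt = build_stuff_prompt(
    [
        "The Legendary Toshiba is Officially Done With Making Laptops",
        "Toshiba shuts the lid on laptops after 35 years",
    ],
    "Can I buy a Toshiba laptop?",
)
print(prompt)
```

Because everything ends up in one prompt, the number and length of the retrieved blocks directly determine how long the prompt is, which is one more reason to keep blocks small with a limit like max_length.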

Now we can use the newly created chain to ask our questions, and they will be answered considering the data from our DataFrame, which is now part of the Vector Database.

#Sample question for newscatcher dataset.

response = document_qa.run("Can I buy a Toshiba laptop?")

#Sample question for BBC Dataset.

#response = document_qa.run("Who is going to meet boris johnson?")

display(response)

The answer obtained will obviously depend on the dataset used and also on the model. For the question of whether we can buy a Toshiba laptop, we get two very different responses depending on the model:

Dolly: “No, Toshiba officially shuts down their laptops in 2023. The Legendary Toshiba is Officially Done With Making Laptops. Toshiba shuts the lid on laptops after 35 years. Toshiba officially shut down their laptops in 2023.”

T5: “No.”

As you can see, each model adds its own personality to the response!

Conclusions and Continuing Learning!

It's actually been a lot simpler than one might think. It's much easier now than it was before the explosion of large language models and the emergence of tools like LangChain.

We have used a vector database to store the data that we previously loaded into a DataFrame, although we could have used any other data source.

We used that data as input for a couple of language models available on Hugging Face and observed how the models answered taking the information from the DataFrame into account.

But don’t stop here: make your own modifications to the notebook and solve any issues that may arise. Some ideas include:

- Use both datasets, and preferably search for a third one. Even better, do you think you can adapt it to read your resume? I’m sure it can be achieved with some minor adjustments.

- Try using a third Hugging Face model.

- Change the data source. It could be a text file, an Excel file, or even a document tool like Confluence.

The full course about Large Language Models is available on GitHub. To stay updated on new articles, please consider following the repository or starring it. This way, you’ll receive notifications whenever new content is added.


This article is part of a series where we explore the practical applications of Large Language Models. You can find the rest of the articles in the following list:

Large Language Models Practical Course


I write about Deep Learning and machine learning regularly. Consider following me on Medium to get updates about new articles. And, of course, you are welcome to connect with me on LinkedIn.


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.