Evaluating RAG Metrics Across Different Retrieval Methods

Last Updated on February 3, 2024 by Editorial Team

Author(s): Harpreet Sahota

Originally published on Towards AI.

In this post, you’ll learn about creating synthetic data, evaluating RAG pipelines using the Ragas tool, and understanding how various retrieval methods shape your RAG evaluation metrics.

My journey with AI Makerspace’s LLMOps cohort (learn more here) has been instrumental in shaping my approach to these topics. This exploration is a direct application and extension of knowledge gained from the LLMOps program. The core of this post is a remixed version of a homework assignment from the cohort. I talked with Chris Alexiuk (connect with him), the author of the homework assignments and co-founder of AI Makerspace. He’s given a thumbs-up to remix his work for this blog, and I credit him as a coauthor since I’ve used the valuable insights I’ve learned from him.

Here’s what you’ll learn in this blog:

- U+1F9EA Synthetic Data Creation: Understanding the process and importance of generating synthetic data for RAG evaluation.

- U+1F6E0️ Utilizing the Ragas Tool: Learning how to use Ragas to assess RAG model performance across various metrics comprehensively.

- U+1F50D Impact of Retrieval Methods: Exploring how different retrieval approaches influence the effectiveness and accuracy of RAG models.

- U+1F4A1 Practical Application: Applying these concepts through examples and exercises to solidify understanding and skills in RAG evaluation.

Let’s begin our exploration, starting with the essentials of synthetic data creation, moving through the detailed process of RAG evaluation using ragas, and delving into the subtle influences of different retrieval methods. Refer to this blog post for a helpful primer on building a RAG system using LangChain.

First, start with some preliminaries: installing the libraries you’ll need and setting your OpenAI API key:

%%capture

!pip install -U langchain==0.1

!pip install -U langchain_openai

!pip install -U openai

!pip install -U ragas

!pip install -U arxiv

!pip install -U pymupdf

!pip install -U chromadb

!pip install -U tiktoken

!pip install -U accelerate

!pip install -U bitsandbytes

!pip install -U datasets

!pip install -U sentence_transformers

!pip install -U FlagEmbedding

!pip install -U ninja

!pip install -U flash_attn --no-build-isolation

!pip install -U tqdm

!pip install -U rank_bm25

!pip install -U transformers

import os

import openai

from getpass import getpass

openai.api_key = getpass("Please provide your OpenAI Key: ")

os.environ["OPENAI_API_KEY"] = openai.api_key

Data Collection

Let’s start by loading papers from arXiv.

In a rather ironic manner, I’m choosing to pick papers about evaluating LLMs, including some papers about evaluating RAG pipelines. You can collect these documents individually using the ArxivLoader document loader from LangChain, and then merge them into one document object.

- Evaluation Metrics in the Era of GPT-4: Reliably Evaluating Large Language Models on Sequence to Sequence Tasks

- A Survey on Evaluation of Large Language Models

- An Evaluation on Large Language Model Outputs: Discourse and Memorization

- A Closer Look into Automatic Evaluation Using Large Language Models

- Large Language Models are Not Yet Human-Level Evaluators for Abstractive Summarization

- ARES: An Automated Evaluation Framework for Retrieval-Augmented Generation Systems

- RaLLe: A Framework for Developing and Evaluating Retrieval-Augmented Large Language Models

- Benchmarking Large Language Models in Retrieval-Augmented Generation

- Evaluating the Effectiveness of Retrieval-Augmented Large Language Models in Scientific Document Reasoning

Let’s grab these documents using the ArxivLoader and then merge them using the MergedDataLoader.

from langchain_community.document_loaders import ArxivLoader

from langchain_community.document_loaders.merge import MergedDataLoader

papers = ["2310.13800", "2307.03109", "2304.08637", "2310.05657", "2305.13091", "2311.09476", "2308.10633", "2309.01431", "2311.04348"]

docs_to_merge = []

for paper in papers:

loader = ArxivLoader(query=paper)

docs_to_merge.append(loader)

all_loaders = MergedDataLoader(loaders=docs_to_merge)

all_docs = all_loaders.load()

You can run the following code for a printout of each document's metadata:

for doc in all_docs:

print(doc.metadata)

Creating an Index

To create an index, you need to do the following:

- Pick a text-splitting method, for example,

RecursiveCharacterTextSplitter,CharacterTextSplitter, etc. - Decide on hyperparameters such as

chunk_size,chunk_overlap, andlength_function - Pick an embedding model; for example, you can use a model from OpenAI or pick an open-source embedding model from the MTEB Leaderboard.

- Pick a vectorstore provider, for example

FAISS,Chroma, etc.

The default recommended text splitter is the RecursiveCharacterTextSplitter, so let’s use that.

RecursiveCharacterTextSplitter:Recursively splits text. Splitting text recursively serves the purpose of trying to keep related pieces of text next to each other. This is the recommended way to start splitting text.

This text splitter takes a list of characters. It tries to create chunks based on splitting on the first character, but if any chunks are too large, it then moves on to the next character, and so forth. By default, the characters it tries to split on are ["\n\n", "\n", " ", ""] . TheChunkviz utility is a great way to visually assess how your text is split up and assess the impact of the splitting parameters.

In addition to controlling which characters you can split on, you can also control a few other things:

• chunk_size: the maximum size of your chunks (as measured by the length function).

• chunk_overlap: the maximum overlap between chunks. It can be nice to have some overlap to maintain continuity between chunks (e.g. do a sliding window).

• length_function: how the length of chunks is calculated. It defaults to counting the number of characters, but passing a token counter here is pretty common.

Once the documents are split, we can embed them and send each embedding to our Chroma VectorStore using HuggingFaceBgeEmbeddings.

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_community.vectorstores import Chroma

from langchain_community.embeddings import HuggingFaceBgeEmbeddings

model_name = "BAAI/bge-large-en-v1.5"

encode_kwargs = {'normalize_embeddings': True} # set True to compute cosine similarity

hf_bge_embeddings = HuggingFaceBgeEmbeddings(

model_name=model_name,

model_kwargs={'device': 'cuda'},

encode_kwargs=encode_kwargs

)

text_splitter = RecursiveCharacterTextSplitter(chunk_size=512,

chunk_overlap = 16,

length_function=len)

docs = text_splitter.split_documents(all_docs)

vectorstore = Chroma.from_documents(docs, hf_bge_embeddings)

After splitting our documents up, we have 1338 chunks of text that we have embedded and sent to our vector database. Confirm it by running the following line of code:

len(docs)

As a sanity check, you can verify the largest chunk size. It should be less than or equal to 512.

print(max([len(chunk.page_content) for chunk in docs]))

Let’s convert our Chroma vectorstore into a retriever with the .as_retriever() method.

Now, to give it a test! We pass a query to our retriever; the retriever will grab chunks relevant to our query.

relevant_docs = base_retriever.get_relevant_documents("What are the challenges in evaluating Retrieval Augmented Generation pipelines?")

Since we defined k, the number of chunks to retrieve, as 5, we should expect to see only 5 documents. You can do a sanity check like so:

len(relevant_docs)

You can inspect the retrieved documents if you’d like:

for doc in relevant_docs:

print(doc.page_content)

print('\n')

Instantiate the LLM, Text Generation Pipeline and Set Up a QA Chain with a Baseline Retriever

For this tutorial, you’ll use a version of DeciLM-7B that was fine-tuned for RAG tasks by our friends at Ai Bloks. This model, part of their DRAGON series, is known as dragon-deci-7b-v0.

Over the past year and a half, our friends at AI Bloks, creators of LLMWare, have dedicated efforts to developing specialized instruct datasets in specific areas:

- Focused Domains: Concentrating on sectors like financial services, insurance, legal, compliance, and regulatory.

- Closed-Context Analysis: Aim for answers derived from specific source documents instead of general knowledge.

- Fact-Based Question Answering: Enhancing skills in key-value extraction, concise Q&A, basic analytics, and short-form (like xsum) and longer-form (bullet lists) summarization.

- Essential RAG Skills: Building targeted training sets for Boolean Yes/No, “not found” recognition, common-sense math and logic, table reading, and multiple-choice questions.

- Concise, Clear Answers: Focusing on brief responses for ease of programmatic handling, correlation with evidence sources, reduced risk of hallucinations, and quicker inference processing.

I recommend watching the video below for more technical depth regarding how they built the model:

Their approach involves fine-tuning leading open-source foundation models.

Targeted training in specific domains and skills and enabling smaller models to perform beyond their size is the future of highly effective and cost-efficient RAG workflows.

The Ai Bloks team applied their methodology to DeciLM-7B, similar to other 7B foundation models in the Dragon series (such as Mistral, Yi, Llama-2, Stable-LM, RedPajama-INCITE, and Falcon). As you can see in the table below, DeciLM-7B has shown the strongest results!

The training for dragon-deci-7b-v0 was conducted on a single Nvidia A100 80GB. They used small batches and gradient accumulation to simulate larger batches, optimizing hyper-parameters over several days. Their training process was straightforward, following best practices with a simple prompt wrapper inspired by EleutherAI, Pythia, and RedPajama, using a ” ” format (which you can see below).

Post-training testing of DeciLM-7B revealed impressive performance, particularly in areas like Not Found classification, Yes-No Boolean classification, and Math/logic, with over 90% accuracy — a first in the DRAGON series.

Inference speeds were notably faster than other 7B models, offering an “apples to apples” speed advantage.

Creating a Retrieval Augmented Generation Prompt

Now, we can set up a prompt template that will be used to provide the LLM with the necessary contexts, user queries, and instructions!

from langchain.prompts import ChatPromptTemplate

template = """<human>: Answer the question based only on the following context. If you cannot answer the question with the context, please respond with 'I don't know':

### CONTEXT

{context}

### QUESTION

Question: {question}

\n

<bot>:

"""

prompt = ChatPromptTemplate.from_template(template)

from operator import itemgetter

import torch

from langchain_openai import ChatOpenAI

from langchain_community.huggingface_pipeline import HuggingFacePipeline

from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig, GenerationConfig, pipeline

from langchain_core.output_parser import StrOutputParser

from langchain_core.runnable import RunnableLambda, RunnablePassthrough

quantization_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_use_double_quant=True,

bnb_4bit_quant_type="nf4",

# Note: this tutorial is running on an A100m if you're not using an A100

# or ampere supported GPU then bfloat won't work for you

bnb_4bit_compute_dtype=torch.bfloat16

)

model = AutoModelForCausalLM.from_pretrained("llmware/dragon-deci-7b-v0",

quantization_config = quantization_config,

trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained("llmware/dragon-deci-7b-v0",

trust_remote_code=True)

generation_config = GenerationConfig(

max_length=4096,

temperature=1e-3,

do_sample=True,

eos_token_id=tokenizer.eos_token_id,

pad_token_id=tokenizer.eos_token_id

)

pipeline = pipeline("text-generation",

model=model,

tokenizer=tokenizer,

max_length=4096,

temperature=1e-3,

do_sample=True,

eos_token_id=tokenizer.eos_token_id,

pad_token_id=tokenizer.eos_token_id

)

deci_dragon = HuggingFacePipeline(pipeline=pipeline)

This code defines a pipeline for a question-answering system with retrieval augmentation named retrieval_augmented_qa_chain.

It starts by taking a user’s question and uses it both directly as the question and as input to a base retrieval system (base_retriever) to fetch relevant context. The retrieved context and the original question are then passed through RunnablePassthrough for subsequent use, maintaining the context intact for reference. Finally, the response is generated by a primary question-answering model (deci_dragon), which takes the formatted prompt, consisting of the context and the question, and produces an answer.

The design of this pipeline allows for the integration of external information to enhance the quality of responses to user queries.

retrieval_augmented_qa_chain = (

{"context": itemgetter("question") U+007C base_retriever, "question": itemgetter("question")}

U+007C RunnablePassthrough.assign(context=itemgetter("context"))

U+007C {"response": prompt U+007C deci_dragon, "context": itemgetter("context")}

)

Let’s test it out!

question = "Describe evaluation criteria for retrieval augmented generation pipelines"

result = retrieval_augmented_qa_chain.invoke({"question" : question})

print(result['response'])

1. Noise Robustness - how well does the system handle noise in the input?

2. Negative Rejection - how well does the system reject negative responses?

3. Information Integration - how well does the system integrate information?

4. Counterfactual Robustness - how well does the system handle false information?

Create a Synthetic Dataset For RAG Evaluation Using GPT-3.5-turbo and GPT-4

Note: This section might take you a long time to run.

To evaluate Retrieval-Augmented Generation (RAG) pipelines effectively using LangChain, you need to create a synthetic dataset with four essential components:

- U+1F914 Questions: These are the prompts your RAG model will tackle. Make sure your dataset includes a diverse array of questions. This diversity tests the model’s ability to handle various topics and question complexities.

- U+1F3AF Ground Truths: These are the correct answers to your questions. You’ll use them as a benchmark to measure how accurately your RAG model responds.

- U+1F52E Predicted Answers: These are the responses your RAG model generates. Your key task is to compare these answers against the ground truths to evaluate the model’s accuracy.

- U+1F310 Contexts: These provide the necessary background or supplementary information your RAG model uses to craft its answers. Understanding how your model leverages this context is vital for assessing its effectiveness in incorporating external information into its responses.

U+1F4CA Structuring Your Dataset

Organize your dataset in a tabular format. Each element should have separate columns: questions, ground truths, predicted answers, and contexts. Each row in your table is a unique test case comprising a question, its correct answer, the RAG model’s response, and the contexts involved.

U+1F6E0️ How to Use LangChain for Data Creation

You’ll use LangChain to generate realistic questions and contexts. Then, run these through your RAG model to get the predicted answers. Aim for realism and variety in your dataset to challenge your RAG model and thoroughly evaluate its capabilities.

U+1F4DD Using the Dataset for RAG Evaluation

You’ll analyze how closely your RAG model’s predictions match the ground truths and how effectively it uses the provided contexts. This structured approach offers a detailed view of the model’s strengths and improvement areas, shedding light on its performance across varied questions and contexts.

U+1F4D0 ResponseSchema

TheResponseSchema class acts as the architectural blueprint for data elements in a response. It’s basically a template for consistent output.

Every ResponseSchema instance is defined by:

- U+1F3F7️ Name: Serves as the identifier for the data element, akin to a key in a JSON object.

- U+1F4DD Description: Offers a clear, human-readable explanation of the data element’s role or content.

- U+1F520 Type: Indicates the data type (e.g.,

string,list,integer), specifying what the element should store.

The key role of ResponseSchemais to outline each part of a structured response, ensuring the data is consistent and clear.

from langchain.output_parsers import ResponseSchema

from langchain.output_parsers import StructuredOutputParser

question_schema = ResponseSchema(

name="question",

description="a question about the context."

)

question_response_schemas = [

question_schema,

]

U+1F5C2️ StructuredOutputParser

TheStructuredOutputParser decodes and processes outputs and structures it in whatever format you define. For example, use this if you need your language model to output responses structured as JSON.

This class helps with:

- U+1F4DA Managing Response Schemas: Utilizes a set of

ResponseSchemaobjects to define the expected blueprint of the output. - U+1F6E0️ Facilitating Structured Output: Generates guides or templates, ensuring the output is predictably structured and easier to handle.

- U+1F9E9 Parsing Capability: Transforming a structured string (such as JSON-formatted text) into a clearly defined format in line with

ResponseSchemaguidelines.

U+1F31F High-Level Summary

- U+1F4D0

ResponseSchema: A template for setting up the expected format of each response element. - U+1F5C2️

StructuredOutputParser: Uses those templates to verify the output’s structure, then parses it into the required format.

question_output_parser = StructuredOutputParser.from_response_schemas(question_response_schemas)

format_instructions = question_output_parser.get_format_instructions()

question_generation_llm = ChatOpenAI(model="gpt-3.5-turbo-1106")

bare_prompt_template = "{content}"

bare_template = ChatPromptTemplate.from_template(template=bare_prompt_template)

Generating Questions

Now, you can generate questions. This is what the next code block will do for you:

from langchain.prompts import ChatPromptTemplate

qa_template = """\

<human>: You are a University Professor creating a test for advanced students. For each context, create a question that is specific to the context. Avoid creating generic or general questions.

question: a question about the context.

Format the output as JSON with the following keys:

question

context: {context}

<bot>:

"""

prompt_template = ChatPromptTemplate.from_template(template=qa_template)

messages = prompt_template.format_messages(

context=docs[0],

format_instructions=format_instructions

)

question_generation_chain = bare_template U+007C question_generation_llm

response = question_generation_chain.invoke({"content" : messages})

output_dict = question_output_parser.parse(response.content)

for k, v in output_dict.items():

print(k)

print(v)

question

What are the specific NLP benchmarks used for the preliminary and hybrid evaluation of generative LLMs in the research?

Generating Context

Now, we can generate the contexts.

from tqdm import tqdm

import random

random.seed(42)

qac_triples = []

# randomly select 100 chunks from the ~1300 chunks

for text in tqdm(random.sample(docs, 100)):

messages = prompt_template.format_messages(

context=text,

format_instructions=format_instructions

)

response = question_generation_chain.invoke({"content" : messages})

try:

output_dict = question_output_parser.parse(response.content)

except Exception as e:

continue

output_dict["context"] = text

qac_triples.append(output_dict)

You can inspect what these look like:

qac_triples[5]

Generate “Ground Truth” Answers using GPT-4

Notice that we used GPT-3.5 to generate questions and contexts. For ground truth, we’ll use the more powerful GPT-4.

The code below will generate the ground truth answers for you.

answer_generation_llm = ChatOpenAI(model="gpt-4-1106-preview", temperature=0)

answer_schema = ResponseSchema(

name="answer",

description="an answer to the question"

)

answer_response_schemas = [

answer_schema,

]

answer_output_parser = StructuredOutputParser.from_response_schemas(answer_response_schemas)

format_instructions = answer_output_parser.get_format_instructions()

qa_template = """\

<human>: You are a University Professor creating a test for advanced students. For each question and context, create an answer.

answer: a answer about the context.

Format the output as JSON with the following keys:

answer

question: {question}

context: {context}

<bot>:

"""

prompt_template = ChatPromptTemplate.from_template(template=qa_template)

messages = prompt_template.format_messages(

context=qac_triples[0]["context"],

question=qac_triples[0]["question"],

format_instructions=format_instructions

)

answer_generation_chain = bare_template U+007C answer_generation_llm

# Let's inspect the result of one

response = answer_generation_chain.invoke({"content" : messages})

output_dict = answer_output_parser.parse(response.content)

for k, v in output_dict.items():

print(k)

print(v)

answer

The purpose of the 2S2ORC dataset in the evaluation of scientific document reasoning is to test the ability of retrieval-augmented Large Language Models (LLMs) to understand and reason across a wide range of scientific domains. By covering 19 different scientific disciplines, the dataset provides a comprehensive benchmark to assess whether these models can accurately recognize the domain of a given text passage (as in the FoS task) and make predictions without prompting bias. Furthermore, the dataset allows for the evaluation of the models' ability to avoid hallucinations by retrieving and using factual information from a diverse scientific corpus, thereby enhancing the interpretability and verifiability of the model predictions in scientific document reasoning tasks.

question

What is the purpose of the 2S2ORC dataset in the evaluation of scientific document reasoning?

Now, generate ground truth for all questions and contexts:

for triple in tqdm(qac_triples):

messages = prompt_template.format_messages(

context=triple["context"],

question=triple["question"],

format_instructions=format_instructions

)

response = answer_generation_chain.invoke({"content" : messages})

try:

output_dict = answer_output_parser.parse(response.content)

except Exception as e:

continue

triple["answer"] = output_dict["answer"]

RAG Evaluation Using ragas

Alright, let’s dive straight into the popular RAG metrics. Understanding these metrics is key to evaluating your RAG model effectively.

U+1F3AF Answer Relevancy

- What It Measures: This metric assesses how pertinent your RAG model’s answer is to the given prompt. You’re looking for answers that hit the nail on the head, not ones that beat around the bush.

- Scoring: It’s a game of precision, with scores ranging from 0 to 1. Higher scores mean your model’s answers are right on target.

Example:

- U+2753 Question: What causes seasonal changes?

- ⬇️ Low relevance answer: The Earth’s climate varies throughout the year

- ⬆️ High relevance answer: Seasonal changes are caused by the tilt of the Earth’s axis and its orbit around the Sun.

U+1F4DA Faithfulness

- What It Measures: Here, you’re checking if the answers stick to the facts provided in the context. It’s all about staying true to the source.

- Scoring: Also on a scale of 0 to 1. Higher values mean your answer is a faithful representation of the context.

Example:

- U+2753 Question: What is the significance of the Apollo 11 mission?

- U+1F4D1 Context: Apollo 11 was the first manned mission to land on the Moon in 1969.

- ⬆️ High faithfulness answer: Apollo 11 is significant as it was the first mission to bring humans to the Moon.

- ⬇️ Low faithfulness answer: Apollo 11 was significant for its study of Mars.

U+1F50D Context Precision

- What It Measures: This one’s about whether your model ranks all the relevant bits of information at the top. You want the most important pieces front and center.

- Scoring: Once again, it’s a 0 to 1 scale. Higher scores indicate your model is doing a great job at prioritizing the right context.

Example:

- U+2753 Question: What are the health benefits of regular exercise?

- ⬆️ High precision: The model ranks contexts discussing cardiovascular health, mental well-being, and muscle strength at the top.

- ⬇️ Low precision: The model prioritizes contexts unrelated to health, such as sports history.

U+2705 Answer Correctness

- What It Measures: This is about accuracy — how well does the answer align with the ground truth?

- Scoring: Judged on a 0 to 1 scale, higher scores signal a bullseye match with the ground truth.

Example:

- U+1F7E2 Ground Truth: Photosynthesis in plants primarily occurs in the chloroplasts.

- ⬆️ High answer correctness: Photosynthesis occurs in plant cells' chloroplasts.

- ⬇️ Low answer correctness: Photosynthesis occurs in the mitochondria of plants.

In a nutshell, you’ve got four powerful tools to assess your RAG model. Each metric provides a different lens to view your model’s performance, from how relevant and faithful its answers are to how precise it is with contexts and how correct its answers align with known truths.

Think of these metrics as your RAG model’s report card — they tell you where it excels and where it needs a bit more homework!

from ragas.metrics import (

answer_relevancy,

faithfulness,

context_recall,

context_precision,

context_relevancy,

answer_correctness,

answer_similarity

)

from ragas.metrics.critique import harmfulness

from ragas import evaluate

def create_ragas_dataset(rag_pipeline, eval_dataset):

rag_dataset = []

for row in tqdm(eval_dataset):

answer = rag_pipeline.invoke({"question" : row["question"]})

rag_dataset.append(

{"question" : row["question"],

"answer" : answer["response"],

"contexts" : [context.page_content for context in answer["context"]],

"ground_truths" : [row["ground_truth"]]

}

)

rag_df = pd.DataFrame(rag_dataset)

rag_eval_dataset = Dataset.from_pandas(rag_df)

return rag_eval_dataset

def evaluate_ragas_dataset(ragas_dataset):

result = evaluate(

ragas_dataset,

metrics=[

context_precision,

faithfulness,

answer_relevancy,

context_recall,

context_relevancy,

answer_correctness,

answer_similarity

],

)

return result

Using `dragon-deci-7b-v0` to generate answers

Note that generating answers for 100 questions took ~20 minutes on an A100.

from tqdm import tqdm

import pandas as pd

basic_qa_ragas_dataset = create_ragas_dataset(retrieval_augmented_qa_chain, eval_dataset)

RAG Evaluation

basic_qa_result = evaluate_ragas_dataset(basic_qa_ragas_dataset)

And we can inspect the results of the evaluation below

import matplotlib.pyplot as plt

def plot_metrics_with_values(metrics_dict, title='RAG Metrics'):

"""

Plots a bar chart for metrics contained in a dictionary and annotates the values on the bars.

Args:

metrics_dict (dict): A dictionary with metric names as keys and values as metric scores.

title (str): The title of the plot.

"""

names = list(metrics_dict.keys())

values = list(metrics_dict.values())

plt.figure(figsize=(10, 6))

bars = plt.barh(names, values, color='skyblue')

# Adding the values on top of the bars

for bar in bars:

width = bar.get_width()

plt.text(width + 0.01, # x-position

bar.get_y() + bar.get_height() / 2, # y-position

f'{width:.4f}', # value

va='center')

plt.xlabel('Score')

plt.title(title)

plt.xlim(0, 1) # Setting the x-axis limit to be from 0 to 1

plt.show()

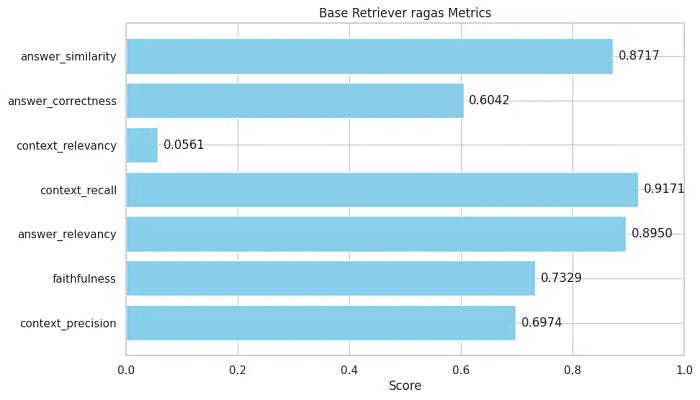

plot_metrics_with_values(basic_qa_result, "Base Retriever ragas Metrics")

Wow! I must say, I’m quite impressed by the performance of the DRAGON fine-tune of DeciLM-7B!

Let’s break down each metric:

- Context Precision (0.6974): This metric, at approximately 0.70, indicates that the retriever is fairly good at selecting relevant information from the given context. While it’s not perfect, it can prioritize relevant content fairly well.

- Faithfulness (0.7329): A score of around 0.73 suggests that the answers generated are generally faithful to the provided context. This means that most of the information in the responses can be traced back to the context, showing good factual consistency.

- Answer Relevancy (0.8950): A high score close to 0.90 indicates that the answers are very relevant to the questions. It shows that the retriever effectively understands and addresses the specific query.

- Context Recall (0.9171): This impressive score suggests that the retriever is excellent at retrieving almost all relevant information from the database or context for a given query.

- Context Relevancy (0.0561): This low score is a bit concerning. It implies that the context being retrieved, while comprehensive (as suggested by the high context recall), is not always relevant to the query. This could lead to the retriever pulling in unnecessary or irrelevant information.

- Answer Correctness (0.6042): This metric is just above average, indicating moderate answer accuracy. It suggests that while many answers are correct, a significant portion may not be entirely accurate.

- Answer Similarity (0.8717): A high score here shows that the answers generated by the retriever are similar to the expected answers, indicating a good understanding of the query and the context.

Overall Analysis:

- Strengths: The retriever excels in answer relevancy, context recall, and answer similarity, suggesting it understands queries well and can pull a comprehensive set of relevant data.

- Areas for Improvement: Context relevancy is a major area of concern. Improving context filtering to ensure only relevant information is retrieved could enhance other metrics like answer correctness. The answer correctness itself also has room for improvement, suggesting a need to refine how the retriever interprets and uses the context.

This analysis shows a competent retriever in understanding and responding to queries but with potential improvements in the precision of context retrieval and the accuracy of its answers.

U+1F468U+1F3FDU+1F52C Experimenting With Other Retrievers

Now we can test how changing our Retriever impacts our RAG evaluation!

We’ll build this simple qa_chain_factory to create a standardized qa_chainswhere the retriever is the only different component.

def create_qa_chain(retriever, primary_qa_llm):

created_qa_chain = (

{"context": itemgetter("question") U+007C retriever,

"question": itemgetter("question")

}

U+007C RunnablePassthrough.assign(

context=itemgetter("context")

)

U+007C {

"response": prompt U+007C primary_qa_llm,

"context": itemgetter("context"),

}

)

return created_qa_chain

Parent Document Retriever

One of the easier ways we can imagine improving a retriever is to embed our documents into small chunks and then retrieve a significant amount of additional context that “surrounds” the found context.

You can read more about this method here!

The basic outline of this retrieval method is as follows:

- Obtain User Question

- Retrieve child documents using Dense Vector Retrieval.

- Merge the child's documents based on their parents. If they have the same parents — they become merged.

- Replace the child documents with their respective parent documents from an in-memory store.

- Use the parent documents to augment generation.

from langchain.retrievers import ParentDocumentRetriever

from langchain.storage import InMemoryStore

parent_splitter = RecursiveCharacterTextSplitter(chunk_size=1536)

child_splitter = RecursiveCharacterTextSplitter(chunk_size=256)

vectorstore = Chroma(collection_name="split_parents", embedding_function=hf_bge_embeddings)

store = InMemoryStore()

parent_document_retriever = ParentDocumentRetriever(

vectorstore=vectorstore,

docstore=store,

child_splitter=child_splitter,

parent_splitter=parent_splitter,

)

parent_document_retriever.add_documents(docs)

Create, test, and evaluate the Parent Document Retriever Chain

parent_document_retriever_qa_chain = create_qa_chain(parent_document_retriever, deci_dragon)

parent_document_retriever_qa_chain.invoke({"question" : "What are some metrics to evaluate RAG pipelines?"})["response"]

pdr_qa_ragas_dataset = create_ragas_dataset(parent_document_retriever_qa_chain, eval_dataset)

pdr_qa_result = evaluate_ragas_dataset(pdr_qa_ragas_dataset)

plot_metrics_with_values(pdr_qa_result, "Parent Document Retriever ragas Metrics")

The results from the Parent Document Retriever reveal some interesting insights about its performance, especially when compared to the base retriever.

Let’s analyze these metrics in light of the retriever’s design and functionality:

- Context Precision (0.5650): This score has decreased compared to the base retriever. It suggests that while retrieving larger chunks for context, the Parent Document Retriever may not be as precise in fetching only the most relevant information.

- Faithfulness (0.6159): The decrease in this score indicates that the answers generated are less consistently faithful to the retrieved context. This could be due to the larger document chunks introducing more varied information, some of which might not be directly relevant to the query.

- Answer Relevancy (0.8457): While still relatively high, this score is lower than the base retriever. It implies that the answers are generally relevant, but the larger context chunks might introduce slight deviations or less focused responses.

- Context Recall (0.9186): Similar to the base retriever, this score is high, indicating the retriever is effective at retrieving a comprehensive set of information for a given query.

- Context Relevancy (0.0505): Consistent with the base retriever, this low score is a concern. It implies that the larger document chunks retrieved are often not highly relevant to the query, potentially due to each chunk's broader scope of information.

- Answer Correctness (0.5199): This score has seen a notable decrease. It suggests that the accuracy of the answers may be compromised when using larger document chunks, possibly due to the inclusion of more extraneous information.

- Answer Similarity (0.8711): This metric remains high, indicating that the answers generated are similar to the expected responses despite the larger context chunks.

Overall Analysis:

- Comparative Insights: Compared to the base retriever, the Parent Document Retriever shows decreased precision, faithfulness, relevancy, and correctness. However, it maintains high recall and answer similarity.

- Interpreting the Impact: The Parent Document Retriever’s approach of fetching larger document chunks appears to introduce more comprehensive information but potentially at the cost of focused relevancy and accuracy. This could be due to the broader variety of information in each chunk, which might dilute the specific context relevant to a query.

- Potential Adjustments: Tweaking the chunk size or refining the criteria for selecting parent documents could help balance context relevance with the need for comprehensive information.

This analysis suggests that while the Parent Document Retriever effectively gathers extensive information, its approach might benefit from adjustments to improve the precision and relevance of the context and, consequently, the accuracy of the answers.

Create, test, and evaluate the Ensemble Retrieval chain

Next, let’s look at ensemble retrieval. You can read more about this here!

The basic idea is as follows:

- Obtain User Question

- Hit the Retriever Pair: Retrieve Documents with BM25 Sparse Vector Retrieval and Retrieve Documents with Dense Vector Retrieval Method

- Collect and “fuse” the retrieved docs based on their weighting using the Reciprocal Rank Fusion algorithm into a single ranked list.

- Use those documents to augment our generation.

Ensure your weights list – the relative weighting of each retriever – sums to 1!

from langchain_community.retrievers import BM25Retriever, EnsembleRetriever

text_splitter = RecursiveCharacterTextSplitter(chunk_size=512, chunk_overlap=16)

docs = text_splitter.split_documents(all_docs)

bm25_retriever = BM25Retriever.from_documents(docs)

bm25_retriever.k = 3

vectorstore = Chroma.from_documents(docs, hf_bge_embeddings)

chroma_retriever = vectorstore.as_retriever(search_kwargs={"k": 3})

ensemble_retriever = EnsembleRetriever(retrievers=[bm25_retriever, chroma_retriever], weights=[0.42, 0.58])

ensemble_retriever_qa_chain = create_qa_chain(ensemble_retriever, deci_dragon)

ensemble_qa_ragas_dataset = create_ragas_dataset(ensemble_retriever_qa_chain, eval_dataset)

ensemble_qa_result = evaluate_ragas_dataset(ensemble_qa_ragas_dataset)

plot_metrics_with_values(ensemble_qa_result, "Ensemble Retriever ragas Metrics")

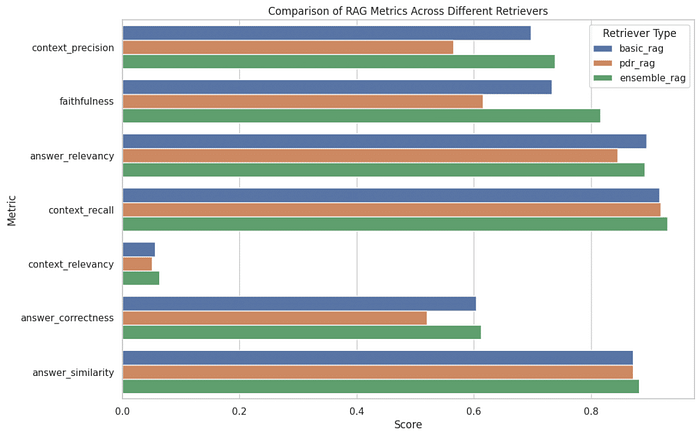

The results from the Ensemble Retriever, which combines multiple retrieval methods, provide a comprehensive view of its effectiveness.

Let’s analyze these metrics in the context of its hybrid search approach:

- Context Precision (0.7378): This score is significantly higher than the Parent Document Retriever and slightly higher than the base retriever. It indicates that combining sparse and dense retrievers effectively narrows down the most relevant information, balancing keyword-based and semantic relevance.

- Faithfulness (0.8158): A noticeable improvement compared to the base and Parent Document Retrievers. This suggests that the answers generated are more consistently faithful to the retrieved context, likely benefiting from the ensemble approach’s comprehensive capture of relevant information.

- Answer Relevancy (0.8914): This score is almost as high as the base retriever, indicating that the answers are highly relevant to the questions. The hybrid approach appears to enhance the ability to address specific queries accurately.

- Context Recall (0.9300): This is the highest score among the three retrievers, implying that the Ensemble Retriever is exceptionally effective at retrieving comprehensive information for each query.

- Context Relevancy (0.0634): Though still low, this score is slightly improved compared to the other retrievers. It indicates that while the context retrieved is more relevant than before, there’s still room for improvement in ensuring only pertinent information is fetched.

- Answer Correctness (0.6122): This score is higher than the Parent Document Retriever but lower than the base. It reflects a moderate level of accuracy in the answers, suggesting that while the hybrid approach captures a broad range of information, it may not always precisely align with the ground truth.

- Answer Similarity (0.8820): The highest among the three, showing that the answers generated are very similar to the expected responses, benefitting from the combined strengths of different retrieval methods.

Overall Analysis:

- Strengths: The Ensemble Retriever excels in context recall and answer similarity, benefiting from the combined strengths of sparse and dense retrievers. Its context precision and faithfulness are also notably improved.

- Areas for Improvement: While context relevancy has improved, it’s still weak. Additionally, answer correctness, though better than the Parent Document Retriever, doesn’t match the base retriever’s performance.

- Implications: The Ensemble Retriever’s hybrid approach shows a balanced performance across metrics. It retrieves comprehensive and relatively precise information by leveraging keyword and semantic similarities.

This analysis indicates that the Ensemble Retriever’s hybrid approach effectively creates a well-rounded retrieval performance, making it a robust choice for diverse RAG applications.

Conclusion

We’ll also look at combining the results and looking at them in a single table so we can make inferences about them.

def create_df_dict(pipeline_name, pipeline_items):

df_dict = {"name" : pipeline_name}

for name, score in pipeline_items:

df_dict[name] = score

return df_dict

basic_rag_df_dict = create_df_dict("basic_rag", basic_qa_result.items())

pdr_rag_df_dict = create_df_dict("pdr_rag", pdr_qa_result.items())

ensemble_rag_df_dict = create_df_dict("ensemble_rag", ensemble_qa_result.items())

results_df = pd.DataFrame([basic_rag_df_dict, pdr_rag_df_dict, ensemble_rag_df_dict])

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

def plot_rag_metrics(df):

"""

Plots a comparison of RAG metrics for different retrievers.

Args:

df (pd.DataFrame): DataFrame containing the RAG metrics with columns:

'name', 'context_precision', 'faithfulness', 'answer_relevancy',

'context_recall', 'context_relevancy', 'answer_correctness',

'answer_similarity'

"""

# Setting up the plot

plt.figure(figsize=(12, 8))

sns.set(style="whitegrid")

# Melting the DataFrame for easy plotting with Seaborn

df_melted = df.melt(id_vars="name", var_name="metric", value_name="score")

# Creating a bar plot

sns.barplot(x="score", y="metric", hue="name", data=df_melted)

plt.title("Comparison of RAG Metrics Across Different Retrievers")

plt.xlabel("Score")

plt.ylabel("Metric")

plt.legend(title="Retriever Type")

plt.show()

results_df.sort_values("answer_correctness", ascending=False)

plot_rag_metrics(results_df)

In this blog, we’ve tackled the nuts and bolts of RAG pipeline evaluation.

You’ve seen firsthand how different retrievers — basic, Parent Document, and Ensemble — perform under scrutiny. From the detailed analysis of metrics for the basic, Parent Document, and Ensemble retrievers, we’ve seen how each approach brings unique strengths and challenges. The Ensemble Retriever's hybrid approach stands out for its balanced performance across multiple metrics, highlighting the value of combining different retrieval strategies.

Let’s recap the key findings:

- The basic retriever excels in answer relevancy and faithfulness but stumbles in context precision.

- The Parent Document Retriever, designed for larger context chunks, showed a dip in most metrics, notably in answer correctness and faithfulness.

- The Ensemble Retriever emerged as a robust contender, balancing all metrics, particularly context recall and answer similarity.

Your takeaway?

There is no one-size-fits-all in RAG evaluation.

Each retriever has its trade-offs. The Ensemble Retriever’s hybrid approach offers a more balanced performance, but your choice depends on the performance aspect most critical to your needs.

As we conclude this exploration, I extend my gratitude to AI Makerspace and the LLMOps cohort (discover more) for their invaluable teachings. A special thanks to Chris Alexiuk (connect with him) for his collaboration and guidance in enriching this journey.

Their contributions have been instrumental in deepening our understanding of RAG evaluation.

If you want all the codes, they’re right here.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.