Building Blocks of Transformers: Attention

Author(s): Akhil Theerthala

Originally published on Towards AI.

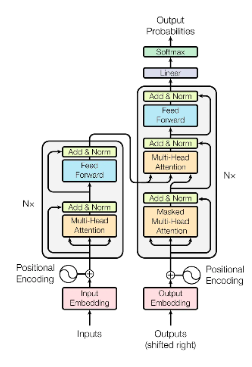

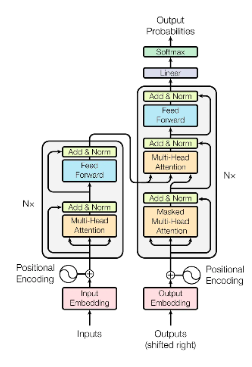

It’s been 5 years… and the Transformer architecture seems almost untouchable. In all this time, there has been no significant change to the structure behind a transformer. The world, however, has changed a lot because of it. We are now seeing amazing things achieved with this architecture: from holding simple conversations to controlling device settings, everything has been made possible by the same design.

What’s the reason? Why does this architecture almost always work for anything?

This was the question I had every time I used this architecture. I knew what attention was and I knew the basic idea behind it, but the answer almost always seemed to elude me. That’s when it hit me that my understanding was not enough. Through this article series, I plan to re-learn every aspect from scratch and try to understand it better.

Attention Mechanism

It’s now almost undeniable that the core advantage of a transformer comes from the so-called “Attention” mechanism. Almost all articles introducing attention refer back to the same machine translation paper from 2014. (P.S. It’s funny how Google refers to this as a TV show.)

The core idea the paper presented was simple: since RNNs fail to remember long-term context, the authors suggested storing the context somewhere else, so that the model can refer back to it when needed. Well, that’s what we do too. We note things down because we can’t always remember everything.

In the lecture “CS-25-V2: Introduction to Transformers”, Andrej Karpathy shared a few comments on the author’s perspective. The author, who was learning to speak English at the time, almost always referred back to previous words to understand the contextual meaning. Later on, that’s what led to the attention mechanism and the transformer. Simple enough, right?

You can understand “why” the attention mechanism is needed. However, the most troubling part for me was always the “how”. It’s always this equation:
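The original figure is not reproduced here. Assuming it is the scaled dot-product attention from the “Attention is all you need” paper listed in the references, it reads as follows, where $d_k$ is the dimension of the key vectors:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```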

You find some “affinity” between Q (query) and K (key), take a softmax of it, and finally use the result to weight V (value). You somehow get the context vector. The simple explanation of “looking back to remember the context” is now an equation. However, most discussions still explain this either from the perspective of a database or from the perspective of the model.

What finally made me understand was the interpretation given by Andrej Karpathy in the lecture mentioned earlier. There, he said that he likes to interpret the attention mechanism as a conversation phase of the transformer. Inspired by that interpretation, I realised that this is also what happens when someone is trying to borrow money. Hence this article.
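In code, the three steps just described (affinity, softmax, weighting the values) might look like the NumPy sketch below. It assumes the standard scaled dot-product form, with the scores divided by the square root of the key dimension, which the text does not spell out. The function names and toy sizes are illustrative only.

```python
import numpy as np

def softmax(scores, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Return (context, weights) for queries Q, keys K and values V.

    Q: (n_queries, d_k), K: (n_keys, d_k), V: (n_keys, d_v)
    """
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # "affinity" between each query and each key
    weights = softmax(scores, axis=-1)  # each row becomes a probability distribution
    context = weights @ V               # weighted average of the values
    return context, weights

# Toy example: 2 queries attending over 3 key/value pairs (made-up numbers).
rng = np.random.default_rng(0)
Q = rng.normal(size=(2, 4))
K = rng.normal(size=(3, 4))
V = rng.normal(size=(3, 5))
context, weights = scaled_dot_product_attention(Q, K, V)
print(context.shape)         # one context vector per query
print(weights.sum(axis=-1))  # every row of weights sums to 1
```

Each row of `weights` says how much one query “listens” to every key, and the matching row of `context` is the blend of values it ends up with.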

My narrative.

Before we go into the narrative, let’s set the scene. We have two people, A and B. The place they live in trades using coins. Due to circumstances, A needs 500 more coins than the 416 coins he has in order to buy his favorite phone. B, however, has saved 1000 coins.

Given this setting, we know that B could give twice the amount A requires (1000/500 = 2). In other words, A’s chances of getting 500 coins from B are 2.

However, as data scientists, we speak in probabilities. Since B is the only person A can ask, we normalise by the total of all chances: A’s probability of getting his needs satisfied by B = 2/2 = 1.

However, reality is not as beautiful. A and B are practically strangers, so B will give A at most 100 coins. Now, how much money can A expect?

The expected amount is 1 × 100 = 100 coins, so after receiving it A has 416 + 100 = 516 coins.

Now, let’s complicate the scene. A also has a friend C, who has saved 250 coins and is willing to give A 150 coins.

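Now, A’s chances of getting his goal satisfied with C’s help are 250/500 = 0.5, and the probabilities of A’s goal being met by B and by C are 2/(2 + 0.5) = 0.8 and 0.5/(2 + 0.5) = 0.2, respectively.

Now, how much can A expect to get before he asks anyone? Weighting what each person is willing to give by these probabilities gives 0.8 × 100 + 0.2 × 150 = 110 coins.

A can expect to have 416 + 110 = 526 coins after borrowing.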
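The bookkeeping in the story can be checked with a short script. This is only a sketch: it assumes the chances are normalised by their plain sum, as the “2/2 = 1” step suggests, rather than by a softmax, and the variable and function names are made up for illustration.

```python
# Figures from the story. The sum-normalisation is an assumption
# inferred from the "2/2 = 1" step in the text.
coins_a_has = 416
coins_a_needs = 500
savings = {"B": 1000, "C": 250}         # what each lender has saved
willing_to_give = {"B": 100, "C": 150}  # what each lender would actually hand over

def expected_coins(lenders):
    """Expected amount A receives when asking the given lenders."""
    # "Chance" of each lender covering A's need: savings / need.
    chances = {name: savings[name] / coins_a_needs for name in lenders}
    total = sum(chances.values())
    # Normalise the chances so they sum to 1, like attention weights.
    weights = {name: chance / total for name, chance in chances.items()}
    # Weighted sum of what each lender is willing to give.
    return sum(weights[name] * willing_to_give[name] for name in lenders)

only_b = expected_coins(["B"])
b_and_c = expected_coins(["B", "C"])
print("B only:  expected", only_b, "-> total", coins_a_has + only_b)
print("B and C: expected", b_and_c, "-> total", coins_a_has + b_and_c)
```

With a softmax in place of the plain normalisation, the weights would shift slightly further toward B, so the expected amount would change a little as well.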

How is this even relevant to the Attention mechanism?

Well… replace the persons with words and the coins with the context bank of each word. The “context bank” of a word is nothing but the contribution of that word to the meaning of the sentence. The amount each person is willing to give then becomes the additional context that we add to the word.

If you don’t want to talk about words, we can also relate this to images.

- Coins A wants → Additional information about the image patch

- Coins B and C have → Contextual information that is relevant to the image

- Coins A additionally gets → Additional context of the image obtained from the other patches

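Now, going back to the original Q, K, V terms: the coins A wants play the role of the query (Q), the coins B and C have are the keys (K), and the coins they are willing to give are the values (V).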

How does the model get these Q, K, V? These aren’t made manually, right?

The whole point of having a model is to avoid manual processing, so there is no way these 3 are made manually. (You can try if you want, but it’s a very laborious task.) Instead, we derive them from the inputs themselves. To do this, we define 3 different weight matrices (query_weights, key_weights, value_weights), multiply the input by each of them to get Q, K and V, and let the model learn the weights iteratively during training.
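As a rough illustration of that last step, the sketch below builds Q, K and V from a toy input using the three weight matrices named above. The dimensions and the random initialisation are assumptions made for the example. In a real model the weights start random and are then updated by training, which is not shown here.

```python
import numpy as np

rng = np.random.default_rng(42)

seq_len, d_model, d_k = 3, 8, 4          # 3 tokens, toy dimensions (assumed)
X = rng.normal(size=(seq_len, d_model))  # stand-in for the token embeddings

# Randomly initialised here; a real model learns these during training.
query_weights = rng.normal(size=(d_model, d_k))
key_weights = rng.normal(size=(d_model, d_k))
value_weights = rng.normal(size=(d_model, d_k))

# Every token produces its own query, key and value from the same input.
Q = X @ query_weights
K = X @ key_weights
V = X @ value_weights

scores = Q @ K.T / np.sqrt(d_k)                 # affinity of every token with every token
scores -= scores.max(axis=-1, keepdims=True)    # for numerical stability
weights = np.exp(scores)
weights /= weights.sum(axis=-1, keepdims=True)  # softmax over the keys
context = weights @ V                           # one context vector per token

print(Q.shape, K.shape, V.shape)
print(context.shape)
```

Because Q, K and V are all computed from the same `X`, this is the self-attention case: every word is both a borrower and a lender.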

The following image finally explains what happens in attention:

That concludes my interpretation of attention. Of course, this is not the end. There is still much to discuss, like multi-head attention, cross-attention, global attention, soft attention, etc. However, what we discussed in this article is the basic building block. The other forms are obtained by changing a few details of the attention mechanism we discussed.

If you want to get updates on these, feel free to give me a follow! You can also check out my other articles on my site.
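As one small example of “changing a few details”, the sketch below contrasts self-attention with cross-attention: the function is identical, and only the source of the keys and values differs. The learned projection matrices are left out for brevity, and the array names and sizes are hypothetical.

```python
import numpy as np

def attention(Q, K, V):
    """Scaled dot-product attention with a row-wise softmax."""
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ V

rng = np.random.default_rng(1)
d = 4
decoder_states = rng.normal(size=(2, d))  # hypothetical target-side tokens
encoder_states = rng.normal(size=(5, d))  # hypothetical source-side tokens

# Self-attention: queries, keys and values all come from the same sequence.
self_out = attention(decoder_states, decoder_states, decoder_states)

# Cross-attention: queries from one sequence, keys and values from another.
cross_out = attention(decoder_states, encoder_states, encoder_states)

print(self_out.shape, cross_out.shape)
```

In both cases the output has one row per query, which is why the two variants can be swapped in and out of the same architecture.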

References

- “Attention Is All You Need” paper. Link.

- CS-25 Transformers United: V2 Introduction to Transformers. Link.

- Chapter 11, Dive into Deep Learning book. Link.

- StackExchange discussion regarding keys, values and queries. Link.
