Bayesian Networks: how to make Computers infer Cause and Effect

Last Updated on July 26, 2023 by Editorial Team

Author(s): Ina Hanninger

Originally published on Towards AI.

Can computers reason about cause and effect in the same way humans can?

In machine learning, there are a couple of camps on how to predict an output of interest. The first is agnostic to the underlying causal mechanisms and interrelations that generate a particular data point; I think of these as the (pure) discriminative models, e.g. logistic regression, decision trees, etc. For instance, to determine which class an input x belongs to, a decision boundary is fitted to a training set so that it simply maximizes the separation of the labelled points in that set. The other camp, known as generative models, makes at least some effort to model the underlying data-generating process, in the form of a joint probability distribution over the observed and target variables. These models allow us to express dependencies between variables and, furthermore, to generate our own sample data.

Without being too dogmatic about it, I’d place myself in the camp that believes the latter approach leads to more reliable, generalisable and useful predictions, particularly because of its attention to explainability and the way it mimics the more sophisticated mental models humans use to make predictions. One type of generative model I find particularly interesting is the Bayesian network, and in this article I will explain what it is and why it holds great potential for changing the way we approach machine learning.

What is a Bayesian network?

- A probabilistic graphical model, i.e. a graphical representation of the conditional probability distributions between variables, which are computed using Bayesian inference

- A directed acyclic graph (DAG), G = (V, E), where each of the n nodes in V represents a random variable of interest, and the directed edges E encode informational or causal influences in the form of conditional probability dependence.

What is special about Bayesian networks?

Without even mentioning the potential for causal inference, Bayesian networks are a useful and elegant representation of probability distributions for a number of reasons.

Fundamentally, they give us an efficient representation of joint probability functions. Given a distribution P over n discrete variables, X1, X2, …, Xn, we already know that the chain rule of probability allows us to decompose this joint probability distribution into a product of conditional distributions. With Bayesian networks, however, we can simplify this even further. As shown in the equation below, we can decompose the joint such that the conditional distribution of each node, Xi, is a function only of its ‘parent’ nodes, πi. Here we define the parent nodes, πi, as the minimal set of predecessors of Xi which renders Xi independent of all its other predecessors.

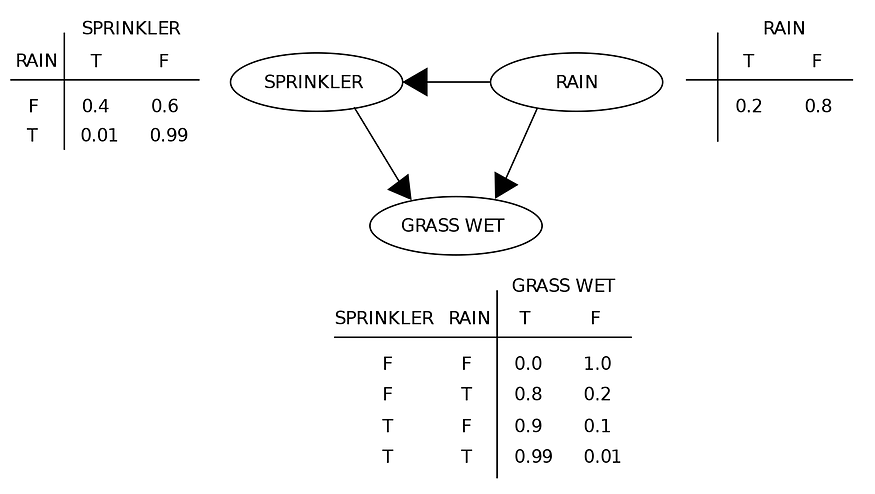
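For readers who cannot see the image, the factorization described in the paragraph above can be written out in LaTeX. This is the standard textbook form, transcribed on the assumption that it matches the equation in the figure; the symbols x_i and π_i follow the definitions in the text.

```latex
\begin{align}
P(x_1, \dots, x_n)
  &= \prod_{i=1}^{n} P(x_i \mid x_1, \dots, x_{i-1})
  && \text{(chain rule)} \\
  &= \prod_{i=1}^{n} P(x_i \mid \pi_i)
  && \text{(each node depends only on its parents } \pi_i \text{)}
\end{align}
```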

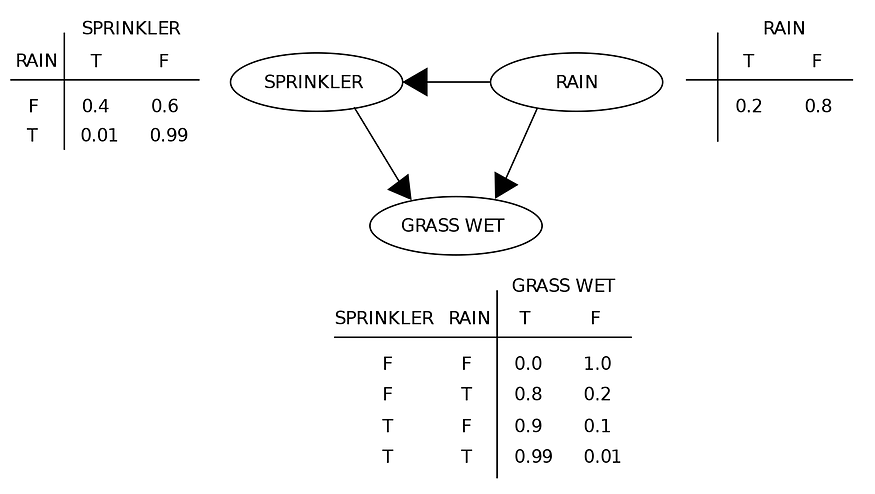

Looking at the example network above, P(Sprinkler | Rain, WetGrass) = P(Sprinkler | Rain) because of this property. Thus we can also say that the joint probability P(Sprinkler, Rain, WetGrass) = P(Rain) * P(Sprinkler | Rain) * P(WetGrass | Rain, Sprinkler). This is essentially how to read a Bayesian network.
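To make the reading of the network concrete, here is a minimal Python sketch that builds the joint P(Sprinkler, Rain, WetGrass) from one factor per node. The probability values are illustrative assumptions (the tables in the figure are not reproduced in the text), and the helper names `bernoulli`, `joint` and `prob` are hypothetical.

```python
from itertools import product

# Illustrative conditional probability tables (assumed numbers).
P_RAIN = {True: 0.2, False: 0.8}
# P(Sprinkler = True | Rain)
P_SPRINKLER_ON = {True: 0.01, False: 0.4}
# P(WetGrass = True | Sprinkler, Rain), keyed by (sprinkler, rain)
P_WET = {
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def bernoulli(p_true, value):
    """Probability of a binary value, given the probability that it is True."""
    return p_true if value else 1.0 - p_true


def joint(rain, sprinkler, wet):
    """P(Rain) * P(Sprinkler | Rain) * P(WetGrass | Rain, Sprinkler)."""
    return (
        P_RAIN[rain]
        * bernoulli(P_SPRINKLER_ON[rain], sprinkler)
        * bernoulli(P_WET[(sprinkler, rain)], wet)
    )


def prob(**evidence):
    """Probability of the evidence, summing the joint over all 8 states."""
    total = 0.0
    for rain, sprinkler, wet in product([True, False], repeat=3):
        state = {"rain": rain, "sprinkler": sprinkler, "wet": wet}
        if all(state[name] == value for name, value in evidence.items()):
            total += joint(rain, sprinkler, wet)
    return total


total_mass = prob()  # no evidence: sums every entry of the joint
p_rain_given_wet = prob(rain=True, wet=True) / prob(wet=True)
print(total_mass, p_rain_given_wet)
```

With these assumed numbers the joint sums to 1, and a query such as P(Rain | WetGrass) falls out of two sums over the same table. Enumerating all states like this only works for tiny networks; it is meant to show the factorization, not an efficient inference method.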

Why is it the case that Xi can be independent of its other predecessors, given its parents? In practice, this is something we observe and ascertain from our data, and then establish as a defining assumption of any Bayesian network. This is known as the Local Markov Property.

Local Markov Property = each node is conditionally independent of all its non-descendant nodes, given its parents.

But what does it mean for a variable to be “conditionally independent”?

Seeing as conditional independence is integral to Bayesian networks and to their eventual use in deriving causality, I think it’s important to spend a bit of time defining it and building some intuition for what it really means.

A is said to be conditionally independent of B given C if and only if:

P(A, B | C) = P(A | C) P(B | C)

i.e. in discrete terms, the probability that both event A and event B happen given that event C happened is equal to the product of the probability that A happens given C happened and the probability that B happens given C happened. Not very intuitive…

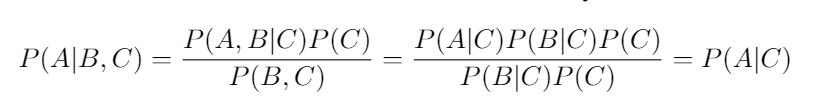

Another equivalent, and probably more useful, definition is derived from this by dividing both sides by P(B | C), assuming it is non-zero:

P(A | B, C) = P(A | C)

This is to say, once the outcome of C is known, learning the outcome of B would not influence our belief in the outcome of A.
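Both forms of the definition, P(A, B | C) = P(A | C) P(B | C) and P(A | B, C) = P(A | C), can be checked numerically on a small joint table. The sketch below assumes a hypothetical "common cause" model in which C influences both A and B and nothing else links them; all numbers and the helper names `bern`, `p` and `conditionally_independent` are made up for illustration.

```python
from itertools import product

# Hypothetical common-cause model: C -> A and C -> B (assumed numbers).
P_C = {True: 0.3, False: 0.7}
P_A_GIVEN_C = {True: 0.9, False: 0.2}  # P(A = True | C)
P_B_GIVEN_C = {True: 0.6, False: 0.1}  # P(B = True | C)


def bern(p_true, value):
    """Probability of a binary value, given the probability that it is True."""
    return p_true if value else 1.0 - p_true


# Full joint table P(A, B, C), keyed by (a, b, c).
JOINT = {
    (a, b, c): P_C[c] * bern(P_A_GIVEN_C[c], a) * bern(P_B_GIVEN_C[c], b)
    for a, b, c in product([True, False], repeat=3)
}


def p(a=None, b=None, c=None):
    """Marginal probability; None means 'sum over this variable'."""
    return sum(
        pr
        for (va, vb, vc), pr in JOINT.items()
        if a in (None, va) and b in (None, vb) and c in (None, vc)
    )


def conditionally_independent(tol=1e-12):
    """Check both definitions of 'A independent of B given C' on every state."""
    for a, b, c in product([True, False], repeat=3):
        p_ab_c = p(a=a, b=b, c=c) / p(c=c)        # P(A, B | C)
        p_a_c = p(a=a, c=c) / p(c=c)              # P(A | C)
        p_b_c = p(b=b, c=c) / p(c=c)              # P(B | C)
        p_a_bc = p(a=a, b=b, c=c) / p(b=b, c=c)   # P(A | B, C)
        if abs(p_ab_c - p_a_c * p_b_c) > tol or abs(p_a_bc - p_a_c) > tol:
            return False
    return True


ci_given_c = conditionally_independent()
# Without conditioning on C, A and B are still correlated in this model.
marginally_dependent = abs(p(a=True, b=True) - p(a=True) * p(b=True)) > 1e-6
print(ci_given_c, marginally_dependent)
```

In this toy model A and B are dependent on their own, yet become independent once C is known, which is the "learning B tells us nothing more about A" reading of the definition.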

Linking back to the definition of the Local Markov Property above, once the values of a node’s parents are observed, the node is independent of all its other predecessors (nodes that are not its descendants). This is just like what we mentioned above, P(Sprinkler | Rain, WetGrass) = P(Sprinkler | Rain): the sprinkler is conditionally independent of wet grass, given the rain. Given that it rained, knowing the grass is wet does not change our belief in whether the sprinkler was on or not. To put some interpreted rationale behind this: we know that rain makes it far less likely that the sprinkler would be on, as the probability tables also show; whoever turns on the sprinklers would probably be less inclined to do so if they saw it had just rained. And since the rain also causes wet grass, knowing that the grass is wet wouldn’t necessarily tell us anything about whether the sprinkler was on, especially as there is a directionality to this cause and effect relationship. Hopefully, through this example, you can start to see how conditional independence, information/belief flow and causality are linked together…

Now, the factorization we saw in equation 1 is useful to us for a number of reasons. Firstly, it significantly reduces the number of parameters required to specify a joint probability distribution. If we take n binary variables (where the outcome is either true or false, as opposed to a continuous value), the unsimplified joint distribution requires on the order of O(2^n) parameters, whereas a Bayesian network with at most k parents per node requires only O(n*2^k). So, for example, with 20 variables and at most 4 parents per node, this is the difference between more than 1 million probabilities (2^20 = 1,048,576) and at most 320 (20 * 2^4). This is what makes evaluating the probabilities of different combinations of events occurring, i.e. probabilistic inference, actually tractable.
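The arithmetic behind those two figures is easy to reproduce. The function names below are hypothetical, and the counts are the simple bounds used in the text (one entry per combination of parent states per node), not an exact count of free parameters.

```python
def full_joint_entries(n):
    """Entries in a full joint table over n binary variables: 2^n."""
    return 2 ** n


def bayes_net_entries(n, k):
    """Upper bound for a network of n binary nodes with at most k parents each: n * 2^k."""
    return n * 2 ** k


n_vars, max_parents = 20, 4
print(full_joint_entries(n_vars))              # 1048576
print(bayes_net_entries(n_vars, max_parents))  # 320
```

The gap widens quickly: the first count doubles with every extra variable, while the second grows linearly in n as long as the number of parents per node stays bounded.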

As wonderful as this is computationally, I believe the real significance comes from how this factorization allows us to construct a representation of cause and effect between different variables. And furthermore, how it gives us a mathematical framework to learn these complex relationships through data!

Bayesian networks as a way to infer causality

If we think about how humans learn to reason about the world, we live out our days collecting mass amounts of data about various events happening around us, building in our heads a subconscious sense of conditional probabilities of each of these events. In the example of the wet grass, if we were not taught anything else about the world, we would only be able to learn that rain causes the grass to be wet by noticing a higher frequency of instances that there is wet grass when it has rained. We would develop an association between wet grass and rain… however, with atemporal observation of data (not seeing one event happen after another in time) we wouldn’t necessarily interpret it as rain causing wet grass — i.e. how do we know it isn’t actually the reverse (as crazy as that sounds to us), or how do we know there’s not some other variable confounding the cause? Perhaps there’s some phenomenon where clouds trigger water to leak out of the plant cells in the grass, and because it gets cloudy when it rains, we mistakenly think it’s the rain that wets the grass instead of the clouds? This would be silly of course, but the point I’m trying to illustrate here is that our interpretation of one thing causing another relies on having some other reference variable to observe and relate with.

Similarly with Bayesian networks, having a conditioning variable is crucial to determining the direction of any arrow between two nodes — how else could we learn the conditional independence relations from data without something to condition with? And as we touched on before, causality is inherently coupled with the idea of conditional independence… Given that the value of a conditioning variable is fixed, if the state of variable A influences our belief in the state of variable B then this provides us a foundation for believed causation! Now the philosophy of how exactly we define and formalize causality proves to be pretty complex and the topic of another future article… But in essence, in much the same way we learn about the world by observing situations and events numerous times with slight variations in different factors to build up an instinctive picture of what causes what — so can this be achieved computationally!

What I find most powerful about Bayesian networks is this very formalisation, and the ability to learn causal structures from everyday real-world data, known as Structure Learning. Using constraint-based algorithms (which perform conditional independence tests between variables using the data) or score-based algorithms (which optimise a Bayesian or information-theoretic scoring function), we can learn an optimal network that obeys the local Markov property and fits the conditional independence relations detected. For example, using the frequencies of data points in different states, we can decide whether an edge is needed among three nodes by checking whether P(A | B, C) = P(A | C). Then, knowing the states of the different variables for each data point and the parent nodes each node is conditioned on, we can compute the conditional probability tables given the graph, which is known as Parameter Learning.
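As a rough illustration of both ideas, the sketch below draws samples from a hypothetical model (C influences A and B; all numbers are assumptions), compares the observed frequencies for P(A | B, C) and P(A | C), and then estimates a conditional probability table by counting. It uses a fixed tolerance on raw frequencies purely for illustration; real constraint-based methods use proper statistical tests, and the helper names `sample_row` and `cond_freq` are made up.

```python
import random


def sample_row(rng):
    """Draw one (a, b, c) observation from the assumed model C -> A, C -> B."""
    c = rng.random() < 0.3
    a = rng.random() < (0.9 if c else 0.2)
    b = rng.random() < (0.6 if c else 0.1)
    return a, b, c


def cond_freq(rows, target, given):
    """Relative frequency of target(row) among the rows where given(row) holds."""
    matching = [row for row in rows if given(row)]
    return sum(1 for row in matching if target(row)) / len(matching)


def is_a(row):
    return row[0]


rng = random.Random(0)  # fixed seed so the run is repeatable
rows = [sample_row(rng) for _ in range(50_000)]

# Constraint-style check: largest gap between P(A | B, C) and P(A | C).
max_gap = max(
    abs(
        cond_freq(rows, is_a, lambda r, b=b, c=c: r[1] == b and r[2] == c)
        - cond_freq(rows, is_a, lambda r, c=c: r[2] == c)
    )
    for b in (True, False)
    for c in (True, False)
)

# Without conditioning on C, B does change our belief in A.
marginal_gap = abs(
    cond_freq(rows, is_a, lambda r: r[1]) - cond_freq(rows, is_a, lambda r: True)
)

# Parameter learning by counting: estimated P(A = True | C) for each value of C.
cpt_a = {c: cond_freq(rows, is_a, lambda r, c=c: r[2] == c) for c in (True, False)}

print(max_gap, marginal_gap, cpt_a)
```

The conditional gap should be close to zero while the unconditional gap is large, which is the pattern a structure learner would read as "no direct edge between A and B once C is accounted for". The estimated table should land near the 0.9 and 0.2 used to generate the data.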

This is compelling because, not only can we interpret a chain of cause and effect that might teach us something we don’t already know, we can then also compute the probability of a new data point being in a specific combination of states, aka a joint probability. This would allow our AI to predict outcomes of far more nuanced and comprehensive states of the world than our current machine learning methods, considering and expressing how multiple factors interrelate, thus doing so in a far more explainable, human-like fashion.

References / Further reading:

- Generative vs Discriminative — http://audentia-gestion.fr/MICROSOFT/Bishop-Valencia-07.pdf

- Graphical models lecture slide — http://www.stats.ox.ac.uk/~steffen/teaching/grad/graphicalmodels.pdf

- Causality by Judea Pearl — http://bayes.cs.ucla.edu/BOOK-2K/

Note: Content contains the views of the contributing authors and not Towards AI.