Another Get-Rich-Quick Scheme Using LLMs, RAG, Semantic Routing & Prompt-Driven Feedback

Last Updated on March 28, 2024 by Editorial Team

Author(s): Claudio Mazzoni

Originally published on Towards AI.

Build an obedient assistant, a chatbot that interacts with any document, answers questions, shares insights, and gives domain-, topic-, and task-specific answers… as a service.

The other day I was sitting at my desk, thinking about all the careers that will inevitably meet their end due to the rise of AI models/LLMs capable of mimicking human logic and understanding.

It then dawned on me that engineering as we know it will be one of the first careers to go. After all, many companies are already using LLMs for code autocompletion, while others, like Devin.ai, have built applications capable of writing full-stack code.

This, to me, meant that even I could be replaced in the near future. Needless to say, when that thought hit me, I mentally froze, like a deer in the middle of a highway caught in the headlights of a truck going 85 miles per hour.

The only way out that came to mind was to start preparing for the inevitable end in a well-thought-out and intelligent manner: by shopping for MRE rations and first aid kits and preparing for the rise of Skynet.

After the initial panic had faded, I decided that the best way to prepare was actually to build a service that would be marketable, highly sought after by an industry not yet saturated with AI, and designed around LLMs. That would keep my skills relevant and, hopefully, create a new source of income in case my job was terminated by our future AI overlords.

As you probably already suspected, this idea isn’t new; many companies are already wrapping an app around OpenAI’s API and marketing it as a service.

So before I embarked on this endeavor, I decided to first do some research to see which industries have yet to embrace the robotic hug of AI.

This was easier said than done: the race for AI service supremacy started long before LLMs came into the picture, and before the current war for supremacy between giants like Google, Amazon, and Microsoft/OpenAI.

After two hours of brainstorming without a breakthrough on what innovative service I could offer, I turned to my usual source of wisdom when at a crossroads: my wife.

With her expertise in Public Relations, she explained how the PR field thrives on inventive, distinctive ideas for conveying client messages effectively to their audiences. She highlighted how PR professionals adeptly guide clients through crises and launch new initiatives with strategic finesse. The industry’s reliance on creative problem-solving and strategic planning, she noted, makes it a prime candidate for integrating advanced AI technologies. As far as I know, not many services have been built for these tasks either. This insight positioned the PR sector as an untapped market ripe for my AI-centric entrepreneurial venture.

I’ll start with a proof of concept (POC): a chatbot that can interact with the user. It is no different from 99.9% of the bots out there, but this one will be tailored to the PR industry, will require data from the user to work, and will revolve around clever prompting and document-driven insights. As I am told, most of a PR professional’s daily work revolves around ingesting massive amounts of documents, such as press releases, media coverage, and speeches, and spinning them into a concise message for their client. It will be a PR AI Agent.

The Approach:

The Agent is designed as a QA bot empowered by:

- RAG (Retrieval Augmented Generation), a methodology used to enhance AI responses. In short, it first retrieves information relevant to the user’s question and then uses it to ‘contextualize’ the message passed to an LLM. This helps the response be more accurate, nuanced, and contextually relevant.

- Semantic Routing, an approach derived from the concept of LLM Tools, which is a way of prompting an LLM to make tool-use decisions. That method, however, can be both costly and slow, as it requires the LLM to make multiple inferences before it arrives at the final result. Semantic routing, on the other hand, leverages vector spaces to make those decisions, routing requests by semantic meaning. For our use case it is a very useful technique because it allows us to route the user’s query to the most relevant task-driven prompt.

The design of this application will be as follows:

Document Ingestion:

File data extraction:

The user will feed in media of any content type (text, images, sound); for now, the app handles PDFs, images, and audio, all of which are transformed into clean text. This is done differently depending on the media type. For PDFs, I will use traditional extraction methods (I used the ‘PyMuPDF’ library), leveraging parallelism to improve performance.
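To make the “leveraging parallelism” part concrete, here is a minimal sketch of page-parallel extraction, assuming PyMuPDF (imported as `fitz`) and Python’s `concurrent.futures`. It is not the code from the project repo; `split_page_ranges` and `extract_pdf_text` are hypothetical helper names. Each worker process opens its own handle to the file, since PyMuPDF documents should not be shared between processes or threads.

```python
from concurrent.futures import ProcessPoolExecutor


def split_page_ranges(page_count: int, n_workers: int) -> list[range]:
    """Split pages [0, page_count) into contiguous, ordered ranges, one per worker."""
    n_workers = max(1, min(n_workers, page_count))
    size, extra = divmod(page_count, n_workers)
    ranges, start = [], 0
    for i in range(n_workers):
        # The first `extra` ranges get one extra page so sizes differ by at most one.
        end = start + size + (1 if i < extra else 0)
        ranges.append(range(start, end))
        start = end
    return ranges


def _extract_pages(job: tuple[str, range]) -> list[str]:
    """Worker: open a private handle to the PDF and return the text of its pages."""
    import fitz  # PyMuPDF, imported lazily so this module loads without it

    path, pages = job
    with fitz.open(path) as doc:
        return [doc[i].get_text() for i in pages]


def extract_pdf_text(path: str, n_workers: int = 4) -> str:
    """Extract all page text from a PDF, splitting the pages across worker processes."""
    import fitz

    with fitz.open(path) as doc:
        page_count = doc.page_count
    jobs = [(path, pages) for pages in split_page_ranges(page_count, n_workers)]
    with ProcessPoolExecutor(max_workers=len(jobs)) as pool:
        # map() yields results in submission order, so pages stay in document order.
        return "\n".join(text for texts in pool.map(_extract_pages, jobs) for text in texts)
```

Call `extract_pdf_text(...)` from under an `if __name__ == "__main__":` guard, because process pools on spawn-based platforms re-import the main module. Splitting by contiguous page ranges, rather than one task per page, keeps the number of times each worker reopens the file small.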

Images, as well as PDF files with images or tables embedded in them, will be processed using OpenAI’s image-to-text flavor of GPT-4, ‘gpt-4-vision-preview’. Media inside PDFs will be converted into text and re-inserted into the parsed document where the object originally was, maintaining the structure of the document.
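The “re-insert where the object originally was” step can be sketched as an ordered walk over a page’s content blocks. This assumes a simplified, hypothetical `Block` type (PyMuPDF’s `page.get_text("dict")` exposes a comparable list of text and image blocks, but its exact structure is not reproduced here), and `describe_image` stands in for whatever function sends the image bytes to the vision model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Block:
    """Hypothetical page element: either a run of text or an embedded image."""
    kind: str            # "text" or "image"
    text: str = ""       # populated for text blocks
    data: bytes = b""    # raw image bytes for image blocks


def merge_blocks(blocks: list[Block], describe_image: Callable[[bytes], str]) -> str:
    """Rebuild page text, replacing each image with its description at the same position."""
    parts = []
    for block in blocks:  # blocks are assumed to arrive in reading order
        if block.kind == "image":
            description = describe_image(block.data).strip()
            parts.append(f"[Image description: {description}]")
        else:
            parts.append(block.text)
    return "\n".join(parts)
```

Wrapping the description in a visible marker keeps it distinguishable from the author’s own text once the page is chunked and embedded.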

For sound, I will use an open-source model called ‘whisper-medium.en’. It transforms English speech into text.
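Because each media type takes a different path to text, the ingestion entry point can be a small dispatcher keyed on file extension. This is a sketch under assumptions: the extension sets, `media_type`, `to_text`, and the `handlers` mapping are hypothetical, and each handler would wrap one of the extraction paths described above (with Hugging Face `transformers`, for instance, the audio handler could wrap `pipeline("automatic-speech-recognition", model="openai/whisper-medium.en")`).

```python
from pathlib import Path
from typing import Callable

# Hypothetical extension groups; extend as new formats are supported.
MEDIA_TYPES = {
    "pdf": {".pdf"},
    "image": {".png", ".jpg", ".jpeg", ".gif", ".webp"},
    "audio": {".mp3", ".wav", ".m4a", ".flac"},
}


def media_type(path: str) -> str:
    """Classify a file by its (case-insensitive) extension."""
    suffix = Path(path).suffix.lower()
    for kind, suffixes in MEDIA_TYPES.items():
        if suffix in suffixes:
            return kind
    raise ValueError(f"Unsupported file type: {path!r}")


def to_text(path: str, handlers: dict[str, Callable[[str], str]]) -> str:
    """Route a file to the text extractor registered for its media type."""
    return handlers[media_type(path)](path)
```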

Preprocessing and Storage:

Once all the data from all the files has been converted into text, each file will be considered a ‘Document’, broken down into smaller semantic subsets, and then mapped into a searchable numerical vector space for ingestion into a vector database.

Vector databases are very powerful tools for document retrieval using natural language. They work exceptionally well at fetching meaningful content from large data sources, and they can search embedded data of multiple kinds with ease (images, videos, etc.).

For this application, I used LanceDB, an open-source vector database for multimodal AI. It’s highly scalable and can stream data directly from object storage. Unlike vector databases that keep data in memory, LanceDB uses disk-based indexing and storage with a columnar data format called ‘Lance’, which LanceDB claims is up to 100x faster than Parquet. According to the project, its vector search can find the approximate nearest neighbors (ANN) of a given vector in under one millisecond.

One aspect of vector databases is that before the text is embedded and stored, it first needs to be split into ‘chunks’. This is pivotal for fast and correct retrieval of the content we want within our documents. The challenge is deciding how to split the data. The most common approach, often seen in demos and small applications, is to split the data into equal-sized parts with an arbitrary overlap of text to avoid losing semantic information at the retrieval stage.
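For comparison, the naïve baseline looks like this: a character-based sliding window where `chunk_size` and `overlap` are arbitrary values. `fixed_size_chunks` is a hypothetical helper for illustration, not LangChain’s splitter.

```python
def fixed_size_chunks(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of `chunk_size` characters that share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

The window boundaries know nothing about meaning, so a sentence or an argument can be cut anywhere; the overlap only softens that.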

The problem with this naïve approach is that a split can land in the middle of a coherent idea. Content related to what we are searching for may end up in a chunk that sits elsewhere in the vector space, so useful information goes unretrieved, or the split strips away the semantics needed to match the query and the correct data is missed entirely.

Many techniques have been proposed to solve this problem. The most comprehensive overview I’ve read came from a Jupyter notebook by Greg Kamradt called ‘5 Levels Of Text Splitting’. The notebook acts as an educational/demonstration article showcasing the pros and cons of the most common approaches to splitting documents. In my opinion, the most accurate and best-performing splitting method it discusses is ‘Semantic Chunking’.

By examining the semantic relationships between embeddings (text converted into numerical vectors), the method identifies meaningful breakpoints within the document. The process embeds each sentence combined with its neighboring sentences and calculates the cosine distance between consecutive embeddings; large jumps in distance indicate the start of a new semantic section.
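Here is a compact sketch of that idea, loosely following the notebook’s approach: embed each sentence together with its neighbors, compute cosine distances between consecutive windows, and split wherever the distance exceeds a percentile threshold. The `embed` callable, the default window of one neighbor per side, and the 95th-percentile cutoff are assumptions; the notebook and LangChain’s experimental `SemanticChunker` expose their own parameters.

```python
import math
from typing import Callable, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm if norm else 1.0


def percentile(values: list[float], pct: float) -> float:
    """Linearly interpolated percentile (the same convention as numpy's default)."""
    ordered = sorted(values)
    rank = (len(ordered) - 1) * pct / 100
    low, high = math.floor(rank), math.ceil(rank)
    return ordered[low] + (ordered[high] - ordered[low]) * (rank - low)


def semantic_chunks(
    sentences: list[str],
    embed: Callable[[str], Sequence[float]],
    window: int = 1,
    breakpoint_pct: float = 95.0,
) -> list[str]:
    if len(sentences) < 2:
        return [" ".join(sentences)] if sentences else []
    # 1. Combine each sentence with `window` neighbors on each side, then embed it.
    combined = [
        " ".join(sentences[max(0, i - window): i + window + 1])
        for i in range(len(sentences))
    ]
    vectors = [embed(text) for text in combined]
    # 2. Cosine distance between each consecutive pair of combined-sentence embeddings.
    distances = [cosine_distance(vectors[i], vectors[i + 1]) for i in range(len(vectors) - 1)]
    # 3. Distances above the percentile threshold mark the start of a new section.
    threshold = percentile(distances, breakpoint_pct)
    chunks, start = [], 0
    for i, distance in enumerate(distances):
        if distance > threshold:
            chunks.append(" ".join(sentences[start: i + 1]))
            start = i + 1
    chunks.append(" ".join(sentences[start:]))
    return chunks
```

Using a percentile rather than a fixed distance makes the threshold adapt to each document, since some texts drift between topics far more than others.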

In my experience, this approach delivers more contextually relevant segmentation than fixed-size splitting methods, although not all documents are created equal, and individual evaluation should be performed to confirm this. Maybe in another article.

Once the documents were split, I indexed them in a LanceDB table and saved it to disk.

Below is a UML representation of the process.

Now that the app has ingested all the content provided, I will move forward with the second phase of this project.

RAG + Vector Search & Semantic Routing driven Inferences:

Document Retrieval:

Retrieval augmented generation (RAG) is widely used to provide contextual information to a prompt, this strategy helps reduce LLM hallucinations (when the LLM generates an answer that is not correct due to lack of knowledge).

The challenge is selecting the correct data from a large corpus of documents to pass into the prompt. Surprisingly, this step of the process is relatively easy: in part because LanceDB makes querying simple, and in part because Semantic Chunking splits documents in a way that makes the content we search for most likely to map to the correct chunk.
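Under the hood, a vector search returns the chunks whose embeddings are closest to the query embedding. The brute-force version below computes exact cosine similarity against every chunk, which an ANN index approximates at scale (with the LanceDB Python client, the equivalent query is roughly `table.search(query_vector).limit(k).to_list()`). The in-memory `Chunk` records, `top_k`, `build_context`, and the prompt layout are hypothetical, for illustration only.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str           # originating file, kept so answers can cite it
    vector: list[float]   # precomputed embedding of `text`


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


def top_k(query_vector: list[float], chunks: list[Chunk], k: int = 3) -> list[Chunk]:
    """Exact nearest-neighbor search: rank every chunk by similarity to the query."""
    ranked = sorted(chunks, key=lambda c: cosine_similarity(query_vector, c.vector), reverse=True)
    return ranked[:k]


def build_context(question: str, hits: list[Chunk]) -> str:
    """Assemble the retrieved chunks into the context block passed to the LLM."""
    context = "\n\n".join(f"[{hit.source}] {hit.text}" for hit in hits)
    return f"Context:\n{context}\n\nQuestion: {question}"
```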

I began by calling an orchestrator class, which initializes the retrieval engine. After the user sends an initial message, the retrieval engine is called to fetch the information within the vector DB required to answer the user’s questions or tasks.

The retrieval engine will not be called again unless the follow-up messages trigger criteria that tell the process that new information is required.

- A keyword associated with a request for more information is found in the message. This method has many shortcomings, but it will help route a significant share of user questions correctly; for example, messages with keywords such as ‘Explain’ or ‘What does it mean?’ most likely require a combination of history and new context to expand on.

- A new topic or task is introduced. If a user input is re-routed to a new topic via semantic routing, the vector database retrieval process kicks off again to retrieve relevant data. If new data is retrieved, it is used along with the contextualized and summarized chat history for further inferences; if not, the existing history is used as context for the LLM.
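The two triggers can be sketched as a single predicate that the orchestrator checks on each follow-up message. The keyword patterns and the comparison of the current route with the previous one are assumptions based on the description above; `needs_retrieval` is a hypothetical name.

```python
import re
from typing import Optional

# Hypothetical phrases signalling a request for more information.
MORE_INFO_PATTERNS = [
    r"\bexplain\b",
    r"\bwhat does (it|that|this) mean\b",
    r"\btell me more\b",
    r"\belaborate\b",
]


def needs_retrieval(message: str, route: Optional[str], previous_route: Optional[str]) -> bool:
    """Decide whether a follow-up message should trigger a new vector-DB lookup."""
    text = message.lower()
    # Trigger 1: the message contains a more-information keyword.
    if any(re.search(pattern, text) for pattern in MORE_INFO_PATTERNS):
        return True
    # Trigger 2: semantic routing placed the message on a different topic or task.
    return route is not None and route != previous_route
```

When neither trigger fires, the orchestrator simply reuses the context it already has, which saves an embedding call and a database query per turn.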

These steps take place before inference and help build the required context. This step alone is enough to answer most questions asked by a user. However, to go one step further, I decided to add more nuance to the message sent to the LLM. The next step discusses a method of routing to the desired response by selecting the correct prompt for the user’s request.

Semantic Routing:

Since this application is for people in the PR industry, sending the context and the question alone to the LLM might not be enough to give the user the desired response. It also opens up the possibility of returning a response that is not properly formatted or that outright contradicts the request.

To narrow the variability of the responses and ensure they are curated to what the PR professional wants, I selected the four most common tasks within public relations:

- Press Releases: Writing/modifying/reviewing them.

- Client Brief Clarification: Analyzing/expanding/pivoting

- Content Strategy Suggestion

- Speech Writing

The app employs a prompt for each of these to guide the language model’s responses. The next step is finding an efficient way of matching the user’s question to the desired task.

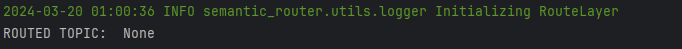

To do this, I used the ‘semantic_router’ framework by Aurelio-Labs, an easy-to-use package that lets you pass relevant sentences/utterances to guide the encoder model in matching the user input to a ‘Route’ via semantic similarity. Similar to how we implemented chunking, semantic routing uses embeddings to pair text against a corpus of utterances mapped to each Route. This method functions like an LLM Agent but without the overhead of an LLM call, reducing cost and response time.
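The routing decision itself reduces to a nearest match over example utterances. The sketch below is not the semantic_router API; `SimpleRouter` is a hypothetical re-implementation with an injected `embed` function and an assumed similarity threshold. It shows why no LLM call is needed: one embedding of the query plus a handful of similarity scores picks the route, and queries that match nothing fall through to plain RAG.

```python
import math
from typing import Callable, Optional, Sequence

Vector = Sequence[float]


def _cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


class SimpleRouter:
    """Match a query to the route whose example utterances are most similar to it."""

    def __init__(self, routes: dict[str, list[str]], embed: Callable[[str], Vector], threshold: float = 0.75):
        self.embed = embed
        self.threshold = threshold
        # Embed every utterance once, up front; only the query is embedded per request.
        self.index = [(name, embed(u)) for name, utterances in routes.items() for u in utterances]

    def __call__(self, query: str) -> Optional[str]:
        q = self.embed(query)
        name, score = max(((n, _cosine(q, v)) for n, v in self.index), key=lambda pair: pair[1])
        # Below the threshold, no task prompt applies and the caller uses the default.
        return name if score >= self.threshold else None
```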

Semantic routing is fast and, with enough utterances, very good at mapping a user’s message/query to the correct route. The process can be enhanced further with fine-tuning, but to keep this article concise I will leave that for another time.

Once the topic is chosen, the matching prompt is selected and used to craft the message sent to the LLM. This helps prevent the LLM from giving the user wrong, inaccurate, or malicious answers and keeps the inferences coherent and concise.

By combining Document Retrieval and Semantic Routing with a RAG approach, the code can handle various types of questions while relying little on the LLM’s trained knowledge.

The combination of these three approaches allows our app to perform all the PR tasks defined for our POC, along with related tasks, from straightforward definitions and inquiries to requests for detailed explanations, adapting its responses to the interaction history, the data provided, and the user’s requests.

Now that our app logic is complete, all we have to do is put a UI on it. I am not a front-end guy, so for this I am using ‘streamlit’, which I was told is a super fast and easy framework for building and deploying web apps using simple Python code.

This money-making scheme is in the bag!

App UI development:

Okay! So apparently, Streamlit is great for building simple apps, but it becomes extremely difficult to work with once you account for all the optimization techniques I applied to the file ingestion part of my code. Apparently, Streamlit does not like multiprocessing, so if you want to run the app, you’ll need to process your documents first.

After much refactoring and testing, the app is finally up and running! The UI is simple and pretty much a nod to current chatbots in production today.

The app is simple: if you have Streamlit secrets set, including your API keys, they are loaded automatically; if not, you’ll be asked to submit them. Once that is done, the first step is to select the model to use. GPT-4 is by far the best out there, but in my opinion it isn’t needed, since the LLM will mostly rely on the information given; GPT-3 yields great results as well when it comes to delivering on our content-driven requests.

Temperature lets you control how ‘creative’ the LLM’s responses will be. In simple terms, the parameter sets how random the responses are. Because our bot is prompt- and context-driven, I recommend setting it low, close to zero, to reduce the chances of it hallucinating (making stuff up).
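Concretely, temperature T divides the model’s logits before the softmax, p_i = exp(z_i / T) / Σ_j exp(z_j / T). A low T sharpens the distribution toward the most likely token, while a high T flattens it. A small worked example with made-up logits for three candidate tokens:

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities, dividing by the temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive (T -> 0 approaches greedy argmax)")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At T = 0.2 almost all of the probability lands on the first token, which is why low temperatures make answers more repeatable.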

For this exercise, I created some mock data to test the POC app. The first file is a speech generated by ChatGPT on the shortcomings of Public Relations as an industry and what can be done to mitigate them. The second is a chart showing the issues with diversity within PR firms, and the other two are PDF documents summarizing PR demographics and the kinds of services a PR agency usually provides.

The first question uses the context extracted to answer the question but does not trigger any special routing.

```python
SPEECH_WRITING = """
You are a Public Relations Agent working for an agency.
You have been tasked with creating a speech that aligns closely with the sentiment and tone of the client's provided document or brief for an upcoming event or engagement.
Your response should start with a concise summary and utilize bullet points for detailed explanations.
Base your speechwriting on the sentiment and tone found in the provided document, offering a rationale for each aspect of the tone you decide to mirror in the speech:
Your response should always be in Markdown format.
Review the attached Document or Brief.
Identify the predominant sentiment and tone conveyed in the document and how they align with the client's objectives.
Add 3-5 ways to ensure the speech reflects the document's sentiment and tone, emphasizing emotional resonance and audience connection.
Maintain consistency in tone throughout the speech while effectively conveying the key messages.
Leverage the document's tone to enhance audience engagement and message retention.
IF the Document or Brief includes it:
Consider how the document's tone fits with the client's usual communication style and propose adaptations for authenticity in the speech.

Public Relations (PR) Documents:

{document}
"""
```

The user’s message routed the question to a new prompt, shown above, with detailed instructions on how to proceed with the speech writing. This triggers relevant context extraction, and with this information the LLM proceeds to complete the task as instructed.
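Putting routing and prompting together, the selected template is filled with the retrieved context through its `{document}` placeholder and sent along with the chat history. This is a minimal sketch, assuming a hypothetical `PROMPTS` registry (shortened stand-ins for templates like SPEECH_WRITING), a generic fallback prompt for unrouted questions, and the common role/content chat-message format; it is not the repo’s code.

```python
from typing import Optional

# Hypothetical, shortened stand-ins for the task prompts; each has a {document} slot.
PROMPTS = {
    "speech_writing": "You are a Public Relations Agent...\n\nPublic Relations (PR) Documents:\n\n{document}\n",
    "press_release": "You are a Public Relations Agent reviewing a press release...\n\n{document}\n",
}
DEFAULT_PROMPT = "Answer the question using only the documents below.\n\n{document}\n"


def build_messages(route: Optional[str], context: str, history: list[dict], question: str) -> list[dict]:
    """Pick the task prompt for the route (or the generic RAG prompt) and assemble chat messages."""
    template = PROMPTS.get(route, DEFAULT_PROMPT)  # unknown or None routes fall back
    system = template.format(document=context)    # braces inside `context` are not re-parsed
    return [{"role": "system", "content": system}, *history, {"role": "user", "content": question}]
```

Keeping the task instructions in the system message and the retrieved documents inside the same template means a route change swaps the whole instruction set without touching the chat history.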

Conclusion:

The journey from a moment of existential dread to the creation of a marketable AI-driven service for the Public Relations industry exemplifies not just resilience but a forward-thinking approach to the inevitable integration of advanced AI technologies in professional fields.

This article briefly touched on multiple frameworks used to build an AI-powered chatbot app tailored for PR professionals, leveraging methodologies like Retrieval Augmented Generation (RAG), Semantic Routing, and prompt-driven feedback.

This approach to document ingestion, preprocessing, storage, and retrieval, culminating in a user-friendly application, showcases the potential for AI to transform and streamline the use of LLMs across any industry by providing nuanced, contextually relevant responses and insights.

The advantage of this architecture is that, instead of relying on LLM calls to drive the application, it handles data extraction and task selection with semantic search, significantly reducing API costs. However, I want to stress that this is just a simple POC and by no means an application ready for production. Further work could improve its capabilities, usability, latency, and cost.

For example, we could add an ‘index table’ to the vector store holding metadata for each document, such as file name, type, and a summary of the content. This would let the application first identify which of the user’s documents are most closely related to the request, narrowing down the document retrieval that follows.
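That index table amounts to a two-stage search: rank documents by an embedding of their summary first, then search chunks only within the best-matching documents. A sketch with hypothetical in-memory records (`DocEntry`, `ChunkEntry`) and precomputed vectors; in LanceDB this could plausibly be a second table whose results filter the chunk query, but that wiring is not shown here.

```python
import math
from dataclasses import dataclass


@dataclass
class DocEntry:
    file_name: str
    file_type: str
    summary_vector: list[float]  # embedding of the document's summary


@dataclass
class ChunkEntry:
    file_name: str               # links the chunk back to its DocEntry
    text: str
    vector: list[float]


def _cos(a: list[float], b: list[float]) -> float:
    norm = math.hypot(*a) * math.hypot(*b)
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def two_stage_search(query: list[float], docs: list[DocEntry], chunks: list[ChunkEntry],
                     n_docs: int = 1, k: int = 2) -> list[ChunkEntry]:
    # Stage 1: keep only the documents whose summaries best match the query.
    best = sorted(docs, key=lambda d: _cos(query, d.summary_vector), reverse=True)[:n_docs]
    allowed = {d.file_name for d in best}
    # Stage 2: rank only the chunks that belong to those documents.
    candidates = [c for c in chunks if c.file_name in allowed]
    return sorted(candidates, key=lambda c: _cos(query, c.vector), reverse=True)[:k]
```

The trade-off is visible in the filter: a strong chunk inside a document whose summary scored poorly is never considered, so `n_docs` should not be set too low.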

Another example: we could fine-tune mid-size LLMs to learn the domain terminology, simple tasks, and methodologies used in the PR industry instead of relying on ChatGPT.

We could also build a ‘knowledge graph’ that allows for more insightful reasoning and better access to and retrieval of information, getting to the user’s real request without hallucinating or failing to complete it.

This could be done by first identifying the high-level request, determining what information is needed, and then allowing the application to ‘ask’ the user for clarification if needed. This would help get to the user’s core need and generate the answer as a meaningful and safe inference.

Project Repo:

GitHub – cmazzoni87/MessageAssistant


Methods and Stack used:

- Semantic Chunking Approach: Provides a method to break down text into related chunks, enhancing data retrieval efficiency: Semantic Chunking Tutorial on GitHub

- LanceDB: Used for document retrieval, ensuring contextually relevant responses

- Streamlit: A free and open-source framework for rapidly building and sharing machine learning and data science web apps

- LangChain: Leading framework for building AI/LLM apps

- Semantic-Router: Decision-making layer using semantic vector space to determine the ‘route’ or pre-defined keyword search.
