AlexNet: Implementation from Scratch

Last Updated on July 17, 2023 by Editorial Team

Author(s): Muhammad Arham

Originally published on Towards AI.

A PyTorch series for people starting with deep learning, following an implementation-based approach to various well-known architectures.

Introduction

The AlexNet architecture was a breakthrough at the time of its publication, achieving state-of-the-art results on the ImageNet classification task. It uses a sequence of convolutional blocks followed by fully connected layers for classification. In this article, we walk through the architecture and implement it in PyTorch.

Architecture

The flowchart shows the basic outline of the process.

The input is an RGB image with 3 color channels and a width and height of 227 pixels. It is passed through a series of convolutional blocks consisting of convolutional, ReLU, normalization, and pooling layers. The output is then flattened to a one-dimensional array and passed through several dense layers. The result is a one-dimensional vector whose length equals the total number of classes.

The exact dimensions and details are taken directly from the AlexNet paper.

The pooling layers reduce the spatial size of the input, and the convolutional filters change the number of channels. The output of the convolutional blocks is a (256, 6, 6) tensor that is flattened into a single vector of 256 x 6 x 6 = 9216 elements. This vector is passed through two dense layers of 4096 neurons each. The last fully connected layer reduces the output to the number of possible classes.

Convolutional Layers

Looking inside the convolutional part of the network, there are 5 blocks, each composed of similar layers.

The input to the first block is an RGB image with 3 color channels. Each block processes the input and passes the output to the next block in a sequential manner. The output sizes of each block are shown in the image.

Convolutional Block

The flowchart shows the layers each block is composed of.

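Each block except 3 and 4 has the above structure. Blocks 3 and 4 have no normalization or pooling layers at the end. Note that in the paper the fifth convolutional layer is followed by pooling only; this implementation also applies normalization there.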

Convolutional Sizes

Each block has different sizes of convolutional layers. The table summarizes the sizes used in the paper. Each convolutional layer uses a ReLU activation that is shown explicitly.
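As a rough check of these sizes, the sketch below traces the tensor shape through the five blocks using the standard output-size formula for convolution and pooling. The configuration values mirror the block arguments used in the implementation later in this article; the helper names `conv_output_size` and `trace_shapes` are made up for illustration.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


# (out_channels, kernel, stride, padding, pool_and_norm), mirroring the
# block arguments used in the implementation in this article.
BLOCKS = [
    (96, 11, 4, 0, True),
    (256, 5, 1, 2, True),
    (384, 3, 1, 1, False),
    (384, 3, 1, 1, False),
    (256, 3, 1, 1, True),
]


def trace_shapes(input_size=227):
    """Return the (channels, height, width) output shape of every block."""
    shapes = []
    size = input_size
    for out_channels, kernel, stride, padding, pool in BLOCKS:
        size = conv_output_size(size, kernel, stride, padding)
        if pool:
            # Overlapping max pooling: kernel size 3, stride 2, no padding.
            size = conv_output_size(size, kernel=3, stride=2)
        shapes.append((out_channels, size, size))
    return shapes


shapes = trace_shapes()
for i, shape in enumerate(shapes, start=1):
    print(f"block {i}: {shape}")

channels, height, width = shapes[-1]
print("flattened:", channels * height * width)
```

The last block yields (256, 6, 6), which matches the 9216-element vector fed to the first dense layer.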

Normalization

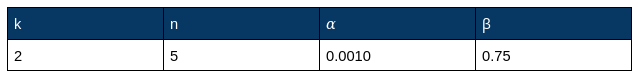

The paper uses local response normalization (LocalResponseNorm in PyTorch), which helps generalization and, as per the paper, improves performance on the classification task. For the normalization hyperparameters, they use the following values: size n = 5, alpha = 0.0001, beta = 0.75, and k = 2.

Refer to the documentation to learn about what these parameters represent.
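To make the roles of these parameters concrete, here is a minimal plain-Python sketch of the normalization at a single spatial position. In the paper, each activation is divided by (k + alpha * sum of squares) raised to the power beta, where the sum runs over a window of neighboring channels. As far as the PyTorch documentation for nn.LocalResponseNorm describes it, PyTorch additionally divides alpha by the window size; the `scale_alpha_by_size` flag is a hypothetical switch added here only to illustrate that difference. This is an illustration, not PyTorch's implementation.

```python
def local_response_norm(activations, size=5, alpha=1e-4, beta=0.75, k=2.0,
                        scale_alpha_by_size=False):
    """Normalize one spatial position across channels.

    activations: list of floats, one value per channel at a fixed (x, y).
    """
    n_channels = len(activations)
    half = size // 2
    # Assumption: optionally mimic the alpha / size scaling mentioned above.
    eff_alpha = alpha / size if scale_alpha_by_size else alpha
    normalized = []
    for i, a in enumerate(activations):
        # Window of neighboring channels, clipped at the channel boundaries.
        lo = max(0, i - half)
        hi = min(n_channels - 1, i + half)
        sum_sq = sum(v * v for v in activations[lo:hi + 1])
        normalized.append(a / (k + eff_alpha * sum_sq) ** beta)
    return normalized


values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]  # made-up channel activations
print(local_response_norm(values))
```

Channels with large neighbors are scaled down more strongly, which is the competition between adjacent feature maps that the parameters control.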

Pooling Layers

The paper proposes overlapping pooling, where the stride is smaller than the kernel size. Experimentally, overlapping pooling (stride 2, kernel size 3) reduced the top-1 and top-5 error rates by 0.4% and 0.3% relative to non-overlapping pooling (stride 2, kernel size 2). Therefore, all pooling layers in the convolutional blocks use stride 2 and kernel size 3.
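The difference between the two schemes is easiest to see in one dimension. The sketch below is a toy pure-Python max pool (the function name `max_pool_1d` and the sample row are made up for illustration) that compares kernel size 3 with stride 2 against kernel size 2 with stride 2.

```python
def max_pool_1d(values, kernel_size, stride):
    """Max pooling over a 1-D list with no padding; incomplete windows are dropped."""
    n_out = (len(values) - kernel_size) // stride + 1
    return [max(values[i * stride:i * stride + kernel_size]) for i in range(n_out)]


row = [1, 5, 2, 4, 3, 0, 6]

# Overlapping: windows [1, 5, 2], [2, 4, 3], [3, 0, 6] share their edge elements.
overlapping = max_pool_1d(row, kernel_size=3, stride=2)

# Non-overlapping: windows [1, 5], [2, 4], [3, 0]; the trailing 6 is dropped.
non_overlapping = max_pool_1d(row, kernel_size=2, stride=2)

print(overlapping)      # [5, 4, 6]
print(non_overlapping)  # [5, 4, 3]
```

With overlap, every input element is covered by at least one window, and elements on window borders contribute to two outputs.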

Implementation

We start our implementation with the convolutional block structure, which generalizes to all 5 blocks. This keeps the code modular and avoids duplication when implementing the complete AlexNet architecture.

    import torch.nn as nn


    class AlexNetBlock(nn.Module):
        """Conv -> ReLU, optionally followed by LocalResponseNorm -> MaxPool."""

        def __init__(
            self,
            in_channels,
            out_channels,
            kernel_size,
            stride,
            padding,
            pool_and_norm: bool = True,
        ) -> None:
            super().__init__()
            self.conv_layer = nn.Conv2d(
                in_channels, out_channels, kernel_size, stride, padding)
            self.relu = nn.ReLU()
            self.pool_and_norm = pool_and_norm

            if pool_and_norm:
                # Local response normalization with the hyperparameters from the paper
                self.norm_layer = nn.LocalResponseNorm(
                    size=5, alpha=0.0001, beta=0.75, k=2)
                # Overlapping pooling: kernel size (3) larger than stride (2)
                self.pool_layer = nn.MaxPool2d(stride=2, kernel_size=3)

        def forward(self, x):
            x = self.conv_layer(x)
            x = self.relu(x)
            if self.pool_and_norm:
                x = self.norm_layer(x)
                x = self.pool_layer(x)
            return x

The block receives the parameters for its convolutional layer. In addition, it takes a pool_and_norm boolean that is set to False for blocks 3 and 4. This block can then be reused in the complete AlexNet architecture.
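A quick way to sanity-check such a block is to push a dummy tensor through it and inspect the output shape. So that the snippet runs on its own, the sketch below rebuilds the first block with nn.Sequential instead of importing the class above; the layer settings follow block 1 of this article, and the random input stands in for a real image.

```python
import torch
from torch import nn

# Stand-in for the first block, written with nn.Sequential so that this
# snippet is self-contained; settings follow block 1 of the article.
block1 = nn.Sequential(
    nn.Conv2d(3, 96, kernel_size=11, stride=4, padding=0),
    nn.ReLU(),
    nn.LocalResponseNorm(size=5, alpha=0.0001, beta=0.75, k=2),
    nn.MaxPool2d(kernel_size=3, stride=2),
)

dummy = torch.randn(1, 3, 227, 227)  # one random "image", not real data
with torch.no_grad():
    out = block1(dummy)

print(out.shape)  # torch.Size([1, 96, 27, 27])
```

The convolution maps 227 to 55 pixels per side and the pooling layer maps 55 to 27, while normalization leaves the shape unchanged.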

The code below shows the complete AlexNet model, which uses the AlexNetBlock class.

    class AlexNet(nn.Module):
        def __init__(self, num_classes, in_channels) -> None:
            super().__init__()
            # Arguments: in_channels, out_channels, kernel_size, stride, padding
            self.block1 = AlexNetBlock(
                in_channels, 96, 11, 4, 0, pool_and_norm=True)
            self.block2 = AlexNetBlock(96, 256, 5, 1, 2, pool_and_norm=True)
            self.block3 = AlexNetBlock(256, 384, 3, 1, 1, pool_and_norm=False)
            self.block4 = AlexNetBlock(384, 384, 3, 1, 1, pool_and_norm=False)
            self.block5 = AlexNetBlock(384, 256, 3, 1, 1, pool_and_norm=True)

            self.flatten = nn.Flatten()
            self.relu = nn.ReLU()
            self.fc1 = nn.Linear(256 * 6 * 6, 4096)
            self.dropout1 = nn.Dropout(0.5)
            self.fc2 = nn.Linear(4096, 4096)
            self.dropout2 = nn.Dropout(0.5)
            self.classification_layer = nn.Linear(4096, num_classes)

        def forward(self, x):
            x = self.block1(x)
            x = self.block2(x)
            x = self.block3(x)
            x = self.block4(x)
            x = self.block5(x)
            x = self.flatten(x)

            # ReLU after each hidden dense layer, as in the paper; without it
            # the two linear layers would have no non-linearity between them.
            x = self.dropout1(self.relu(self.fc1(x)))
            x = self.dropout2(self.relu(self.fc2(x)))

            # Raw class scores (logits); no softmax is applied here.
            x = self.classification_layer(x)
            return x

As per the flowchart, we create 5 AlexNet blocks with the configuration described. We then pass the input sequentially through all the layers until we obtain a one-dimensional array with one score per class. These are raw scores (logits); applying a softmax turns them into class probabilities.
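The final linear layer produces raw scores rather than normalized probabilities, which is also the form PyTorch's nn.CrossEntropyLoss expects as input. To read the scores as probabilities, a softmax can be applied afterwards. A minimal plain-Python sketch, with made-up scores for three classes:

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 0.5, -1.0]  # made-up scores for three classes
probs = softmax(logits)
predicted_class = probs.index(max(probs))

print(probs)
print("predicted class:", predicted_class)
```

The predicted class is the same whether it is read from the raw scores or from the probabilities, since softmax preserves their ordering.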

For this implementation, AlexNet takes two initialization parameters. One is the number of input channels, which is three for RGB images. The other is the total number of classes, which defines the output size. In the paper, the value used was 1000 for the ImageNet task. For my implementation, I use the CIFAR-10 dataset, so I set it to ten.
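One practical detail: CIFAR-10 images are 32 x 32 pixels, while the first dense layer above assumes a 227 x 227 input, so the images need to be resized before they reach the network. The sketch below shows one possible way to do that on a random stand-in batch. Bilinear upsampling with `torch.nn.functional.interpolate` is an assumption made for illustration; the article does not state which resizing method was used.

```python
import torch
import torch.nn.functional as F

# Random stand-in for a batch of four 32x32 RGB images (CIFAR-10 sized).
batch = torch.rand(4, 3, 32, 32)

# Assumption: bilinear upsampling to 227x227; the article does not say
# which resizing method was used.
resized = F.interpolate(
    batch, size=(227, 227), mode="bilinear", align_corners=False)

print(resized.shape)  # torch.Size([4, 3, 227, 227])
```

In a real pipeline the same resize would more commonly be applied as a dataset transform, before batching.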

The image below summarizes the AlexNet architecture.

Conclusion

The above architecture can be trained from scratch for a classification task. I trained it on the CIFAR-10 dataset for ten epochs. The graph below shows the loss progression.

Over just ten epochs, the cross-entropy loss decreases gradually, which suggests the model can do well on simple classification tasks.
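The training code itself is not reproduced in this article. As a rough illustration of what such a loop looks like, the sketch below runs a few optimization steps with cross-entropy loss. Everything in it is a stand-in: a tiny linear classifier replaces AlexNet so the snippet runs in a moment, random tensors replace CIFAR-10, and the optimizer and learning rate are assumptions rather than the settings used for the graph above.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-in classifier so the sketch runs quickly on its own;
# in practice this would be the AlexNet model from this article.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10))
criterion = nn.CrossEntropyLoss()  # expects raw scores and integer labels
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)  # assumed settings

# Random tensors in place of a real data loader.
images = torch.randn(16, 3, 8, 8)
labels = torch.randint(0, 10, (16,))

losses = []
for step in range(20):
    optimizer.zero_grad()                     # clear gradients from the last step
    loss = criterion(model(images), labels)   # forward pass and loss
    loss.backward()                           # backpropagate
    optimizer.step()                          # update the weights
    losses.append(loss.item())

print(f"first loss: {losses[0]:.3f}, last loss: {losses[-1]:.3f}")
```

A real run would iterate over mini-batches from a data loader for several epochs and track the loss on held-out data as well.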

The article only shows code snippets for understanding. For complete implementation and training code, refer to my GitHub repo. For a detailed understanding of the architecture, consider reading the paper.
