Preference Alignment

Last Updated on February 18, 2025 by Editorial Team

Author(s): Sarvesh Khetan

Originally published on Towards AI.

Reinforcement Learning with Human Feedback (RLHF)

Table of Contents

- Prerequisites

- Method 1: Preference Alignment using Reinforcement Learning with Human Feedback (RLHF) Algorithm

  2.a. LLM as Reinforcement Learning Agent

  2.b. Reward Modelling using Supervised Learning: Preference Model / Bradley-Terry Model / Reward Model

- Method 2: Preference Alignment using Supervised Learning with Human Feedback: Direct Preference Optimization (DPO) Algorithm

Prerequisite

Please read my Medium article on Policy Gradient methods for Deep Reinforcement Learning, which builds an agent that can play the Ping Pong game. (Actor-Critic Reinforcement Learning methods would work as well; in fact, the original paper implemented Actor-Critic and not Policy Gradient.)

Preference Alignment using Reinforcement Learning with Human Feedback (RLHF)

Researchers came up with a simple idea: finetune the LLM using Reinforcement Learning so that it receives a low reward when it generates a response that does not align with human preferences and a high reward when the response does align with them.

So first we will have to draw parallels between an LLM and RL!

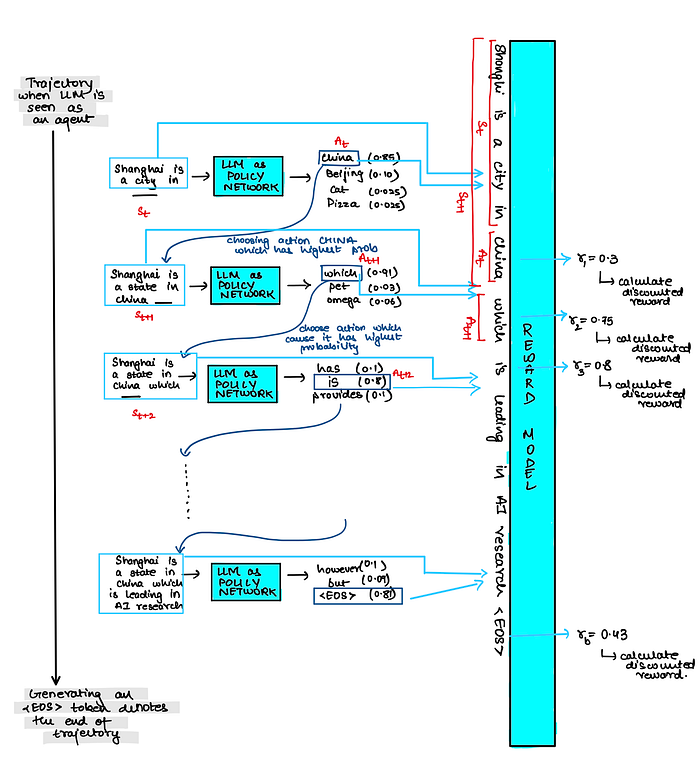

LLM as a Reinforcement Learning (RL) Agent

Hence we can see that an LLM can be thought of as a Reinforcement Learning agent with

- the input text as the State,

- the predicted next word as the Action,

- and the LLM itself as the Policy Network.
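The mapping above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `VOCAB` and `toy_policy_logits` are hypothetical stand-ins for a real tokenizer vocabulary and a real LLM forward pass.

```python
import math
import random

# Hypothetical toy vocabulary; a real LLM has tens of thousands of tokens.
VOCAB = ["Shanghai", "is", "a", "city", "in", "China", "<eos>"]

def toy_policy_logits(state):
    """Stand-in for the LLM forward pass: one score per vocabulary token.

    This toy version only looks at the state length; a real policy
    network conditions on the token contents.
    """
    step = len(state)
    return [1.0 if i == step % len(VOCAB) else 0.0 for i in range(len(VOCAB))]

def softmax(logits):
    """Turn scores into a probability distribution over actions."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_action(state, rng):
    """Sample the next token (the action) from pi(action | state)."""
    probs = softmax(toy_policy_logits(state))
    return rng.choices(VOCAB, weights=probs, k=1)[0]

def rollout(prompt_tokens, max_new_tokens, rng):
    """Generate one trajectory: every action is appended to the state."""
    state = list(prompt_tokens)
    actions = []
    for _ in range(max_new_tokens):
        action = sample_action(state, rng)
        actions.append(action)
        state.append(action)  # next state = previous state + chosen token
        if action == "<eos>":
            break
    return state, actions

final_state, actions = rollout(["Where", "is", "Shanghai", "?"], 5, random.Random(0))
```

Note that the environment here is trivial: the next state is just the old state with the chosen token appended, which is what makes text generation fit the RL framing so directly.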

But one important thing is missing from this RL setup: REWARDS! We need a reward model to tell the LLM whether the output it generated (the next token) was good or bad, and this judgment should be in line with human preferences. Hence there was a need for a REWARD Model (we will see later the architecture diagram for this reward model and how to train it).

Scoring every generated token separately is a highly inefficient way to use the reward model, since it requires running inference on the reward model many times for a single trajectory. Researchers therefore came up with a new approach that produces all the rewards in one go.

Once we have this Reward Model, we use it to finetune our LLM and nudge it toward responses that humans prefer. Any RL algorithm can be used for this step, for example PPO, TRPO, or GRPO.
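As a rough sketch of how a reward can drive the finetuning, the snippet below uses the plain policy-gradient (REINFORCE) surrogate loss from the prerequisite article, not PPO, TRPO, or GRPO. It assumes the reward model returns a single scalar for the complete response; `toy_reward_model` and the per-token probabilities are made up for illustration.

```python
import math

def toy_reward_model(prompt, response):
    """Hypothetical stand-in for a trained reward model.

    Returns one scalar for the whole response; this toy version simply
    prefers responses that mention 'China'.
    """
    return 1.0 if "China" in response else -1.0

def reinforce_loss(token_log_probs, sequence_reward, baseline=0.0):
    """Policy-gradient surrogate loss for one sampled response.

    Minimizing it raises the log-probability of the response when the
    advantage is positive and lowers it when the advantage is negative.
    """
    advantage = sequence_reward - baseline
    return -advantage * sum(token_log_probs)

prompt = "Where is Shanghai?"
response = "Shanghai is a city in China"

# Assumed probabilities the current policy assigned to each generated token.
token_probs = [0.5, 0.9, 0.8, 0.7, 0.9, 0.6]
token_log_probs = [math.log(p) for p in token_probs]

reward = toy_reward_model(prompt, response)  # one call for the full trajectory
loss = reinforce_loss(token_log_probs, reward)
```

In a real setup the log-probabilities would come from the LLM and the loss would be backpropagated through it; algorithms such as PPO add clipping and a KL penalty on top of this basic idea.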

Reward Modelling Using Supervised Learning — Preference Model / Bradley-Terry Model / Reward Model

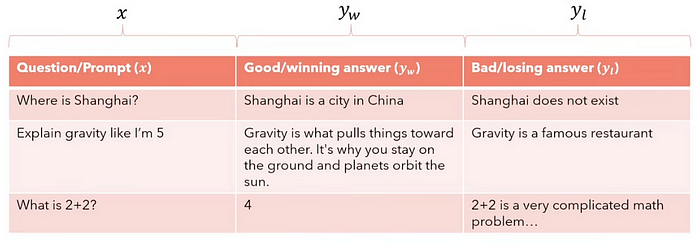

The reward model is a model that scores an LLM output the way humans would have scored that same output. Since we train this reward model with supervised learning, we need to create a dataset which looks as follows

In the above dataset we need to provide ground-truth rewards, and we will use human annotators to create them. But there is an issue here:

- One person might assign the output ‘Shanghai is a city in China’ a high reward (say 0.95) because it is to the point

- While another person might assign the same output a low reward (say 0.4) because it is a very short answer

Hence we can conclude that it is hard to rely on humans for a correct numeric score, because such scores can be very noisy. To solve this issue, instead of asking humans for a numeric score, we ask them only for a comparison, i.e. humans provide a relative, non-numeric ranking such as s1 > s2. (As shown in the figure below.)

Another way of representing the same dataset is as follows. It is called a Preference Dataset, since one answer is preferred over another.

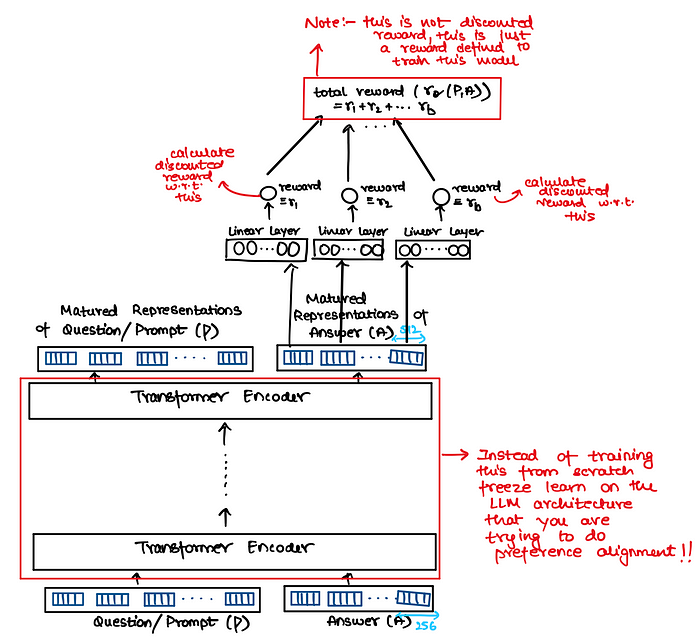

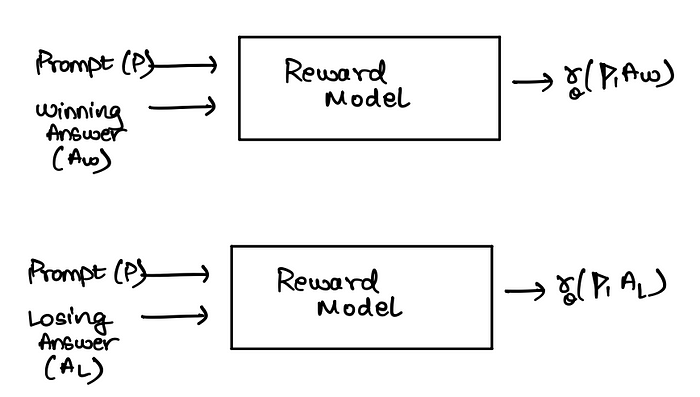
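One way to picture such a preference dataset in code is as a list of (prompt, chosen, rejected) records. The sketch below is an assumption about the layout, not the article's exact format: the field names `prompt`, `chosen`, and `rejected` and the example ranking are made up for illustration.

```python
from itertools import combinations

def ranking_to_pairs(prompt, ranked_responses):
    """Expand one human ranking into pairwise preference records.

    ranked_responses is ordered best first, so every earlier response is
    preferred over every later one.
    """
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        for better, worse in combinations(ranked_responses, 2)
    ]

# Hypothetical annotator ranking for a single prompt (best first).
pairs = ranking_to_pairs(
    "Where is Shanghai?",
    [
        "Shanghai is a city in China",
        "Shanghai is a city",
        "I do not know",
    ],
)

for record in pairs:
    print(record["chosen"], ">", record["rejected"])
```

A ranking of n responses yields n(n-1)/2 pairs, so a handful of ranked answers per prompt already produces many training comparisons.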

Now that we have a dataset of preferences, we need a way to convert these preferences into a numeric score (reward). We will use a reward model as shown below to do this.

Now the question is: how do we train the above model architecture? We need to train the model with the objective that rewards for winning answers (answers chosen by the human annotators) are higher than rewards for losing answers (answers not chosen by the human annotators). This objective can be translated into a probability-based objective as follows: the probability of the winning answer should be higher than the probability of the losing answer.

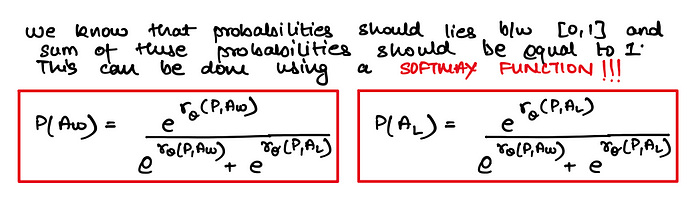

Now the question is: how do we convert these rewards into the probability of the winning answer and the probability of the losing answer?

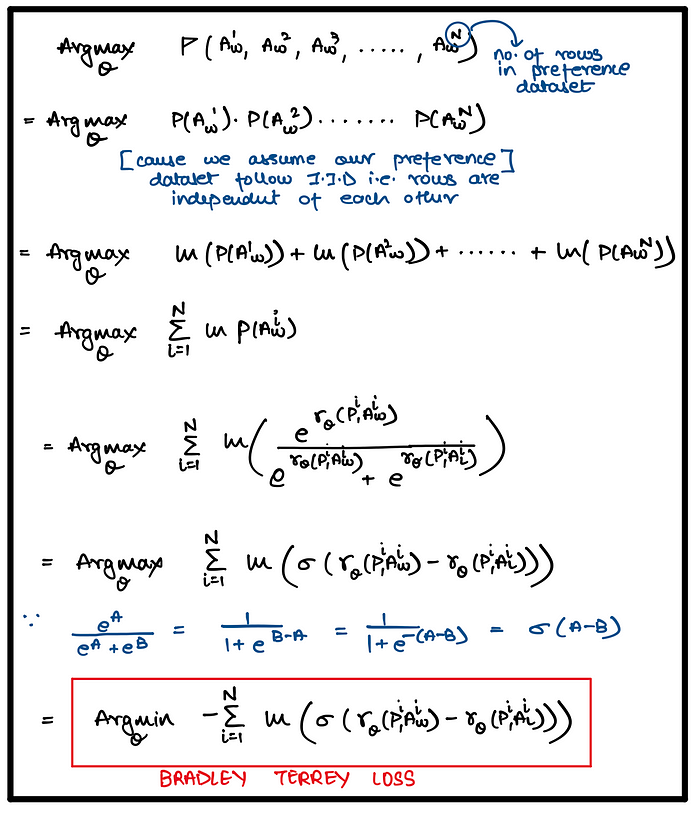
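For reference, the standard Bradley-Terry form of this conversion can be written as below. The symbols are assumptions introduced here: $x$ is the prompt, $y_w$ and $y_l$ are the winning and losing answers, $r(x, y)$ is the scalar output of the reward model, and $\sigma$ is the sigmoid function.

$$
P(y_w \succ y_l \mid x) = \frac{e^{r(x, y_w)}}{e^{r(x, y_w)} + e^{r(x, y_l)}} = \sigma\big(r(x, y_w) - r(x, y_l)\big),
\qquad
P(y_l \succ y_w \mid x) = 1 - P(y_w \succ y_l \mid x)
$$

Only the difference between the two rewards matters: with rewards 2.0 and 0.5, for example, the winning probability is $\sigma(1.5) \approx 0.82$.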

As decided earlier, our objective is to maximize the probability of the winning answer, which can be written mathematically as follows
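In its usual form, maximizing the winning probability is the same as minimizing the negative log-likelihood $\mathcal{L} = -\mathbb{E}\left[\log \sigma\big(r(x, y_w) - r(x, y_l)\big)\right]$. A minimal sketch of that loss, assuming the rewards have already been computed by some reward model (the function and variable names are illustrative):

```python
import math

def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def bradley_terry_loss(reward_pairs):
    """Mean negative log-likelihood over (winner_reward, loser_reward) pairs."""
    losses = [-log_sigmoid(r_w - r_l) for r_w, r_l in reward_pairs]
    return sum(losses) / len(losses)

# Made-up rewards: the first pair is ranked correctly, the second is not.
well_ordered = bradley_terry_loss([(2.0, 0.5)])
badly_ordered = bradley_terry_loss([(0.5, 2.0)])
undecided = bradley_terry_loss([(0.0, 0.0)])  # equals log(2)
```

The loss shrinks as the winning reward moves above the losing reward and grows when the order is wrong, which is the training signal the reward model needs.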

Preference Alignment Using Supervised Learning with Human Feedback — Direct Preference Optimization (DPO)

DPO Loss Function

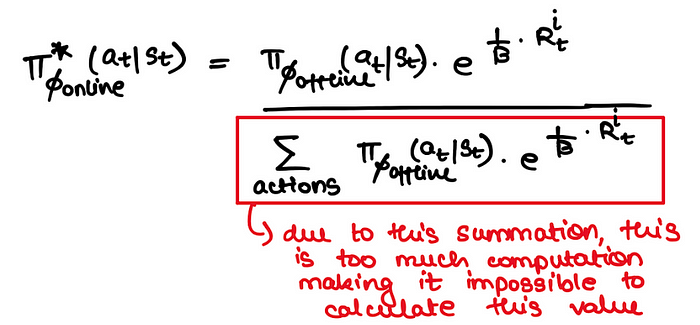

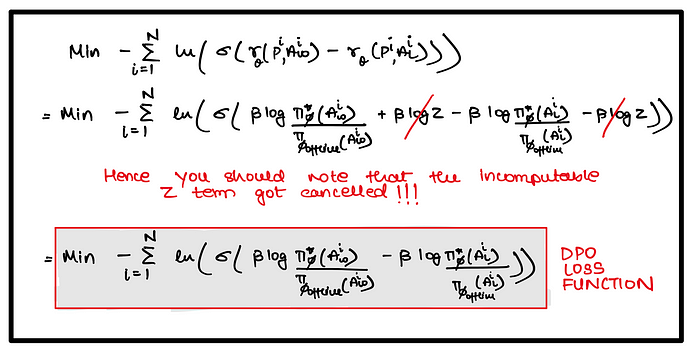

Let’s say we use the TRPO algorithm to train the RL setup above. We have proved separately that this TRPO optimization problem has an exact solution, i.e.

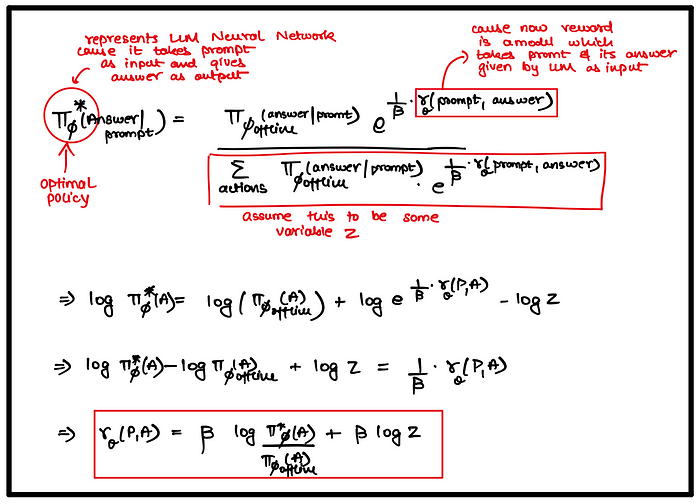

This exact solution cannot be computed in practice due to resource constraints. But assume that we somehow, magically, have access to the optimal policy: what would the reward function for this optimal policy look like?
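A sketch of the answer, assuming the exact solution has the closed form used in the DPO paper. The notation is an assumption: $\pi_{\text{ref}}$ is the reference (starting) policy, $\beta$ is the strength of the KL constraint, and $Z(x)$ is the normalizing constant whose sum over all possible responses is what makes the solution intractable.

$$
\pi^*(y \mid x) = \frac{1}{Z(x)} \, \pi_{\text{ref}}(y \mid x) \, \exp\!\left(\frac{1}{\beta} r(x, y)\right),
\qquad
Z(x) = \sum_{y} \pi_{\text{ref}}(y \mid x) \, \exp\!\left(\frac{1}{\beta} r(x, y)\right)
$$

Taking the logarithm of both sides and solving for the reward gives

$$
r(x, y) = \beta \log \frac{\pi^*(y \mid x)}{\pi_{\text{ref}}(y \mid x)} + \beta \log Z(x)
$$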

Now if we substitute this back into the Bradley-Terry loss derived above, we get the following:

Hence, we can conclude that our LLM is secretly our reward model itself: the loss that was meant to train the reward model is now written entirely in terms of the LLM policy, so minimizing it optimizes the LLM directly.
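As a sketch of this substitution, assume a reward of the form $r(x, y) = \beta \log \frac{\pi_\theta(y \mid x)}{\pi_{\text{ref}}(y \mid x)} + \beta \log Z(x)$, with $\pi_\theta$ the LLM being trained in place of the optimal policy and $\pi_{\text{ref}}$, $\beta$, $Z(x)$ as in the DPO paper's notation. The Bradley-Terry loss depends only on the difference $r(x, y_w) - r(x, y_l)$, so the intractable $\beta \log Z(x)$ term cancels:

$$
\mathcal{L}_{\text{DPO}}(\theta) = -\,\mathbb{E}_{(x,\, y_w,\, y_l)}\left[\log \sigma\!\left(\beta \log \frac{\pi_\theta(y_w \mid x)}{\pi_{\text{ref}}(y_w \mid x)} - \beta \log \frac{\pi_\theta(y_l \mid x)}{\pi_{\text{ref}}(y_l \mid x)}\right)\right]
$$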

DPO Algorithm

- Unlike traditional RLHF methods, which require a separate reward model and complex reinforcement learning, DPO finetunes the LLM directly on preference data by treating the task as a classification problem (binary cross-entropy loss). Hence its name: Direct Preference Optimization!

- DPO simplifies the process by defining a loss function that directly optimizes the model’s policy based on preferred vs non-preferred outputs.
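To make the classification view concrete, here is a minimal sketch of the per-example DPO loss. It assumes the summed log-probabilities of each full response under the trained policy and under a frozen reference model have already been computed; the argument names and the default `beta=0.1` are illustrative choices, not values from the article.

```python
import math

def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Binary cross-entropy on the implicit reward margin for one pair."""
    # Implicit rewards (up to a shared constant): beta * log(pi / pi_ref).
    chosen_logratio = policy_logp_chosen - ref_logp_chosen
    rejected_logratio = policy_logp_rejected - ref_logp_rejected
    margin = beta * (chosen_logratio - rejected_logratio)
    return -log_sigmoid(margin)

# Before training the policy equals the reference, so the loss is log(2).
start = dpo_loss(-5.0, -7.0, -5.0, -7.0)

# After the policy shifts probability toward the chosen answer, the loss drops.
improved = dpo_loss(-4.0, -8.0, -5.0, -7.0)
```

No reward model and no sampling loop appear anywhere in this computation, which is the practical simplification DPO offers over the RLHF pipeline.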

