Meta Learners: Measuring Treatment Effects with Causal Machine Learning

Last Updated on September 27, 2024 by Editorial Team

Author(s): Hajime Takeda

Originally published on Towards AI.

TL;DR: In recent years, algorithms combining causal inference and machine learning have been a hot topic. In my previous article, I discussed the basics of Causal Machine Learning. This article explains meta-learners and their underlying algorithms. Finally, I will demonstrate a case study using EconML with data from a social experiment.

1. What is a Treatment Effect?

Before diving deeper, let’s quickly recap the key concepts from the previous article. Causal inference focuses on determining whether there is a cause-and-effect relationship between a specific action (the “treatment”) and its result (the “outcome”).

In e-commerce or marketing, “treatments” refer to actions like sending coupons, running advertisements, or launching direct mail campaigns. The “outcomes” could be metrics such as sales or purchase rates.

Meta-learners are designed to measure the treatment effect, but what does that actually mean? To define the treatment effect, we turn to Donald Rubin’s Potential Outcomes Framework, which is conceptually straightforward.

For example, if the potential purchase rate with a coupon is 20% and the purchase rate without the coupon is 10%, the treatment effect is 10 percentage points, calculated as the difference between 20% and 10%.

💡 Treatment Effect: The difference in outcomes caused by a treatment between a group that receives the treatment and one that does not.

2. Types of Treatment Effects

Now, let’s explore the three types of treatment effects we’ll focus on today:

- Average Treatment Effect (ATE): This is the most common metric, measuring the effect across all customers.

- Conditional Average Treatment Effect (CATE): This measures the effect within specific segments, such as males, females, or people in their 30s.

- Individual Treatment Effect (ITE): This calculates the effect at the individual level.
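To make the three definitions concrete, here is a minimal sketch with made-up numbers. It assumes we could see both potential outcomes for five hypothetical customers, which is never the case in real data. The arrays `y0`, `y1`, and `age_group` are illustrative assumptions, not values from any dataset.

```python
import numpy as np

# Hypothetical purchase probabilities for five customers.
# y0: without a coupon, y1: with a coupon (both known only in this toy setup).
age_group = np.array(["20s", "20s", "30s", "30s", "30s"])
y0 = np.array([0.10, 0.20, 0.05, 0.10, 0.05])
y1 = np.array([0.20, 0.25, 0.25, 0.30, 0.20])

# ITE: effect for each individual customer
ite = y1 - y0

# ATE: average effect across all customers
ate = ite.mean()

# CATE: average effect within a segment
cate_20s = ite[age_group == "20s"].mean()
cate_30s = ite[age_group == "30s"].mean()

print(f"ATE: {ate:.3f}")
print(f"CATE (20s): {cate_20s:.3f}")
print(f"CATE (30s): {cate_30s:.3f}")
```

In this toy example the coupon works better for customers in their 30s than for those in their 20s, which is exactly the kind of difference the ATE alone would hide.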

3. How to Calculate the Treatment Effect

Consider this question: Did a coupon increase sales? To answer this, we look at data from various customers, including attributes like age, gender, and location, as well as information on the treatment (Z) and outcome (Y).

The column “Y1” represents the outcome when the coupon is used, while “Y0” represents the outcome without the coupon. If we had both columns for the same individual, calculating the treatment effect would be as simple as subtracting one from the other. However, the challenge is that we can only observe one outcome for each individual.

To overcome this dilemma, we turn to meta-learners.

Meta-learners provide a framework to estimate these counterfactual values using machine learning techniques. By predicting and filling in the missing values (shown in red) with machine learning, we can estimate the Individual Treatment Effect (ITE).
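The missing-value problem can be illustrated with a small simulation. This is only a sketch under stated assumptions: the data is synthetic, the true effect is fixed at 5, and the coupon is assigned at random (which is why the simple difference in means works here).

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000

# Synthetic potential outcomes (assumption: constant true effect of 5)
y0 = rng.normal(100, 10, n)   # sales without the coupon
y1 = y0 + 5                   # sales with the coupon
z = rng.integers(0, 2, n)     # random coupon assignment (0 or 1)

# What we actually observe: exactly one potential outcome per customer
y1_seen = np.where(z == 1, y1, np.nan)
y0_seen = np.where(z == 0, y0, np.nan)
y_obs = np.where(z == 1, y1, y0)

# No customer has both outcomes observed
n_both = int(np.sum(~np.isnan(y1_seen) & ~np.isnan(y0_seen)))

# With random assignment, the group difference still recovers the average effect
diff_means = y_obs[z == 1].mean() - y_obs[z == 0].mean()

print(f"Customers with both outcomes observed: {n_both}")
print(f"Difference in means: {diff_means:.2f}")
```

When the assignment is not random, this simple difference is no longer reliable, and that is where predicting the missing column with a model becomes useful.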

While this is a simplified example, let’s now dive deeper into the different types of meta-learners.

4. Types of Meta Learners

There are five major methods: S-learner, T-learner, X-learner, DR-learner, and DML. The S-learner is the simplest approach, while DML is the most complex but also a robust approach.

5. S Learner

Concept

The “S” in S-learner stands for “single,” as it relies on a single machine learning model. In this approach, all available data is used to train one model that predicts outcomes for both treated and untreated scenarios. The machine learning model used within meta-learner frameworks is known as the base learner, which could be any supervised algorithm like XGBoost or Random Forest.

To better understand how the S-learner works, let’s examine its pseudo-code.

Pseudo-Code

The pseudo-code shows the simplicity of this method. The model is trained using features such as age, gender, location, and treatment status as inputs (X) and sales as the target variable (y). The trained model then predicts potential outcomes for both the treated and untreated cases. The difference between these predictions gives us the treatment effect. Here, we use XGBoost, but other algorithms like LightGBM or Random Forest could also be employed.

import xgboost as xgb

# Setting features and target variable

X = df[['age', 'gender', 'location', 'treatment']]

y = df['sales']

# Training the model

model = xgb.XGBRegressor()

model.fit(X, y)

# Predicting sales if treated and not treated

# Reuse the training columns (note the lowercase 'location') and override the treatment flag
df['sales_pred_treated'] = model.predict(X.assign(treatment=1))

df['sales_pred_control'] = model.predict(X.assign(treatment=0))

# Calculating ATE

df['treatment_effect'] = df['sales_pred_treated'] - df['sales_pred_control']

ATE = df['treatment_effect'].mean()
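If you want to try the S-learner end to end without a real dataset, the following is a minimal runnable sketch. It makes several assumptions: the data is synthetic with a known true effect of 2.0, the features are plain numeric columns, and scikit-learn's `GradientBoostingRegressor` is swapped in for XGBoost only to keep dependencies small.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

rng = np.random.default_rng(0)
n = 2000

# Synthetic data (assumption): three numeric features, random treatment, true effect = 2.0
X = rng.normal(size=(n, 3))
t = rng.integers(0, 2, size=n)
true_effect = 2.0
y = X[:, 0] + 0.5 * X[:, 1] + true_effect * t + rng.normal(scale=0.5, size=n)

# S-learner: one model, with the treatment flag as just another feature
model = GradientBoostingRegressor(random_state=0)
model.fit(np.column_stack([X, t]), y)

# Predict every customer twice: once as treated, once as untreated
pred_treated = model.predict(np.column_stack([X, np.ones(n)]))
pred_control = model.predict(np.column_stack([X, np.zeros(n)]))

# The average difference is the estimated ATE
ate_s = (pred_treated - pred_control).mean()
print(f"S-learner ATE estimate: {ate_s:.2f} (true effect: {true_effect})")
```

Because the treatment is only one feature among many, a regularized model can shrink its contribution, so it is worth checking the estimate against a known effect like this before trusting it on real data.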

6. T Learner

Concept

The “T” in T-learner stands for “two,” as it utilizes two separate machine learning models. One model is trained to predict the outcomes for customers who receive the treatment (e.g., a coupon), while the other predicts outcomes for customers who do not. This dual-model approach allows for a more nuanced understanding of differences between treated and untreated customers.

Let’s take a closer look at the pseudo-code for this method.

Pseudo-Code

The T-learner involves splitting the training data into two groups: treated and control. A separate model is trained for each group: model_treated predicts responses for customers who receive the treatment, while model_control predicts responses for customers who do not.

import xgboost as xgb

# Splitting the data into treated and control groups

df_treated = df[df['treatment'] == 1]

df_control = df[df['treatment'] == 0]

# Training the model for the treated group

model_treated = xgb.XGBRegressor()

model_treated.fit(df_treated[['age', 'gender', 'location']], df_treated['sales'])

# Training the model for the untreated group

model_control = xgb.XGBRegressor()

model_control.fit(df_control[['age', 'gender', 'location']], df_control['sales'])

# Predicting sales

df['sales_pred_treated'] = model_treated.predict(df[['age', 'gender', 'location']])

df['sales_pred_control'] = model_control.predict(df[['age', 'gender', 'location']])

# Calculating ATE

df['treatment_effect'] = df['sales_pred_treated'] - df['sales_pred_control']

ATE = df['treatment_effect'].mean()
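Here is a comparable runnable sketch of the T-learner. Again, the data is synthetic and the effect is an assumption chosen for illustration: it is 3.0 when the first feature is positive and 1.0 otherwise, so the true ATE is about 2.0. scikit-learn's gradient boosting stands in for XGBoost.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

rng = np.random.default_rng(0)
n = 4000

# Synthetic data (assumption): effect depends on the sign of the first feature
X = rng.normal(size=(n, 3))
t = rng.integers(0, 2, size=n)
tau = 1.0 + 2.0 * (X[:, 0] > 0)
y = X[:, 1] + tau * t + rng.normal(scale=0.5, size=n)

# T-learner: one model per group, trained on features only
model_treated = GradientBoostingRegressor(random_state=0).fit(X[t == 1], y[t == 1])
model_control = GradientBoostingRegressor(random_state=0).fit(X[t == 0], y[t == 0])

# Score everyone with both models and take the difference
cate_t = model_treated.predict(X) - model_control.predict(X)
ate_t = cate_t.mean()

# Segment-level effects (CATE) for the two halves of the population
cate_pos = cate_t[X[:, 0] > 0].mean()
cate_neg = cate_t[X[:, 0] <= 0].mean()

print(f"T-learner ATE estimate: {ate_t:.2f}")
print(f"CATE where feature 0 > 0: {cate_pos:.2f}")
print(f"CATE where feature 0 <= 0: {cate_neg:.2f}")
```

Since each model only sees its own group, the T-learner can pick up effects that differ by segment, but each model also has less data to learn from.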

7. Advanced Meta Learners

Beyond the S-learner and T-learner, there are more sophisticated meta-learners designed for specific challenges:

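- X-Learner: An extension of the T-learner that is particularly effective when the treated and control groups differ greatly in size. It imputes individual effects using the two outcome models and then combines the resulting effect estimates with propensity-score weights.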

- DR-Learner: Combines a propensity score model and an outcome model, offering robustness against model misspecification; consistent as long as one of the models is correctly specified.

- Double Machine Learning (DML): A complex but highly robust method that uses two base learners, one predicting the treatment and one predicting the outcome, in a two-stage process. It handles high-dimensional data and reduces bias and overfitting through orthogonalization techniques.
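To give a feel for how the X-learner builds on the T-learner, here is a simplified sketch of its three stages. It is written from scratch on synthetic data rather than taken from EconML, and it assumes an imbalanced setting where only about 10% of customers are treated and the true effect is 1 plus the first feature.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(0)
n = 5000

# Synthetic, imbalanced data (assumption): ~10% treated, true CATE = 1 + x0
X = rng.normal(size=(n, 2))
t = rng.binomial(1, 0.1, size=n)
tau = 1.0 + X[:, 0]
y = X[:, 1] + tau * t + rng.normal(scale=0.5, size=n)

X0, y0 = X[t == 0], y[t == 0]
X1, y1 = X[t == 1], y[t == 1]

# Stage 1: outcome models per group (same as the T-learner)
mu0 = GradientBoostingRegressor(random_state=0).fit(X0, y0)
mu1 = GradientBoostingRegressor(random_state=0).fit(X1, y1)

# Stage 2: impute individual effects and fit a model to them in each group
d1 = y1 - mu0.predict(X1)   # treated: observed outcome minus predicted control outcome
d0 = mu1.predict(X0) - y0   # control: predicted treated outcome minus observed outcome
tau1 = GradientBoostingRegressor(random_state=0).fit(X1, d1)
tau0 = GradientBoostingRegressor(random_state=0).fit(X0, d0)

# Stage 3: blend the two effect models using the propensity score as the weight
g = LogisticRegression().fit(X, t).predict_proba(X)[:, 1]
cate_x = g * tau0.predict(X) + (1 - g) * tau1.predict(X)
ate_x = cate_x.mean()

print(f"X-learner ATE estimate: {ate_x:.2f} (true ATE is about 1.0)")
```

With few treated customers, the propensity weights lean on the effect model that relies on the well-estimated control outcome model, which is the intuition behind its strength on imbalanced data.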

8. Choosing the Right Method

How do you choose the most appropriate meta-learner? The optimal method depends on the nature and characteristics of your data. Here’s a recommended strategy:

- Start Simple: Begin with simpler models like the S-learner or T-learner to establish a baseline.

- Experiment and Evaluate: Test different methods and compare the predictive performance of their base learners using accuracy metrics such as MAPE or RMSE. Assess robustness through refutation techniques, which I will introduce later.
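For reference, both metrics are easy to compute by hand. The sketch below uses three made-up actual and predicted sales values. Keep in mind that these metrics score the outcome predictions of a base learner, because the true individual treatment effects are never observed in real data.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error, in the same unit as the outcome."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (y_true must be non-zero)."""
    return float(np.mean(np.abs((y_true - y_pred) / y_true)) * 100)

# Hypothetical held-out sales and model predictions
y_true = np.array([100.0, 200.0, 400.0])
y_pred = np.array([110.0, 180.0, 400.0])

print(f"RMSE: {rmse(y_true, y_pred):.2f}")
print(f"MAPE: {mape(y_true, y_pred):.2f}%")
```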

In the next section, I’ll provide a brief overview of how to implement these meta-learners.

9. Sample Code

Useful Libraries for Causal Machine Learning

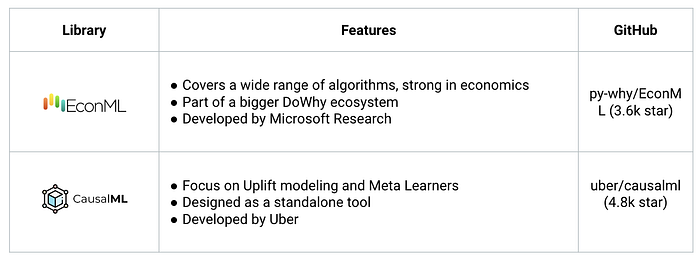

Let’s take a look at two powerful open-source libraries: EconML and CausalML.

EconML, primarily developed by Microsoft, offers a broad range of algorithms and tools for various causal inference tasks. On the other hand, CausalML, developed by Uber, is specifically designed for uplift modeling and marketing use cases.

When implementing meta-learners, EconML is recommended due to its extensive algorithm support and simplified methods for robustness checks, such as refutation testing.

Code Walkthrough

Let’s dive into a case study in economics. In this example, we will estimate the Average Treatment Effect (ATE) using meta-learners.

Full code

You can access the full code here: https://github.com/takechanman1228/Effective-Uplift-Modeling/blob/main/simple_lalonde_analysis_econml.ipynb

Import Libraries

To get started, install the necessary libraries: DoWhy and EconML.

pip install dowhy econml

DoWhy provides a general causal inference API, and EconML offers estimators based on machine learning.

import dowhy

from dowhy import CausalModel

from dowhy import datasets

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

from lightgbm import LGBMRegressor

import matplotlib.pyplot as plt

# Avoiding unnecessary log messages and warnings

import logging

logging.getLogger("dowhy").setLevel(logging.WARNING)

import warnings

from sklearn.exceptions import DataConversionWarning

warnings.filterwarnings(action='ignore', category=DataConversionWarning)

Dataset

We will use the Lalonde dataset, which comes from the National Supported Work (NSW) Demonstration, an employment program run in the U.S. in the 1970s and later analyzed by Robert LaLonde. The program aimed to assist disadvantaged workers in securing long-term employment through job training.

# Load the data

data = dowhy.datasets.lalonde_dataset()

data.head()

This dataset includes participants with a treatment label, six features such as age, ethnicity, marital status, and educational attainment, and their real earnings before and after the program.

Framing

Next, we define a causal model to represent the cause-and-effect relationships between variables. The goal is to understand the impact of training (treatment) on the real earnings for the following year (outcome) while controlling for confounding variables.

- Treatment: The treat column indicates participation in the job training program (1 if participated, 0 otherwise).

- Outcome: The re78 column represents real earnings in 1978.

- Confounders: Other columns like age, educ, black, hisp, married, nodegr, re74, and re75 are confounders that affect both treatment and outcome.

# Set features and target

features = ['age', 'educ', 'black', 'hisp', 'married', 'nodegr', 're74', 're75']

X = data[features]

y = data['re78']

T = data['treat']

# Scale the features

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

# Define the causal model using DoWhy

model = CausalModel(

    data=data,

    treatment='treat',

    outcome='re78',

    common_causes=features

)

# Identify the causal effect

estimand = model.identify_effect(proceed_when_unidentifiable=True)

Estimate & Calculate ATE

In this step, we will estimate the effect of the job training program on earnings using Double Machine Learning (DML). In this example, LightGBM will predict the outcome (earnings), and logistic regression will predict the treatment (participation in the job training program). Feel free to experiment with other machine learning models by changing model_y and model_t, or with other estimators by adjusting the method name.

# Estimate the causal effect using DML

estimate = model.estimate_effect(

    identified_estimand=estimand,

    method_name='backdoor.econml.dml.LinearDML',

    target_units='ate',

    method_params={

        'init_params': {

            'model_y': LGBMRegressor(n_estimators=100, max_depth=3, verbose=-1),

            'model_t': LogisticRegression(max_iter=1000),

            'discrete_treatment': True,

        },

        'fit_params': {}

    })

# Display the estimated causal effect

print(f"Estimated Average Treatment Effect (ATE): {estimate.value}")

> Estimated Average Treatment Effect (ATE): 1679.2355823720495
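To show the idea behind DML, the sketch below implements a bare-bones "residual on residual" estimate by hand. It is a conceptual illustration on synthetic data, not EconML's internal implementation and not the Lalonde data: the true effect is set to 2.0, one feature drives both treatment and outcome, and scikit-learn models stand in for the base learners.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict

rng = np.random.default_rng(0)
n = 4000

# Synthetic confounded data (assumption): x0 raises both treatment odds and the outcome
X = rng.normal(size=(n, 3))
p = 1.0 / (1.0 + np.exp(-X[:, 0]))
t = rng.binomial(1, p)
y = 2.0 * t + 3.0 * X[:, 0] + X[:, 1] + rng.normal(size=n)

# Naive comparison of group means is biased by the confounder
naive = y[t == 1].mean() - y[t == 0].mean()

# Stage 1: out-of-fold predictions of outcome and treatment from the features
y_hat = cross_val_predict(GradientBoostingRegressor(random_state=0), X, y, cv=5)
t_hat = cross_val_predict(LogisticRegression(), X, t, cv=5, method="predict_proba")[:, 1]

# Stage 2: regress the outcome residual on the treatment residual
y_res = y - y_hat
t_res = t - t_hat
theta = np.sum(t_res * y_res) / np.sum(t_res ** 2)

print(f"Naive difference in means: {naive:.2f}")
print(f"DML-style estimate: {theta:.2f} (true effect: 2.0)")
```

Removing what the features explain from both the treatment and the outcome before relating them is the orthogonalization step, and using out-of-fold predictions is what limits overfitting bias.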

Refutation

To verify the robustness of our causal model, we apply refutation methods. In causal inference, it is crucial to check whether the assumed causal graph is robust. We will use two methods: Random Common Cause and Placebo Treatment.

refutation_methods = [

"random_common_cause",

"placebo_treatment_refuter"

]

for method in refutation_methods:

    result = model.refute_estimate(estimand, estimate, method_name=method)

    print(result)

With the Random Common Cause test, we introduced a random variable into the dataset as a potential confounder. The idea here is that if our original estimate is truly robust, adding this random factor shouldn’t significantly alter the causal estimate.

Refute: Add a random common cause

Estimated effect:1679.2355823720495

New effect:1684.4755652949018

p value:0.94

The original estimated effect was $1679, and the new effect, after adding the random variable, was $1684. The p-value came out to 0.94, well above the standard threshold of 0.05. This result indicates that our estimate remains stable even when a random variable is added, suggesting the model’s robustness.
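The logic of this test can be reproduced in a few lines. The following is a simplified sketch on synthetic data with a plain linear regression as the estimator. It is not how DoWhy implements the refuter, and the helper `ols_effect` and the effect size of 1500 are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000

# Synthetic data (assumption): one observed confounder x, true effect = 1500
x = rng.normal(size=n)
t = (x + rng.normal(size=n) > 0).astype(float)
y = 1500.0 * t + 800.0 * x + rng.normal(scale=1000.0, size=n)

def ols_effect(covariates, t, y):
    """Treatment coefficient from a linear regression of y on t and the covariates."""
    design = np.column_stack([np.ones(len(y)), t] + covariates)
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1]

# Original estimate, adjusting for the real confounder
original = ols_effect([x], t, y)

# Refutation: add a purely random "confounder" and re-estimate
random_cause = rng.normal(size=n)
refuted = ols_effect([x, random_cause], t, y)

print(f"Original effect: {original:.1f}")
print(f"Effect with random common cause: {refuted:.1f}")
```

A large gap between the two numbers would be a warning sign that the estimate is sensitive to arbitrary additions to the model.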

Next, we applied the Placebo Treatment test, where we replaced the actual treatment variable with a random one. In a valid model, substituting the treatment with random noise should result in an effect close to zero, as there is no real causal relationship to be found.

Refute: Use a Placebo Treatment

Estimated effect:1679.2355823720495

New effect:32.2951646306353

p value:0.8999999999999999

The original estimated effect was $1679, but with the placebo treatment, it dropped to $32 — effectively close to zero. This outcome reinforces that our original estimate was valid, as it accurately reflects the absence of a causal effect when the treatment is randomized.
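The placebo idea can be sketched the same way. This example again uses synthetic data and a simple linear regression instead of DoWhy's refuter. The placebo is created by shuffling the treatment column, which breaks any real link to the outcome.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000

# Synthetic data (assumption): one observed confounder x, true effect = 1500
x = rng.normal(size=n)
t = (x + rng.normal(size=n) > 0).astype(float)
y = 1500.0 * t + 800.0 * x + rng.normal(scale=1000.0, size=n)

def ols_effect(covariates, t, y):
    """Treatment coefficient from a linear regression of y on t and the covariates."""
    design = np.column_stack([np.ones(len(y)), t] + covariates)
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1]

# Estimate with the real treatment
original = ols_effect([x], t, y)

# Placebo: shuffle the treatment so it carries no causal information
placebo_t = rng.permutation(t)
placebo_effect = ols_effect([x], placebo_t, y)

print(f"Original effect: {original:.1f}")
print(f"Placebo effect: {placebo_effect:.1f}")
```

If the placebo effect were far from zero, the estimator would be finding effects where none exist.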

Visualization of ATE and selection bias

This code visualizes the relationship between the ATE and selection bias, decomposing the difference in earnings between the treatment and control groups into ATE and selection bias.

import matplotlib.pyplot as plt

import numpy as np

# Calculate necessary data

control_earnings = data[data['treat'] == 0]['re78'].mean()

treatment_earnings = data[data['treat'] == 1]['re78'].mean()

ate = estimate.value

# Calculate selection bias

selection_bias = treatment_earnings - control_earnings - ate

# Create the plot

fig, ax = plt.subplots(figsize=(12, 7))

# Create bars for control group and treatment group

ax.bar('Control Group', control_earnings, color='skyblue', edgecolor='black', label='Base Earnings')

ax.bar('Treatment Group', control_earnings, color='skyblue', edgecolor='black')

# Stack bars for selection bias and ATE

ax.bar('Treatment Group', selection_bias, bottom=control_earnings, color='lightgreen', edgecolor='black', label='Selection Bias')

ax.bar('Treatment Group', ate, bottom=control_earnings + selection_bias, color='orange', edgecolor='black', label='ATE (True Effect)')

# Add text labels

ax.text(0, control_earnings / 2, f'${control_earnings:.0f}', ha='center', va='center', color='black', fontsize=10)

ax.text(1, control_earnings / 2, f'${control_earnings:.0f}', ha='center', va='center', color='black', fontsize=10)

ax.text(1, control_earnings + selection_bias / 2, f'${selection_bias:.0f}', ha='center', va='center', color='black', fontsize=10)

ax.text(1, control_earnings + selection_bias + ate / 2, f'${ate:.0f}', ha='center', va='center', color='black', fontsize=10)

# Customize the plot

ax.set_ylim(0, treatment_earnings * 1.2)

ax.set_ylabel('1978 Earnings ($)')

ax.set_title('Decomposing Treatment Effect on 1978 Earnings')

# Add legend

ax.legend()

# Set Y-axis format

ax.yaxis.set_major_formatter(plt.FuncFormatter(lambda y, _: '${:,.0f}'.format(y)))

plt.tight_layout()

plt.show()

# Output results

print(f"Control Group Average Earnings: ${control_earnings:.2f}")

print(f"Treatment Group Average Earnings: ${treatment_earnings:.2f}")

print(f"Average Treatment Effect (ATE): ${ate:.2f}")

print(f"Selection Bias: ${selection_bias:.2f}")

Control Group Average Earnings: $4554.80

Treatment Group Average Earnings: $6349.14

Average Treatment Effect (ATE): $1679.24

Selection Bias: $115.11
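The decomposition is simple arithmetic, so it can be checked by hand. The snippet below plugs in the rounded figures printed above. With those rounded inputs the selection bias comes out at $115.10, and the one-cent gap from the reported $115.11 presumably comes from rounding.

```python
# Rounded figures from the printed output above
control_earnings = 4554.80
treatment_earnings = 6349.14
ate = 1679.24

# Raw gap between the groups, then the part not explained by the ATE
naive_gap = treatment_earnings - control_earnings
selection_bias = naive_gap - ate

print(f"Naive gap: ${naive_gap:.2f}")
print(f"Selection bias: ${selection_bias:.2f}")

# The three stacked bars add back up to the treatment group average
total = control_earnings + selection_bias + ate
print(f"Control + selection bias + ATE: ${total:.2f}")
```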

Note: The monetary value around 1980 differs significantly from today, roughly by a factor of four.

10. Conclusion

Thank you for reading! If you have any questions or suggestions, feel free to contact me on LinkedIn! Also, I would be happy if you follow me on Medium.

- Meta Learners: Meta-learners help estimate treatment effects by predicting counterfactual outcomes. Different types, like S-learners, T-learners, X-learners, DR-learners, and DML, offer various levels of complexity and robustness, depending on your data and goals.

- EconML: The EconML library is a powerful tool for causal inference. Robustness checks like the Random Common Cause and Placebo Treatment tests are crucial for validating causal assumptions.

- Selecting the Right Method: Start with simpler models (S-learner, T-learner) and move to more advanced ones (X-learner, DR-learner, DML) as needed. Use metrics and refutation tests to validate the model’s accuracy and reliability.

11. References

- CausalML: https://github.com/uber/causalml

- Criteo Uplift Prediction Dataset: https://ailab.criteo.com/criteo-uplift-prediction-dataset

- Causal Inference and Discovery in Python: https://www.amazon.com/Causal-Inference-Discovery-Python-learning/dp/1804612987

- Sample Code: https://github.com/takechanman1228/Effective-Uplift-Modeling/blob/main/simple_lalonde_analysis_econml.ipynb


Published via Towards AI
