Compare LLMs’ performance at scale with Promptfoo

Last Updated on January 3, 2025 by Editorial Team

Author(s): AI Rabbit

Originally published on Towards AI.

I have previously discussed considering alternatives to OpenAI for productive, real-world applications. There are dozens of such alternatives, and many open-source options are particularly intriguing.

By comparing these solutions, you can potentially save money while also achieving better quality and speed.

For example, in a previous blog post I talked about the noticeable speed differences between models like OpenAI’s GPT-4o (its fastest model) and Llama 3 hosted on Together AI and Fireworks AI. The hosted Llama 3 options can deliver up to 3x the speed at a fraction of the cost.

How to compare LLMs

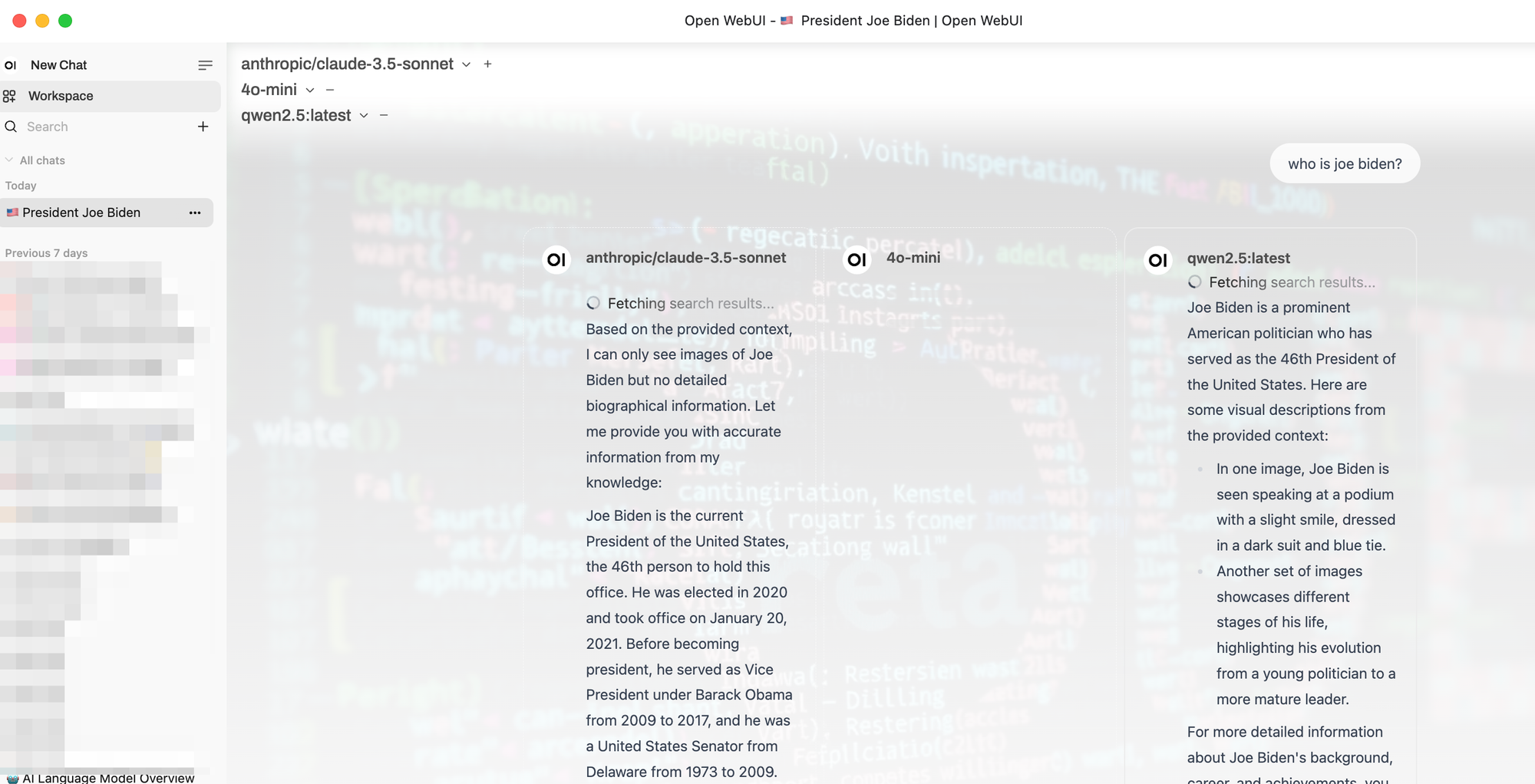

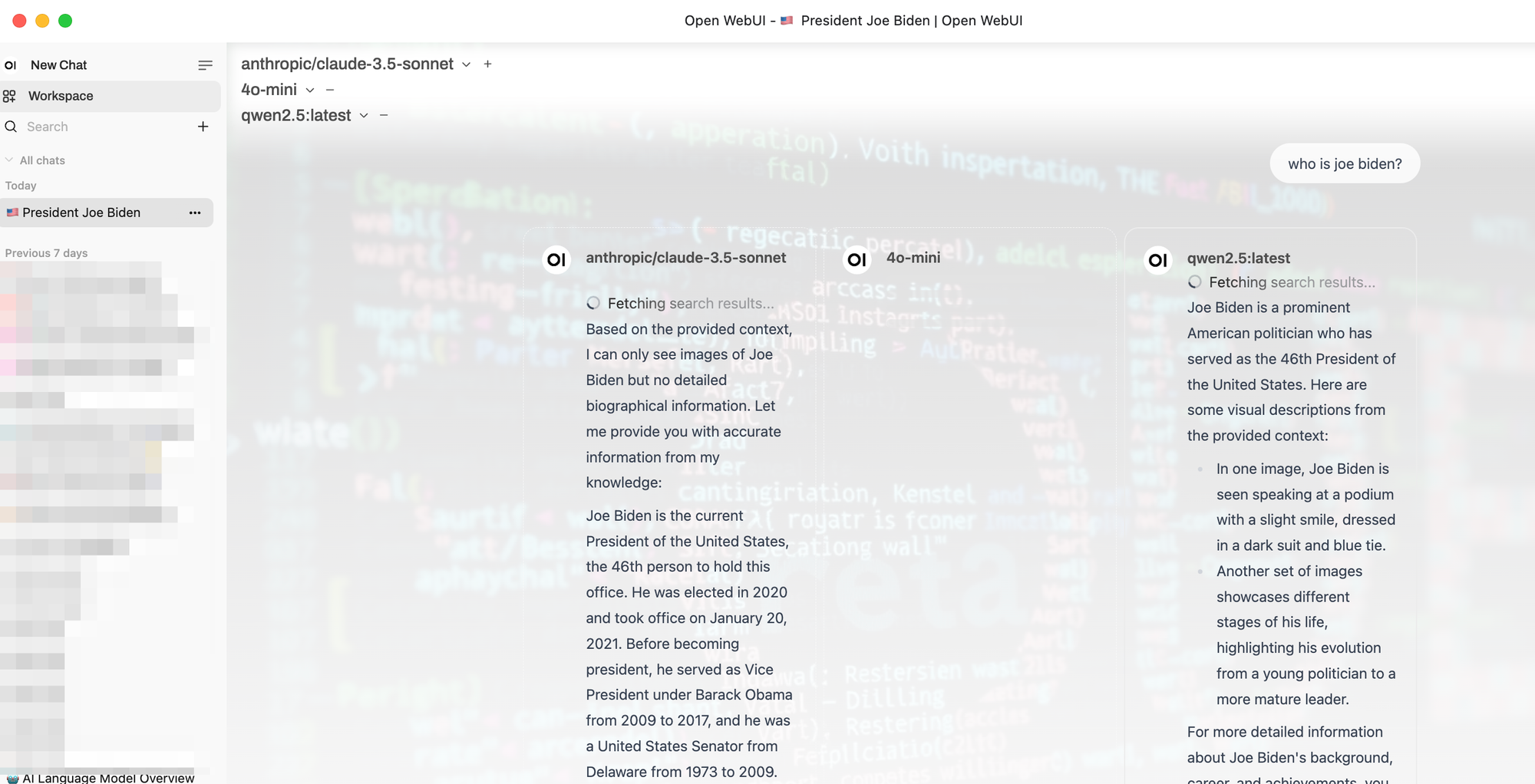

There are various ways to compare models. You can use tools like Open WebUI if you only compare models occasionally or have just a few models to test. There are also many commercial model comparison tools you could leverage.

In a business setting, where you have dozens of prompts and use cases across many models, you may want to automate this evaluation. Having a tool that supports such tasks can be very useful. One tool I frequently use is Promptfoo.
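To make the idea of automated evaluation concrete, here is a minimal Python sketch of what such a tool does conceptually: run every prompt against every model, time each call, and check each answer against simple assertions. This is not Promptfoo's API. The two model functions are hypothetical stand-ins for real provider calls, and the names `run_matrix`, `MODELS`, `PROMPTS`, and `CHECKS` are assumptions made up for illustration.

```python
import time
from typing import Callable, Dict, List

# Hypothetical stand-ins for real model clients (assumption: replace these
# with actual API calls to the providers you want to compare).
def fake_model_a(prompt: str) -> str:
    return "The capital of France is Paris."

def fake_model_b(prompt: str) -> str:
    return "I am not sure."

MODELS: Dict[str, Callable[[str], str]] = {
    "model-a": fake_model_a,
    "model-b": fake_model_b,
}

PROMPTS: List[str] = ["What is the capital of France?"]

# One list of checks per prompt; each check takes the output and returns a bool.
CHECKS: Dict[str, List[Callable[[str], bool]]] = {
    "What is the capital of France?": [lambda out: "paris" in out.lower()],
}

def run_matrix(
    models: Dict[str, Callable[[str], str]],
    prompts: List[str],
    checks: Dict[str, List[Callable[[str], bool]]],
) -> List[dict]:
    """Run every prompt against every model, recording latency and pass/fail."""
    results = []
    for model_name, call in models.items():
        for prompt in prompts:
            start = time.perf_counter()
            output = call(prompt)
            latency = time.perf_counter() - start
            # A prompt with no registered checks passes trivially.
            passed = all(check(output) for check in checks.get(prompt, []))
            results.append(
                {
                    "model": model_name,
                    "prompt": prompt,
                    "output": output,
                    "latency_s": latency,
                    "passed": passed,
                }
            )
    return results

for row in run_matrix(MODELS, PROMPTS, CHECKS):
    status = "PASS" if row["passed"] else "FAIL"
    print(f'{row["model"]}: {status} ({row["latency_s"]:.6f}s)')
```

With dozens of prompts and many models, this matrix grows quickly, which is why a dedicated tool that manages the prompts, providers, and assertions for you becomes attractive.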

In this blog post, we will explore how to use Promptfoo to compare the performance and quality of responses from alternative models to OpenAI —… Read the full blog for free on Medium.
