Chunking Tabular Data for RAG and Search Systems

Last Updated on September 4, 2025 by Editorial Team

Author(s): Kunal

Originally published on Towards AI.

When working with Retrieval-Augmented Generation (RAG) or search systems, we often focus on how to chunk long documents — but tables present a different kind of challenge. Unlike plain text, tabular data carries structured relationships across rows and columns, making naive chunking approaches (like splitting by rows or converting everything into text blocks) prone to losing context. The key is to design a chunking strategy that preserves both the semantics of individual rows and the structure of the table, while still being efficient for retrieval. In this post, we’ll explore practical strategies for chunking tabular data to maximize retrieval accuracy and relevance.

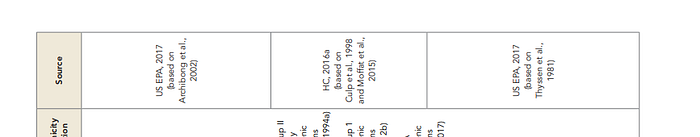

I used the Toxicological Reference Values PDF from the Government of Canada Publications site as the test document. It presents several challenges for data extraction, including multi-header tables, empty cells, varying table orientations, and duplicate column names.

What Happened When I Tried Direct PDF Upload to ChatGPT

I also experimented with uploading the PDF directly to ChatGPT (GPT-4o) and asking questions about it. While the model could surface some information, it frequently hallucinated answers, especially when handling complex tables with merged headers or empty cells. This reinforced an important lesson: without structured preprocessing and thoughtful chunking strategies, even the most advanced LLMs struggle to reliably interpret tabular data from PDFs. That realization is why I built a dedicated chunking pipeline for tables.

From Raw Tables to Usable Data

To extract tables, I used PyMuPDF, which handled the raw parsing well, but extracting text alone wasn’t enough. The real challenge was restructuring these complex tables into a consistent, searchable format and then deciding how to chunk them effectively for RAG and search tasks.
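The extraction step might look roughly like the sketch below. It assumes PyMuPDF 1.23.0 or later, where pages expose find_tables(), and it is not taken from the original pipeline. The function name extract_tables_to_csv and the output_table_<n>.csv numbering are hypothetical, chosen only so the files match the output_table*.csv pattern used later in this post.

```python
from pathlib import Path


def extract_tables_to_csv(pdf_path, out_dir="."):
    """Write every table PyMuPDF detects to output_table_<n>.csv; return the paths."""
    import fitz  # PyMuPDF, imported lazily so this module loads without it

    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    with fitz.open(pdf_path) as doc:
        for page in doc:
            # find_tables() returns a TableFinder; .tables is the list of detected tables
            for table in page.find_tables().tables:
                df = table.to_pandas()
                # Hypothetical naming scheme, numbered in detection order
                path = out_dir / f"output_table_{len(written) + 1}.csv"
                df.to_csv(path, index=False)
                written.append(path)
    return written
```

The raw DataFrames produced this way still carry split headers and blank cells, which is why the restructuring steps that follow are needed.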

Some of the code snippets from table preprocessing:

Copy the value from the second cell to the third cell in the first row: df.iat[0, 2] = df.iat[0, 1]

Copy the value from the fourth cell to the fifth cell in the first row: df.iat[0, 4] = df.iat[0, 3]

Combine the first and second rows into a single merged header by joining their values with an underscore: merged_header = df.iloc[0].astype(str) + "_" + df.iloc[1].astype(str)
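To make the header merge concrete, here is a minimal sketch on a toy table. The labels and values are invented for illustration and do not come from the source PDF; the last two lines (assigning the merged labels and dropping the header rows) are my assumption about how the merged header would be applied.

```python
import pandas as pd

# Toy table with two header rows. The blank cell at position (0, 2) is what a
# merged "Group A" header typically looks like after extraction.
df = pd.DataFrame([
    ["Item", "Group A", "", "Group B"],
    ["Name", "Low", "High", "Low"],
    ["x", "1", "2", "3"],  # made-up data row
])

# Copy the group label into the cell that the merged header left empty.
df.iat[0, 2] = df.iat[0, 1]

# Join the two header rows column by column.
merged_header = df.iloc[0].astype(str) + "_" + df.iloc[1].astype(str)

# Assumed follow-up: use the merged labels as column names and drop both header rows.
df.columns = merged_header.tolist()
df = df.iloc[2:].reset_index(drop=True)

print(list(df.columns))
```

Filling the blank cell before joining matters: without it, the third column would be named "_High" and lose its link to "Group A".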

Set the first row as the DataFrame’s column names (the row itself still has to be dropped from the data afterwards): df.columns = df.iloc[0]
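Because the source document also contains duplicate column names, promoting a row to the header is usually paired with a de-duplication step. The helper below is a hypothetical sketch of that idea, not the author's code; promote_header and the numeric suffix scheme are my own choices.

```python
import pandas as pd


def promote_header(df):
    """Use row 0 as column names, drop it from the data, and de-duplicate names."""
    header = df.iloc[0].astype(str).str.strip()
    seen = {}
    names = []
    for name in header:
        count = seen.get(name, 0)
        # First occurrence keeps its name; repeats get a numeric suffix.
        names.append(name if count == 0 else f"{name}_{count}")
        seen[name] = count + 1
    out = df.iloc[1:].reset_index(drop=True)
    out.columns = names
    return out


raw = pd.DataFrame([
    ["Substance", "Value", "Value"],  # header row with a duplicate name
    ["A", "1", "2"],                  # made-up rows
    ["B", "3", "4"],
])
clean = promote_header(raw)
print(list(clean.columns))
```

Unique column names matter later, because each row is converted to a record keyed by column name and duplicates would silently overwrite each other.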

Stack the first and third columns vertically: left = pd.concat([df.iloc[:, 0], df.iloc[:, 2]], axis=0, ignore_index=True)

Stack the second and fourth columns vertically: right = pd.concat([df.iloc[:, 1], df.iloc[:, 3]], axis=0, ignore_index=True)

Create a new DataFrame with just two columns, combining the stacked columns for easier analysis and retrieval: df = pd.DataFrame({"Substance": left, "RAF Derm": right})
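The stacking step is easiest to see on a small example. In the sketch below the table is laid out as two side-by-side (name, value) pairs; the values are placeholders, and the final filter that drops padding rows is an assumption I added for the case where the right-hand pair is shorter than the left.

```python
import pandas as pd

# A table printed as two side-by-side (name, value) pairs. All values are placeholders.
wide = pd.DataFrame([
    ["A", "0.1", "C", "0.3"],
    ["B", "0.2", "D", "0.4"],
    ["E", "0.5", "", ""],  # right-hand pair is empty padding
])

# Stack the name columns and the value columns separately, in the same order.
left = pd.concat([wide.iloc[:, 0], wide.iloc[:, 2]], axis=0, ignore_index=True)
right = pd.concat([wide.iloc[:, 1], wide.iloc[:, 3]], axis=0, ignore_index=True)
tall = pd.DataFrame({"Substance": left, "RAF Derm": right})

# Assumed cleanup: drop rows that were only padding in the wide layout.
keep = tall["Substance"].astype(str).str.strip() != ""
tall = tall[keep].reset_index(drop=True)

print(tall)
```

After stacking, every row is one (Substance, RAF Derm) pair, so a row-level chunk never mixes two unrelated substances.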

A helper function detects whether a text block on a PDF page is vertical or horizontal, based on the aspect ratio of its bounding box, ignoring very short text blocks: def detect_block_orientation(block, min_text_len=5, vertical_ratio=3): ...
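The body of that helper is not shown here, so the following is only a guess at how such a heuristic could work, written under a different name (sketch_block_orientation) to keep it distinct from the author's function. It assumes the block is a tuple in the shape returned by PyMuPDF's page.get_text("blocks"), that is (x0, y0, x1, y1, text, block_no, block_type), and it treats a block as vertical when its height is at least vertical_ratio times its width.

```python
def sketch_block_orientation(block, min_text_len=5, vertical_ratio=3):
    """Classify a text block as 'vertical' or 'horizontal'; None if it cannot be judged."""
    x0, y0, x1, y1, text = block[:5]

    # Very short blocks (page numbers, stray symbols) give unreliable ratios.
    if len(text.strip()) < min_text_len:
        return None

    width, height = x1 - x0, y1 - y0
    if width <= 0 or height <= 0:
        return None  # degenerate bounding box

    # Tall, narrow boxes are treated as rotated (vertical) text.
    return "vertical" if height / width >= vertical_ratio else "horizontal"


print(sketch_block_orientation((0, 0, 10, 100, "Rotated header", 0, 0)))
print(sketch_block_orientation((0, 0, 200, 20, "Normal row text", 1, 0)))
```

A bounding-box ratio is a cheap signal but not a proof of rotation: a long paragraph set in a narrow column can also be tall and narrow, so the threshold would need tuning against the actual pages.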

Chunking and vectorization

The process begins by reading all CSV files that match the pattern output_table*.csv, cleaning the table headers for consistency, and converting each row into a structured JSON object. Each JSON entry contains a text representation of the row along with metadata such as the table name, page number, and column names. These JSON objects are then saved for later use.

To build the vector store, I load the generated JSON files and convert each entry into a Document suitable for vector databases. A sentence transformer model creates an embedding for each row, and the embeddings are stored in a FAISS vector store for efficient similarity search. A small query function then retrieves the most relevant table rows for a given input query, which gives a practical example of a retrieval-augmented workflow for tabular PDF data.
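The row-to-chunk conversion and the vector store setup could be sketched as follows. This is an illustration under several assumptions rather than the code from the repository: the function names, the "column: value" text layout, the table_chunks.json file name, the use of LangChain's Document and FAISS wrappers, and the all-MiniLM-L6-v2 model are all my own choices. The page number is passed in by the caller because a CSV file alone does not record it.

```python
import glob
import json
import os
import re

import pandas as pd


def clean_header(name):
    """Collapse line breaks and repeated spaces left over from PDF extraction."""
    return re.sub(r"\s+", " ", str(name)).strip()


def rows_to_chunks(df, table_name, page=None):
    """Turn each row into one chunk: a text rendering plus table-level metadata."""
    df = df.rename(columns=clean_header)
    columns = list(df.columns)
    chunks = []
    for row_index, row in enumerate(df.to_dict(orient="records")):
        parts = []
        for col in columns:
            value = row[col]
            if pd.isna(value) or str(value).strip() == "":
                continue  # skip empty cells instead of emitting "col: nan"
            # Repeat the column name next to each value so the row stands alone.
            parts.append(f"{col}: {value}")
        chunks.append({
            "text": "; ".join(parts),
            "metadata": {"table": table_name, "page": page,
                         "row": row_index, "columns": columns},
        })
    return chunks


def csv_files_to_json(pattern="output_table*.csv", out_path="table_chunks.json"):
    """Chunk every matching CSV file and save all chunks to one JSON file."""
    chunks = []
    for path in sorted(glob.glob(pattern)):
        df = pd.read_csv(path, dtype=str)
        chunks.extend(rows_to_chunks(df, table_name=os.path.basename(path)))
    with open(out_path, "w", encoding="utf-8") as f:
        json.dump(chunks, f, ensure_ascii=False, indent=2)
    return chunks


def build_vector_store(chunks, model_name="sentence-transformers/all-MiniLM-L6-v2"):
    """Embed each chunk and index it in FAISS (assumes the LangChain packages are installed)."""
    from langchain_core.documents import Document
    from langchain_community.vectorstores import FAISS
    from langchain_huggingface import HuggingFaceEmbeddings

    docs = [Document(page_content=c["text"], metadata=c["metadata"]) for c in chunks]
    return FAISS.from_documents(docs, HuggingFaceEmbeddings(model_name=model_name))
```

With a store built this way, a query such as store.similarity_search("dermal absorption factor for a given substance", k=3) returns the three closest rows, each carrying its table name and column list in the metadata. Keeping the column names inside the chunk text is the main design choice: a bare row of numbers embeds poorly, while "column: value" pairs give the embedding model the context the table header would otherwise provide.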

You can find the complete code for table extraction, header merging, column stacking, and block orientation detection on GitHub.

Note: I do not own the rights to the Toxicological Reference Values PDF; it is published by the Government of Canada. This post uses the document solely for educational and demonstration purposes.

I hope you found this guide helpful and easy to follow. Thanks for reading!

