Small Language Models (SLMs): A Practical Guide to Architecture and Deployment

Author(s): Iflal Ismalebbe

Originally published on Towards AI.

I. Introduction

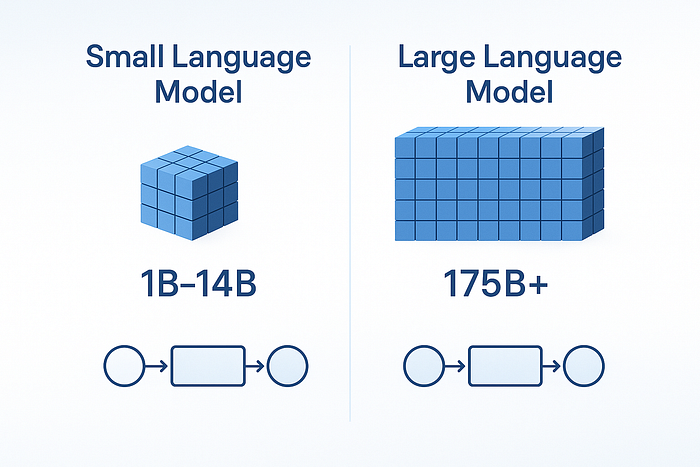

Small Language Models (SLMs) are reshaping how we think about AI efficiency. Unlike their massive counterparts such as GPT-4 or Gemini, the SLMs covered here have roughly 1 billion to 14 billion parameters, which makes them faster, cheaper, and easier to deploy, often with little loss of quality on specialized tasks.

Why SLMs Matter

- Cost-Effective: Training and running a 7B-parameter model costs a fraction of what a 70B+ LLM costs.

- Edge-Friendly: They fit on devices like smartphones, IoT sensors, and even microcontrollers.

- Domain-Specialized: Fine-tuned SLMs often outperform general-purpose LLMs in niche applications (e.g., medical diagnostics, legal document parsing).

Recent Breakthroughs

- Microsoft’s Phi Series: Models like Phi-3-mini (3.8B) and Phi-4-reasoning (14B) punch above their weight, rivaling much larger models in logic and reasoning tasks.

- Meta’s Llama 3.2 (1B and 3B): Lightweight variants optimized for edge and mobile devices, showing that smaller models can still deliver strong performance.

- IBM Granite 3.2: A 3B-parameter model fine-tuned for enterprise document analysis, showing that SLMs can replace LLMs in business workflows.

II. Technical Architecture: How SLMs Stay Lean

Transformer Efficiency Tweaks

SLMs use the same transformer backbone as LLMs but with key optimizations:

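- Sparse Attention: Instead of letting every token attend to every other token, models like Phi-3 use sliding-window attention, where each token attends only to a fixed number of recent tokens. Attention cost then grows linearly with sequence length rather than quadratically.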

- Knowledge Distillation: Training smaller models to mimic larger ones (e.g., DistilBERT retains about 97% of BERT’s language-understanding performance with 40% fewer parameters).

- Parameter Sharing: Reusing weights across layers (like in ALBERT) cuts model size without major performance drops.
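
To make two of these ideas concrete, here is a minimal pure-Python sketch of a causal sliding-window attention mask and a temperature-softened distillation loss. The function names, the window size, and the temperature are illustrative assumptions, not taken from Phi-3 or DistilBERT; real implementations work on batched tensors and usually mix the distillation term with a standard task loss.

```python
import math

def sliding_window_mask(seq_len, window):
    """Causal mask: position i may attend to position j only if i - window < j <= i."""
    return [[(j <= i) and (i - j < window) for j in range(seq_len)]
            for i in range(seq_len)]

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Count how many (query, key) pairs are computed with and without the window.
mask = sliding_window_mask(seq_len=6, window=3)
attended = sum(sum(row) for row in mask)  # pairs kept by the window
full_causal = 6 * 7 // 2                  # pairs in full causal attention
print(attended, full_causal)

# A student that matches the teacher has zero loss; a mismatched one does not.
print(distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))
print(distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0]))
```

With a fixed window, each new token adds at most `window` attention pairs, which is where the linear (rather than quadratic) growth comes from.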

Training Tricks for Efficiency

- High-Quality Data Curation: Phi-3 was trained on “textbook-quality” synthetic data, boosting reasoning skills without bloating parameters.

- Quantization: Converting weights from 16-bit to 4-bit (e.g., as in QLoRA) cuts weight memory by 75% (87.5% relative to 32-bit), usually with only a small accuracy loss.

- Pruning: Removing redundant neurons or connections. Meta, for example, reports using structured pruning together with distillation from larger Llama models to produce the Llama 3.2 1B and 3B models for edge deployment.
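
The memory arithmetic behind quantization, along with toy versions of quantization and pruning, can be sketched as follows. This is an illustration under simplifying assumptions: it uses plain symmetric absmax rounding rather than the NF4 data type used by QLoRA, it prunes individual weights by magnitude rather than whole structures, and all function names are hypothetical.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Memory needed for the weights alone, in gigabytes (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9

def quantize_int4(weights):
    """Symmetric absmax quantization to signed 4-bit integers in [-7, 7]."""
    scale = max(abs(w) for w in weights) / 7 or 1.0  # fall back to 1.0 for all-zero input
    q = [max(-7, min(7, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map the 4-bit integers back to approximate float weights."""
    return [v * scale for v in q]

def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

# Weight memory for a 7B-parameter model at different precisions.
for bits in (32, 16, 4):
    print(bits, "bits:", weight_memory_gb(7e9, bits), "GB")

# Round-trip a few weights through 4-bit quantization.
w = [0.7, -0.35, 0.1, 0.0]
q, scale = quantize_int4(w)
print(q, dequantize(q, scale))

# Drop the smallest half of the weights.
print(magnitude_prune([0.5, -0.01, 0.2, 0.03], sparsity=0.5))
```

The first loop shows where the savings figure comes from: going from 16-bit to 4-bit removes three quarters of the weight memory, before counting the small overhead of storing scales.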

III. Implementation: Where and How to Deploy SLMs

Hardware Flexibility

- Edge Devices: Models like Phi Silica run on Snapdragon laptops with as little as 8GB RAM.

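- Consumer GPUs: A 7B-parameter model (e.g., Mistral 7B) needs about 14 GB for its weights in 16-bit precision, so it fits within the 24 GB of VRAM on an RTX 4090 and runs comfortably there.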

- Cloud Hybrid Deployments: Some workflows use SLMs for real-time tasks and offload complex reasoning to LLMs.
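
The hybrid pattern in the last bullet can be sketched as a simple router. Everything here is a hypothetical illustration: the `route` function, the confidence score returned by the SLM, and the 0.7 threshold are assumptions, and the two toy models are stand-ins so the example runs without any real model or API call. In practice the confidence signal might come from token probabilities, a classifier, or task-specific rules.

```python
from typing import Callable, Tuple

# Hypothetical interfaces: the SLM returns (answer, confidence in [0, 1]),
# the LLM returns just an answer.
SlmFn = Callable[[str], Tuple[str, float]]
LlmFn = Callable[[str], str]

def route(prompt: str, slm: SlmFn, llm: LlmFn,
          min_confidence: float = 0.7) -> Tuple[str, str]:
    """Answer locally when the SLM is confident, otherwise escalate to the LLM."""
    answer, confidence = slm(prompt)
    if confidence >= min_confidence:
        return answer, "slm"
    return llm(prompt), "llm"

def toy_slm(prompt: str) -> Tuple[str, float]:
    """Stand-in SLM: pretends to be confident only on short prompts."""
    confidence = 0.9 if len(prompt.split()) <= 8 else 0.3
    return f"[slm] {prompt}", confidence

def toy_llm(prompt: str) -> str:
    """Stand-in for a remote LLM call."""
    return f"[llm] {prompt}"

short_prompt = "Translate 'hello' to French"
long_prompt = ("Compare three contract clauses and explain which one "
               "creates the most liability risk")
print(route(short_prompt, toy_slm, toy_llm))
print(route(long_prompt, toy_slm, toy_llm))
```

Keeping the router separate from both models makes it easy to tune the threshold against measured cost and quality without touching either model.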

Deployment Scenarios

Edge Computing Advantages

- Privacy: Data stays on-device (critical for healthcare/finance).

- Speed: No round-trip to cloud servers (e.g., real-time translation on earbuds).

- Cost Savings: No need for expensive cloud LLM API calls.

IV. Performance Optimization: Getting the Most from SLMs

Fine-Tuning Strategies

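- LoRA (Low-Rank Adaptation): Adds small, trainable low-rank matrices to a frozen base model, so only a small fraction of the parameters (often well under 1%) is updated, which sharply reduces fine-tuning memory and cost.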

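- DPO (Direct Preference Optimization): Aligns models with human preferences directly from pairs of preferred and rejected responses, without training a separate reward model or running a reinforcement-learning loop as RLHF does.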
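
Both strategies reduce to short formulas, sketched below in plain Python. The LoRA part computes y = Wx + (alpha / r) · B(Ax) with W frozen, and the DPO part computes the per-pair loss -log sigmoid(beta · margin) from sequence log-probabilities. The helper names, the tiny matrices, and the values of `alpha` and `beta` are illustrative assumptions; real fine-tuning would use a tensor library and a training loop.

```python
import math

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha=16.0):
    """y = W x + (alpha / r) * B (A x). W is frozen; only A (r x d_in) and B (d_out x r) train."""
    r = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]

def lora_trainable_fraction(d_in, d_out, rank):
    """Trainable LoRA parameters as a fraction of the full weight matrix."""
    return rank * (d_in + d_out) / (d_in * d_out)

def dpo_loss(pol_chosen, pol_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Per-pair DPO loss from log-probabilities under the policy and the frozen reference."""
    z = beta * ((pol_chosen - ref_chosen) - (pol_rejected - ref_rejected))
    # -log(sigmoid(z)) = log(1 + exp(-z)), written to avoid overflow for negative z
    return math.log1p(math.exp(-z)) if z >= 0 else -z + math.log1p(math.exp(z))

# With B initialised to zero, the adapted layer starts out identical to the base layer.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
A = [[0.1, 0.2, 0.3]]
B = [[0.0], [0.0]]
print(lora_forward(W, A, B, [1.0, 2.0, 3.0]))

# Rank-8 adapters on a 4096 x 4096 projection train well under 1% of its weights.
print(lora_trainable_fraction(4096, 4096, 8))

# The loss drops below log(2) once the policy prefers the chosen response more than the reference does.
print(dpo_loss(-3.0, -3.0, -3.0, -3.0), dpo_loss(-1.0, -5.0, -3.0, -3.0))
```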

Benchmarks: SLMs vs. LLMs

Key Takeaway: A 14B-parameter SLM (Phi-4) nearly matches GPT-4o Mini in math while being 100× smaller and 3.75× faster.

Conclusion: The Future is Small (and Efficient)

SLMs aren’t just “LLM-lite” — they’re a smarter way to deploy AI where it matters most. With innovations in sparse architectures, quantization, and edge computing, SLMs are becoming the go-to choice for real-world applications that demand speed, affordability, and precision.


Final Thoughts

SLMs prove that bigger isn’t always better — what matters is how you use them. Whether you’re deploying models on edge devices or fine-tuning for niche tasks, the future of efficient AI is here.

What’s your take? Have you experimented with SLMs like Phi-3 or Llama 3.2? Drop your experiences in the comments. I’d love to hear what’s working (or not) for you.

If you found this breakdown useful, give it a 👏 & follow me for more practical AI/ML insights.

Next up: Optimizing SLMs for Real-Time Applications — stay tuned!🫡
