MCP or not, Manus Made a Choice

Last Updated on April 17, 2025 by Editorial Team

Author(s): Kelvin Lu

Originally published on Towards AI.

First Contact with Manus

Manus has made waves as China’s second big AI innovation after DeepSeek in 2025. At first glance, it looks like a typical multi-agent system — but there’s more to it than meets the eye.

I decided to put it to the test. First, I asked Manus to compare salaries and job opportunities for Java developers, data engineers, machine learning engineers, and data scientists. It didn’t just return some generic answer — it neatly created a to-do list, figured out where to collect the information, gathered all the necessary information using a browser, extracted information from the webpages, and put together a well-structured report. There were a few minor issues but it did a decent job.

Then, I threw it a curveball. I asked it to compare property prices across different suburbs, deliberately including a typo and a completely made-up location. Impressively, Manus caught both mistakes, asking me whether I wanted to correct the typo and ignore the fake suburb. In the end, it even produced a report with impressive charts. The result was still not perfect, but it’s definitely impressive.

One thing really stood out: before doing anything, Manus first created a sandbox and set up its environment. Unlike other AI vendors, who prefer to hide the details, Manus put everything on the table:

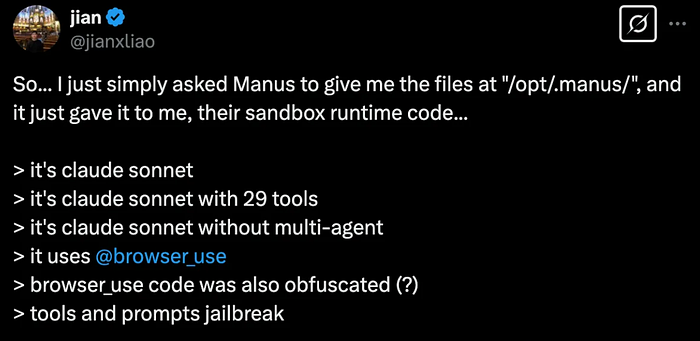

Not long ago, someone proudly announced they had jailbroken Manus. The developer’s reaction? A casual shrug: “That’s how it’s designed.”

In another post, Manus even revealed its internal structure:

This is an unusual design. Most public AI systems keep their inner workings under wraps, exposing only the bare minimum to users. Manus does the opposite. Its architecture is also very different from that of other agent-based systems, which tend to adopt MCP as their protocol.

So, let’s take a step back and think critically: Why is Manus taking this unique approach? What are the pros and cons of MCP? And when does it make sense to use or avoid it?

MCP Primer

If you’ve been keeping an eye on the AI world, you’ve probably noticed the sudden buzz around MCP. Lately, my newsletter feed has been flooded with people discussing MCP, asking what it is, or just wondering why it’s trending. Some say Cursor + MCP rules everything. There are already a number of MCP servers and even MCP service catalogs, even though the concept emerged only a few months ago.

But seriously — what is MCP, anyway? Let’s have a closer look.

Agentic development is widely regarded as the key to the future, enabling generative AI to evolve beyond just being a chatbot. With this approach, AI applications can actively gather information and take meaningful actions.

However, there hasn’t been a standardized way for generative AI applications to interact with agents. Each agent is traditionally handcrafted, and for an application to recognise them, developers must manually define every agent.

MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.

— Anthropic

Surprisingly, quite a few introductory posts don’t explain MCP correctly. According to the official documentation, the MCP architecture has the following major components:

Clients

A client is an LLM-based interface or tool (like Claude for Desktop or a code editor like Cursor) that can discover and invoke MCP servers. This is how the user’s text prompts are turned into actual function calls without constantly switching between systems.

Servers

A server in MCP terms is anything that exposes resources or tools to the model. For example, you might build a server that provides a “get_forecast” function (tool) or a “/policies/leave-policy.md” resource (file-like content). Servers manage the following components:

- Tools: Functions the model can call with user approval (e.g., createNewTicket, updateDatabaseEntry).

- Resources: File-like data the model can read, such as “company_wiki.md” or a dataset representing financial records.

- Prompts: Templated text that helps the model perform specialized tasks.
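To make the three component types concrete, here is a minimal sketch in plain Python. It deliberately does not use the official MCP SDK: `ToyServer`, its `tool` decorator, and the `summarise_policy` prompt are hypothetical names invented for illustration, and the forecast text is a placeholder. Only `get_forecast` and `/policies/leave-policy.md` come from the example above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ToyServer:
    """Illustrative stand-in for an MCP server; NOT the official SDK."""
    name: str
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    resources: Dict[str, str] = field(default_factory=dict)
    prompts: Dict[str, str] = field(default_factory=dict)

    def tool(self, fn: Callable[..., str]) -> Callable[..., str]:
        # Register the function under its own name so a client can discover it.
        self.tools[fn.__name__] = fn
        return fn


server = ToyServer(name="hr-and-weather")


@server.tool
def get_forecast(city: str) -> str:
    # Hypothetical tool: a real server would query a weather service here.
    return f"Forecast for {city}: sunny"


# Resource: file-like content the model can read (placeholder text).
server.resources["/policies/leave-policy.md"] = "# Leave policy\nPlaceholder text."

# Prompt: templated text that helps the model with a specialised task.
server.prompts["summarise_policy"] = "Summarise the following policy:\n{policy}"

print(sorted(server.tools))
print(server.tools["get_forecast"]("Sydney"))
```

The point of the sketch is only the shape: a server is a named bundle of callable tools, readable resources, and reusable prompt templates that a client can enumerate.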

MCP Protocol

The core of MCP is the communication protocol between clients and servers, which lets clients access the servers’ resources, tools, and prompts.
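MCP messages are JSON-RPC 2.0 requests and responses, with methods such as `tools/list` and `tools/call`. The sketch below shows roughly what that exchange looks like. The `handle` dispatcher, the `request` helper, and the `TOOLS` table are hypothetical and run in-process; a real client and server would exchange these messages over a transport, and real responses carry more fields than shown here.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool table standing in for a real server's tools.
TOOLS: Dict[str, Callable[..., str]] = {
    "get_forecast": lambda city: f"Forecast for {city}: sunny",
}


def handle(raw: str) -> str:
    """Toy server-side dispatcher for two MCP-style methods."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        result: Dict[str, Any] = {"tools": [{"name": n} for n in sorted(TOOLS)]}
    elif req["method"] == "tools/call":
        params = req["params"]
        text = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # JSON-RPC 2.0 reserves -32601 for "Method not found".
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


def request(req_id: int, method: str, params: Dict[str, Any]) -> str:
    # Build a JSON-RPC 2.0 request as the client would send it.
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "method": method, "params": params})


listing = json.loads(handle(request(1, "tools/list", {})))
call = json.loads(handle(request(
    2, "tools/call", {"name": "get_forecast", "arguments": {"city": "Sydney"}})))

print(listing["result"])
print(call["result"]["content"][0]["text"])
```

Note that the client only sees names, arguments, and results. How the server produces the result is invisible to it.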

Why was it named MCP?

The term “context” is very ambiguous, and without a clear understanding of it, developing MCP-compliant solutions can go wrong. When I first heard of MCP, I thought it might be about optimising interaction history, because that is the closest meaning of “context” in LLM development. Not surprisingly, quite a few other people were confused in the same way. How often have you heard people talk about MCP and “optimal long-short memory”? Some people even thought MCP stands for “Memory, Context, and Personalisation”. (It stands for Model Context Protocol.)

Hmm, hallucination is no joke.

The official MCP documentation is not well written. It doesn’t explain the main concept very clearly. The most intuitive explanation I could find of the term “context” was on GitHub:

A reasonable reading is that MCP is all about the plumbing that connects clients, servers, and models. It has nothing to do with conversation history. The context here is the information that lets the components communicate, not the context of a prompt. As further support for this reading, Anthropic states that one goal on its roadmap is to make remote operation stateless, which means an MCP server will not maintain conversation history:

MCP’s rapidly growing adoption speaks to its success as an interoperability protocol. Having said that, let’s turn around and look at an opposite approach.

CodeAct

General LLM agent applications either use template prompting or simply allow the LLM to “think step by step” when planning, and then use tools to take actions. CodeAct is an interesting approach described by XingYao Wang in his paper.

He noticed that LLMs perform better when reasoning in code rather than in text or JSON:

This discovery inspired the Manus team to develop a solution similar to CodeAct rather than one close to MCP. MCP came later than Manus, but developing a similar solution shouldn’t be difficult.
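The difference between JSON-style actions and code actions can be sketched as follows. This is a toy illustration of the idea, not Manus’s or the CodeAct paper’s implementation: the `get_salary` tool, the salary numbers, and the hard-coded “model output” are all invented for the example, and the restricted `exec` namespace is not a real security sandbox.

```python
import json
from typing import Dict

# Invented sample data; a real tool would call an external service.
SALARIES: Dict[str, int] = {
    "java developer": 120,
    "data engineer": 130,
    "data scientist": 125,
}


def get_salary(role: str) -> int:
    return SALARIES[role]


# Style 1: JSON actions. Each action names a single tool call, so comparing
# three roles takes three round trips plus a separate comparison step.
json_actions = [
    json.dumps({"tool": "get_salary", "args": {"role": role}}) for role in SALARIES
]

# Style 2: a code action. The (hard-coded) model output below composes the
# tool calls and the comparison in a single step.
code_action = """
best = max(roles, key=get_salary)
result = (best, get_salary(best))
"""


def run_code_action(code: str) -> object:
    # Expose only whitelisted names. This is NOT a security sandbox.
    namespace = {
        "__builtins__": {"max": max},
        "get_salary": get_salary,
        "roles": list(SALARIES),
    }
    exec(code, namespace)
    return namespace["result"]


print(len(json_actions))
print(run_code_action(code_action))
```

Because the code action can use loops, variables, and function composition, one step can do what would otherwise take several separate tool calls. That is also why an agent built this way needs an execution environment such as the sandbox Manus sets up.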

MCP and CodeAct side by side

There are two major challenges in agentic development:

- how to make the solution reliable,

- how to make the solution extensible.

Apparently, Manus and Anthropic chose different directions. MCP makes agent services easier to discover and reuse, while Manus is more interested in making the service reliable. A fundamental difference is that Manus chose centralised planning, whereas MCP distributes the planning task to separate servers. Using CodeAct as the reasoning engine, Manus has the potential to achieve better performance.

In that sense, it may be easier to embed impressive smart features in an MCP-compliant application. However, the application may still suffer badly from broken workflows and odd outcomes. MCP only provides a communication protocol, so clients have no idea how servers deal with their requests. An MCP server may have decent AI power of its own, but the client doesn’t care; to the client, MCP servers are more like dumb function providers. MCP doesn’t provide a mechanism for client-server or server-server collaboration. As the CEO of Manus mentioned, MCP results in a lengthy, inefficient system.

The success of MCP is a commendable milestone; however, the protocol is still very young.

For example, although MCP aims to provide universal connectivity for generative AI, at the moment it supports only local connections because the team hasn’t decided on the best way to handle authentication and authorisation. If you spend five minutes scanning through an MCP server catalog, you will see that most of the functions are merely thin wrappers around existing functions. The added value of MCP as a local service wrapper is fairly limited.

And I’m afraid the wish list could be much longer than that.

Parting Words

Major AI vendors already offer quite a few agent development frameworks. Each reflects a different and interesting philosophy about what the new agentic world will look like. The success of MCP proves that it answered a question many others overlooked: service publication.

Despite that, we should keep in mind that the AI industry is evolving at an unprecedented speed. Everything is changing so fast that today’s cutting edge may be totally outdated two months later. Do you remember RAG? It was a brilliant new concept not long ago, and right now you can use at least 18 technologies to boost its performance.

Agentic development is the same. New ideas emerge every day. It is too early to declare any solution the winner, and it is even harmful to expect a framework to work magic. No, MCP doesn’t do magic. It makes low-level service integration easier, but the headache of reliability and hallucination is still your problem.

Last but not least, let’s visit the museum of failed frameworks:

- CORBA

- Enterprise JavaBeans

- Jini

- DCOM

- Web Service Discovery Protocol
