Build the Smallest LLM From Scratch With PyTorch (And Generate Pokémon Names!)

Author(s): Tapan Babbar

Originally published on Towards AI.

So, there I was, toying with a bunch of Pokémon-inspired variations of my cat’s name — trying to give it that unique, slightly mystical vibe. After cycling through names like “Flarefluff” and “Nimblepawchu,” it hit me: why not go full-on AI and let a character-level language model handle this? It seemed like the perfect mini-project, and what better way to dive into character-level models than creating a custom Pokémon name generator?

Beneath the vast complexity of large language models (LLMs) and generative AI lies a surprisingly simple core idea: predicting what comes next. Large models predict the next token (a word or word fragment); ours will predict the next character. That’s really it! Every incredible model, from conversational bots to creative writers, boils down to how well it anticipates what comes next. The “magic” of LLMs? It’s in how they refine and scale this predictive ability. So, let’s strip away the hype and get to the essence.

We’re not building a massive model with millions of parameters in this guide. Instead, we’re creating a character-level language model that can generate Pokémon-style names. Here’s the twist: our dataset is tiny, with only 801 Pokémon names! By the end, you’ll understand the basics of language modeling and have your own mini Pokémon name generator in hand.

Here’s how each step is structured to help you follow along:

- Goal: A quick overview of what we’re aiming to achieve.

- Intuition: The underlying idea — no coding required here.

- Code: Step-by-step PyTorch implementation.

- Code Explanation: Breaking down the code so it’s clear what’s happening.

If you’re just here for the concepts, skip the code — you’ll still get the big picture. No coding experience is necessary to understand the ideas. But if you’re up for it, diving into the code will help solidify your understanding, so I encourage you to give it a go!

The Intuition: From Characters to Names

Imagine guessing a word letter by letter, where each letter gives you a clue about what’s likely next. You see “Pi,” and your mind jumps to “Pikachu” because “ka” often follows “Pi” in the Pokémon world. This is the intuition we’ll teach our model, feeding it Pokémon names one character at a time. Over time, the model catches on to this naming style’s quirks, helping it generate fresh names that “sound” Pokémon-like.
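To make the "what usually follows Pi?" intuition concrete, here is a minimal counting sketch. It is not the model we build below: it only tallies which character follows a prefix in a tiny hand-picked list (an assumption for illustration; the article's real data comes from pokemon.csv), and the helper name `next_char_counts` is made up for this example.

```python
from collections import Counter

# Hand-picked mini-list for illustration only; the article uses pokemon.csv.
names = ["pikachu", "pichu", "pidgey", "pikipek", "piplup"]

def next_char_counts(names, prefix):
    """Count which character follows each occurrence of `prefix`."""
    counts = Counter()
    for name in names:
        start = name.find(prefix)
        while start != -1:
            end = start + len(prefix)
            # '.' stands for "the name ends here", as in the rest of the article.
            counts[name[end] if end < len(name) else "."] += 1
            start = name.find(prefix, start + 1)
    return counts

counts = next_char_counts(names, "pi")
total = sum(counts.values())
for ch, n in counts.most_common():
    print(f"{ch!r} follows 'pi' in {n} of {total} cases")
```

A neural network replaces this lookup table with learned parameters, which lets it produce sensible guesses even for contexts it never saw verbatim.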

Ready? Let’s build this from scratch in PyTorch!

Step 1: Teaching the Model Its First “Alphabet”

Goal:

Define the “alphabet” of characters the model can use and assign each character a unique number.

Intuition:

Right now, our model doesn’t know anything about language, names, or even letters. To it, words are just a sequence of unknown symbols. And here’s the thing: neural networks understand only numbers — it’s non-negotiable! So, to make sense of our dataset, we need to assign a unique number to each character.

In this step, we’re building the model’s “alphabet” by identifying every unique character in the Pokémon names dataset. This will include all the letters, plus a special marker to signify the end of a name. Each character will be paired with a unique identifier, a number that lets the model understand each symbol in its own way. This gives our model the basic “building blocks” for creating Pokémon names and helps it begin learning which characters tend to follow one another.

With these numeric IDs in place, we’re setting the foundation for our model to start grasping the sequences of characters in Pokémon names, all from the ground up!

    import pandas as pd
    import torch
    import string
    import numpy as np
    import re
    import torch.nn.functional as F
    import matplotlib.pyplot as plt

    data = pd.read_csv('pokemon.csv')["name"]
    words = data.to_list()
    print(words[:8])
    #['bulbasaur', 'ivysaur', 'venusaur', 'charmander', 'charmeleon', 'charizard', 'squirtle', 'wartortle']

    # Build the vocabulary
    chars = sorted(list(set(' '.join(words))))
    stoi = {s:i+1 for i,s in enumerate(chars)}
    stoi['.'] = 0 # Dot represents the end of a word
    itos = {i:s for s,i in stoi.items()}

    print(stoi)
    #{' ': 1, 'a': 2, 'b': 3, 'c': 4, 'd': 5, 'e': 6, 'f': 7, 'g': 8, 'h': 9, 'i': 10, 'j': 11, 'k': 12, 'l': 13, 'm': 14, 'n': 15, 'o': 16, 'p': 17, 'q': 18, 'r': 19, 's': 20, 't': 21, 'u': 22, 'v': 23, 'w': 24, 'x': 25, 'y': 26, 'z': 27, '.': 0}

    print(itos)
    #{1: ' ', 2: 'a', 3: 'b', 4: 'c', 5: 'd', 6: 'e', 7: 'f', 8: 'g', 9: 'h', 10: 'i', 11: 'j', 12: 'k', 13: 'l', 14: 'm', 15: 'n', 16: 'o', 17: 'p', 18: 'q', 19: 'r', 20: 's', 21: 't', 22: 'u', 23: 'v', 24: 'w', 25: 'x', 26: 'y', 27: 'z', 0: '.'}

Code Explanation:

- We create `stoi`, which maps each character to a unique integer.

- The `itos` dictionary reverses this mapping, allowing us to convert numbers back into characters.

- We include a special end-of-word character (`.`) to indicate the end of each Pokémon name.
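As a quick check that the two mappings really are inverses, here is a small round-trip sketch. It rebuilds the vocabulary from a three-name stand-in list (an assumption, so the snippet runs without pokemon.csv), and the `encode` and `decode` helpers are illustrative names that do not appear in the original code.

```python
# Stand-in list so the snippet runs on its own; the article loads pokemon.csv.
words = ["bulbasaur", "ivysaur", "pikachu"]

# Same construction as above: sorted unique characters, with '.' reserved as index 0.
chars = sorted(set(" ".join(words)))
stoi = {ch: i + 1 for i, ch in enumerate(chars)}
stoi["."] = 0
itos = {i: ch for ch, i in stoi.items()}

def encode(name):
    """Turn a name into a list of integer IDs, ending with the '.' marker (0)."""
    return [stoi[ch] for ch in name + "."]

def decode(ids):
    """Turn IDs back into text and drop the trailing end-of-name marker."""
    return "".join(itos[i] for i in ids).rstrip(".")

ids = encode("pikachu")
print(ids)
print(decode(ids))  # pikachu
```

Note that `encode` raises a `KeyError` for any character missing from the vocabulary, which is a useful early warning if a new dataset contains symbols the model has never seen.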

Step 2: Building Context with N-grams

Goal:

Enable the model to guess the next character based on the context of preceding characters.

Intuition:

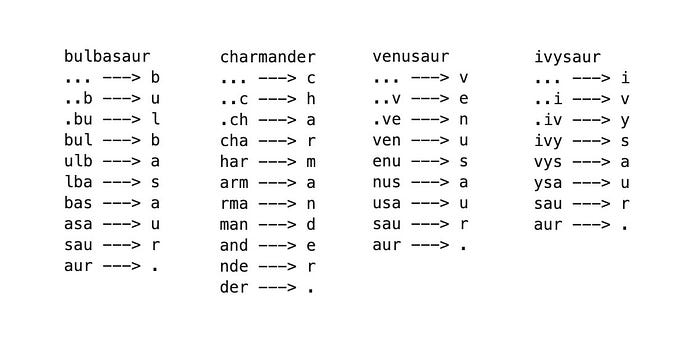

Here, we’re teaching the model by building a game: guess the next letter! The model will try to predict what comes next for each character in a name. For example, when it sees “Pi,” it might guess “k” next, as in “Pikachu.” We’ll turn each name into sequences where each character points to its next one. Over time, the model will start spotting familiar patterns that define the style of Pokémon names.

We’ll also add a special end-of-name character after each name to let the model know when it’s time to wrap up.

This example shows how we use a fixed context length of 3 to predict each next character in a sequence. As the model reads each character in a word, it remembers only the last three characters as context to make its next prediction. This sliding window approach helps capture short-term dependencies, but feel free to experiment with shorter or longer context lengths to see how they affect the predictions.

    block_size = 3 # Context length

    def build_dataset(words):
        X, Y = [], []
        for w in words:
            context = [0] * block_size # start with a blank context
            for ch in w + '.':
                ix = stoi[ch]
                X.append(context)
                Y.append(ix)
                context = context[1:] + [ix] # Shift and append new character
        return torch.tensor(X), torch.tensor(Y)

    X, Y = build_dataset(words[:int(0.8 * len(words))])
    print(X.shape, Y.shape) # Check shapes of training data

Code Explanation:

- Set Context Length: `block_size = 3` defines the context length, or the number of preceding characters used to predict the next one.

- Create `build_dataset` Function: This function prepares `X` (context sequences) and `Y` (next character indices) from a list of words.

- Initialize and Update Context: Each word starts with a blank context `[0, 0, 0]`. As characters are processed, the context shifts forward to maintain the 3-character length.

- Store Input-Output Pairs: Each context (in `X`) is paired with the next character (in `Y`), building a dataset for model training.

- Convert and Check Data: Converts `X` and `Y` to tensors, preparing them for training, and checks their dimensions. This dataset now captures patterns in character sequences for generating new names.
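To see what the sliding window produces for a single name, the sketch below prints the (context, target) pairs as text instead of integer IDs. It mirrors the shifting logic of `build_dataset` but works on strings, with `.` standing in for index 0; the helper name `context_target_pairs` is an illustrative assumption.

```python
block_size = 3  # same context length as in the article

def context_target_pairs(word, block_size):
    """Return (context, target) string pairs; '.' marks padding and end-of-name."""
    pairs = []
    context = "." * block_size          # blank context, i.e. [0, 0, 0] as IDs
    for ch in word + ".":
        pairs.append((context, ch))
        context = context[1:] + ch      # drop the oldest character, append the new one
    return pairs

for context, target in context_target_pairs("eevee", block_size):
    print(f"{context} -> {target}")
# ... -> e
# ..e -> e
# .ee -> v
# eev -> e
# eve -> e
# vee -> .
```

A name with n characters therefore contributes n + 1 training examples: one per character plus one for the end-of-name marker.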

Step 3: Building the Neural Network

Goal:

Train the model by predicting each next character and adjusting the weights based on the prediction error (the loss).

Intuition:

Here’s where it gets interesting! We’ll create a simple setup with three layers that work together to predict the next letter based on the previous three. Again, think of it like guessing letters in a word game: each time the model gets it wrong, it learns from the mistake and adjusts, improving with each try.

As it practices on real Pokémon names, it gradually picks up the style and patterns that make these names unique. Eventually, after going over the list enough times, it can come up with new names that have that same Pokémon vibe!

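    # Initialize parameters
    g = torch.Generator()
    vocab_size = len(stoi) # 28 with the mapping printed in Step 1 (indices 0 to 27)
    C = torch.randn((vocab_size, 10), generator=g) # one 10-dimensional embedding per character
    W1 = torch.randn((30, 200), generator=g) # 3 context characters x 10 dims -> 200 hidden units
    b1 = torch.randn(200, generator=g)
    W2 = torch.randn((200, vocab_size), generator=g) # hidden units -> one score per character
    b2 = torch.randn(vocab_size, generator=g)
    parameters = [C, W1, b1, W2, b2]
    for p in parameters:
        p.requires_grad = True

    for i in range(100000):
        ix = torch.randint(0, X.shape[0], (32,)) # pick a random mini-batch of 32 examples
        emb = C[X[ix]] # shape (32, 3, 10)
        h = torch.tanh(emb.view(-1, 30) @ W1 + b1) # shape (32, 200)
        logits = h @ W2 + b2 # shape (32, vocab_size)
        loss = F.cross_entropy(logits, Y[ix])
        for p in parameters:
            p.grad = None # clear gradients from the previous step
        loss.backward()
        for p in parameters:
            p.data -= 0.1 * p.grad # gradient descent step with learning rate 0.1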

Code Explanation:

- We initialize weights and biases for the embedding layer (`C`) and two linear layers (`W1`, `W2`) with random values.

- Each parameter is set to `requires_grad=True`, so that backpropagation can compute the gradients used to adjust these parameters and minimize prediction errors.

- We select a mini-batch of 32 random samples from the training data (`X`), allowing us to optimize the model more efficiently by processing multiple examples at once.

- For each batch, we look up the embeddings, pass them through the hidden layer (`W1`) with `tanh` activation, and calculate logits for the output.

- Using cross-entropy loss, the model learns to reduce errors and improve predictions with each step.
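A useful sanity check on the loss: cross-entropy is the negative log of the probability the model assigns to the correct character, and `F.cross_entropy` averages that over the mini-batch by default. The pure-Python sketch below, which assumes the 28-symbol vocabulary printed in Step 1, shows the loss you would expect from blind uniform guessing, so you have a baseline to compare the training loss against. The `cross_entropy` helper here is a simplified stand-in, not the PyTorch function.

```python
import math

def cross_entropy(probs, target):
    """Negative log-probability assigned to the correct class (single example)."""
    return -math.log(probs[target])

vocab_size = 28  # number of entries in the stoi mapping printed in Step 1

# A model that knows nothing spreads its probability evenly over all characters.
uniform = [1.0 / vocab_size] * vocab_size
baseline = cross_entropy(uniform, target=5)
print(f"uniform-guess loss: {baseline:.3f}")  # 3.332, i.e. ln(28)

# A model that puts 60% on the correct character scores far better.
confident = [0.4 / (vocab_size - 1)] * vocab_size
confident[5] = 0.6
print(f"confident-and-right loss: {cross_entropy(confident, 5):.3f}")  # 0.511
```

With randomly initialized weights the first few losses are typically well above this baseline, because the untrained model is confidently wrong rather than merely unsure; a loss that settles below about 3.33 means the model has learned something about the names.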

Step 4: Finding the Probability of the Next Character

Goal:

To generate new Pokémon names by predicting one character at a time based on the input sequence, using the model’s learned probabilities.

Intuition:

During training, the model optimized its parameters to capture the likelihood of each character following another in typical Pokémon names. Now, using these learned parameters (C, W1, b1, W2, b2), we can generate entirely new names by “predicting” one character at a time. At this step, we’re making our model “guess” the next letter that should follow a given sequence, such as “pik”.

The model doesn’t directly understand letters, so the input characters are first converted into numbers representing each character. These numbers are then padded to match the required input size and fed into the model’s “layers.” The layers are like filters trained to predict what typically follows each character. After passing through these layers, the model provides a list of probabilities for each possible character it might select next, based on what it’s learned from the Pokémon names dataset. This gives us a weighted list of potential next characters, ranked by likelihood.

In the example above, you can see that the characters ‘a’ and ‘i’ have a high likelihood of following the sequence “pik.”

    input_chars = "pik" # Example input to get probabilities of next characters

    # Convert input characters to indices based on stoi (character-to-index mapping)
    context = [stoi.get(char, 0) for char in input_chars][-block_size:] # Ensure context fits block size
    context = [0] * (block_size - len(context)) + context # Pad if shorter than block size

    # Embedding the current context
    emb = C[torch.tensor([context])]

    # Pass through the network layers
    h = torch.tanh(emb.view(1, -1) @ W1 + b1)
    logits = h @ W2 + b2

    # Compute the probabilities
    probs = F.softmax(logits, dim=1).squeeze() # Squeeze to remove unnecessary dimensions

    # Map each character to its probability and print the result
    next_char_probs = {itos[i]: probs[i].item() for i in range(len(probs))}
    print(next_char_probs)

Code Explanation:

- We convert the `context` indices into an embedded representation, a numerical format that can be fed into the model layers.

- We use the model’s layers to transform the embedded context. The hidden layer (`h`) processes it, and the output layer (`logits`) computes scores for each possible character.

- Finally, we apply the softmax function to the logits, giving us a list of probabilities. This probability distribution is stored in `next_char_probs`, mapping each character to its likelihood.
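If softmax feels like a black box, the sketch below computes it by hand and ranks the results, which is also a handy way to read a dictionary like `next_char_probs`. The four logit values are invented purely for illustration and are not outputs of the trained model.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Invented scores for four candidate characters (illustration only).
example_logits = {"a": 2.0, "i": 1.5, "z": -1.0, ".": 0.0}

probs = softmax(list(example_logits.values()))
next_char_probs = dict(zip(example_logits.keys(), probs))

# Rank candidates from most to least likely.
ranked = sorted(next_char_probs.items(), key=lambda kv: kv[1], reverse=True)
for ch, p in ranked:
    print(f"{ch!r}: {p:.3f}")
```

The ranking always matches the ordering of the logits; softmax only rescales the scores so that they are positive and sum to 1.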

Step 5: Generating New Pokémon Names

Goal:

Using the probabilities from Step 4, we aim to generate a complete name by selecting each next character sequentially until a special “end-of-name” marker appears.

Intuition:

The model has learned typical character sequences from Pokémon names and now applies this by “guessing” each subsequent letter based on probabilities. It keeps selecting characters this way until it samples the end-of-name marker. Some generated names will fit the Pokémon style perfectly, while others might be more whimsical, capturing the creative unpredictability that makes generative models fascinating. Here are a few names generated by our model:

- dwebble

- simikyu

- baltarill

- pupi

- don

- burr

- sola

- patran

- meow

- omank

- wormantis

- bune

- glisa

- whirlix

- hydol

- audinja

- digler

- skipedenneon

    for _ in range(20):
        out = []
        context = [0] * block_size # reset to a blank context for every new name
        while True:
            emb = C[torch.tensor([context])]
            h = torch.tanh(emb.view(1, -1) @ W1 + b1)
            logits = h @ W2 + b2
            probs = F.softmax(logits, dim=1)
            ix = torch.multinomial(probs, num_samples=1, generator=g).item()
            context = context[1:] + [ix]
            if ix == 0: # end-of-name marker: stop without adding '.' to the output
                break
            out.append(ix)
        print(''.join(itos[i] for i in out))

Code Explanation:

- Using softmax on logits, we get probabilities for each character.

- `torch.multinomial` chooses a character based on these probabilities, adding variety and realism to generated names.
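Why sample instead of always taking the most likely character? The standard-library sketch below contrasts the two on a made-up three-way distribution. `random.choices` plays the role that `torch.multinomial` plays in the loop above; the helper names and the probabilities are assumptions for illustration.

```python
import random

def sample_index(probs, rng):
    """Draw one index with probability proportional to probs."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def greedy_index(probs):
    """Always return the index with the highest probability."""
    return max(range(len(probs)), key=probs.__getitem__)

probs = [0.5, 0.3, 0.2]   # made-up distribution over three characters
rng = random.Random(0)    # seeded so the experiment is repeatable

draws = [sample_index(probs, rng) for _ in range(10_000)]
freqs = [draws.count(i) / len(draws) for i in range(len(probs))]
print("sampled frequencies:", freqs)          # close to [0.5, 0.3, 0.2]
print("greedy choice:", greedy_index(probs))  # always index 0
```

Greedy decoding would emit the same name every time for a given starting context, while sampling follows the learned distribution and so produces a different name on most runs.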

That’s it! You can even experiment by starting with your name as a prefix and watching the model transform it into a Pokémon-style name.
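One possible way to set up the prefix experiment is to turn the prefix into a starting context before entering the sampling loop. The helper below is a sketch under a few assumptions: the name `prefix_to_context` is invented, the vocabulary is rebuilt to match the mapping printed in Step 1, and characters outside the vocabulary are skipped (Step 4 maps them to 0 instead).

```python
import string

# Rebuild the mapping printed in Step 1: ' ' -> 1, 'a' -> 2, ..., 'z' -> 27, '.' -> 0.
stoi = {ch: i + 1 for i, ch in enumerate(" " + string.ascii_lowercase)}
stoi["."] = 0

def prefix_to_context(prefix, stoi, block_size):
    """Encode a prefix, keep its last block_size characters, left-pad with 0."""
    ids = [stoi[ch] for ch in prefix.lower() if ch in stoi and ch != "."]
    ids = ids[-block_size:]
    return [0] * (block_size - len(ids)) + ids

print(prefix_to_context("Tapan", stoi, 3))  # [17, 2, 15]  ('p', 'a', 'n')
print(prefix_to_context("Mo", stoi, 3))     # [0, 14, 16]  (padding, 'm', 'o')
```

To continue a name, you could initialize `context` with this result and `out` with the encoded prefix instead of starting from a blank context, then let the sampling loop run until it draws the end-of-name marker.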

Future Improvements

This model offers a basic approach to generating character-level text, such as Pokémon names, but it’s far from production-ready. I’ve intentionally simplified the following aspects to focus on building intuition, with plans to expand on these concepts in a follow-up article.

- Dynamic Learning Rate: Our current training setup uses a fixed learning rate of `0.1`, which might limit convergence efficiency. Experimenting with a dynamic learning rate (e.g., reducing it as the model improves) could yield faster convergence and better final accuracy.

- Overfitting Prevention: With a relatively small dataset of 801 Pokémon names, the model may start to memorize patterns rather than generalize. We could introduce techniques like dropout or L2 regularization to reduce overfitting, allowing the model to better generalize to unseen sequences.

- Expanding Context Length: Currently, the model uses a fixed `block_size` (context window) that may limit it from capturing dependencies over long sequences. Increasing this context length would allow it to better understand patterns over longer sequences, creating names that feel more complex and nuanced.

- Larger Dataset: The model’s ability to generalize and create more diverse names is limited by the small dataset. Training on a larger dataset, possibly including more fictional names from different sources, could help it learn broader naming conventions and improve its creative range.

- Temperature Adjustment: Experiment with the temperature setting, which controls the randomness of the model’s predictions. A lower temperature will make the model more conservative, choosing the most likely next character, while a higher temperature encourages creativity by allowing more varied and unexpected choices. Fine-tuning this can help balance between generating predictable and unique Pokémon-like names.
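Two of these ideas are small enough to sketch right away. The block below shows a temperature-scaled softmax and a simple step-decay learning-rate schedule in plain Python. Both are illustrations rather than the follow-up article's implementation: the function names, the drop point of 50,000 steps, and the decay factor of 0.1 are all assumptions.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Divide logits by the temperature before softmax.

    temperature < 1 sharpens the distribution, temperature > 1 flattens it.
    """
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def step_decay_lr(step, base_lr=0.1, drop_at=50_000, factor=0.1):
    """Hypothetical schedule: use base_lr, then base_lr * factor from step drop_at on."""
    return base_lr if step < drop_at else base_lr * factor

logits = [2.0, 1.0, 0.0]  # made-up scores for three characters
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: {[round(p, 3) for p in probs]}")

print(step_decay_lr(0), step_decay_lr(99_999))
```

In the PyTorch loops above, the analogous changes would be `F.softmax(logits / temperature, dim=1)` during generation and replacing the hard-coded `0.1` in the update with the scheduled value for step `i`.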

Final Thoughts: Gotta Generate ’Em All!

This is one of the simplest character-level language models, and it’s a great starting point. By adding more layers, using larger datasets, or increasing the context length, you can improve the model and generate even more creative names. But don’t stop here! Try feeding it a different set of names — think dragons, elves, or mystical creatures — and watch how it learns to capture those vibes. With just a bit of tweaking, this model can become your go-to generator for names straight out of fantasy worlds. Happy training, and may your creations sound as epic as they look!

The full source code and the Jupyter Notebook are available in the GitHub repository. Feel free to reach out if you have ideas for improvements or any other observations.
