LangChain 101: Part 3b. Talking to Documents: Embeddings and Vectorstores

Author(s): Ivan Reznikov

Originally published on Towards AI.

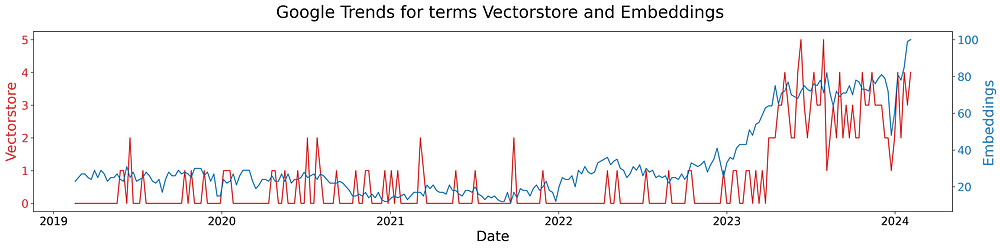

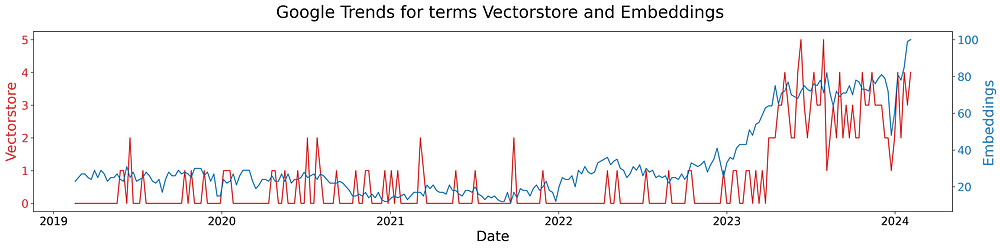

The terms "vectorstores" and "embeddings" are becoming more and more popular. As soon as integrating LLMs with our own data became possible, we started looking for tools to make it a reality.

In Part 3b of the LangChain 101 series, we'll discuss what embeddings are and how to choose one, what vectorstores are, how vector databases differ from other databases, and, most importantly, how to choose one! As usual, all code is provided on GitHub and Google Colab.

· About Part 3 and the Course

· Embeddings

∘ How to choose an embedding model?

∘ Code implementation

· Vectorstores

∘ How to choose a vectorstore?

∘ Code implementation

∘ Advanced vectorstore retrieval concepts

· Conclusion

About Part 3 and the Course

This is Part 3 of the LangChain 101 course. It is strongly recommended that you check the first two parts to understand the context of this article better.

LangChain 101 Course (updated), on medium.com

Embeddings

Embeddings are numerical representations of various forms of content, mostly, but not limited to, text and images. These arrays of numbers encapsulate the semantic meaning of their real-world counterparts. From a geometric perspective, embeddings place content within a multi-dimensional space, where each point's position reflects its relationships and similarities to other embedded items.

Let's think about different types of transport. We can use many characteristics to describe each one: speed, capacity, energy source, the terrain it can handle, etc. To begin with, let's sort them by speed.

This is a 1-dimensional representation of data. Let’s add capacity:

Adding a second dimension allowed us to split the vehicles into potential subgroups. Instead of drawing more and more dimensions, let's take a look at a potential table:

According to the table, from a “row comparison perspective,” we can find similar transport kinds: an electric car and a helicopter, a bicycle and an e-scooter, an airplane and a bullet train.
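The "row comparison" can be made concrete by treating each row as a vector and looking for its nearest neighbour. The sketch below is a minimal illustration under stated assumptions: the vehicles and feature values are hypothetical (the original table was an image and is not reproduced here), only two columns are used, and each column is scaled by its maximum so that speed does not dominate capacity before Euclidean distance is computed.

```python
import math

# Hypothetical feature rows (NOT the article's table): (top speed in km/h, passenger capacity)
vehicles = {
    "bicycle": (25, 1),
    "e-scooter": (25, 1),
    "car": (180, 5),
    "bus": (100, 50),
    "airplane": (900, 180),
}

# Scale each column to [0, 1] by its maximum so both features contribute comparably
maxima = [max(row[i] for row in vehicles.values()) for i in range(2)]
scaled = {name: tuple(v / m for v, m in zip(row, maxima)) for name, row in vehicles.items()}

def nearest(name):
    """Return the other vehicle whose scaled row is closest (Euclidean) to `name`'s row."""
    others = (n for n in scaled if n != name)
    return min(others, key=lambda n: math.dist(scaled[name], scaled[n]))

for name in vehicles:
    print(f"{name} -> {nearest(name)}")
```

With these made-up numbers, the bicycle and e-scooter rows are identical, so each is the other's nearest neighbour; real embeddings work the same way, just with learned columns instead of hand-picked ones.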

Now, in this case, it was me who defined the columns. In machine learning, algorithms trained on large amounts of data "create" such columns. For text, they might capture things like part of speech, average position in the sentence, surrounding words and context, etc. Most often, we embed chunks of text to measure how similar they are to the question being asked, much like how we judged the similarity between particular vehicles.

Let's look at another example. Suppose we have several posts in our knowledge base that we want to query. We'll embed them and, for now, store the vectors somewhere (we'll talk about vectorstores later).

During the inference phase, we embed our question using the same embedding model. The resulting vector is compared with the vectors in our storage using cosine similarity or another metric. The chunks with the most similar vectors are passed further down the RAG pipeline.
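The comparison step can be sketched in plain Python. This is a minimal illustration, assuming tiny hand-made 3-dimensional vectors in place of real embeddings (which have hundreds or thousands of dimensions); the post titles, vector values, and the `top_k` helper are hypothetical, not a LangChain API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b: 1 = same direction, 0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

# Hypothetical "stored" post vectors, standing in for real embeddings
stored = {
    "post about hiking backpacks": [0.9, 0.1, 0.0],
    "post about chef's knives": [0.0, 0.9, 0.2],
    "post about memoirs": [0.1, 0.0, 0.95],
}

def top_k(query_vec, k=2):
    """Rank stored posts by cosine similarity to the query vector and keep the best k."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in stored.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [text for _, text in scored[:k]]

query_vec = [0.8, 0.2, 0.1]  # pretend embedding of "What should I pack for a hike?"
print(top_k(query_vec))
```

Only the winning chunks (here, the top k) would be handed to the next step of the RAG pipeline.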

Think of embeddings as encoded representations that can be compared much more accurately than raw text-to-text comparison allows, thanks to their ability to condense complex, high-dimensional data into a more manageable form.

How to choose an embedding model?

When selecting an embedding model, it's essential to consider the specific needs of your application and the available resources. OpenAI's text-embedding models, such as text-embedding-ada-002 or the newer text-embedding-3-small/large, balance cost and performance for general purposes.

Exploring alternatives like HuggingFace’s embedding models or other custom embedding solutions can be beneficial for applications with specialized requirements. The choice of embedding model can significantly affect your LLM application’s cost, speed, and, most importantly, quality, making it crucial to evaluate different models based on their performance in relevant benchmarks. A great example of such a leaderboard is the Massive Text Embedding Benchmark (MTEB) Leaderboard:

MTEB Leaderboard, a Hugging Face Space by mteb (huggingface.co)

Some of the useful features you should look at whilst selecting a model:

- Dimensionality: Higher dimensions capture more information but consume more resources. Consider the trade-off between accuracy and efficiency for your application.

- Pre-training Data: Different models are trained on diverse datasets. Choose one aligned with your data domain (e.g., scientific text vs. social media) for better representation.

- Fine-tuning Capabilities: Can the model be adapted to your specific task further? This is crucial for LLMs and LangChain applications.
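To make the dimensionality trade-off concrete: a float32 vector takes 4 bytes per dimension, so raw storage grows linearly with both the number of chunks and the dimension. The back-of-the-envelope sketch below counts the raw vectors only (a real vectorstore adds index structures and metadata on top); the helper name is hypothetical, and the dimensions are the default output sizes of models mentioned in this article.

```python
BYTES_PER_FLOAT32 = 4

def raw_vector_storage_gb(num_vectors, dimensions):
    """Raw float32 storage for the vectors alone, in gigabytes (10**9 bytes)."""
    return num_vectors * dimensions * BYTES_PER_FLOAT32 / 10**9

# Default output dimensions of models mentioned in this article
for model, dims in [("all-mpnet-base-v2", 768),
                    ("text-embedding-3-small", 1536),
                    ("text-embedding-3-large", 3072)]:
    print(f"{model}: {raw_vector_storage_gb(1_000_000, dims):.3f} GB per million chunks")
```

Doubling the dimension doubles storage and roughly doubles the cost of every brute-force comparison, which is why a smaller model is sometimes the better choice.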

Code implementation

As usual, all the code is provided on GitHub and Colab.

We’ll take the same sentences we’ve discussed in our previous lecture.

LangChain 101: Part 2ab. All You Need to Know About (Large Language) Models (pub.towardsai.net)

```python
sentences = [
    "Best travel neck pillow for long flights",
    "Lightweight backpack for hiking and travel",
    "Waterproof duffel bag for outdoor adventures",
    "Stainless steel cookware set for induction cooktops",
    "High-quality chef's knife set",
    "High-performance stand mixer for baking",
    "New releases in fiction literature",
    "Inspirational biographies and memoirs",
    "Top self-help books for personal growth",
]
```

Let's look at how to call different embedding functions:

```python
# OPENAI (via LangChain; reads OPENAI_API_KEY from the environment)
from langchain_openai import OpenAIEmbeddings

openai_embedding = OpenAIEmbeddings()
openai_list = openai_embedding.embed_documents(sentences)

# or (via the OpenAI SDK directly)
from openai import OpenAI

client = OpenAI()

def get_embedding(text, model="text-embedding-3-small"):
    # replace newlines with spaces, as in OpenAI's embedding examples
    text = text.replace("\n", " ")
    return client.embeddings.create(input=[text], model=model).data[0].embedding

_list = [get_embedding(s) for s in sentences]

# DIRECTLY FROM HUGGINGFACE (runs locally, no API key needed)
from langchain_community.embeddings import HuggingFaceEmbeddings

mpnet_embeddings = HuggingFaceEmbeddings(
    model_name="sentence-transformers/all-mpnet-base-v2"
)
_list = [mpnet_embeddings.embed_query(s) for s in sentences]

# LOAD AS SENTENCETRANSFORMERS
from sentence_transformers import SentenceTransformer

gist_embedding = SentenceTransformer("avsolatorio/GIST-Embedding-v0")
# encodes the whole batch at once and returns a single tensor
_list = gist_embedding.encode(sentences, convert_to_tensor=True)
```

In the notebook, we've also built a similarity matrix of dot-product scores for all sentence pairs and plotted each model's matrix as a heatmap.
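A similarity matrix like this can be sketched without any ML libraries. The sketch normalises every vector to unit length first, because for unit vectors the dot product equals cosine similarity (OpenAI's embeddings, for example, are already normalised to length 1). The 2-dimensional vectors are hypothetical stand-ins for real sentence embeddings, and the helper names are illustrative.

```python
import math

def normalize(v):
    """Scale v to unit length so that dot products equal cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def similarity_matrix(vectors):
    """Pairwise dot products of unit-length vectors: entry [i][j] compares sentence i with j."""
    unit = [normalize(v) for v in vectors]
    return [[sum(a * b for a, b in zip(u, w)) for w in unit] for u in unit]

# Hypothetical 2-d "embeddings": two travel sentences and one kitchen sentence
vectors = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0]]
for row in similarity_matrix(vectors):
    print(" ".join(f"{x:.2f}" for x in row))
```

The matrix is symmetric with ones on the diagonal; a heatmap of it shows same-theme sentences as bright blocks.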

As you can see, all of the embedding models grouped the same-theme triplets correctly. I've noticed that the top-10 models on the leaderboard usually have similar quality. There are differences, but chances are they won't affect you much unless you choose a completely wrong model. I usually tweak embeddings only once the RAG pipeline is built and I'm trying to squeeze out additional quality.

Vectorstores

We've discussed at length how embeddings work, which model to choose, and how vectors are compared. Now, if you have a large knowledge base containing thousands or millions of vectors, you need to perform such operations efficiently. This is where vectorstores come into play.

There is some confusion across the web around the words "vectorstore" and "index", so let's straighten it out. Vectorstores store vectors (thus the name, duh). In SQL and NoSQL databases, the order of data usually doesn't matter, whereas vector DBs are designed so that similar chunks are located close to each other in a multi-dimensional space.

As for an index, its purpose in SQL and NoSQL databases is to find a specific row or document based on its position. In a vectorstore, we're also talking about positions: to find chunks similar or related to the query, we embed the query with the same embedding model and find its hypothetical position within the vectorstore. That position would count as the index. The n chunks closest to it (or all chunks within a similarity threshold) are then retrieved.
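The retrieval step (closest n chunks, or everything above a threshold) can be sketched as a brute-force in-memory store. This is a toy, assuming cosine similarity and pre-computed vectors; `TinyVectorStore` and its methods are hypothetical names, not a LangChain class. Real vectorstores avoid scanning every vector on each query by using approximate nearest-neighbour indexes such as HNSW.

```python
import math

class TinyVectorStore:
    """Brute-force store: keeps (text, vector) pairs and scans all of them per query."""

    def __init__(self):
        self.items = []

    def add(self, text, vector):
        self.items.append((text, vector))

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

    def search(self, query_vec, k=None, threshold=None):
        """Return (text, score) pairs by descending similarity, cut by top-k and/or a minimum score."""
        scored = sorted(
            ((text, self._cosine(query_vec, vec)) for text, vec in self.items),
            key=lambda pair: pair[1],
            reverse=True,
        )
        if threshold is not None:
            scored = [(t, s) for t, s in scored if s >= threshold]
        if k is not None:
            scored = scored[:k]
        return scored

store = TinyVectorStore()
store.add("speeding fines", [1.0, 0.0])
store.add("parking fines", [0.8, 0.6])
store.add("toll roads", [0.0, 1.0])
print(store.search([1.0, 0.1], k=2))            # top-n retrieval
print(store.search([1.0, 0.1], threshold=0.5))  # threshold retrieval
```

Top-k always returns the same number of chunks, even weak ones; a threshold returns only sufficiently close chunks, possibly none.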

How to choose a vectorstore?

This is a great question! Currently, there are 70+ integrations between different stores and LangChain. Heck, there is even a SKLearnVectorStore based on scikit-learn!

When choosing a vectorstore, there are a couple that I prefer to use. But in this article, I'll attempt something I haven't seen anywhere: I'll try to systematize vectorstores using decision trees. These trees reflect the author's subjective opinion. If you're a vector DB company, write me a message, and I'll add your DB and/or make edits 🙂

Decision Tree 1: Cloud vs. No-Cloud

Cloud-Hosted:

- Azure: Azure Search

- GCP: Vertex AI

- AWS: OpenSearch

- Other: Weaviate, Pinecone

Self-Hosted:

- High Technical Expertise: Faiss, HNSWLib, LanceDB

- Moderate Technical Expertise: ClickHouse, PostgreSQL with the PGVector extension, Chroma

- Low Technical Expertise: Supabase, Rockset

Decision Tree 2: Managed Service vs. Self-Hosted

- Managed Service: AWS OpenSearch, Azure Search, Vertex AI, Pinecone, Weaviate, and Supabase

- Self-Hosted: Chroma and other DBs let you manage your own infrastructure and prioritize advanced vector search functionality

Decision Tree 3: Performance and Latency

- Real-time or low latency (sub-millisecond): MemoryVectorStore and Pinecone

- Moderate latency (millisecond to tens of milliseconds): Faiss, HNSWLib, Tigris

- Tolerant of higher latency (seconds): OpenSearch, Elasticsearch, or database-based options like PGVector

Decision Tree 4: Budget and Cost

- Limited Budget: Open-source options remain the go-to, including Chroma, Faiss, OpenSearch, and ClickHouse

- Moderate Budget: Managed services like Pinecone, Supabase, and potentially AWS OpenSearch or Azure Cognitive Search with pay-as-you-go models could be appropriate, especially for cloud solutions

All vectorstore integrations are provided on the langchain website: https://python.langchain.com/docs/integrations/vectorstores

Code implementation

Now it's time to look at the Python implementation of vectorstores and indexes. Let's start with DocArrayInMemorySearch and find the most similar documents:

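```python
from langchain_community.vectorstores import DocArrayInMemorySearch

# `documents` are the chunks produced in Part 3a, `embedding` is any embedding
# model from above, and `query` is the question string
db = DocArrayInMemorySearch.from_documents(
    documents=documents,
    embedding=embedding
)

docs = db.similarity_search(query)
# or, to get (document, score) tuples
docs = db.similarity_search_with_score(query)
```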

For most vectorstores, you need to pass the documents and an embedding function. To get the score as well, call similarity_search_with_score, which returns a list of (chunk, score) tuples. In the case of DocArrayInMemorySearch, the returned score is cosine distance, so the lower, the better.
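Whether a lower or a higher score is better depends on what a given store returns, so it is worth checking each store's documentation. The relationship itself is simple: cosine distance = 1 − cosine similarity, which flips the sort order. A minimal sketch with made-up scores and hypothetical chunk names:

```python
# Hypothetical cosine similarities for three chunks
similarities = {"chunk_a": 0.92, "chunk_b": 0.35, "chunk_c": 0.71}

# Cosine distance = 1 - cosine similarity, so a smaller distance means a closer match
distances = {doc: 1 - sim for doc, sim in similarities.items()}

by_similarity = sorted(similarities, key=similarities.get, reverse=True)  # higher is better
by_distance = sorted(distances, key=distances.get)                         # lower is better

print(by_similarity)  # ['chunk_a', 'chunk_c', 'chunk_b']
print(by_distance)    # ['chunk_a', 'chunk_c', 'chunk_b']
```

Both orderings agree; only the direction of the sort changes.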

For Chroma, you can also pass persist_directory, the path where the vectorstore will be saved, and set k, the number of nearest documents to return.

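```python
from langchain_community.vectorstores import Chroma

persist_directory = "chroma_db"  # example path; any writable folder works

chroma_db = Chroma.from_documents(
    documents=documents,
    embedding=openai_embedding,
    persist_directory=persist_directory
)

# return the 3 nearest chunks together with their scores
docs = chroma_db.similarity_search_with_score(query, k=3)
```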

It’s time to look at indexes.

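```python
from langchain.indexes import VectorstoreIndexCreator
from langchain_community.document_loaders import TextLoader
from langchain_community.vectorstores import DocArrayInMemorySearch
from langchain_openai import ChatOpenAI
from langchain_text_splitters import RecursiveCharacterTextSplitter

# example splitter settings; tune chunk sizes for your data
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=100)
llm = ChatOpenAI()

index = VectorstoreIndexCreator(
    vectorstore_cls=DocArrayInMemorySearch,
    embedding=embedding,
    text_splitter=text_splitter
).from_loaders([TextLoader("file.txt")])

# retrieve relevant chunks and let the LLM answer from them
index.query(query, llm=llm)
```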

Notice that for an index, we pass the vectorstore class, the embedding, and the text_splitter, and then load data with from_loaders (or from_documents). Under the hood, VectorstoreIndexCreator (duh) builds a vectorstore and wraps it in an index, which lets us run a primitive RAG pipeline through its query method (notice that we pass an LLM as a parameter).

The last vectorstore we'll cover today is FAISS, used through its asynchronous methods. They are built on asyncio and allow other code to run concurrently while a call is waiting:

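```python
from langchain_community.vectorstores import FAISS

# Top-level `await` works in Jupyter/Colab; in a plain script, put these lines
# inside an async function and run it with asyncio.run()
faiss_db = await FAISS.afrom_documents(documents, embedding)

docs = await faiss_db.asimilarity_search(query)
# or, to also get scores
docs_and_scores = await faiss_db.asimilarity_search_with_score(query)
```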
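Why the async FAISS calls help: while one search waits on I/O (for example, a request to an embedding API), the event loop can run others. A minimal standard-library sketch; `fake_search` is a hypothetical stand-in that sleeps instead of calling a real vectorstore such as faiss_db.asimilarity_search.

```python
import asyncio
import time

async def fake_search(query, delay=0.2):
    """Hypothetical stand-in for an async similarity search that waits on I/O."""
    await asyncio.sleep(delay)  # simulates waiting for an embedding API call
    return f"results for {query!r}"

async def run_queries(queries):
    # gather() schedules all searches at once and returns results in input order
    return await asyncio.gather(*(fake_search(q) for q in queries))

start = time.perf_counter()
results = asyncio.run(run_queries(["fines", "toll roads", "parking"]))
elapsed = time.perf_counter() - start
print(results)
print(f"{elapsed:.2f}s")  # the three 0.2 s waits overlap, so this stays well under 0.6 s
```

In a notebook, where an event loop is already running, use `await run_queries([...])` instead of `asyncio.run`.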

Advanced vectorstore retrieval concepts

What we described above works like a charm most of the time. Unfortunately, "most of the time" isn't always good enough. Let's discuss some cases that can happen when retrieving documents:

1. Not a close match or sparse context

Many tutorials on vectorstores show examples of near-perfect vector matches. If I ask, "What is the fine for speeding above 20 km/h?" and there is a chunk containing "… fine for speeding above 20 km/h is …", then yes, the response will be great. But if I rephrase the question as "Is there a road where I won't get a fine for speeding?", I might still get a set of near-identical chunks about fines.

One technique that helps here is MMR, short for Maximal Marginal Relevance. Instead of simply returning the k closest chunks, it first fetches the fetch_k closest candidates and then selects k of them that are both relevant to the query and different from each other.

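```python
docs = chroma_db.max_marginal_relevance_search(query, k=5, fetch_k=10)

# or, as a retriever (search parameters go into search_kwargs)
retriever = chroma_db.as_retriever(
    search_type="mmr",
    search_kwargs={"k": 5, "fetch_k": 10}
)
```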
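For intuition, here is a minimal, self-contained sketch of greedy MMR selection: at each step it picks the candidate maximising λ·sim(query, doc) − (1 − λ)·max sim(doc, already selected). The `lambda_mult` name mirrors LangChain's parameter of the same name; the vectors, chunk names, and helper functions are hypothetical, and this is not LangChain's own implementation.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def mmr(query_vec, candidates, k=2, lambda_mult=0.5):
    """Greedy Maximal Marginal Relevance over (name, vector) candidates.

    lambda_mult=1 ranks purely by relevance; lower values penalise redundancy more.
    """
    selected = []
    remaining = list(candidates)
    while remaining and len(selected) < k:
        def score(item):
            relevance = cosine(query_vec, item[1])
            redundancy = max((cosine(item[1], s[1]) for s in selected), default=0.0)
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return [name for name, _ in selected]

# Two near-duplicate chunks about fines and one different chunk
candidates = [
    ("fine amounts, version 1", [1.0, 0.0]),
    ("fine amounts, version 2", [0.99, 0.05]),
    ("roads without speed limits", [0.6, 0.8]),
]
query_vec = [0.9, 0.3]
print(mmr(query_vec, candidates, k=2, lambda_mult=1.0))  # pure relevance: both duplicates
print(mmr(query_vec, candidates, k=2, lambda_mult=0.5))  # MMR: second duplicate replaced
```

With pure relevance, both near-duplicates win; with λ = 0.5, the second slot goes to the different chunk, which is exactly what the rephrased speeding question needs.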

2. Filtering metadata

Sometimes your vectorstore contains chunks from multiple documents, or other metadata you can filter on.

```python
docs = chroma_db.similarity_search(
    query,
    filter={"source": "SOURCE_1"}
)

# or, as a retriever (the filter goes into search_kwargs)
retriever = chroma_db.as_retriever(search_kwargs={"filter": {"source": "SOURCE_1"}})
```
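Conceptually, a metadata filter restricts the candidate set before similarity ranking. The toy sketch below assumes exact-match filters like the `{"source": "SOURCE_1"}` example; the chunks, vectors, and `filtered_search` helper are hypothetical. Real stores such as Chroma also support operators like `$in` or `$gt`.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical chunks with metadata and pre-computed vectors
chunks = [
    {"text": "Fines in city A", "metadata": {"source": "SOURCE_1"}, "vector": [1.0, 0.0]},
    {"text": "Fines in city B", "metadata": {"source": "SOURCE_2"}, "vector": [1.0, 0.05]},
    {"text": "Parking in city A", "metadata": {"source": "SOURCE_1"}, "vector": [0.3, 1.0]},
]

def filtered_search(query_vec, metadata_filter, k=2):
    """Keep chunks whose metadata matches every key/value in the filter, then rank by cosine."""
    matches = [c for c in chunks
               if all(c["metadata"].get(key) == value for key, value in metadata_filter.items())]
    matches.sort(key=lambda c: cosine(query_vec, c["vector"]), reverse=True)
    return [c["text"] for c in matches[:k]]

print(filtered_search([1.0, 0.1], {"source": "SOURCE_1"}))
```

"Fines in city B" never competes, however similar its vector is, because its source does not match.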

However, setting filters manually isn't very flexible. We can use the power of LLMs here as well: SelfQueryRetriever asks an LLM to turn the user's question into a search query plus a metadata filter.

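```python
from langchain.chains.query_constructor.base import AttributeInfo
from langchain.retrievers.self_query.base import SelfQueryRetriever

# be as descriptive as you can, as this is passed to the LLM
metadata_field_info = [
    AttributeInfo(
        name="source",
        description="Documents in use, for example `SOURCE_1`",
        type="string",
    )
]

document_content_description = "Documents"

# llm is any LangChain LLM or chat model, e.g. ChatOpenAI()
retriever = SelfQueryRetriever.from_llm(
    llm,
    chroma_db,
    document_content_description,
    metadata_field_info,
    verbose=True
)
```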

3. Saving index

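To avoid rebuilding the vectorstore from scratch every time, you can save your index. FAISS, for example, provides save_local and load_local (Chroma persists automatically to its persist_directory):

```python
from langchain_community.vectorstores import FAISS

faiss_db.save_local("vectorstore_index")

# later: reload with the same embedding model that was used to build it
faiss_db = FAISS.load_local(
    "vectorstore_index", embedding, allow_dangerous_deserialization=True
)
```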
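The point of saving is that embedding is the expensive step: vectors computed once can simply be reloaded. A toy sketch of that round trip using JSON, assuming a hypothetical dict-based index and helper names; FAISS's own save_local uses a binary index file plus a pickled docstore, not JSON.

```python
import json
import os
import tempfile

# Hypothetical toy index: chunk texts with their pre-computed vectors
index = {"texts": ["speeding fines", "toll roads"], "vectors": [[1.0, 0.0], [0.0, 1.0]]}

def save_index(index, path):
    """Write texts and vectors to a JSON file so they need not be re-embedded."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(index, f)

def load_index(path):
    """Read the texts and vectors back from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)

path = os.path.join(tempfile.mkdtemp(), "toy_index.json")
save_index(index, path)
restored = load_index(path)
print(restored == index)  # True: nothing had to be re-embedded
```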

Conclusion

There are tons of vectorstore integrations in LangChain, and since they share a unified interface, you can easily swap one vectorstore for another to check which suits you best.

This is the end of Part 3b. Talking to Documents: Embeddings and Vectorstores. In Part 3c, we’ll make a mega-deep dive into RAGs. Stay tuned!

Visit the previous lecture Part 3a, on loaders and splitters:

LangChain 101: Part 3a. Talking to Documents: Load, Split and simple RAG with LCEL (pub.towardsai.net)

or check out the full course:

LangChain 101 Course (updated), on medium.com

Clap and follow me, as this motivates me to write new parts and articles 🙂 Plus, you'll get notified when a new part is published.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.