The LLM Land Grab: How AWS, Azure, and GCP Are Sparring Over AI

Last Updated on August 11, 2023 by Editorial Team

Author(s): Tabrez Syed

Originally published on Towards AI.

In the early 2000s, cell phones were still bulky and utilitarian. But in July 2004, Motorola unveiled the Razr, with its sleek, ultra-thin aluminum body that was a stark departure from the basic phones of the day. Positioned as a premium device, the Razr flew off shelves, selling 50 million units in just two years and over 130 million across various models over four years. The Razr single-handedly revived Motorola’s stagnant mobile phone division and changed the company’s fortunes, at least for a time.

It was common then for cell phone carriers to use exclusive access to hot new phones like the Razr as a carrot to encourage customers to switch networks. Cingular Wireless scored big by landing exclusive rights to the Razr. The gambit paid off: Cingular, which became the largest wireless carrier in the US after acquiring AT&T Wireless in 2004, strengthened its position on the back of the wildly popular Razr.

Today, a similar dynamic is playing out in the world of large language models (LLMs) and cloud computing. Major players like Amazon, Microsoft, and Google are racing to meet surging demand for LLMs like GPT-3. But much like the Razr, access to the most advanced models is limited to specific cloud platforms. As customers clamor for generative AI capabilities, cloud providers are scrambling to deploy LLMs and drive the adoption of their platforms. Just as the Razr boosted Cingular, exclusive access to coveted LLMs may give certain cloud players an edge and shuffle the cloud infrastructure leaderboard. This article explores how major cloud providers are navigating the AI gold rush and using exclusivity to their advantage.

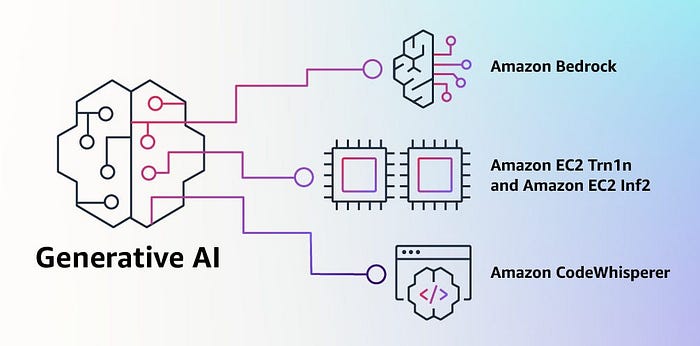

Amazon Web Services: The Swiss Army Knife Approach

With its vast array of cloud infrastructure offerings and unrivaled scale, Amazon Web Services (AWS) has firmly established itself as the dominant player in the space. However, the meteoric rise of large language models (LLMs) like GPT-3 poses a new challenge for the tech titan.

Lacking an equally buzzworthy in-house LLM, AWS risks losing ground to rivals rushing their own models to market. So instead of trying to compete model-for-model, AWS has taken a different tack: enable access to as many models as possible via partnerships and collaborations.

Enter Amazon Bedrock, announced in April 2023. Bedrock serves as a platform for developers to access and combine capabilities from a diverse roster of foundation models both old and new. This includes well-known names like Anthropic’s conversational Claude 2, AI21 Labs’ text-generating Jurassic-2, Cohere’s multipurpose Command and Embed models, and Stability AI’s image synthesis tool Stable Diffusion.

Rather than betting on any single model, AWS is positioning itself as the Swiss army knife of LLMs — granting developers the flexibility to mix and match the best features across a wide palette of models.

And AWS isn’t sitting idle on the LLM front, either. Though details remain sparse, it’s developing its own homegrown LLM, Titan. But AWS’ strength has always been its platform, not proprietary AI. By reducing friction to access and deploy LLMs, AWS is betting developers will stick with what they know.

Google Cloud: The Walled Garden Approach

As a heavyweight in AI research, Google was perfectly positioned to capitalize on the explosion of large language models (LLMs). And in 2021, they unveiled LaMDA — an LLM rivaling the capabilities of GPT-3.

Access to Google’s AI models is provided through Model Garden, part of the Vertex AI service on the Google Cloud Platform (GCP). Model Garden provides enterprise-ready foundation models, task-specific models, and APIs to kickstart workflows. Customers can use the models directly, tune them in the Generative AI Studio, or deploy them to a notebook.

As of August 2023, Model Garden includes homegrown models like the conversational PaLM 2, the text-to-image generator Imagen, and the code generation and completion model Codey. Google also offers open-source models like BERT, T5, ViT, and EfficientNet for easy deployment on GCP.

But Google isn’t limiting Model Garden exclusively to its own AI. Third-party models are already being added, indicating Google wants to be the one-stop shop for AI needs. However, competitors are unlikely to offer their most advanced models there, so the key question is which providers will license their models for availability on GCP.

Microsoft Azure: Betting on OpenAI Exclusivity

Microsoft placed an early and sizeable bet on large language models (LLMs) that is paying off handsomely. Back in 2019, before most grasped the astounding potential of LLMs, Microsoft invested a cool $1 billion into OpenAI — the maker of GPT-3.

This prescient investment secured Microsoft exclusive access to OpenAI’s rapidly advancing LLMs, including enhanced versions of GPT-4 and the image generator DALL-E. With OpenAI dominating AI headlines, Microsoft is now leveraging its exclusive access to lure customers to its Azure cloud platform. And it’s working: by riding OpenAI’s coattails, Azure is steadily chipping away at AWS’s dominance in cloud market share.

Yet Microsoft hasn’t put all its eggs in the OpenAI basket. It recently partnered with Meta on the launch of Llama 2, an open-source LLM. This allows Microsoft to diversify its LLM portfolio beyond OpenAI, hedging its bets should a new model usurp OpenAI’s GPT models as king of the hill.

The Rest: Diverse Approaches to Gain LLM Traction

The major cloud platforms like AWS, Microsoft Azure, and Google Cloud boast eye-catching proprietary or exclusively licensed AI models to lure enterprise customers. Lacking standout in-house models, smaller cloud providers are taking a flexible “BYOM” (Bring Your Own Model) approach instead. Rather than scramble to develop unique “show pony” models, they enable customers to integrate third-party or custom models into workflows.

IBM’s watsonx service exemplifies this strategy. It offers an MLOps platform to build and train AI models tailored to specific needs. For those wanting off-the-shelf models, watsonx integrates with open-source repositories like Hugging Face, letting customers tap into a vast range of pre-built models.

Salesforce also combines internal R&D with external flexibility. Its capable AI Research team has steadily built up proprietary offerings like CodeGen, XGen and CodeT5+. But Salesforce cloud also natively supports partners’ models from vendors like Anthropic and Cohere. This prevents customer lock-in while providing access to leading third-party AI.

Oracle is betting on Cohere. It participated in Cohere’s latest $270M round (along with Nvidia and Salesforce). Oracle’s customers will have access to Cohere’s LLM via Oracle Cloud Infrastructure. Cohere, for its part, is staying cloud agnostic by partnering with several cloud providers.

The AI Upstarts

Beyond the major cloud infrastructure providers, companies specializing in data analytics and AI are also making plays. Instead of competing head-to-head with the large players, they aim to fill gaps and provide complementary capabilities.

Snowflake allows customers to access a range of third-party LLMs from its Snowflake Marketplace. It’s also developing its own model, called Document AI, for information extraction. Rather than being a one-stop model shop, Snowflake focuses on its strength, data integration, while partnering on AI.

Databricks acquired MosaicML for its enterprise MLOps platform designed to build, deploy, and monitor machine learning models. MosaicML also produced the cutting-edge open-source LLM MPT-30B. With engineering and ML tooling, Databricks enables customers to create highly tailored AI solutions augmented by its partnerships.

The battle for enterprise AI dominance is still in its early, unpredictable stages. While heavyweight cloud providers like AWS, Azure, and GCP today tout exclusive access to cutting-edge models like GPT-3 and Codex, smaller players are taking a different tack. By emphasizing flexibility, customization, and partnerships, they aim to enable customers to embrace AI’s possibilities without vendor lock-in.

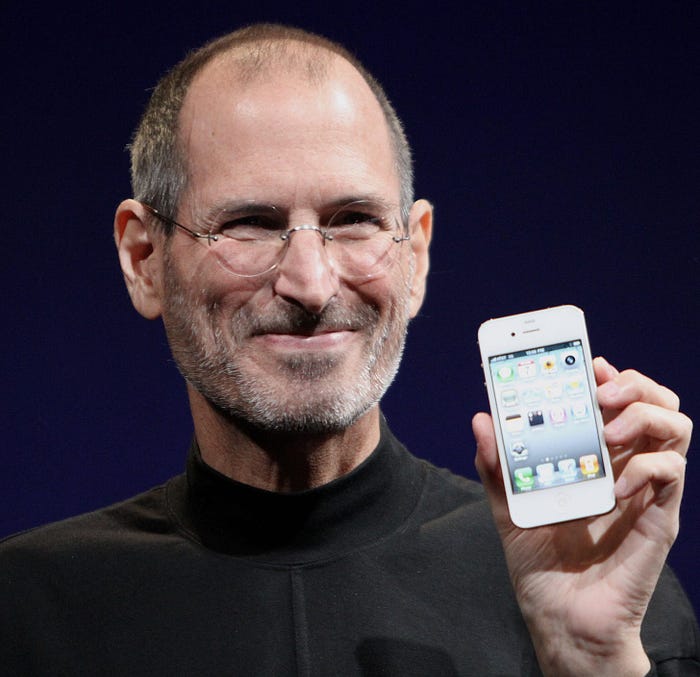

It’s easy to get absorbed by the strengths of players currently on the field. But history shows how a new entrant can scramble the board in unforeseen ways, just as in 2007, when Steve Jobs unveiled the iPhone and ended the Razr’s reign virtually overnight.

One thing is clear: the race is too early to call.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.