Google UniTune: Text-driven Image Editing

Last Updated on July 18, 2023 by Editorial Team

Author(s): Salvatore Raieli

Originally published on Towards AI.

How to use words to modify your images

Google has recently published on arXiv a new model: UniTune. The model is capable of general text-driven image editing. Let’s discover together what it is doing and why this model is an advancement.

Why this model? Why now?

Anyone who has tried to engage in AI art has noticed something strange. Sure, DALL-E, stable diffusion, and MidJourney propose extraordinary results, but after the initial enthusiasm, getting exactly what you want is quite complicated. You have to have to play with parameters a bit, try to change words, and try to add more adjectives.

This approach is called prompt engineering, in which you try to assemble all the elements to achieve the desired effect. There is also an approach called reverse engineering, where starting from an image and its textual prompt, you try either to recreate it or to identify the elements that allowed it to be generated.

Incidentally, there are also resources that allow you to try starting directly from the image and try to reconstruct the elements of the textual prompt (you can try this Google Colab). However, the result is much less exciting than one would hope and often requires several attempts.

Anyone who has experience with deep learning knows what fine-tuning is. Fine-tuning a model fits a model to your particular case without having to train it from scratch again. The model considers a general capability and is adapted to a specific task (for example, a convolutional network trained on millions of images is fine-tuned to recognize flower species). This approach is also possible stable diffusion. It leads to the question, what does it mean to fine-tune a model that generates images from textual prompts?

In the case of stable diffusion, fine-tuning means fine-tuning the embedding to create personalized images based on custom styles or objects. We do not fine-tune the model from scratch but present it with new examples of certain types of images to allow it to specialize.

The problem is that we often do not know how many and what examples we need to fine-tune. How does Google Uni-tune solve this problem?

Google UniTune

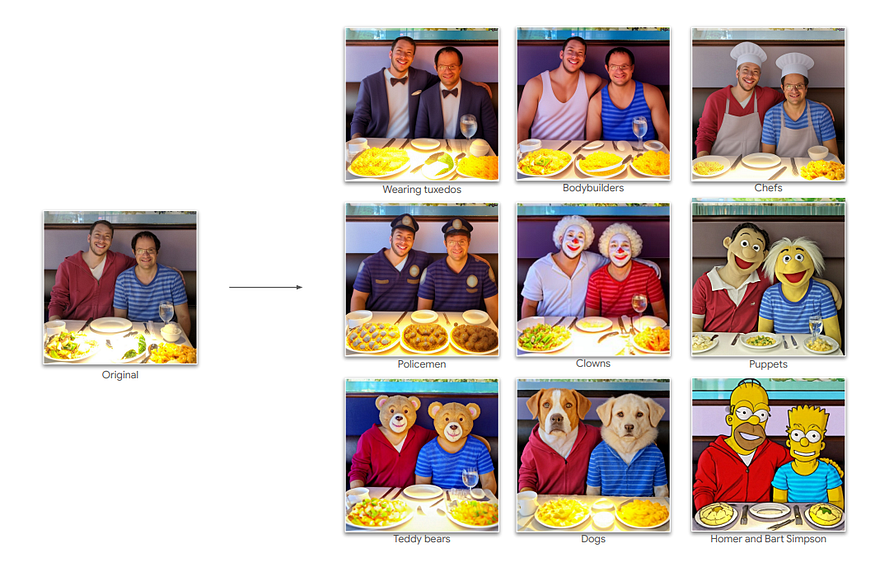

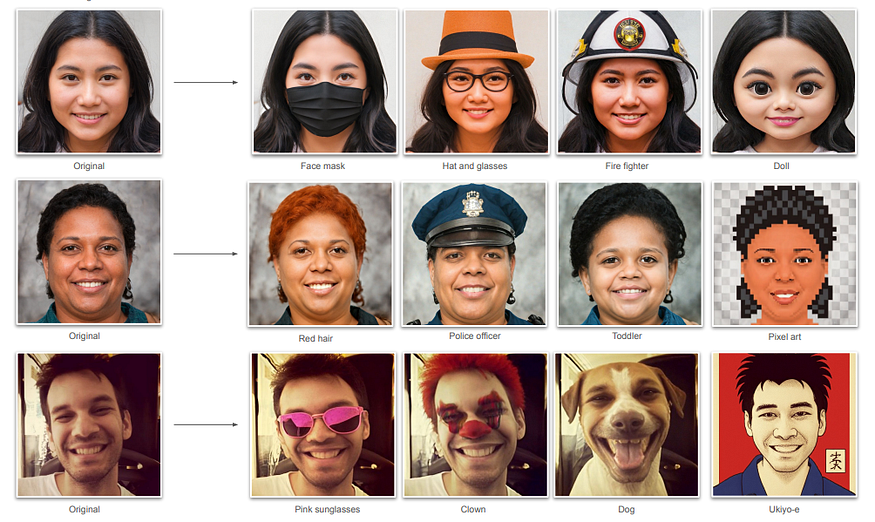

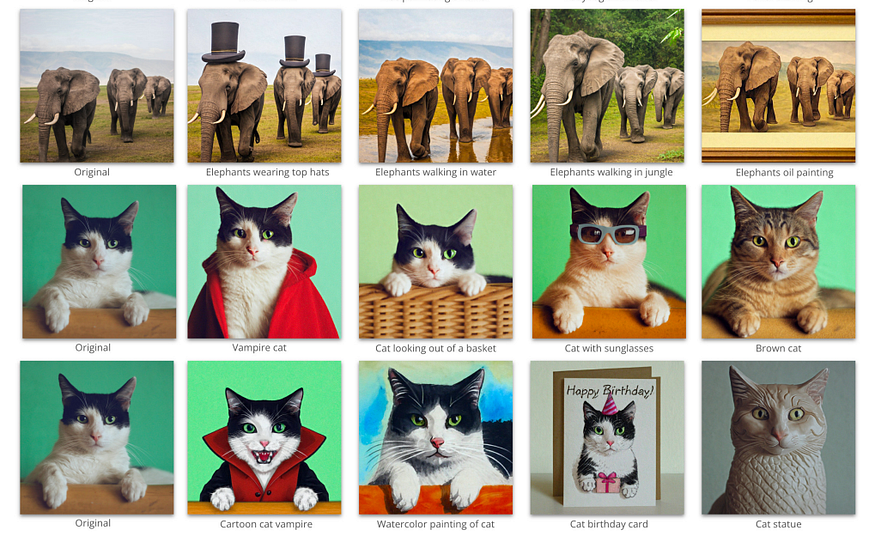

Let’s start with a general description. Google Unitune takes an image and a textual description and edits the image while retaining a high level of semantic and visual fidelity to the original. Also, unlike other models, it does not require another input or masks or sketches.

At the core of our method is the observation that with the right choice of parameters, we can fine-tune a large text-to-image diffusion model on a single image, encouraging the model to maintain fidelity to the input image while still allowing expressive manipulations. We used Imagen as our text-to-image model, but we expect UniTune to work with other large-scale models as well. We test our method in a range of different use cases, and demonstrate its wide applicability — original article

Meanwhile, as we can see from the description, the model behind UniTune is Imagen (a stable diffusion-like model that takes a textual prompt as input and returns an image). Second, generally, we try to avoid overfitting, and fine-tuning helps to prevent it. However, in this case, since we want to maintain high fidelity to the image, some over-fitting is beneficial.

The model takes the image on the left and the prompt (in this case, “minion”), with different amounts of Classifier Free Guidance weights the result evolves and fine-tunes the image

the article briefly explains the fine-tuning process:

Our goal is to convert an input of (base_image, edit_prompt) into an edited_image. In a nutshell, our system fine-tunes a text-to-image and super-resolution Imagen models on pairs of (base_image, rare_tokens) for a very low number of iterations, and then samples from the model while conditioning on text in the form “[rare_tokens] edit_prompt”.

They also use Classifier Free Guidance, a technique used by text-to-image models like Imagen to guide the model to align with the textual prompt.

In addition, the authors also experimented with interpolation using interpolation.

Also, in-panting (used by DALL-E 2) needs an explicit mask to perform edits. UniTune, on the other hand, does not need a mask but only a textual prompt

UniTune is not lacking in limitations, and in general, these emerge when Imagen encounters difficulties (meaning that the capacity of the technique is limited by the underlying text-to-image model). In some cases, subject faces may be swapped or sometimes cloned (repeated more than once). Another problem highlighted that, in some cases, it was hard for us to find

a good balance between fidelity and expressiveness (especially when dealing with small edits).

Also, latency is an issue; the first step (fine-tuning the base model) takes 3 minutes using TPUv4, and needs to be run once per input image. Subsequent steps, on the other hand, take about 30 seconds. In addition, UniTune uses numerous parameters to tune the final output.

Parting thoughts

In this article, Google presented UniTune, a simple approach for text-driven image editing. UniTune places objects in the scene or makes global edits that preserve semantic details from only a text description.

In the future, this approach can be used to edit photos or do other image editing by explaining with text prompts to the program what changes one wants to make. Also, this approach could be used by users on cell phones (filters, editing photos quickly before uploading them). In any case, the authors note, it can be improved in the future (automatically tweaking the fidelity-expressiveness dials, boosting the success rate, and speeding up the generating process).

In addition, this idea opens up more global perspectives, such as a better understanding of diffusion model embedding, reducing the number of weights, and testing the hypothesis of using fine-tuning with a single example for other models such as GPT as well.

On the other hand, in the article, they also discuss the potentially problematic nature of their work:

However, we recognize that applications of this research may impact individuals and society in complex ways (see [2] for an overview). In particular, this method illustrates the ease with which such models can be used to alter sensitive characteristics such as skin color, age and gender. Although this has long been possible by means of image editing software, text-to-image models can make it easier

If you have found it interesting:

You can look for my other articles, you can also subscribe to get notified when I publish articles, and you can also connect or reach me on LinkedIn. Thanks for your support!

Here is the link to my GitHub repository, where I am planning to collect code and many resources related to machine learning, artificial intelligence, and more.

GitHub – SalvatoreRa/tutorial: Tutorials on machine learning, artificial intelligence, data science…

Tutorials on machine learning, artificial intelligence, data science with math explanation and reusable code (in python…

github.com

Or feel free to check out some of my other articles on Medium:

Reimagining The Little Prince with AI

How AI can reimagine the little prince’s characters from their descriptions

medium.com

How artificial intelligence could save the Amazon rainforest

Amazonia is at risk and AI could help preserve it

towardsdatascience.com

Blending the power of AI with the delicacy of poetry

AI is now able to generate images from text, what if we furnish them with the words of great poets? A dreamy trip…

towardsdatascience.com

Speaking the Language of Life: How AlphaFold2 and Co. Are Changing Biology

AI is reshaping research in biology and opening new frontiers in therapy

towardsdatascience.com

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.