Smart Edge Cam with Gesture Alarm

Last Updated on January 7, 2023 by Editorial Team

Author(s): Anand P V

IoT

Gesture Triggered Alarm for Security based on CV, Vector Concavity Estimation, OpenVINO, MQTT, and Pimoroni Blinkt on RPi or Jetson Nano.

Despite notable advances in technology, developing economies are still plagued by patriarchal evils such as molestation, rape, and crimes against women in general. Women are often not allowed to stay back at their workplaces late in the evening, nor are they considered safe alone even during the day, especially in the developing world. It has become imperative to help the other half of the population be safer and more productive. Why not use advances in technology to arm them with more power?

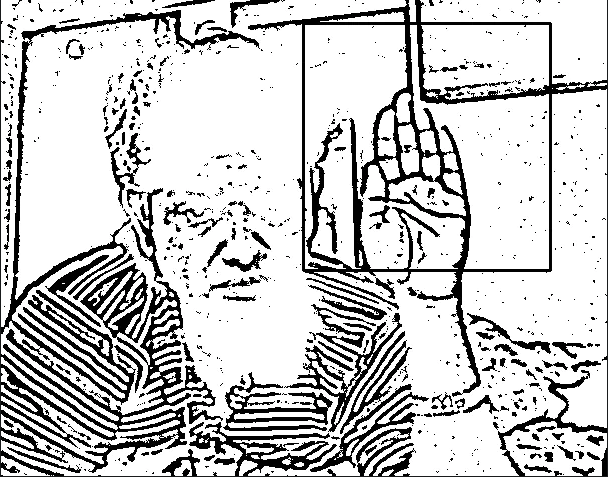

Imagine a woman sitting alone in a clinic, shop, or office, or isolated elsewhere, who needs urgent rescue. She may not have the opportunity or liberty to make a call. The surveillance camera should be smart enough to detect her gestures, whether made with a hand or an object, as an SOS signal. A similar solution can be used by the elderly at home to send requests or call for help.

Let’s evaluate our choices: whether to use image processing, deep learning, or arithmetic algorithms to analyze the incoming video frames from an SoC with a camera and trigger an alert.

There are 3 main modules in this project:

a) Localize the object used to trigger the event.

b) Analyze the motion of the object to recognize the signal.

c) Deploy on an SoC so the gadget can be installed in the environment.

This is a tricky problem, as we need a cheap solution for easy adoption, but not at the cost of accuracy, as we deal with emergencies here. Similarly, it is easier to detect a custom object but it is more useful to use your own body to signal the emergency. Bearing all this in mind, let’s discuss solutions for Localization & Gesture Detection.

A) Object Localization Methods

i) Object Detection using YOLO

We can use hardware-optimized YOLO to detect an object, say a cell phone, and easily get 5–6 FPS on a 4GB Raspberry Pi 4B with a Movidius NCS 2. We can also train YOLO to detect a custom object such as a hand. But this solution is not ideal, as the NCS stick will push up the product price (vanilla YOLO gives hardly 1 FPS on a 4GB RPi 4B).

ii) Multi-scale Template Matching

Template Matching is a 2D-convolution-based method for searching and finding the location of a template image, in a larger image. We can make template matching translation-invariant and scale-invariant as well.

- Generate a binary mask of the template image using adaptive thresholding. The template image contains the object to be detected.

- Grab the frame from the cam and generate its binary mask.

- Generate linearly spaced points between 0 and 1 in a list, x.

- Iteratively resize the input frame by each factor in x.

- Run template matching using cv2.matchTemplate.

- Find the location of the matched area and draw a rectangle.

But to detect a gesture, which is a sequence of movements of an object, we need stable detection across all frames. In experiments, multi-scale matching of a hand template did not detect the object consistently in every frame. Moreover, template matching is not ideal if you are trying to match rotated objects or objects that exhibit non-affine transformations.

iii) Object Color Masking using Computer Vision

It is very compute-efficient to create a mask for a particular color to identify the object based on its color. We can then check the size and shape of the contour to confirm the find. It would be prudent to use an object with a distinct color to avoid false positives.

This method is not only highly efficient and accurate, it also paves the way for gesture recognition using purely mathematical models, making it an ideal solution on the Edge. A demonstration of this method, along with mathematical gesture recognition, is given below.

B) Gesture Recognition Techniques

We can define a simple gesture so that it is efficient to detect. Let’s define the gesture to be a circular motion and try to detect it.

i) Auto-Correlated Slope Matching

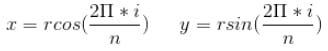

- Generate ’n’ points along the circumference of a circle, with radius = r

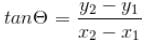

- Compute the slopes of the lines connecting each point in the sequence.

- Generate a point cloud using any of the object localization methods above. For each frame, add the center of the localized object to the point cloud.

- Compute slopes of the line connecting each point in the sequence.

- Compute correlation of slope curves generated in the above steps.

- Find the index of the maxima of the correlation curve using np.argmax()

- Rotate the point cloud queue by index value for the best match

- Compute circle similarity = 1- cosine distance between point clouds

- If correlation > threshold, then the circle is detected and alert triggered.
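One possible reading of these steps is sketched below using NumPy only. Several choices here are assumptions rather than the author's implementation: segment directions are represented as `arctan2` angles instead of raw slopes (to avoid infinite slopes), the correlation is a circular one over direction angles, the track is treated as a closed loop, and the reference circle runs counterclockwise in mathematical axes, so a gesture in the opposite sense would need a mirrored reference.

```python
import numpy as np


def circle_points(n, r=1.0):
    """n points evenly spaced on a circle of radius r centred at the origin."""
    t = 2.0 * np.pi * np.arange(n) / n
    return np.stack([r * np.cos(t), r * np.sin(t)], axis=1)


def segment_angles(points):
    """Direction angle of each segment, treating the sequence as a closed loop."""
    d = np.roll(points, -1, axis=0) - points
    return np.arctan2(d[:, 1], d[:, 0])


def circle_similarity(track):
    """Align a track to a reference circle; return cosine similarity in [-1, 1]."""
    track = np.asarray(track, dtype=float)
    n = len(track)
    ref = circle_points(n)
    a_ref, a_obs = segment_angles(ref), segment_angles(track)
    # Circular cross-correlation of segment directions for every shift k.
    corr = [np.sum(np.cos(a_ref - np.roll(a_obs, k))) for k in range(n)]
    shift = int(np.argmax(corr))
    # Rotate the point queue so that it starts where the reference starts.
    aligned = np.roll(track, shift, axis=0)
    # Remove the position offset; cosine similarity already ignores scale.
    aligned = aligned - aligned.mean(axis=0)
    a, b = aligned.ravel(), ref.ravel()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    # 1 - cosine distance is the cosine similarity.
    return float(a @ b / denom) if denom > 0 else 0.0
```

A track would then be accepted as a circle when `circle_similarity(track)` exceeds a chosen threshold.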

Once implemented, the above algorithm was found to work. But in practice, the distance between the localized points varies with rotation speed and FPS. A neater and simpler solution is to use linear algebra to check whether the movement of points is convex or concave.

ii) Concavity Estimation using Vector Algebra

If all the vectors in the point cloud are concave, then it represents circular motion.

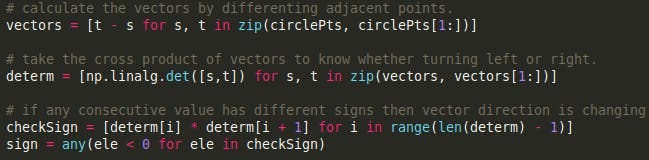

- Compute the vectors by differencing adjacent points.

- Compute vector length using np.linalg.norm

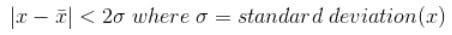

- Exclude the outlier vectors by defining boundary distribution

- If the distance between points < threshold, then ignore the motion

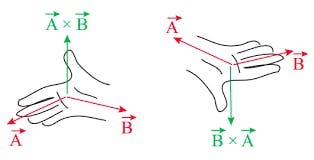

- Take the cross product of vectors to detect left or right turns.

- If any consecutive value has a different sign, then the direction is changing. Hence, compute a rolling multiplication.

- Find the locations of direction change (the indices where the rolling product is negative).

- Compute the variance of negative indices

- If all rolling multiplication values > 0, then motion is circular

- If the variance of negative indices > threshold, then motion is non-circular

- If % of negative values > threshold, then motion is non-circular

- Based on the 3 conditions above, the circular gesture is detected.

From the above discussion, it is clear that we can use Object Color Masking (to detect an object) and efficient algebra-based concavity estimation (to detect a gesture). If the object is far away, only a small circle will be seen, so we need to scale up the vectors based on object depth so as not to miss the gesture. For the purpose of the demo, we will deploy these algorithms on an RPi with a camera and see how it performs.

Now let’s see the gesture detection mathematical hack as code.
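A minimal sketch of the concavity steps listed earlier, assuming NumPy, is given here. It is not the project's original listing: the function name `is_circular_motion` and all threshold values are placeholders, outliers are defined as step lengths more than `outlier_k` standard deviations from the mean, and a rolling product of zero (no turn at all) is counted together with the negatives.

```python
import numpy as np


def is_circular_motion(points, min_step=2.0, outlier_k=2.0,
                       max_neg_fraction=0.1, max_neg_variance=4.0):
    """Return True if the point sequence keeps turning in one direction."""
    pts = np.asarray(points, dtype=float)
    if len(pts) < 4:
        return False
    # Vectors between adjacent points and their lengths.
    vecs = np.diff(pts, axis=0)
    lengths = np.linalg.norm(vecs, axis=1)
    # Drop near-stationary steps and length outliers (assumed criterion).
    keep = (lengths >= min_step) & (
        np.abs(lengths - lengths.mean()) <= outlier_k * lengths.std() + 1e-9)
    vecs = vecs[keep]
    if len(vecs) < 3:
        return False
    # z-component of the 2D cross product: its sign gives left or right turn.
    cross = vecs[:-1, 0] * vecs[1:, 1] - vecs[:-1, 1] * vecs[1:, 0]
    # Rolling product of consecutive turns: positive means same direction.
    rolling = cross[:-1] * cross[1:]
    if np.all(rolling > 0):
        return True
    # Assumption: zero products (straight segments) count as direction changes.
    neg_idx = np.flatnonzero(rolling <= 0)
    neg_fraction = neg_idx.size / rolling.size
    neg_variance = float(np.var(neg_idx))
    # Tolerate a few direction changes only if they are rare and clustered.
    return bool(neg_fraction <= max_neg_fraction
                and neg_variance <= max_neg_variance)
```

For example, 20 points sampled evenly around a circle of radius 50 return `True`, while a zigzag, a straight line, or a stationary object return `False`. Note that any path that turns consistently one way passes, so the check does not require a completed circle.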

If a circular motion is detected, messages are pushed to the people concerned and an alarm is triggered. The event trigger is demonstrated by flashing a red light on a Pimoroni Blinkt!, signaled using MQTT messages, and by sending an SMS to a mobile phone using the Twilio integration. Watch the project in action in the video above.

This is an efficient and practical solution for gesture detection on edge devices. Please note that you can use any object in place of the tennis ball, or any gesture other than circular motion; you just need to tweak the mathematical formula accordingly. Probably the only downsides are sensitivity to extreme lighting conditions and the need for an external object (not your body) to trigger the alarm.

To address this drawback, we can also use gesture recognition models optimized by OpenVINO to recognize sign languages and trigger alerts. You can also train a custom gesture of your own, as explained here.

However, some layers of such OpenVINO models are not supported by the MYRIAD device, as given in the table here. Hence, this module needs to be hosted on a remote server as an API, and not on the RPi.

Alternatively, train a hand gesture classification model and convert it to the OpenVINO Intermediate Representation format to deploy on an SoC.

1. First, choose an efficient object detection model such as SSD-Mobilenet, Efficientdet, Tiny-YOLO, or YOLOX, which are targeted at low-power hardware. After experimenting with all these models on the RPi 4B, SSD-Mobilenet was found to give the maximum FPS.

2. Do transfer learning of the object detection model with your custom data.

3. Convert the trained *.pb file to the Intermediate Representation (*.xml and *.bin) using Model Optimizer.

export PATH="<OMZ_dir>/deployment_tools/inference_engine/demos/common/python/:$PATH"

python3 <OMZ_dir>/deployment_tools/model_optimizer/mo_tf.py --input_model <frozen_graph.pb> --reverse_input_channels --output_dir <output_dir> --tensorflow_object_detection_api_pipeline_config <location to ssd_mobilenet_v2_coco.config> --tensorflow_use_custom_operations_config <OMZ_dir>/deployment_tools/model_optimizer/extensions/front/tf/ssd_support_api_v1.14.json

python3 object_detection_demo.py -d CPU -i <input_video> --labels labels.txt -m <location of frozen_inference_graph.xml> -at ssd

4. Finally, deploy the hardware-optimized models on the Pi.

In a similar experiment, a trained hand gesture classification model was deployed on a Jetson Nano, where it gave only 3-5 FPS. This was not very efficient, and the hardware cost was also higher. Note that the gesture trigger can be used not only to signal an emergency but to pass a message as well.

To conclude, we can consider the vector algebra model to be an efficient, cheap, and generic solution to detect gestures. Another creative way to detect gestures is to use the onboard IMU on SoCs like the Pico4ML. Just put on your creative hat to model other geometric shapes mathematically!

The source code of this solution is available here

If you have any queries or suggestions, you can reach me here

References

- Book: University Physics I — Classical Mechanics. The Cross Product and Rotational Quantities. https://phys.libretexts.org.

- Open Model Zoo Demos – OpenVINO™ Toolkit

Smart Edge Cam with Gesture Alarm was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI
