Run Mixtral 8x7b on Google Colab Free

Last Updated on January 2, 2024 by Editorial Team

Author(s): Dr. Mandar Karhade, MD. PhD.

Originally published on Towards AI.

A clever trick allows offloading some layers

Hello, wonderful people! 2023 is almost over, but LLM development shows no sign of taking a breather.

Today, we will see how Mixtral 8x7B can be run on Google Colab.

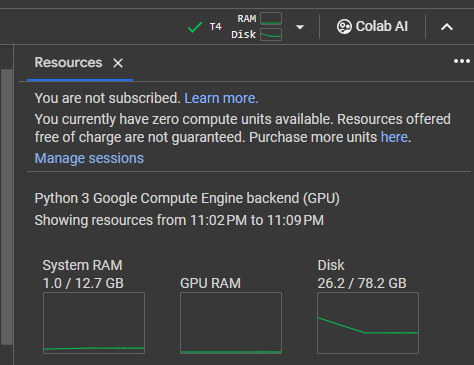

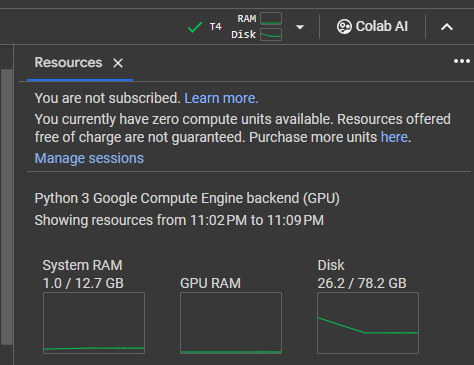

Google Colab comes with the following configuration: a T4 instance with 12.7 GB of system memory and 16 GB of VRAM. The disk size does not really matter here, but as you can see, you start with 80 GB of effective disk space.
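To see why offloading is needed on this hardware, here is a rough back-of-the-envelope sketch. It assumes a total of about 46.7 billion parameters for Mixtral 8x7B, which is the figure published by Mistral AI and not something stated in this article. It counts only the raw weights and ignores quantization metadata, activations, and the KV cache, so treat the numbers as lower bounds.

```python
# Rough weight-memory estimate for Mixtral 8x7B at several precisions.
# ASSUMPTION: ~46.7e9 total parameters (Mistral AI's published figure,
# not taken from this article).
TOTAL_PARAMS = 46.7e9
VRAM_GB = 16.0  # T4 VRAM, as quoted above
RAM_GB = 12.7   # Colab system memory, as quoted above


def weights_gb(num_params: float, bits_per_param: float) -> float:
    """Size of the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


def fits_in_vram(num_params: float, bits_per_param: float,
                 vram_gb: float = VRAM_GB) -> bool:
    """True if the raw weights would fit in GPU memory by themselves."""
    return weights_gb(num_params, bits_per_param) <= vram_gb


for bits in (16, 8, 4, 3, 2):
    size = weights_gb(TOTAL_PARAMS, bits)
    in_vram = fits_in_vram(TOTAL_PARAMS, bits)
    in_both = size <= VRAM_GB + RAM_GB
    print(f"{bits:>2}-bit: {size:6.1f} GB | VRAM only: {in_vram} | VRAM + RAM: {in_both}")
```

Under these assumptions, the weights take roughly 93 GB at 16-bit and roughly 23 GB at 4-bit. The 4-bit figure is still more than the T4's VRAM, but less than VRAM and system memory combined, which is why quantization alone is not enough and some layers have to live outside the GPU.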

First, let's fix the numpy version and triton in Colab:

```python
# fix numpy in colab
import numpy
from IPython.display import clear_output

# fix triton in colab
!export LC_ALL="en_US.UTF-8"
!export LD_LIBRARY_PATH="/usr/lib64-nvidia"
!export LIBRARY_PATH="/usr/local/cuda/lib64/stubs"
!ldconfig /usr/lib64-nvidia

!git clone https://github.com/dvmazur/mixtral-offloading.git --quiet
!cd mixtral-offloading && pip install -q -r requirements.txt
clear_output()
```
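One caveat about the setup cell: in a notebook, each `!` line runs in its own subshell, so a variable set with `!export` is generally gone by the time the next line runs. If the variables need to be visible to later commands, one option is to set them from Python instead. This is a minimal sketch, not part of the original notebook. The values are copied from the cell above, and `apply_env` is a hypothetical helper name.

```python
import os

# Values copied from the setup cell above. Whether all of them are needed
# depends on the Colab image, so treat this mapping as an assumption.
COLAB_ENV = {
    "LC_ALL": "en_US.UTF-8",
    "LD_LIBRARY_PATH": "/usr/lib64-nvidia",
    "LIBRARY_PATH": "/usr/local/cuda/lib64/stubs",
}


def apply_env(overrides: dict) -> dict:
    """Hypothetical helper: set variables on the current Python process.

    Child processes started afterwards (including later `!` commands)
    inherit whatever is in os.environ at the time they are launched.
    """
    os.environ.update(overrides)
    return {name: os.environ[name] for name in overrides}


applied = apply_env(COLAB_ENV)
for name, value in applied.items():
    print(f"{name}={value}")
```

Note that changing `LD_LIBRARY_PATH` this way affects processes launched later, not libraries the running Python process has already loaded.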

Now we will import the rest of the libraries and append the mixtral-offloading folder, created by the git clone command above, to the system path.

```python
# append the newly cloned mixtral-offloading repo to the import path
import sys
sys.path.append("mixtral-offloading")

import torch
from torch.nn import functional as F

# import quantization libraries
from hqq.core.quantize import BaseQuantizeConfig
from src.build_model import OffloadConfig, QuantConfig, build_model

# import huggingface hub
from huggingface_hub import snapshot_download

# import additional libraries to allow easier handling of the IPython environment
from IPython.display import clear_output
from tqdm.auto import trange

# import the usual transformers suspects
from transformers import AutoConfig, AutoTokenizer
from transformers.utils import logging as hf_logging

# configure huggingface logging to be a bit quiet
hf_logging.disable_progress_bar()
```
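The `from src.build_model import ...` line only works if the clone succeeded and the folder is on the import path. If the clone was silently skipped, the import fails with a less obvious error. As a sketch, a small guard can make that failure explicit. `add_repo_to_path` is a hypothetical helper, not something provided by the mixtral-offloading repository.

```python
import sys
from pathlib import Path


def add_repo_to_path(repo_dir: str) -> str:
    """Hypothetical helper: put a cloned repo on sys.path exactly once."""
    path = Path(repo_dir).resolve()
    if not path.is_dir():
        raise FileNotFoundError(
            f"{path} not found; did the git clone step succeed?"
        )
    path_str = str(path)
    if path_str not in sys.path:
        # Avoid duplicate entries when the cell is re-run.
        sys.path.append(path_str)
    return path_str


# In the Colab session, the folder name matches the clone above.
# The check keeps this snippet harmless when run elsewhere.
if Path("mixtral-offloading").is_dir():
    add_repo_to_path("mixtral-offloading")
```

Resolving to an absolute path also keeps the import working if the notebook's working directory changes later.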

