Learnings From My Data Science Career So Far

Last Updated on July 17, 2023 by Editorial Team

Author(s): Harshit Sharma

Originally published on Towards AI.

Data Science is huge, and so are the chances of getting lost.

Each one of us, Data Scientists and MLEs alike, has to be good at Maths, Coding, System Design, MLOps, and whatnot. Not everyone has the luxury of learning these things in their day job, so many of us have to learn them on our own. And that's when one realizes the endless stretch of Data Science land.

But I feel this is doable, given enough time. The question is how exactly, especially when courses are being released left and right, exciting papers are published every single day, and books keep coming out on topics that were never discussed before.

I still don’t know the answer. This article is not about prioritization and time management, but about the mistakes I made along the way, the realizations I had, and the mental models that have helped, and will keep helping, me learn more efficiently in the times ahead.

Let's get started!

1. Everything you do has an opportunity cost

But don’t give it more attention than it deserves

Let me be honest: my mind is busy assessing the opportunity cost of writing this article. I could have read the long-pending paper on prompting, implemented some algorithm for revision, done Leetcode, etc. (this “etc.” stands for at least 5 other tasks).

But I realized over time there is an opportunity cost to “worrying about opportunity cost”. And let me tell you — that's significant to the extent that you end up doing nothing.

This is for the planning maniacs trying to optimize every single action they take in the hope that their next project will finally move the world.

I have spent countless hours planning the next:

– best Data Science project to do

– best book on Machine Learning to read

– best note-taking tool to go for

But guess what,

I would have been just fine taking sub-optimal but consistent actions over time.

Takeaway —

Instead of waiting for the next best project idea, just START, however small the project is. 10 small projects are worth more than a single “best” one. Quantity is a prerequisite to Quality. The small implementations build foundations for the big ones, and compounding, once again, rules.

The ideas from different projects slowly compound, helping you make and connect the dots. You can’t simply wake up one day with a novel Transformer-based architecture without understanding what Attention truly means.

Lower your bar, go small, and learn new ideas along the way, and you will realize that:

Magic Happens at the intersection of Ideas

2. Learn to learn from less

Informational Minimalism is what we need

I wanted to study Transformers, and I ended up downloading 5 different books.

Do I really need 5 books? No.

Then why this madness? I believe it was the fear of missing out on information. What if there are better explanations in another book? What if this book is missing some important equation that could have made my understanding better?

What should you do?

Pick one book, make sure it's close to the best (we do try to be optimal here), and be fine with it for foundational understanding. Read just enough to be able to apply what you learned. Don’t pick up another resource until you need it. Otherwise, the Law of Diminishing Returns will start kicking your a**. The marginal gain in knowledge (if any) from overlapping content won’t be worth your time.

Instead, spend time applying knowledge that you already have. Fail at it and then learn the missing parts to finish the task.

Failure is a better teacher than the fear of missing out

3. Refer to the same resource for revising concepts: Reciprocal Anchoring

Don’t fire up your favorite browser every single time you revise KNN. Refer to the notes you should already have made. If you don’t have any, at least go back to the same reference you learned from. This is because

Memory is associational in all modalities

Let's break this down.

Learning happens in different modalities, namely via visuals, text, sound, etc. While learning about, let's say Voronoi diagrams for KNN in a book, your subconscious mind also registers metadata such as the position of the image on the page, colors in the image, the approximate position of the page in the book, and the preceding and succeeding context around the content.

This metadata acts as “Anchor points” that help you put your learning in a context (visual), and it is this context that lets you recall the concepts you learned not only quickly but accurately as well.

For example, I refer to:

1. Linear Algebra by Gilbert Strang for revising LA

2. Speech and Language Processing by Dan Jurafsky, each time I feel rusty on foundational NLP concepts

3. And so on

After all this, it might still be tempting to refer to a different source because who knows if you missed anything. And this is when you need to scroll up a bit and re-read the previous section.

4. Sort out your note-taking workflow sooner rather than later

I have mentioned this in my earlier posts here and here, and it's worth mentioning again.

Think of your notes as a second you, someone with whom you can park your entire knowledge base. You would want him/her to be:

- Quick, giving you information as quickly as possible

- Accessible from anywhere

- Accurate

- And most importantly, able to connect things as closely as your brain does

Give your second-you the time it deserves, and it will take care of you forever.

5. Do this if you are looking for unique project ideas

Just peep into your lives deep enough, and you won’t ever run out of them. Moreover, what could be a greater motivation to learn data science than to be able to apply it to your own life?

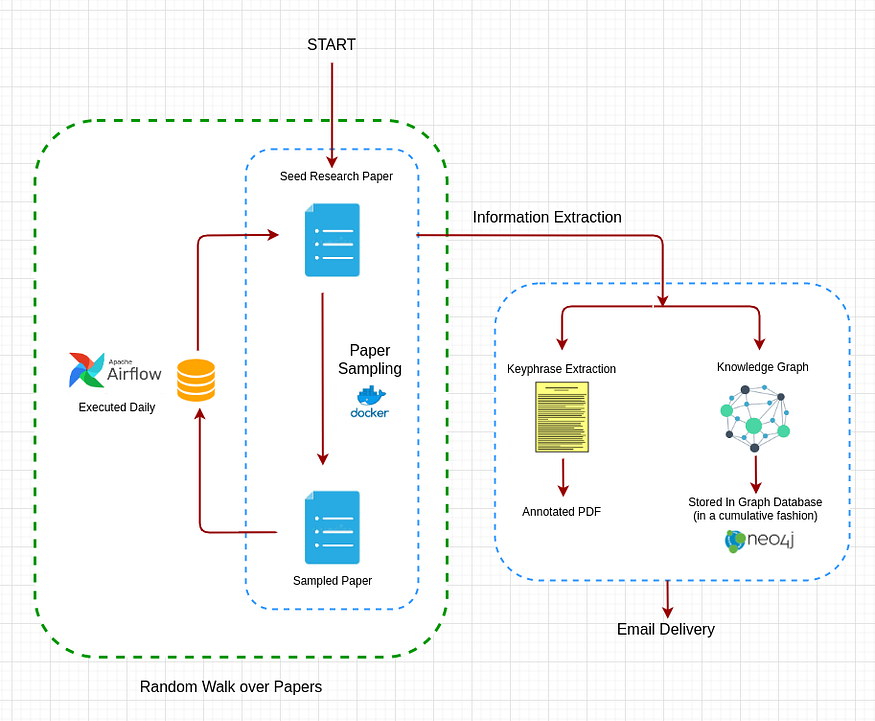

I wanted to develop the habit of reading research papers, so I created a paper annotation tool that automatically highlights important pieces in a research paper and organizes the new information in the form of a knowledge graph. In case you are interested, here is the GitHub link for the project.

You can make your projects novel either:

- by inventing a novel Algorithm and applying it to a common use case

- by applying a common Algorithm to a novel use case

Looking at the rate at which ML papers are published each day, I find the second approach to be more feasible. Just find the problems you face, something we should all already be good at, and apply a simple algorithm to solve them. If it works, move on to a more complex one.
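To make "a common algorithm on a personal use case" concrete, here is a minimal sketch that picks out candidate sentences to highlight in a piece of text by scoring them on word frequency. This is not the code behind the annotation tool mentioned above; the function names, the tiny stop-word list, and the naive sentence splitter are all assumptions made for illustration.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list (an assumption for this sketch).
STOP_WORDS = {"the", "a", "an", "of", "to", "and", "in", "is", "it",
              "that", "for", "on", "with", "as", "are", "this", "we"}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(sentence):
    """Lowercased alphabetic words with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", sentence.lower())
            if w not in STOP_WORDS]


def top_sentences(text, k=2):
    """Return the k highest-scoring sentences, kept in their original order.

    A sentence's score is the average corpus frequency of its words,
    so sentences built from frequently repeated terms rank higher.
    """
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in tokenize(s))

    def score(sentence):
        words = tokenize(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


if __name__ == "__main__":
    sample = ("Attention lets a model weigh tokens. "
              "Attention scores come from queries and keys. "
              "The weather was nice.")
    for sentence in top_sentences(sample, k=2):
        print(sentence)
```

If a baseline like this turns out to be useful on your own reading list, that is the signal to try something more sophisticated, such as TF-IDF or embedding-based ranking.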

6. Reading papers is not only for Researchers

I wish I had started this earlier. A few immediate advantages:

- Staying updated with state-of-the-art

- Real-world applications of algorithms in different contexts

- It helps you in developing deeper intuitions about algorithms, as the papers discuss their pros and cons, what worked and didn’t work, etc.

Reading research papers is the fastest way to develop mental models

But what papers to read? Well, that depends on what you want to achieve:

- Is it just for fun and exploration?

Explore Alpha Signal. It's an aggregator of the best ML papers, delivered via a weekly newsletter.

- Is it targeted to a specific topic or related to something you are attempting at your job?

Start with Survey Papers. That's a good way to jump-start your understanding of a new concept, as survey papers put everything into context and present all the approaches at a glance.

Make a habit of reading papers, and you will thank yourself for doing so.

7. Strive to be a Producer

Our brain hurts when it comes to creating something, and that's precisely why you should do it. It forces you to first convert your subconscious thoughts to dots and then connect them in a way that you otherwise wouldn’t have.

Reading books, doing courses, and watching YouTube tutorials are passive activities that trick your brain into believing that it learned something. Let me tell you: it didn’t. Don’t fall into this trap.

Instead of reading 10 books on a topic, just read one and create information using your learnings. Maybe:

- Write a blog describing your unique perspective on the topic

- Implement the concept and push it to GitHub

- Or at least take notes in your favorite app (mine is Obsidian, by the way)

To give my own example: just to share my learnings, I created a blog on Blogger.com.

And then I moved on to GitHub as I felt getting some stars would feel awesome.

I didn't stop there and moved to Substack, where I am planning to stay for a while:

What you just saw is an example of how overanalyzing opportunity costs (blogging platforms in this case) and the fear of missing out can truly take over.

Anyway, the point here is to BUILD and CREATE stuff.

8. Plan for a Marathon, but Execute like a Sprint

There have been numerous instances when I decided to do Leetcode for interview preparation. I was able to maintain a streak for a few days but eventually fell out of the loop. Back to square one. This is what happens when the “space” in the “spaced-repetition technique” is put to the test.

Things die out if not attempted aggressively (in terms of time taken to implement). Plan long-term, break the goal into pieces, and target each one with as much force as possible.

Follow Intuitive Shorts (my Substack newsletter) to read quick and intuitive summaries of ML/NLP/DS concepts.
