‘Chat With Company Documents’ Using Azure OpenAI

Last Updated on November 6, 2023 by Editorial Team

Author(s): Stephen Bonifacio

Originally published on Towards AI.

Large Language Models (LLMs) like ChatGPT store a vast repository of knowledge within their billions of parameters because they are trained on massive amounts of text from the internet. However, their knowledge is only as good as the text they were trained on. For ChatGPT, this means it has no knowledge of anything after September 2021, nor of private data stored behind firewalls, such as proprietary company data.

So far, the most popular and accessible way to work around this knowledge limitation is a method called Retrieval Augmented Generation, or RAG (the other method is fine-tuning). It works by storing text-based documents (that the LLM has no knowledge of) in an external database. When a user asks the LLM a question, the system retrieves relevant documents from this database and provides them to the LLM as a reference for answering the user’s question. This is called in-context learning or, more crudely, prompt stuffing. In most use cases, RAG is preferable to LLM fine-tuning, which is usually more involved: it requires specialized hardware (GPUs), specialized skills (e.g., machine learning), and a high-quality data set, and it is generally more expensive to implement.
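The retrieve-then-prompt flow can be sketched in a few lines of Python. This is a minimal illustration, not the Azure implementation: the document names and contents, the word-overlap scorer, and the prompt wording are all hypothetical stand-ins for a real search index and a real system prompt.

```python
import re

# Hypothetical in-memory "database" of documents the LLM has never seen.
DOCUMENTS = {
    "leave_policy.txt": "Employees are entitled to 15 days of paid vacation leave per year.",
    "expense_policy.txt": "Travel expenses must be filed within 30 days of the trip.",
    "it_policy.txt": "Passwords must be rotated every 90 days.",
}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(question, documents, top_k=1):
    """Rank documents by how many question words they share (a toy scorer)."""
    q_tokens = set(tokenize(question))
    scored = []
    for name, text in documents.items():
        overlap = len(q_tokens & set(tokenize(text)))
        scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically by document name.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for overlap, name in scored[:top_k] if overlap > 0]

def build_prompt(question, documents, top_k=1):
    """'Stuff' the retrieved documents into the prompt sent to the LLM."""
    sources = retrieve(question, documents, top_k)
    context = "\n".join(f"[{name}] {documents[name]}" for name in sources)
    return (
        "Answer the question using only the reference documents below.\n"
        f"Reference documents:\n{context}\n"
        f"Question: {question}"
    )

question = "How many days of vacation leave do employees get?"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)
```

The resulting prompt is what would be sent to the LLM in place of the bare question. A production system would swap the toy scorer for a proper search service.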

Here is some additional information from the OpenAI cookbook to help you decide which approach to use:

Fine-tuning is better suited to teaching specialized tasks or styles, and is less reliable for factual recall.

As an analogy, model weights are like long-term memory. When you fine-tune a model, it’s like studying for an exam a week away. When the exam arrives, the model may forget details, or misremember facts it never read.

In contrast, context messages (from RAG systems) are like short-term memory. When you insert knowledge into a message, it’s like taking an exam with open notes. With notes in hand, the model is more likely to arrive at correct answers.

Microsoft Azure has released a quick-start solution for its customers that uses the RAG method to augment LLMs with knowledge of company documents. The conversational agent uses OpenAI LLMs (gpt-3.5/ChatGPT or gpt-4) hosted on Azure infrastructure (not OpenAI’s), which the application calls via completion API endpoints. Use of the application is covered by the stringent Azure data protection policy, so user interactions and data shared while using it are kept secure and private. Pricing is based on per-token usage.
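For readers unfamiliar with how an application calls a model hosted on Azure, the sketch below builds (but does not send) a chat completions request using only the Python standard library. The resource name, deployment name, and key are placeholders, and the `api-version` value is an assumption that should be checked against the current Azure OpenAI documentation. The extra configuration that connects the model to a document index is not shown.

```python
import json
import urllib.request

def build_chat_request(resource, deployment, api_key, question,
                       api_version="2023-05-15"):
    """Build an HTTP request for an Azure-hosted chat model without sending it."""
    # Azure routes calls to a named deployment inside the customer's own resource.
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = {"messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )

# Placeholder values: replace with your own resource, deployment, and key.
request = build_chat_request(
    "my-resource", "my-gpt35-deployment", "<your-key>",
    "What does the calamity loan program cover?",
)
print(request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. The JSON response normally includes a `usage` field with token counts, which is what per-token billing is based on.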

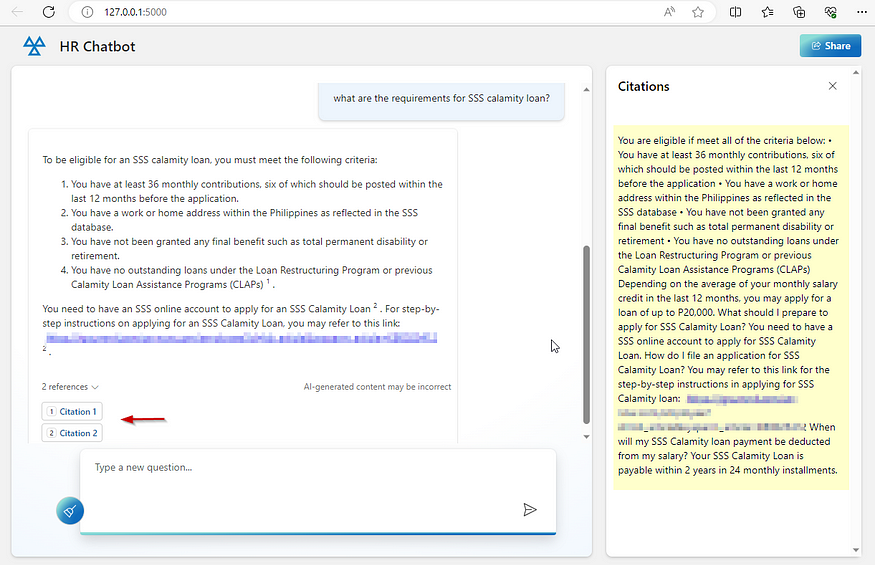

The sample chat interaction below demonstrates the application using a sample document about a Social Security calamity loan program as a reference. LLMs are known to ‘hallucinate’, that is, to give untruthful or made-up responses. To mitigate this, each response is returned with a citation referencing the source document it was based on. This allows the user to verify a given response.
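One way to picture the citation mechanism is as a post-processing step that maps markers in the model’s answer back to source files. The sketch below assumes, for illustration only, that the answer carries markers of the form `[doc1]`, `[doc2]` that index into the list of retrieved documents. The marker format, the file names, and the answer text are hypothetical.

```python
import re

def resolve_citations(answer, sources):
    """Replace [docN] markers with numbered footnotes and list the cited sources."""
    cited = []  # indexes into `sources`, in order of first appearance

    def replace(match):
        index = int(match.group(1)) - 1  # [doc1] refers to sources[0]
        if index < 0 or index >= len(sources):
            return match.group(0)  # leave unknown markers untouched
        if index not in cited:
            cited.append(index)
        return f"[{cited.index(index) + 1}]"

    text = re.sub(r"\[doc(\d+)\]", replace, answer)
    footnotes = [f"[{n}] {sources[i]}" for n, i in enumerate(cited, start=1)]
    return text, footnotes

# Hypothetical retrieved documents and model answer.
sources = ["faq.pdf", "calamity_loan_guidelines.pdf"]
answer = "Eligibility is described in the guidelines [doc2]. See also the FAQ [doc1][doc2]."
text, footnotes = resolve_citations(answer, sources)
print(text)
print("\n".join(footnotes))
```

Because every footnote points at a concrete file, a reader can open the cited document and check the claim directly.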

Deployment Options

The solution can be deployed as a web app or as a Power Virtual Agents (PVA) bot directly from the Azure OpenAI Chat playground. The data source needs to be set up first; the solution is then deployed using one of the two available options.

Web App

The web app is a basic chat interface that’s accessible via browsers on laptops or mobile devices. The web app has been released as open source by Microsoft with an MIT license, which permits commercial use. The GitHub repository can be found here. Customers are free to customize the application as required. The web app can be deployed ‘as-is’ in one click as an app service hosted in Azure. This will be deployed with the Azure logo and default color schemes.

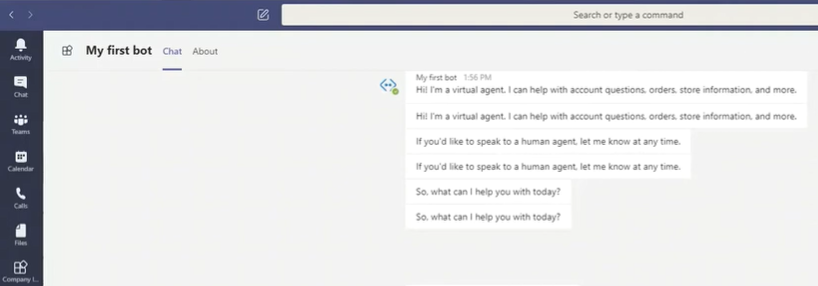

Power Virtual Agent

PVA bots can be deployed on company websites. They also integrate natively with, and can be easily embedded into, other Microsoft products such as SharePoint sites and MS Teams.

Loading company documents to the Chatbot’s knowledge database

The following document types can be loaded into the bot’s database: txt, PDF, Word files, PowerPoint files, and HTML. Text-rich documents such as company policies, contracts, and how-to instructions are recommended for this chatbot. Tabular data, such as data stored in Excel/CSV files or relational databases, is not recommended. The uploaded documents are stored in blob storage within the customer’s Azure environment and are kept private as well.
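Before uploading a folder of files, it can help to sort them by type. The sketch below is a hypothetical pre-upload check, not part of the Azure tooling. The extension sets are assumptions derived from the document types listed above (for example, `.docx` for Word and `.pptx` for PowerPoint), and the file names are made up.

```python
from pathlib import Path

# Assumed extensions for the document types named in the article.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".docx", ".pptx", ".html"}
# Tabular formats that the article advises against using.
TABULAR_EXTENSIONS = {".xlsx", ".xls", ".csv"}

def triage(filenames):
    """Group file names into upload candidates, tabular files, and everything else."""
    result = {"upload": [], "tabular": [], "unsupported": []}
    for name in filenames:
        extension = Path(name).suffix.lower()
        if extension in SUPPORTED_EXTENSIONS:
            result["upload"].append(name)
        elif extension in TABULAR_EXTENSIONS:
            result["tabular"].append(name)
        else:
            result["unsupported"].append(name)
    return result

files = ["leave_policy.PDF", "contract.docx", "sales.csv", "logo.png", "howto.html"]
groups = triage(files)
print(groups)
```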

The chatbot queries data from an index in the Azure Cognitive Search service. The service has an intuitive indexer that can load all supported document types from a single location (e.g., a local folder or an Azure Blob) into the index.

The search service compares the user query against the indexed documents to find the relevant ones that will serve as the basis for the chatbot’s response. It supports lexical (keyword) search based on the BM25 algorithm as well as embedding-based (vector) search using similarity metrics like cosine similarity or dot product. It also supports a hybrid of the two: a combination of full-text search and vector search.
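To make the three search modes concrete, here is a small, self-contained sketch of BM25 keyword scoring, cosine similarity over vectors, and a hybrid ranking. It illustrates the ideas rather than the search service’s own code. The documents, the two-dimensional "embeddings", and the parameters `k1`, `b`, and `k` are made up, and reciprocal rank fusion is used here simply as one common way to merge two rankings.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each document against the query with the BM25 formula."""
    tokenized = [doc.lower().split() for doc in docs]
    n = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            df = sum(1 for other in tokenized if term in other)
            idf = math.log(1 + (n - df + 0.5) / (df + 0.5))
            freq = tf[term]
            length_norm = 1 - b + b * len(tokens) / avg_len
            score += idf * freq * (k1 + 1) / (freq + k1 * length_norm)
        scores.append(score)
    return scores

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank(scores):
    """Return document indexes ordered from highest to lowest score."""
    return sorted(range(len(scores)), key=lambda i: -scores[i])

def reciprocal_rank_fusion(rankings, k=60):
    """Merge several rankings; documents ranked high in any list rise to the top."""
    fused = Counter()
    for ranking in rankings:
        for position, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + position)
    return sorted(fused, key=lambda doc_id: -fused[doc_id])

docs = [
    "calamity loan application requirements",
    "office holiday schedule",
    "loan repayment terms and interest",
]
# Hypothetical 2-d embeddings; a real system would get these from an embedding model.
doc_vectors = [[0.9, 0.1], [0.0, 1.0], [0.8, 0.6]]
query, query_vector = "calamity loan", [1.0, 0.0]

keyword_ranking = rank(bm25_scores(query, docs))
vector_ranking = rank([cosine_similarity(query_vector, v) for v in doc_vectors])
hybrid_ranking = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print(keyword_ranking, vector_ranking, hybrid_ranking)
```

Keyword search rewards exact term matches, vector search rewards semantic closeness, and the hybrid ranking favors documents that do well on both.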

References:

Azure OpenAI on your data (preview)
