We Will Never Achieve AGI

Author(s): Pawel Rzeszucinski, PhD

Originally published on Towards AI.

Some say artificial general intelligence (AGI) is already here. Others say it will take years or decades to arrive, maybe even centuries. I claim that we will never get there.

While the first mathematical model of a neural network was published way back in 1943, modern AI research really kicked off in the 1950s. Researchers quickly settled on the idea that AGI, a form of intelligence capable of doing any work a human can do, would eventually (and inevitably) emerge.

This idea has captured the popular imagination ever since. If you've seen the film "2001: A Space Odyssey," you'll understand why its infamous sentient computer, HAL 9000, was voted the 13th greatest villain of all time by the American Film Institute. Not all fictional depictions of AGI are so dramatic and dreadful, though: think of the bumbling Star Wars robots C-3PO and R2-D2.

All fiction aside, the topic of AGI recently grabbed my attention after I read an article proposing that we have already reached AGI. Its authors argue that current generative AI models such as ChatGPT will go down in history as the first real-world examples of AGI. More importantly, they also discuss at length why many people will inevitably disagree with this claim.

This mix of offense and defense made me realize that we'll most likely never reach a consensus about AGI. In my view, we will never achieve AGI, but not because of technological limitations.

The AI Effect

Back in the 1960s, AI researchers prophesied that AGI was only a decade away, a prediction that, of course, did not pan out.

Fast forward a few decades: surveys from as early as 2006 were already asking when AGI would arrive. Yet almost twenty years later, top professionals are still debating what AGI even means in the first place. Characterizing what constitutes an artificial, human-like intelligence is a delicate and hugely complex task, but the confusion surrounding every attempt at a solid definition is a first sign that our human nature resists declaring that we've fully "achieved" AGI.

"Artificial intelligence" belongs to the same category as terms such as "strategy," "consciousness," "value" and "morality," which Marvin Minsky aptly labeled "suitcase words." All these terms are highly subjective and can carry many different meanings, "intelligence" most of all.

For one, how do we define intelligence? Humans display a vast range of capabilities, and while many have tried to classify the various types of intelligence, none of these attempts seems to capture the full picture. It's only natural that defining "artificial intelligence" is equally challenging.

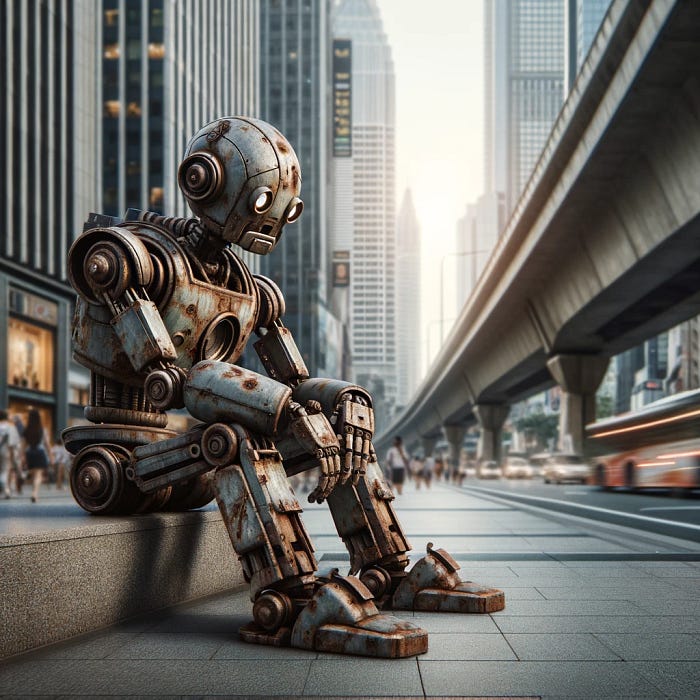

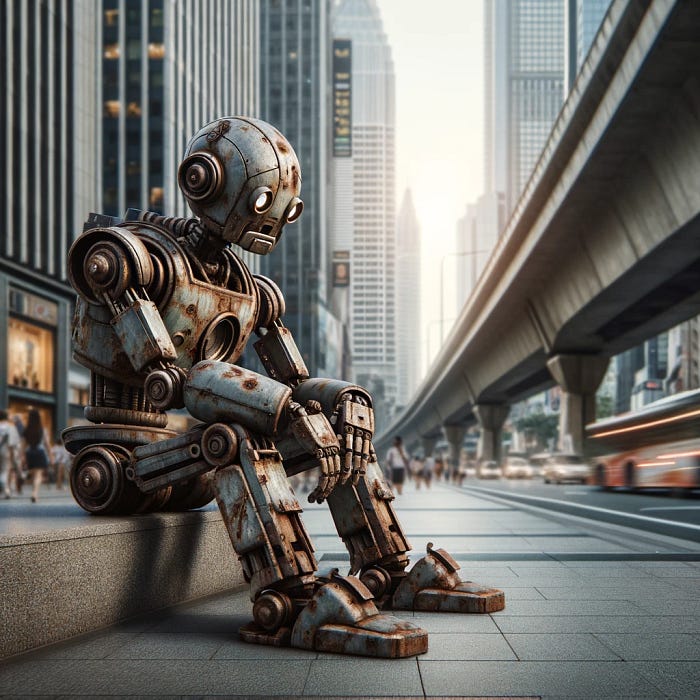

Another source of pushback is our sense of worth as humans: we've come a long way as a species, and with our complex intelligence comes the need to feel special and unique. Throughout the history of animal cognition research, whenever an animal is discovered to have an ability we thought was unique to us (like using a tool), we tend to downplay the importance of that ability. Similarly, every time an AI model displays some amazing capability we never thought possible, we trace the process behind it and downgrade it to the level of simple automation.

In the field of AI, this phenomenon is known as the "AI Effect." The AI Effect suggests that the definition of AI is a moving target, and the same can be said about AGI. The idea is neatly summed up by Tesler's Theorem, which states that "AI is whatever hasn't been done yet." Researcher Rodney Brooks put it another way: "Every time we figure out a piece of AI, it stops being magical; we say, 'Oh, that's just a computation.'"

Moving Targets And Moving Forward

A 2023 letter issued by the Future of Life Institute divided the field into two camps:

• The first camp fears that AI poses a giant threat to humanity and that we should be thinking about its future repercussions.

• The second believes we should focus on current AI issues rather than long-term existential threats.

I'm with the latter group. Given the AI Effect and the issues related to it, such as the lack of an agreed definition of AGI itself, tackling current AI problems like inherent bias or job displacement is by far the best use of our time.

I don't mean to say we should abandon all philosophical and existential ponderings about how AI affects our civilization. We have come a long way in that area too, and experts in the humanities and social sciences ought to have a seat at the table. But AI experts in industry, as a group, should focus their efforts on pushing for the practical changes this fast-moving train so desperately needs, because it's certainly not going to slow down any time soon.

This text was initially written by me with support from Bruno Giacomini and Natalia Panzer for the Forbes Technology Council, and appeared as part of my work for the Team Internet Group PLC.

Note: Content contains the views of the contributing authors and not Towards AI.