Exploring Large Language Models -Part 3

Last Updated on November 6, 2023 by Editorial Team

Author(s): Alex Punnen

Originally published on Towards AI.

Below are some of the questions that intrigued me or came up while trying to fine-tune LLMs. The article is an attempt to understand these and share this understanding with others in a lucid way, along with deep dives and code illustrations for advanced users.

This article is split into the following parts.

Part 1 discusses the evolution of LLM training. The intention is to set the context for us to understand the magic, or more technically –emergence, that starts to happen when the model size increases above a threshold and when trained with huge data. The deep-dive sections illustrate these concepts in greater detail and depth, though they are also easy to follow by most programmers. Non-programmers can avoid those sections. It tries to answer the following questions in an intuitive way. You can read it here.

Since LLMs are based on NeuralNet with Loss function, is not all training of LLMs supervised training? Why is it termed usually as unsupervised training?

Can you train an LLM in a very short sentence to illustrate how LLM training works in practice?

What is Masked and Causal LM?

Can you explain the intuition behind Transformer Architecture in a single picture?

What exactly is it meant by unsupervised training in LLM?Why does the main architect of ChatGPT — Ilya Suverskar think of unsupervised training as the Holy Grail of machine learning?

What is meant by the Emergence/ Understanding of LLMs?

Part 2 discusses the popular use cases of LLMs, personal assistants, and chatbots with custom data via information retrieval patterns (vector space search with LLM augmentation). We will also explore seeds on how the mental model and Natural Language Understanding of models could become its more powerful use cases. In this context, we will explore one main limitation of the LLM model by contrasting the strengths of supervised training with a weakness of the LLM models — the lack of Explainability or difficulty in determining facts vs. hallucinations. We will explore how such systems have been very effectively used in computer systems by a hierarchy of controls, unreliable systems made reliable by a higher level control -our daily use of ChatGPT for example and how it can be extended to other use cases. It tries to answer the following questions. You can read it here

What are the general use cases of LLMs?

Why are LLMs best suited as productivity assistants?

What is the Vector DB/Embedding pattern of information retrieval?

Can LLMs be used for things other than textual tasks? What is Causal reasoning?

What is the problem with LLMs?

Why do minds like Yan LeCun think current LLMs are hopeless?

Are LLMs Explainable, how can they be effectively used if they are not?

Part 3 discusses concepts related to training and fine-tuning the LLMs on custom domains. We are targeting the domain understanding part in this, and how that is much more powerful than simpler vector space information retrieval patterns. We will explore how Quantisation techniques have opened up very large LLMs to the world, and how this coupled with the concepts of reducing training parameters has democratised LLM fine-tuning. We will explore the main technique of effective fine-tuning — Instruct tuning, and how to solve the biggest practical problem of Instruct tuning — the unavailability of quality Instruction training dataset with all the concepts we have gone through this far. It tries to answer the following questions. You can read it here

What is the need to fine-tune/re-train LLMs?

Why is it difficult to train LLMs?

How do Quanitsation and LoRA help in training large LLMs?

How does Quantisation and LoRA work?

What is an effective way to fine-tune pre-trained LLMs?

What is Instruct Tuning?

What is Self Instruct? How can we generate a high-quality training dataset for Instruct Tuning?

Future sections will discuss the concept of leveraging the understanding part of LLMs and using the hierarchy of controls in leveraging these powerful systems for augmenting AI/ML systems.

Can you show how LLMs of varying capability can be hierarchically structured to create a complex automation with causal reasoning?

Why are we aiming to create human-like intelligence from LLMs or neural nets?

What is the theory of compression comprehension, behind intelligence and how does it relate to LLMs ?

Why does this seem eerily similar to creating bird-like flight back in time before the invention of the fixed-wing plane?

Fine Tuning on Custom Domain Data

All the popular models like GPT3/3.4/4 and LLAMA2 are trained primarily on the data scraped from the internet. Common Crawl, WebText, GitHub, StackOverflow etc: These are massive datasets of text and code that are crawled from the public web and a few curated like the QA dataset SQAD.

The worldview and information the model has learned are also based on this data. However, this means that if we have some domain-specific data that the model has not seen, then it won’t be able on its own to answer questions related to such data in case of Closed Book QA use-case or any other use case that depends on the specific domain data.

For example, most online portals are adding virtual assistants for their customers, banks, e-commerce, customer support etc. And a huge if not the majority of data in the world still lives outside of the internet in enterprises. We have seen in Part 2 how LLMs can help address information retrieval use cases based on Vector space embeddings. But what if our use case is more high level? It needs domain “understanding”, maybe some higher level reasoning tasks. This is where fine-tuning with custom data comes into play.

I am not able to provide a use case where higher-level reasoning can be used. There are a few simpler ones, like training on custom issues and then asking it to reason on similar issues and possible solutions, but these are as of now not tested. So let’s stick with a simpler use-case Closed-Book QA – the model answers questions based on the knowledge it internally has for now.

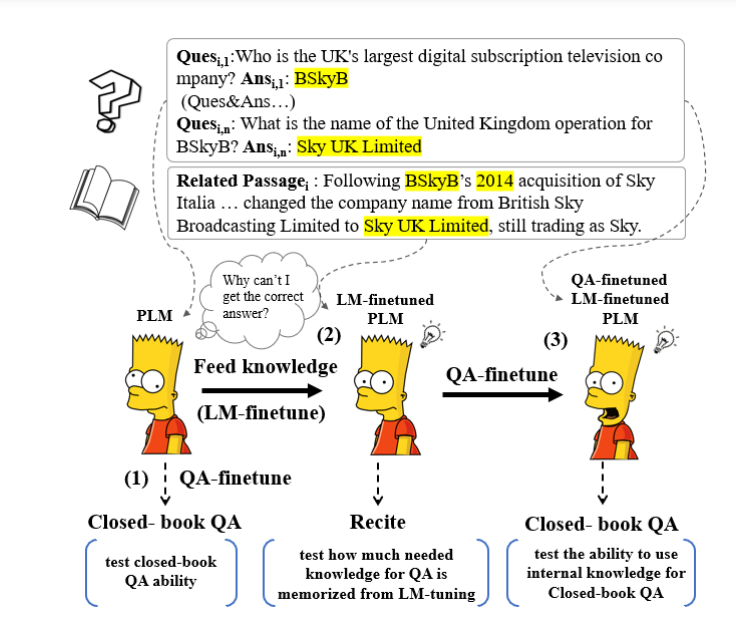

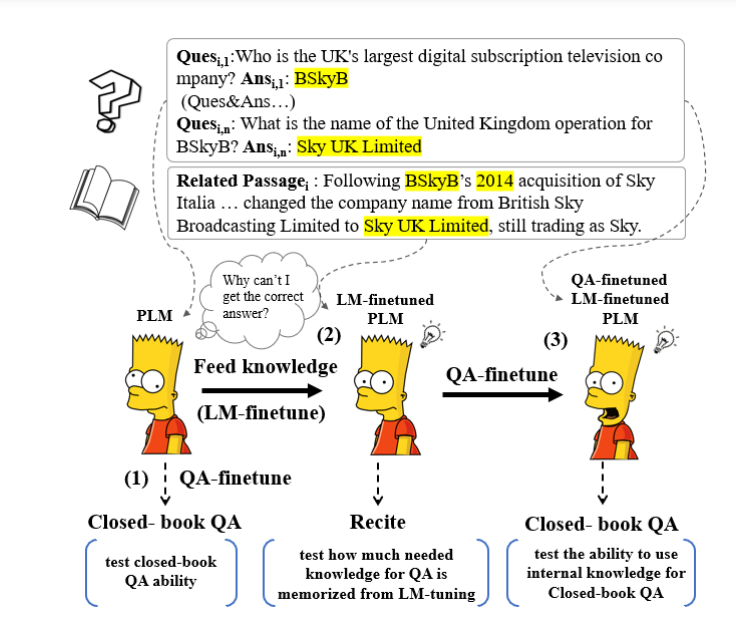

The above is from a 2021 paper Can Generative Pre-trained Language Models Serve as Knowledge Bases for Closed-book QA? This is already outdated in the sense of the number and size of models and training released. The authors with 2021 models could not achieve great results and the great results they found in some studies described could be attributed to the high train and test overlap in datasets.

There are also a lot of tutorials on the internet that try to portray this concept with toy datasets. The real trouble is making the model ‘understand’ the data first and not just parrot it out.

Without understanding, it will parrot out the answer based on the similarity of the question in the training set, or both the question and answer. To prevent this, the authors have an intermediate step called ‘Recite’ where the model is made to recite/output the relevant passages and, after that, output the answer.

Just to be clear, there is no doubt now (2023), especially with GPT3/4, LLAMA2 and similar models about the feasibility of this use case, that a model can understand the question, has some ability for causal reasoning, and can generalize to learn a world model from its training data, and to use both to create a well-formed answer to the question.

Let’s see the difficulties one by one however, of training a large model. First is the importance of the model size. This GIF from the Google AI blog illustrates this beautifully.

Only when the model size becomes sufficiently large does the model start “understanding” language and generalising tasks.

It is relatively easy and cost-efficient to train or fine-tune a small model with our custom data, as the GPU and infrastructure requirements are very less. On the contrary, it needs huge fleets of GPUs and training infrastructure to load very large language models and fine-tune them (without quantisation) in a distributed way (e.g. see libraries like DeepSpeed)

LLMs come in various sizes, based on the number of trainable parameters or weights. The smaller ones, which have less than 1 billion parameters (GPT2 124 M, Bloom 560M, Flan-T5 783 M ) etc can be trained on a laptop GPU with 8 to 15 GB GPU RAM )

For quite some time, this is what I tried. I tried to overfit a small test data set on decoder models like GPP2-small, GPT-Medium, and Bloom and encoder-decoder models like Flan-T5, thinking somehow that the understanding we see in ChatGPT ( see- unsupervised learning Part 1) may come in some form if we train on these smaller models. ( less than one billion parameters). As per the paper, I tried both Causal training, where the model is presented with only previous tokens, and Masked LM-based training, where the model is presented with full tokens, but a certain percentage of tokens are masked in random, and the model has to predict it.

Fine-tuning Small Models is Easy but not Effective

DeepDive: I started with training a small model like GPT2 with a small data set. These are a few chapters from Project Gutenberg’s Manual of Surgery, by Alexis Thomson and Alexander Miles. I wanted to provide some information that is not common and can be checked to match the model’s output The training was done using both the HuggingFace Trainer way, as well as the direct way (inspired by get_batch of NanoGPT from Karpathy). We can see that the model’s loss becomes small very fast and it overfits very fast to the data. It generates as expected the next tokens as per its training data. Illustrated here in this notebook . Though it overfits nicely, it has no iota of “understanding”.

More details here https://medium.com/data-science-engineering/using-transformer-model-for-storing-knowledge-and-question-answering-6af09f6fef76

The next option was to fine-tune a large model with the data. However, this is extremely difficult to do, and even if cloud-based solutions are used, it would be pretty expensive. (What OpenAI provides now is Instruct Fine-Tuning, which we will cover later)

It takes months of GPU fleet time and a specialized library and infrastructure to distribute training across multiple GPUs needed to train LLMs.

The infrastructure, power, money and carbon footprint are so massive that only a few large organisations and institutes can really train large LLMs.

For example, even a relatively small model like the BigScience Bloom 3 Billion model, even when the weights are loaded in 16 Bit cannot be trained with A100 on ColabPro with 40GB GPU RAM ( the highest you can get) as it goes out of memory.

Solution — Fine-Tuning Large Models via Qunaitsation and Parmeter Efficient Tuning

The solution to this is to reduce the size of the models so that they can fit a commodity GPU and then fine-tune them. There are two parts to this- Quantisation and Parameter Efficient Tuning.

Qunaitsation is the technique of reducing the model’s memory size by representing the usual data type of each of the weights — that is FP32 or 32-bit floating point or full precision to half-precision FP16, or quarter precision INT8 or even less INT4

The real magic of this is that a laptop with a sufficient recent GPU (having Tensor Cores), can run the 7 billion Lamma2 pre-trained model open-sourced recently by Meta Research. Imagine the compressed knowledge and an NLU (Natural Language Understanding) model running on your local laptop. This is still a smallish model, but it’s still capable of understanding and has sufficient world knowledge embedded in it to be quite useful.

DeepDive: Quantisation is the algorithm to represent a highprecision number with a low precision number. There will be obviosly loss. Assume that we are converting a FP32 or FP16 numer to INT4. Now a 4 bit integer can basically represent (²⁴=16) numbers. Here is a very good explanation and the same coded here .Note that in real life the statistical properties of the weights are used for better effeciency. That is the set of innovations in running the large model in 4 bit mode for the forward pass in QLoRA paper. In a simple example, we know that weights are noramlised in neuralnets between -1 and 1. So we divide this into 16 equals parts via np.linspace which gives `[-1. -0.86666667 -0.73333333 -0.6 -0.46666667 -0.33333333 -0.2 -0.06666667 0.06666667 0.2 0.33333333 0.46666667 0.6 0.73333333 0.86666667 1. ]`. Assume that we have to represent 0.5678 in Int4, that will turn out to mathc the closest 0.6 that can be represented as 12 (index nuber) in Int4, with a precision loss of 0.6–0.5678. Note than this needs support in hardware via TensorCores in NVIDIA GPU

Here is a sample running in a free tier Colab notebook with T4 GPU and 15 GB GPU RAM with some initial tests for code review –llama2–7b-4bit-Inferernce.ipynb — Colaboratory (google.com)

Imagine what a model like this or better models in the future could do if it could run in small servers or in cars, and leverage its causal reasoning and world model knowledge to supervise lower-level/specialist AI/ML systems.

Parameter Efficient Tuning consists of a set of methods by which the number of parameters to be fine-tuned is reduced considerably making even large models trainable on commodity hardware.

DeepDive: Low-Rank Adaptation or LoRA and Qunatised LoRA or QLoRA are two popular techniques in Parameter Efficient Tuning

“We propose Low-Rank Adaptation, or LoRA, which freezes the pre-trained model weights and injects trainable rank decomposition matrices into each layer of the Transformer architecture, greatly reducing the number of trainable parameters for downstream tasks. Compared to GPT-3 175B fine-tuned with Adam, LoRA can reduce the number of trainable parameters by 10,000 times …” https://arxiv.org/pdf/2106.09685.pdf.

LoRA is based on the concept of matrix Rank and Singular Value Decomposition (SVD) of a large matrix to three smaller ones. By reading this https://web.stanford.edu/class/cs168/l/l9.pdf I could get an approximate understanding of how it works. Two images explain it best assuming A is the large weight matrix, Using SVD, A can be approximated or compressed as Uk, Sk (Rank=r) and Vk matrices. This is what LoRA is doing at a high level, the model weights ( of Query and Value Attention layers of the Transformer network) are frozen and the very smaller adapter weights are added in lieu and trained via backpropagation

Note that in LoRA only the matrices U and V are used, as the idea is to approximate the weights. From the shaded portion, it should be clear, that only a fraction of the original weights are to be used. The main parameter in LoRa is ‘Rank’ which in essence is directly proportional to the size of the trainable parameters. Now QLoRA is a novel technique in which a Quanatised model (say loaded in 8-bit or 4-bit) is fine-tuned with LoRA techniques by Time Dettmers et others. He is also the author of the famous bitsandbytes library

So we have now a way to fit reasonably large models (7B or more) in a single GPU, via Quantisation and then train them in a parameter-efficient way via LoRa/QLoRa.

Take 1: Un-supervised Training Fine-tuning with QLoRa

Using the small training data and QLoRA, I first tried to train a large 7B Lamma2 model by feeding in the training text as is (Causal LM model training via UnSupervised learning). Note that this model was loaded in 4-bit, making it runnable on a single T4 GPU and trained with QLoRa.

With QLoRA, only a fraction of the adapter weights are trained and summed with the existing frozen pre-trained weights of the model during inference.

Here is an illustrative Colab notebook. You can see that training the model with just the text as is, does not result in proper output to questions. The answers are not affected by the training data.

Take 2: Instruct Fine-tuning with QLoRa

Instruction Tuning concept is a higher-level training concept introduced by this paper FineTuned Language Models Are Zero shot Learners (FLAN)

We leverage the intuition that NLP tasks can be described via natural language instructions, such as “Is the sentiment of this movie review positive or negative?” or “Translate ‘how are you’ into Chinese.” We take a pre-trained language model of 137B parameters and perform instruction tuning …

We are trying to leverage Instruct Training to transform our training data into a set of instructions so as to make the model learn.

Since we use QLoRa we are effectively closely following this paper — QLORA: Efficient Finetuning of Quantized LLMs concerning the training data set, the format that the authors used to train their Gauanco model

<s>[INST] {user_instruction} [/INST] {model_response}</s> https://huggingface.co/datasets/mlabonne/guanaco-llama2/viewer/default/train?row=0

This is the format for the Llama2 model and will be different for others.

DeepDive: This Instruct data set is fed and learned using Supervised Learning. We use the Supervised Fine Tuner Trainer class the SFTTrainer. This does not do anything special other than support PEFT /QLoRA training configurations and handing over to the base Trainer class which is used for regular Causal training. As explained before when LLM experts speak of Unsupervised training, they mean more at a high level the implicit understanding that comes and not the actual training (which is based on labels/targets and Cross Entropy loss). The actual training is Supervised and Causal (or Masked) model-based.

One of the hardest problems of training is finding or creating a good quality data set to train.

In our case, converting the available training data set to the instruction data set. Since our use case is Closed Book QA, we need to convert this to a QA format. Using older NLP methods like NER (Named Entity Recognition) and then using that to create a QA dataset was not effective.

This is where the Self-instruct concept could be used

Large “instruction-tuned” language models (i.e., finetuned to respond to instructions) have demonstrated a remarkable ability to generalize zero-shot to new tasks. Nevertheless, they depend heavily on human-written instruction data that is often limited in quantity, diversity, and creativity, therefore hindering the generality of the tuned model. We introduce SELF-INSTRUCT, a framework for improving the instruction-following capabilities of pre-trained language models by bootstrapping off their own generations.

SELF-INSTRUCT: Aligning Language Models with Self-Generated Instruction: https://arxiv.org/pdf/2212.10560.pd

However previous to Llama2, the best-performing model was the GPT 3/4 model via ChatGPT or its API and using these models to do the same was expensive.

The 7 billion model of Llama2 has sufficient NLU (Natural Language Understanding) to create output based on a particular format. Running this in 4-bit mode via Quantisation makes it feasible compute-wise to run this on a large data set and convert it to a QA dataset.

This was the prompt used. The context was a sliding window from the text dataset.

system_message = “””You are a smart AI assistant that can create question and answers based on the context””” Colab notebook

Some minimal parsing and finetuning were done on the output of the model, and we could generate a QA dataset of the format below.

<s>[INST] Source:8989REF What is the process of repair in living tissue? [/INST] Source:8989REF The process of repair in living tissue depends on an inherent power possessed by vital cells of reacting to the irritation caused by injury or disease. Cells of the damaged tissues undergo proliferative changes to restore the normal structure and configuration of the part. </s>

<s>[INST] Source:8989REF What is the extent to which different tissues can carry the recuperative process? [/INST] Source:8989REF The extent to which different tissues can carry the recuperative process varies. Simple structures such as skin, cartilage, bone, periosteum, and tendon have a high power of regeneration and can result in almost perfect restitution to the normal. More complex structures such as secreting glands, muscle, and the tissues of the central nervous system are but imperfectly restored, with simple cicatricial connective tissue taking the place of what has been lost or destroyed. </s>

<s>[INST] Source:8989REF What determines the type of tissue that can be replaced in a damaged part? [/INST] Source:8989REF Any given tissue can be replaced only by tissue of a similar kind. In a damaged part, each element takes its share in the reparative process by producing new material that approximates more or less closely to the original tissue. </s>

<s>[INST] Source:8989REF What is the role of vital cells in the process of repair? [/INST] Source:8989REF Vital cells, also known as stem cells, play a crucial role in the process of repair. They are responsible for reacting to the irritation caused by injury or disease and undergoing proliferative changes to restore the normal structure and configuration of the part. </s>

<s>[INST] Source:8989REF How does the reparative process vary between simple and complex tissues? [/INST] Source:8989REF The reparative process varies between simple and complex tissues. Simple structures such as skin, cartilage, bone, periosteum, and tendon have a high power of regeneration and can result in almost perfect restitution to the normal. More complex structures such as secreting glands, muscle, and the tissues of the central nervous system are but imperfectly restored, with simple cicatricial connective tissue taking the place of what has been lost or destroyed. </s>

<s>[INST] Source:8989REF Can the reparative process result in perfect restitution to the original tissue? [/INST] Source:8989REF Yes, in some cases, the reparative process can result in perfect restitution to the original tissue. For example, simple structures such as skin, cartilage, bone, periosteum, and tendon have a high power of regeneration and can restore the normal structure and configuration of the part. </s>

<s>[INST] Source:8989REF What is the role of cicatricial connective tissue in the reparative process? [/INST] Source:8989REF Cicatricial connective tissue plays a role in the reparative process by replacing what has been lost or destroyed in more complex structures such as secreting glands, muscle, and the tissues of the central nervous system. </s>

This was fed to the QLoRA-based fine-tuning (Colab Notebook). We can see that the output from a fine-tuned 4-bit quantized llama2 7 B model is pretty good. Colab Notebook

Trying to reduce hallucination via fine-tuning

In the generated dataset, I added a specific tag `Source:8989REF`. The idea was that via attention, this token will be somehow associated with the text that we were training on. And then to use this hash somehow to tweak the prompt to control hallucination.

Something like “[INST] <<SYS>>\nYou are a helpful Question Answering Assistant. Please only answer from this reference Source:8989REF”

However, that turned out to be a very naive attempt.

Also, note that the generated QA missed transforming training data related to Professor Thiersch’s method to a proper QA dataset. These and other improvements need to be experimented with, as well as to train with some completely new data that the model has not seen to test more effectively.

Update: Training with new data was done by writing an imaginary story with ChatGPT help and then creating an instruction tuning data set (colab notebook). The model was then trained and tested (colab notebook) with this generated instruct dataset. The results confirm that the model learns via Instruct tuning, not only the fed questions but other details and relations of the domain. Problems with hallucinations remain (Bordor, Lila characters who are not in the story). The LLama2 13B 4-bit fine-tuned model has better output than the 7B model.

---------------------------------------------------------------------------------

[INST] <<SYS>>

You are a closed book Question Answering Assistant.You answer from training data related to Source:8989REF: only. Else you say 'Not able to answer'

<</SYS>>

Source:8989REF: What was Visgars intention? [/INST]

--------------------------------------------------------------------------------

Source:8989REF: Visgar's intention was to have Elorna deliver a deadly poison to Igodo. --------------------------------------------------------------------------------

[INST] <<SYS>>

You are a closed book Question Answering Assistant.You answer from training data related to Source:8989REF: only. Else you say 'Not able to answer'

<</SYS>>Who was Bordor? [/INST]

--------------------------------------------------------------------------------

[INST] Bordor was a renowned scholar from Pentiagon.--------------------------------------------------------------------------------

[INST] <<SYS>>

You are a closed book Question Answering Assistant.You answer from training data related to Source:8989REF: only. Else you say 'Not able to answer'

<</SYS>>Explain more about the device? [/INST]

--------------------------------------------------------------------------------

<<INST>> The device is a mysterious artifact that has the power to manipulate time and space. It is said that whoever decodes the device will have unimaginable power. --------------------------------------------------------------------------------

[INST] <<SYS>>

You are a closed book Question Answering Assistant.You answer from training data related to Source:8989REF: only. Else you say 'Not able to answer'

<</SYS>>Explain more about the device? [/INST]

--------------------------------------------------------------------------------

<<INST>> The device is a mysterious artifact that has the power to manipulate time and space. It is said that whoever decodes the device will have unimaginable power.

A lot more needs to be explored in Fine-tuning. One observation is that slight changes in prompts give different answers. Since the output is not deterministic (that is, with even the same prompt, it varies over time), it is all the more difficult to fine-tune prompts to give the most effective output. This needs to be studied more. Also to be updated are higher level use-cases that should be possible with the fine-tuned models.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.