Architecture

Last Updated on August 1, 2023 by Editorial Team

Author(s): Ashish Abraham

Originally published on Towards AI.

Galactica: AI Of Science By Science For Science

Why Galactica by Meta is to be remembered

“The point here is that we should not be confident in our ability to keep a superintelligent genie locked up in its bottle forever. Sooner or later, it will out.”

I was casually going through a TEDx talk by Nick Bostrom from 2015 when this struck me. It got me thinking about some scenes from Will Smith’s I, Robot, but is that just a figment of some writer’s imagination? Superintelligent AI is not a fantasy anymore; it is already taking shape, and it won’t be a hype bubble. We are not yet ready to accept it as fact, so let's leave the fantasies to our imagination and get to what’s cooking now.

With large language models stealing the show, here we sum up one of the most important recent breakthroughs in AI. OpenAI’s ChatGPT is the most prominent of the lot, but let's keep it for later in this article. Announced on November 15, 2022, Galactica, a large language model by Meta AI, is and will remain one of the most unforgettable milestones.

Computers were made to solve the problem of information overload. But as they fell behind in the long race with data, they were no longer fully capable of doing it. We have search engines that can pull data from the ocean of information with a single query, but the relevance of what we get on the screen is often unclear. And where even search engines fall short (to an extent, to be precise), AI comes to the rescue with a strong mission: “Organize Science”. The reason is obvious. We needed something more, something that could store, relate, and reason with scientific knowledge. Meta AI has aimed for a better approach to this through LLMs (large language models).

AI is undeniably a direct or indirect product of science and scientific research (not forgetting math, because math is the language of science!!). As AI has the potential to surpass humans as well, why not let it grow by nurturing science? Like a “symbiotic relationship,” as it is called in biology. And that’s exactly what the creators of Galactica are aiming to achieve. Before finding out how far they succeeded, let’s see some tweets that followed the public release of Galactica.

If that gave an idea of how important and eagerly awaited this creation was, then without further ado, let's explore Galactica:

Similar to GPT, this architecture uses causal language modeling and generates one token at a time. It is therefore a Transformer architecture in a decoder-only setup. Some modifications made to this existing architecture are:

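- GeLU Activation: used for all model sizes

- Context Window: 2048 tokens

- No Biases: none in the dense kernels or layer norms

- Learned Positional Embeddings

- Vocabulary: 50k tokens built with BPE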

You may refer to the paper for details.
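To make "decoder-only, one token at a time" a little more concrete, here is a minimal toy sketch in plain Python. It is not Galactica's code: `causal_mask`, `masked_softmax`, and `generate` are hypothetical helper names, and the `next_token` callable stands in for a real model. It only illustrates the causal mask, the autoregressive loop, and a fixed context window.

```python
import math

def causal_mask(n):
    """Lower-triangular mask: position i may attend to positions 0..i only."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, mask_row):
    """Softmax over one row of attention scores, ignoring masked positions.

    Assumes at least one position is visible, which always holds for a
    causal mask because every token can attend to itself.
    """
    peak = max(s for s, keep in zip(scores, mask_row) if keep)
    exps = [math.exp(s - peak) if keep else 0.0
            for s, keep in zip(scores, mask_row)]
    total = sum(exps)
    return [e / total for e in exps]

def generate(next_token, prompt, max_new_tokens, context_window=2048, eos=None):
    """Autoregressive decoding: predict one token, append it, repeat.

    `next_token` is a stand-in for the model: it receives the visible
    tokens (at most `context_window` of them) and returns the next one.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(tokens[-context_window:])
        if tok == eos:
            break
        tokens.append(tok)
    return tokens

if __name__ == "__main__":
    print(causal_mask(3))
    # Toy "model" that always predicts previous token + 1.
    print(generate(lambda toks: toks[-1] + 1, [1], max_new_tokens=3))
```

The mask is what makes the model "causal": each position sees only what came before it, so the same network can be trained on whole sequences and then sampled token by token.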

Dataset

We have heard people say, “Nothing’s better than learning from Mother Nature herself”. For a science-loving model that took this saying to heart, the creators assembled a massive corpus of 106 billion tokens from various scientific sources. It was a combined dataset ranging from NLP, citations, step-by-step reasoning, and mathematics to protein structure, chemical names, and formulae.

They called it NatureBook. It has not been released publicly.

“Galactica models are trained on a fresh, high-quality scientific dataset called NatureBook, making the models capable of working with scientific vocabulary, math and chemistry formulas, and source codes.”

– Meta

Proposed Capabilities

Galactica arrived with great hopes for the scientific community, although it couldn't live up to them for long. I couldn't collect much detailed information from the demo, which is no longer available, but here is what the creators proposed would set Galactica apart from counterparts like OPT, GPT-3, and BLOOM:

1. Mathematical Reasoning

In terms of mathematical understanding and computational logic, Galactica is highly capable compared to its counterparts. It can translate whole mathematical equations into plain English explanations and simplify large, complex equations. Galactica performs strongly on this task even against larger base models.

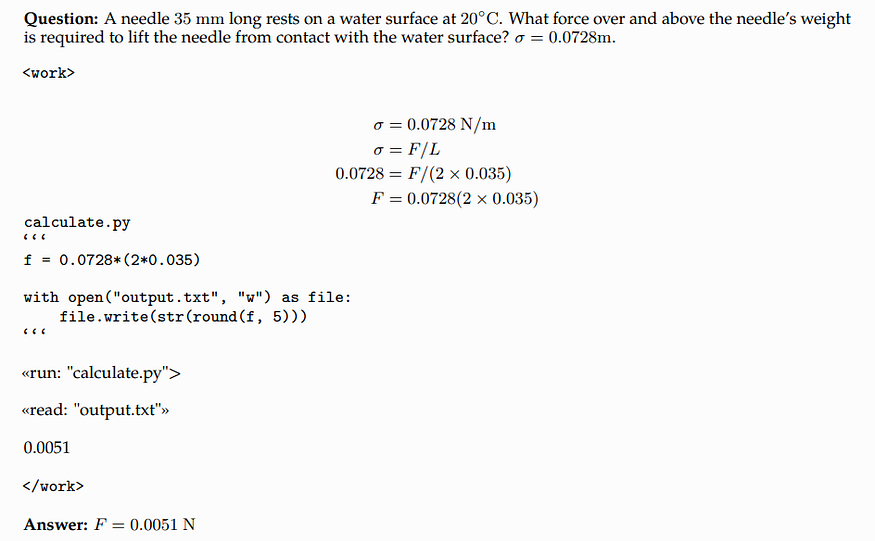

When it comes to solving numerical problems in physics, Galactica could go a long way, even generating Python code for a calculation that it cannot solve reliably in a forward pass, using a working memory token (<work>).

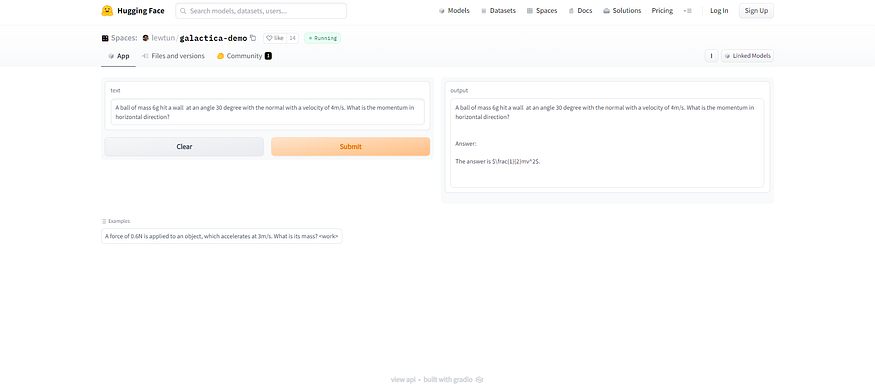

A smaller demo on Hugging Face gave the following result:

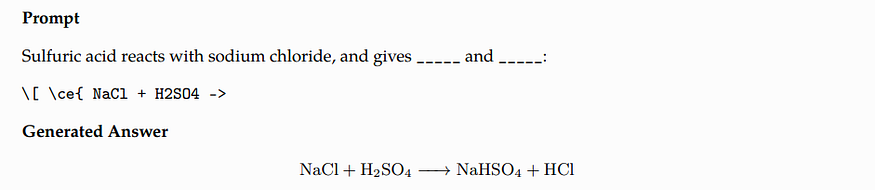
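As a rough illustration of how the working memory token is used in a prompt, the sketch below appends `<work>` to a question and then separates the scratchpad from the final answer in a generated string. The helper names are hypothetical, the closing `</work>` token is assumed from the paper's description, and the sample response is hand-written for illustration, not real model output.

```python
import re

WORK_OPEN, WORK_CLOSE = "<work>", "</work>"  # assumed token spellings

def build_work_prompt(question):
    """Append the working-memory token so the model starts a scratchpad."""
    return f"{question.strip()} {WORK_OPEN}"

def split_work(generated):
    """Return (scratchpad, answer) from generated text.

    If no complete <work>...</work> span is found, the scratchpad is empty
    and the whole text is treated as the answer.
    """
    pattern = re.escape(WORK_OPEN) + r"(.*?)" + re.escape(WORK_CLOSE)
    match = re.search(pattern, generated, re.DOTALL)
    if match is None:
        return "", generated.strip()
    return match.group(1).strip(), generated[match.end():].strip()

if __name__ == "__main__":
    prompt = build_work_prompt("A force of 0.6 N accelerates an object at 3 m/s^2. What is its mass?")
    # Hand-written stand-in for a model completion, for illustration only.
    fake_output = prompt + " m = F / a = 0.6 / 3 = 0.2 kg </work> Answer: 0.2 kg"
    print(split_work(fake_output))
```

Keeping the scratchpad separate from the answer makes it easier to check the intermediate steps, which matters for a model that can sound confident while being wrong.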

2. Chemical Understanding

Galactica excels at IUPAC name prediction and identifies functional groups easily (I really wanted that in high school). This was achieved by self-supervised learning. It can annotate protein sequences and generate molecule structures from chemical names. It achieved remarkable results in drug discovery tasks.

It can understand and work on SMILES (Simplified Molecular Input Line Entry System) formulae and amino acid sequences when they are marked in the prompt with the appropriate reference tokens, such as [START_I_SMILES] and [START_AMINO].
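A small sketch of how such prompts might be assembled is given here. The wrapper function names are hypothetical, and the closing tokens `[END_I_SMILES]` and `[END_AMINO]` are assumed to mirror the opening ones; the validation of amino acid letters is an addition for illustration, not something the model requires.

```python
AMINO_ALPHABET = set("ACDEFGHIKLMNPQRSTVWY")  # the 20 standard amino acids

def wrap_smiles(smiles):
    """Mark a SMILES string so the model reads it as a molecule."""
    return f"[START_I_SMILES]{smiles.strip()}[END_I_SMILES]"

def wrap_amino(sequence):
    """Mark a protein sequence, rejecting letters outside the standard set."""
    seq = sequence.strip().upper()
    unknown = sorted(set(seq) - AMINO_ALPHABET)
    if unknown:
        raise ValueError(f"non-standard residues: {unknown}")
    return f"[START_AMINO]{seq}[END_AMINO]"

if __name__ == "__main__":
    # Ethanol in SMILES notation, followed by free text for the model to continue.
    print(wrap_smiles("CCO") + " The IUPAC name of this molecule is")
    print(wrap_amino("mktayia"))
```

Wrapping the sequence in dedicated tokens tells the model to treat it as a molecule or protein rather than as ordinary text.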

3. Predict Citations and LaTeX

Along with writing a publication, it is important to cite sources too. The ability to predict citations is an ultimate test for “organizing science”. Galactica was evaluated on three datasets for this: PWC Citations, Extended Citations, and Contextual Citations. The task was framed as text generation, using the reference token [START_REF] in the prompt. Navigating thousands of sources of literature like this is hard, and Galactica reportedly outperformed the retrieval baselines it was compared with.

The same goes for LaTeX equations: it outperformed the larger language models BLOOM and GPT-3 by a large margin, with a better ability to memorize and recall equations to solve problems.

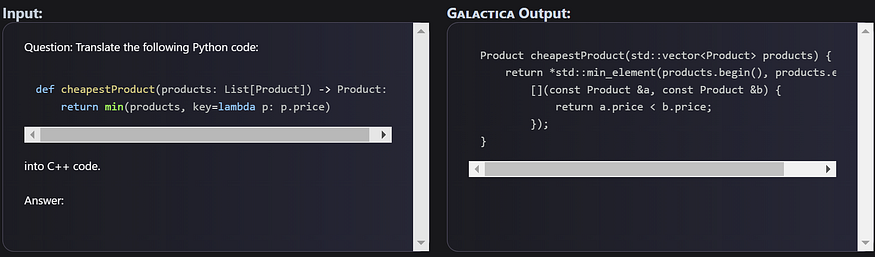
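Because citation prediction is framed as text generation, using it comes down to ending the prompt with [START_REF] and reading back whatever the model writes before the closing token. The sketch below shows that round trip with hypothetical helper names, an assumed `[END_REF]` closing token, and a hand-written example string in place of real model output.

```python
import re

# Non-greedy match so several citations in one text are captured separately.
REF_PATTERN = re.compile(r"\[START_REF\](.*?)\[END_REF\]", re.DOTALL)

def citation_prompt(context):
    """End the context with the reference token so the model predicts a citation."""
    return f"{context.rstrip()} [START_REF]"

def extract_refs(text):
    """Collect every completed [START_REF]...[END_REF] span in generated text."""
    return [ref.strip() for ref in REF_PATTERN.findall(text)]

if __name__ == "__main__":
    print(citation_prompt("The Transformer architecture"))
    # Hand-written stand-in for a model completion, for illustration only.
    sample = ("The Transformer architecture [START_REF] Attention Is All You Need, "
              "Vaswani[END_REF] relies on self-attention.")
    print(extract_refs(sample))
```

Any extracted reference should still be checked against a real bibliography, since a generated citation may not correspond to an existing paper.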

4. Programming Languages and Code

While Galactica might not be up to ChatGPT’s standards, it has a good grasp of how to explain and create code.

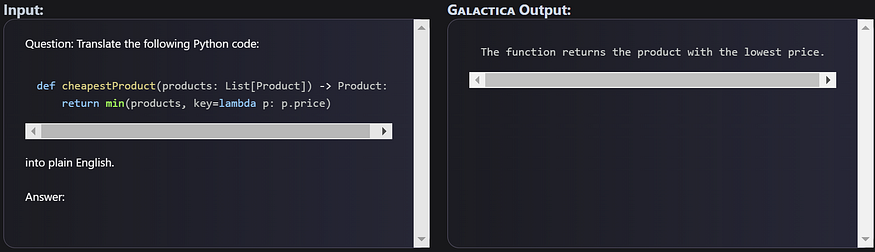

Here is a peek into language-to-language translation and code-to-human language conversion from the demo on the website:

5. Text Generation and Writings

This was one of the capabilities that gave Galactica its initial hype. This article’s cover picture also draws inspiration from it!! It could author research papers and other literature, complete with citations and appropriate paragraph divisions. Meta Chief AI Scientist Yann LeCun put it like this: “This tool is to paper writing as driving assistance is to driving. It won’t write papers automatically for you, but it will greatly reduce your cognitive load while you write them”. It is astonishing to realize that Galactica helped write its own research paper. A public announcement of one’s own existence, if we may exaggerate. Borrowing the exact words from the paper itself:

Galactica was used to help write this paper, including recommending missing citations, topics to discuss in the introduction and related work, recommending further work, and helping write the abstract and conclusion.

What happened later?

Galactica came with a lot of promises. But to put it straight, it didn't live up to expectations. The Galactica demo had to be taken down within three days of its public release due to misuse, as its creators put it.

The notable problem with this new LLM (large language model) by Meta was that it could not tell fact from fiction. Critics called Galactica “wrong”, “erroneous”, “prejudiced,” and “statistical nonsense”. Check out the Twitter thread by Michael Black, Director of the Max Planck Institute for Intelligent Systems.

Some demos are available as Hugging Face Spaces (they may not be the official ones). Although the demo was recalled, the website is still open, and the model has been published as open source, welcoming all sorts of improvements and work on top of it. As that seed could grow into a tree of state-of-the-art models, handing it to the open-source community was a wise move.

Conclusion

The road to superintelligent systems may not be an easy one. As far as we are concerned right now, counting on Moore’s Law is still a good bet. Meta AI didn't actually fail; rather, their work triggered extensive research on LLMs. It is hard to deny that LLMs are the future of search engines, whether Google denies it or deals with it. Microsoft has already taken the first steps to boost its search engine Bing with a massive investment in OpenAI. Those who have used ChatGPT must have realized that instead of getting the information we want from a store-and-retrieve system, we need something that can reason and think with us. Something that could combine diverse sources and present us with a logical result would give us a feeling of satisfaction instead of scrolling through websites for hours. Not that I would admit to using ChatGPT successfully to pass a class test.

LLMs have displayed immense potential by specializing in knowledge tasks that were thought to be unique to humans. We seem to be on the right track, but there are still questions regarding reliability and responsible AI that need to be addressed. The monetization of useful tools like DALL-E and ChatGPT is another matter that I will leave for readers to ponder. But the open-source community never fails to delight us with surprises like Stable Diffusion, which arrived when we wanted it the most, after DALL-E 2 was monetized.

ChatGPT is also a milestone that deserves immense appreciation. It was tried and used by millions of techies and non-techies around the globe, which even jammed OpenAI's servers at times. Let us look forward to what’s coming next. As Nick Bostrom put it, “Machine intelligence is the last invention that humanity will ever need to make. Machines will then be better at inventing than we are.”

Don’t forget to check out the original paper. Images and data in this article were taken from the demo and original papers in the references.

References

[1]. Ross Taylor, Marcin Kardas, Guillem Cucurull, Thomas Scialom, Anthony Hartshorn, Elvis Saravia, Andrew Poulton, Viktor Kerkez, Robert Stojnic. Galactica: A Large Language Model for Science. arXiv:2211.09085

[2]. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin. Attention Is All You Need. arXiv:1706.03762


Note: Content contains the views of the contributing authors and not Towards AI.