This AI newsletter is all you need #80

Last Updated on January 3, 2024 by Editorial Team

Author(s): Towards AI Editorial Team

Originally published on Towards AI.

What happened this week in AI by Louie

After a year of near-weekly significant model releases and AI progress in terms of capabilities and adoption, the year finished with a focus on the legal consequences of this AI adoption. The New York Times has sued Microsoft and OpenAI over copyright infringement, alleging that the companies are liable for substantial financial damages, potentially in the billions of dollars. The New York Times is seeking compensation and the destruction of any chatbot models and data that may have used its copyrighted material.

This has quickly become the most prominent legal case in the ongoing debate on the intersection of AI technology and intellectual property rights. Major AI breakthroughs over the past two years have been driven, in particular, by transformer-based large language models, diffusion models, and, more recently, graph neural networks. Training these models can be extremely data-heavy and generally uses huge datasets scraped from the internet, often containing copyrighted content. While LLMs generally don’t memorize their entire training set, content that is repeated often in the data is sometimes memorized verbatim. The model’s outputs are also very open-ended and can be used to create content that reproduces copyrighted material or is inspired by it.

The existing legal system was not designed for Generative AI content, and opinions vary strongly on how it should be adapted to deal with it. Training and inference of LLMs do not copy and reuse content in a traditional sense. Still, neither is it equivalent to a human reading and gaining “inspiration” from other people’s content. Content on the internet has also generally been available to big tech companies’ web crawlers for indexing and search purposes; however, use for model training purposes is clearly not an equivalent use case. These distinctions are open to interpretation, which has led to intense debate, and we expect regulations and legal decisions to vary by jurisdiction. For example, earlier this year, Japan confirmed it would not enforce copyrights on data used in AI training.

Why should you care?

The resolution of these AI copyright questions can have major consequences for LLMs’ quality, cost, and pace of progress going forward, as well as for the livelihoods of existing copyright owners and human content creators. We think it is important that content that took time, money, and expertise to produce can still get rewarded in a world of Generative AI content. But there are also many ways new copyright laws and legal interpretations could be overly cumbersome and hold back AI capabilities unnecessarily. The difficulty is getting the balance right. In 2024, we should start to see how this plays out.

– Louie Peters — Towards AI Co-founder and CEO

Hottest News

The New York Times sued OpenAI and Microsoft, accusing them of using millions of the newspaper’s articles without permission to help train chatbots to provide information to readers. The newspaper’s complaint accused OpenAI and Microsoft of trying to “free-ride on The Times’s massive investment in its journalism.”

2. Japan Goes All In: Copyright Doesn’t Apply to AI Training

Japan’s government recently reaffirmed that it will not enforce copyrights on data used in AI training. The policy allows AI to use any data “regardless of whether it is for non-profit or commercial purposes, whether it is an act other than reproduction, or whether it is content obtained from illegal sites or otherwise.”

3. AI-Created “Virtual Influencers” Are Stealing Business From Humans

Virtual influencers are becoming popular in marketing for their controlled branding and predictability. Despite ethical and transparency concerns, including issues of sexualization similar to human influencers, their distinct narratives drive brand partnerships as the industry navigates consumer trust and ethical standards.

4. Nvidia Releases Slower, Less Powerful AI Chip for China

Nvidia has unveiled a new gaming processor that can be sold in China while complying with US export rules. However, the new version delivers around 11% less performance than the original 4090 chip, first released in late 2022. It also has fewer of the processing subunits that help accelerate AI workloads.

5. GPT and Other AI Models Can’t Analyze an SEC Filing, Researchers Find

Researchers from the startup Patronus AI found that large language models similar to the one behind ChatGPT frequently fail to answer questions derived from SEC filings. Even the best-performing configuration they tested reached only 79% accuracy, with models often answering incorrectly or declining to answer. To measure progress on financial question answering, they created FinanceBench, a test set built from SEC filings.

Five 5-minute reads/videos to keep you learning

A lot happened in 2023 in big tech and the AI research community. This video is a recap of the year covering developments like GPT-4 and GPT-4 Vision from OpenAI, Meta’s Llama 2, Google’s Bard and Gemini, Stability AI’s Stable Video Diffusion, Grok, and more.

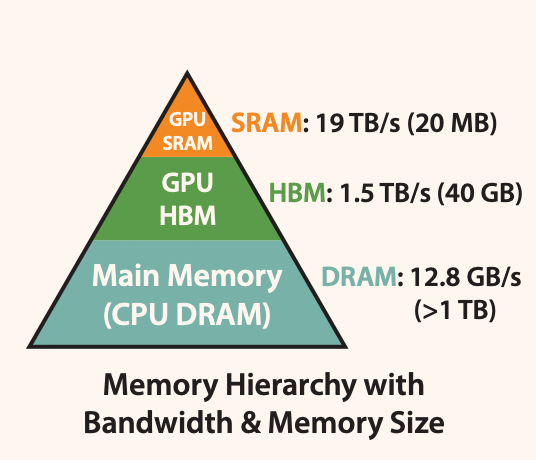

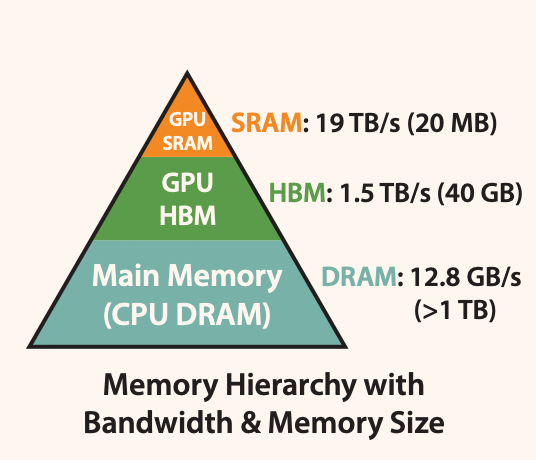

2. Flash Attention: Underlying Principles Explained

Flash Attention makes Transformer attention faster and less memory-hungry. This article explains its underlying principles and illustrates how it achieves accelerated computation and memory savings without compromising the accuracy of attention.
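One idea commonly associated with Flash Attention is computing softmax block by block while keeping only a running maximum and a running sum, so the full row of attention scores never has to be held at once. The sketch below illustrates that idea for a single query with scalar values in plain Python. It is a toy under stated assumptions, not the actual GPU kernel and not necessarily how the linked article presents it; the function names and `block_size` parameter are hypothetical.

```python
import math

def softmax_weighted_sum(scores, values):
    """Reference: softmax over all scores at once, then a weighted sum of values."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return sum(e * v for e, v in zip(exps, values)) / total

def blockwise_weighted_sum(scores, values, block_size=2):
    """Same result, but scores are consumed one block at a time (hypothetical helper)."""
    running_max = float("-inf")
    running_sum = 0.0  # sum of exp(score - running_max) over blocks seen so far
    running_out = 0.0  # unnormalized weighted sum of values seen so far
    for start in range(0, len(scores), block_size):
        s_blk = scores[start:start + block_size]
        v_blk = values[start:start + block_size]
        new_max = max(running_max, max(s_blk))
        # Rescale the earlier accumulators so they are expressed relative to new_max.
        scale = math.exp(running_max - new_max)
        running_sum *= scale
        running_out *= scale
        for s, v in zip(s_blk, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            running_out += w * v
        running_max = new_max
    return running_out / running_sum

scores = [0.5, 2.0, -1.0, 3.5, 0.0]
values = [1.0, 2.0, 3.0, 4.0, 5.0]
print(softmax_weighted_sum(scores, values))
print(blockwise_weighted_sum(scores, values))
```

The two printed numbers should agree up to floating-point rounding, which is the sense in which the blockwise computation does not compromise accuracy.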

LangChain’s analysis reveals growing retrieval integration in LLMs, with OpenAI and Hugging Face leading the field. It highlights the significance of specialized databases and embedding generation, underscoring the industry’s evolving preferences and technological advancements.

4. 2023, the Year of Open LLMs

2023 saw increased interest in open LLMs, with a shift toward smaller, more efficient models such as LLaMA. The year marked the prevalence of decoder-only architectures and conversational AI, with fine-tuning methods like Instruction Fine-Tuning and RLHF becoming standard for model customization.

Recent advances in AI have enabled the use of sophisticated models like ChatGPT on personal devices. Companies such as Mistral are creating open-source AI that can be tailored to specific user needs, which democratizes AI technology beyond large tech firms.

Repositories & Tools

- Unum is a pocket-sized multimodal AI for content understanding and generation across multilingual texts.

- The TinyLlama project is an open endeavor to pre-train a 1.1B Llama model on 3 trillion tokens.

- Genie AI is an AI-driven chat for data interaction.

- UserWise helps to collect, analyze & optimize feedback to enhance customer satisfaction & make data-driven decisions.

Top Papers of The Week

The study finds that, under a fixed compute budget, LLMs (up to 9 billion parameters) gain little from repeating training data beyond four epochs, and that adding further compute brings diminishing returns. Data filtering proves more advantageous for noisy datasets.

2. Beyond Chinchilla-Optimal: Accounting for Inference in Language Model Scaling Laws

In this paper, researchers modify the Chinchilla scaling laws to calculate the optimal LLM parameter count and pre-training data size for training and deploying a model of a given quality under a given inference demand.
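To make the trade-off concrete, here is a minimal sketch of lifetime compute accounting. It assumes the widely used approximations of about 6 FLOPs per parameter per training token and about 2 FLOPs per parameter per inference token. The two model configurations are hypothetical and are simply assumed to reach similar quality; they are not results from the paper.

```python
def training_flops(params, train_tokens):
    # Assumed approximation: ~6 FLOPs per parameter per training token.
    return 6 * params * train_tokens

def inference_flops(params, inference_tokens):
    # Assumed approximation: ~2 FLOPs per parameter per inference token.
    return 2 * params * inference_tokens

def lifetime_flops(params, train_tokens, inference_tokens):
    return training_flops(params, train_tokens) + inference_flops(params, inference_tokens)

def break_even_inference_tokens(big, small):
    """Inference volume at which the smaller, longer-trained model becomes cheaper overall."""
    extra_training = training_flops(**small) - training_flops(**big)
    saving_per_token = 2 * (big["params"] - small["params"])
    return extra_training / saving_per_token

# Hypothetical configurations, ASSUMED to reach similar quality (illustration only).
big = {"params": 70e9, "train_tokens": 1.4e12}
small = {"params": 35e9, "train_tokens": 4.0e12}

for demand in (1e12, 5e12):
    cheaper = "small" if lifetime_flops(**small, inference_tokens=demand) < lifetime_flops(**big, inference_tokens=demand) else "big"
    print(f"inference tokens = {demand:.0e}: cheaper overall = {cheaper}")
print(f"break-even inference tokens: {break_even_inference_tokens(big, small):.2e}")
```

Under these assumptions, the larger model is cheaper when inference demand is low, while the smaller model trained on more tokens wins once demand passes the break-even point.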

3. WaveCoder: Widespread And Versatile Enhanced Instruction Tuning with Refined Data Generation

WaveCoder is a fine-tuned Code Language Model that improves LLMs’ instruction tuning and generalization capabilities by utilizing a Generator-Discriminator framework for generating high-quality, non-duplicated instruction data from open-source code. It outperforms other open-source models, with a dataset (CodeOcean) containing 20K instances across four code-related tasks, underscoring the importance of refined data for model enhancement.

4. Generative Multimodal Models are In-Context Learners

The paper introduces Emu2, a generative multimodal model with 37 billion parameters trained on large-scale multimodal sequences with a unified autoregressive objective. Emu2 exhibits strong multimodal in-context learning abilities.

5. Improving Text Embeddings with Large Language Models

This paper introduces a novel and simple method for obtaining high-quality text embeddings using only synthetic data and fewer than 1k training steps. It leverages proprietary LLMs to generate diverse synthetic data for hundreds of thousands of text embedding tasks across nearly 100 languages.

Quick Links

1. Harvey, an AI platform designed for legal professionals, has successfully raised $80 million in Series B funding. This influx elevates Harvey’s total funding to above $100 million and its valuation to $715 million.

2. Shield AI, a defense technology company, raised an additional $100M in Series F funding and $200M in debt from Hercules Capital.

3. Baidu’s ChatGPT-like Ernie Bot has more than 100 million users, Wang Haifeng, chief technology officer of the Chinese internet company, said.

Who’s Hiring in AI

Sr. DevOps Engineer — Tech Lead @AssemblyAI (Remote)

Data Engineer @Rewiring America (Remote)

Senior Solutions Architect — GenAI @Amazon (Remote)

Sr. Software Engineer-Linux @Stellar Cyber (Remote)

Senior Machine Learning Engineer @Employment Hero (Remote)

DevOps Manager — Big Data and AI @NVIDIA (Remote)

Senior Machine Learning Engineer @Trustly (Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

If you are preparing your next machine learning interview, don’t hesitate to check out our leading interview preparation website, confetti!

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.