This AI newsletter is all you need #76

Last Updated on December 11, 2023 by Editorial Team

Author(s): Towards AI Editorial Team

Originally published on Towards AI.

What happened this week in AI by Louie

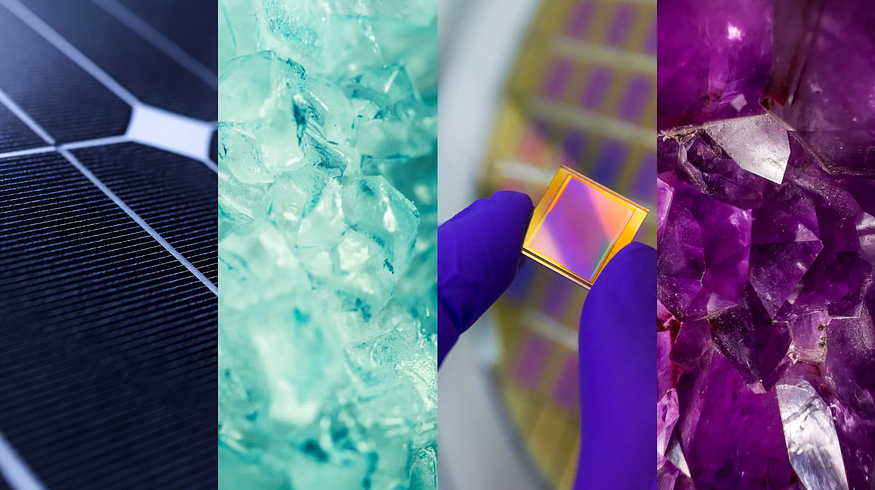

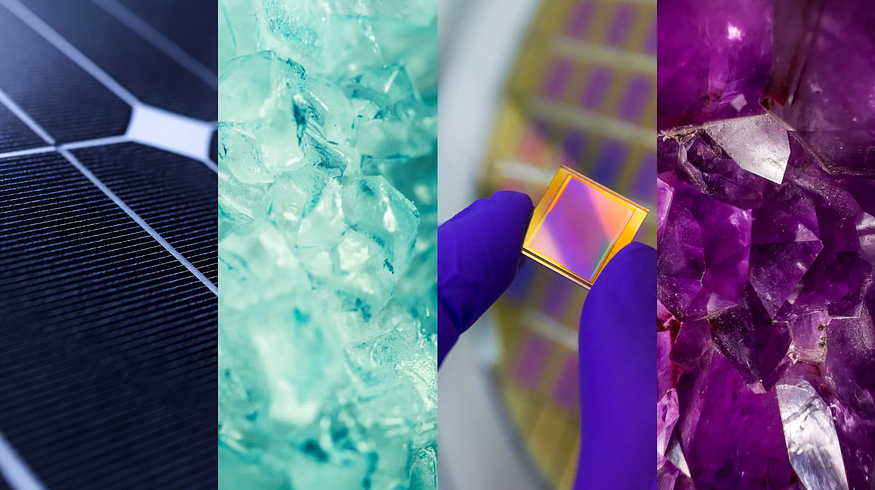

This week, we were focused on significant AI developments beyond the realm of transformers and large language models (LLMs). While the recent momentum of new video generation diffusion-based model releases continued, what excited us most was DeepMind’s latest materials model, GNoME.

GNoME is a new large-scale graph neural network designed to discover new crystal structures, dramatically increasing the speed and efficiency of materials discovery. DeepMind announced the model’s results and made them available this week. Incredibly, this has increased the number of likely stable materials known to humanity roughly 10x in a single week! GNoME’s discovery of 2.2 million materials would be equivalent to about 800 years’ worth of knowledge. Of its 2.2 million predictions, 380,000 are estimated to be stable, making them promising candidates for experimental synthesis. Despite this huge breakthrough in human knowledge, there is still a bottleneck in the number of labs and experts available to produce these materials and test them for useful properties. Encouragingly, a second paper was also released demonstrating how AI could be used to help produce these materials.

Why should you care?

Human history has regularly been segmented and described by the new materials discovered and used. Even today, many new technologies can be driven by the discovery of new materials, from clean energy to computer chips, fusion power, and even room-temperature superconductors. We think there is a high chance that a game-changing new material is contained within DeepMind’s new data release; however, it will still take a lot of time to discover which new materials have useful properties and can be manufactured affordably at scale. More broadly, the success of scaling up graph neural networks here suggests the recent AI GPU buildout is likely to lead to breakthroughs beyond the world of scaling LLMs.

– Louie Peters — Towards AI Co-founder and CEO

Hottest News

Meta introduced a model family that enables end-to-end expressive and multilingual translations, SeamlessM4T v2. Seamless is a system that revolutionizes automatic speech translation. This advanced model translates across 76 languages and preserves the speaker’s unique vocal style and prosody, making conversations more natural.

2. Introducing SDXL Turbo: A Real-Time Text-to-Image Generation Model

Stability AI introduces SDXL Turbo, a new text-to-image model that uses adversarial diffusion distillation (ADD) to generate high-quality images in a single step. It can create a 512 x 512 image in just over 200 milliseconds.

3. Pika Wows in Debut as AI Video Generator Takes Aim at Tech Giants

Pika Labs has released Pika 1.0, an impressive AI video generation tool. It has advanced features like Text-to-Video and Image-to-Video conversion. The company has also raised $55 million in funding to compete against giants Meta, Adobe, and Stability AI.

4. Starling-7B: Increasing LLM Helpfulness & Harmlessness with RLAIF

Berkeley unveiled Starling-7B, a powerful language model trained with Reinforcement Learning from AI Feedback (RLAIF). It harnesses the power of Berkeley’s new GPT-4-labeled ranking dataset, Nectar. The model outperforms every model to date on MT-Bench except for OpenAI’s GPT-4 and GPT-4 Turbo.

5. Amazon Unveils New Chips for Training and Running AI Models

AWS unveiled its next generation of AI chips, Graviton4 and Trainium2, for model training and inference. Trainium2 is designed to deliver up to 4x better performance and 2x better energy efficiency, and Graviton4 provides up to 30% better compute performance, 50% more cores, and 75% more memory bandwidth than the previous generation.

This week saw the release of several new generative models for image, audio, and video generation. Which one seems the most promising to you and why? Share it in the comments.

Five 5-minute reads/videos to keep you learning

This article showcases visual and interactive representations of renowned Transformer architectures, including nanoGPT, GPT-2, and GPT-3. It provides clear visuals and illustrates the connections between all the blocks.

This video guides developers and AI enthusiasts on improving LLMs, offering methods for both minor and significant advancements. It also helps you choose between training from scratch, fine-tuning, (advanced) prompt engineering, and Retrieval Augmented Generation (RAG) with Activeloop’s Deep Memory.

3. Looking Back at a Transformative Year for AI

It has been a year since OpenAI quietly launched ChatGPT. This article traces the timeline of the AI evolution in the past year and how these technologies may upend creative and knowledge work as we know it.

4. Why Do AI Wrappers Get a Bad (W)rap?

AI wrappers are practical tools that leverage AI APIs to generate output and have proven to be financially rewarding for creators. Examples like Formula Bot and PhotoAI have annual revenues ranging from $200k to $900k.

5. 5 Ways To Leverage AI in Tech

Prasad Ramakrishnan, CIO of Freshworks, highlights several practical AI use cases for startups. This article explores five ways organizations leverage AI for effective problem-solving, from improving user experience to streamlining onboarding processes and optimizing data platforms.

Repositories & Tools

- Whisper Zero by Gladia is a complete rework of Whisper ASR to eliminate hallucinations.

- Taipy is an open-source Python library for building your web application front-end & back-end.

- GPT-fast is a simple and efficient PyTorch-native implementation of transformer text generation in under 1,000 lines of Python.

- GitBook is a technical knowledge management platform that centralizes the knowledge base for teams.

Top Papers of The Week

GPT-4 has surpassed Med-PaLM 2 in answering medical questions using a new Medprompt methodology. By leveraging three advanced prompting strategies, GPT-4 achieved a remarkable 90.2% accuracy rate on the MedQA dataset.

2. Merlin: Empowering Multimodal LLMs with Foresight Minds

“Merlin” is a new Multimodal LLM (MLLM) that demonstrates enhanced visual comprehension, future reasoning, and multi-image input analysis. The researchers propose adding future modeling to MLLMs to improve their understanding of fundamental principles and subjects’ intentions. To do so, they use two techniques inspired by existing learning paradigms: Foresight Pre-Training (FPT) and Foresight Instruction-Tuning (FIT).

3. Dolphins: Multimodal Language Model for Driving

Dolphins is a vision-language model designed as a conversational driving assistant. Trained using video data, text instructions, and historical control signals, it offers a comprehensive understanding of difficult driving scenarios for autonomous vehicles.

4. Diffusion Models Without Attention

This paper introduced the Diffusion State Space Model (DiffuSSM), an architecture that supplants attention mechanisms with a more scalable state space model backbone. This approach effectively handles higher resolutions without global compression, thus preserving detailed image representation throughout the diffusion process.

5. The Rise and Potential of Large Language Model-Based Agents: A Survey

This is a comprehensive survey of LLM-based agents. It traces the concept of agents from its philosophical origins to its development in AI and explains why LLMs are suitable foundations for agents. It also presents a general framework for LLM-based agents, comprising three main components: brain, perception, and action.
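
To make the brain, perception, and action split concrete, here is a toy agent loop in Python. It is a minimal sketch under our own assumptions, not the survey’s implementation: the class names `Perception`, `Brain`, `Action`, and `Agent` are hypothetical, and the “brain” is a hard-coded rule standing in for an LLM call.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Perception:
    """Turns raw environment input into text the brain can read."""

    def observe(self, raw: str) -> str:
        return raw.strip().lower()


@dataclass
class Brain:
    """Stand-in for an LLM: stores memory and picks the next action.

    Assumption: a real agent would call a language model here.
    """

    memory: List[str] = field(default_factory=list)

    def decide(self, observation: str) -> str:
        self.memory.append(observation)
        if "temperature" in observation:
            return "lookup_weather"
        return "reply"


@dataclass
class Action:
    """Executes the chosen action (both 'tools' are hypothetical)."""

    def act(self, decision: str, observation: str) -> str:
        if decision == "lookup_weather":
            return "tool:weather"
        return f"echo:{observation}"


@dataclass
class Agent:
    perception: Perception = field(default_factory=Perception)
    brain: Brain = field(default_factory=Brain)
    action: Action = field(default_factory=Action)

    def step(self, raw_input: str) -> str:
        # perceive -> think -> act
        observation = self.perception.observe(raw_input)
        decision = self.brain.decide(observation)
        return self.action.act(decision, observation)


agent = Agent()
print(agent.step("  What is the TEMPERATURE in Paris? "))
print(agent.step("Hello"))
```

Each call to `step` runs one perceive, think, act cycle. In a real system, the rule inside `Brain.decide` would be replaced by a model call, and `Action.act` would dispatch to actual tools.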

Quick Links

- Alibaba Cloud introduces the Tongyi Qianwen AI language model with 72 billion parameters. Qwen-72B competes with OpenAI’s ChatGPT and excels in English, Chinese, math, and coding.

- Nvidia’s CEO Jensen Huang led the company’s AI growth, resulting in a staggering $200B increase in value. With a strong focus on AI and its applications in various industries, Nvidia has surpassed major companies like Walmart.

- Google is responding to pressure from generative AI tools and legal battles by making changes to its search experience. They are testing a “Notes” feature for public comments on search results and introducing a “Follow” option, allowing users to subscribe to specific search topics.

Who’s Hiring in AI

Applied AI Scientist @Gusto, Inc. (San Francisco, CA, USA)

Senior LLM Engineer @RYTE Corporation (Paris, France/Freelancer)

Machine Learning Engineer @LiveChat (Remote)

Application Developer — Expert — K0714 @TLA-LLC (Virginia, USA)

Data Analyst @Empowerly (Remote)

Consultant (Data & Process Analytics) @Celonis (Denmark)

QA Engineering Manager @Brightflag (Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

If you are preparing your next machine learning interview, don’t hesitate to check out our leading interview preparation website, confetti!

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.

Join over 80,000 subscribers and data leaders on the AI newsletter and keep up to date with the latest developments in AI, from research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Note: Content contains the views of the contributing authors and not Towards AI.