PowerInfer: 11x Speed up LLaMA II Inference On a Local GPU

Last Updated on December 21, 2023 by Editorial Team

Author(s): Dr. Mandar Karhade, MD. PhD.

Originally published on Towards AI.

Some neurons are HOT! Some are cold! A clever way of using a GPU-CPU hybrid interface to achieve impressive speeds!

In the last article, we saw that a clever compiler, quantization, Speculative decoding, and tensor parallelism implemented by Pytorch II can lead to a significant boost in inference performance.

There are many ways to run, here is a quick overview

pub.towardsai.net

Today we will discuss PowerInfer. Another clever way of distributing the workload between CPU and GPU in a way to speed up most of the local inference workloads.

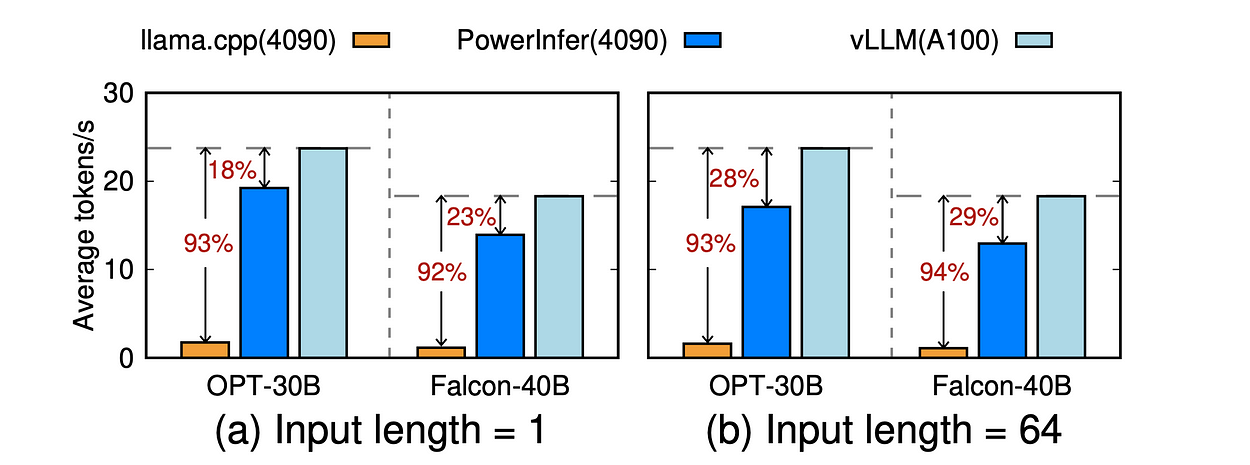

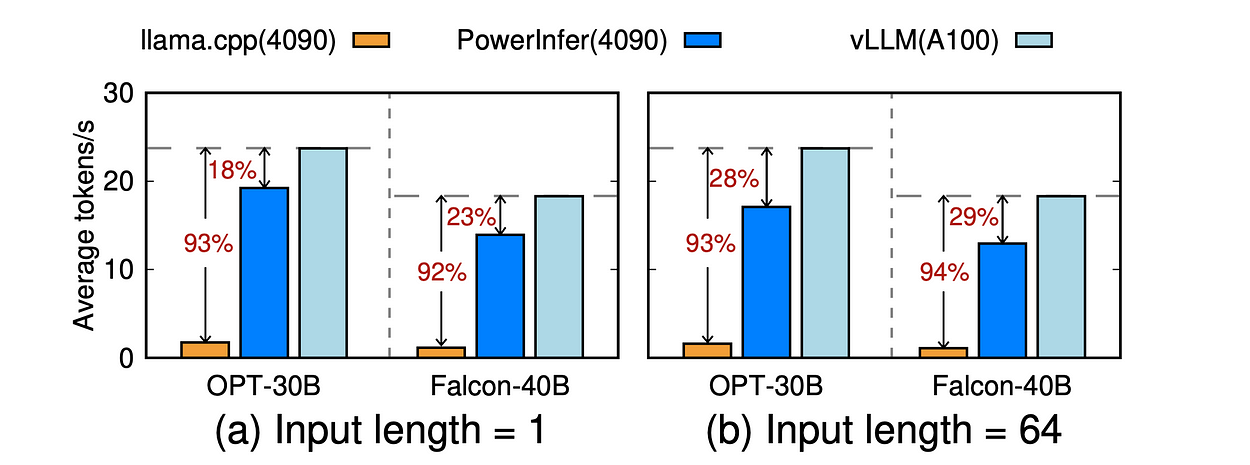

The key underlying the design of PowerInfer is exploiting the high locality inherent in LLM inference, characterized by a power-law distribution in neuron activation. This distribution indicates that a small subset of neurons, termed hot neurons, are consistently activated across inputs, while the majority, cold neurons, vary based on specific inputs. PowerInfer exploits such an insight to design a GPU-CPU hybrid inference engine. Hot-activated neurons are preloaded onto the GPU for fast access, while cold-activated neurons are computed on the CPU, thus significantly reducing GPU memory demands and CPU-GPU data transfers. PowerInfer further integrates adaptive predictors and neuron-aware sparse operators, optimizing the efficiency of neuron activation and computational sparsity.

Evaluation shows that PowerInfer attains an average token generation rate of 13.20 tokens/s, with a peak of 29.08 tokens/s, across various LLMs (including OPT-175B) on a single… Read the full blog for free on Medium.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.