Deep Dive Into Neural Networks

Last Updated on March 7, 2021 by Editorial Team

Author(s): Amit Griyaghey

Deep Learning

Apart from well-known applications such as image and voice recognition, neural networks are used in many contexts to find complex patterns in very large data sets. Examples include an e-mail engine suggesting sentence completions and a machine translating one language into another. Artificial neural networks are used to solve such complex problems.

Topics covered in this article:

- Introduction to neural nets

- Purpose of using neural network

- Neural network architecture

- Evaluation of neurons

- Activation functions and a few common types

- Backpropagation for estimating weights and biases

Introduction

An Artificial Neural Network (ANN) is often called a black-box technique because it can be hard to understand what it is doing. At its core, it is a sequence of mathematical calculations, which is best visualized as a network of connected nodes.

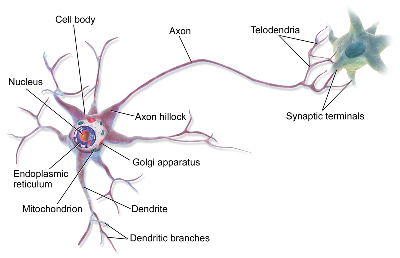

ANNs are loosely inspired by the biological neural networks that make up the human brain. The human brain uses a network of interconnected cells called neurons to provide learning capabilities; likewise, an ANN uses a network of artificial neurons, or nodes, to solve challenging learning problems.

The human brain's neural network, showing information flowing in and out through neurons

Artificial Neural Network

Why learn Neural Networks?

- Ability to learn: Neural networks can learn to perform their function on their own from examples.

- Ability to generalize: They can produce outputs for inputs they were not explicitly taught how to handle.

- Adaptivity: They can be retrained to adapt to changing environmental conditions.

Neural network Architecture

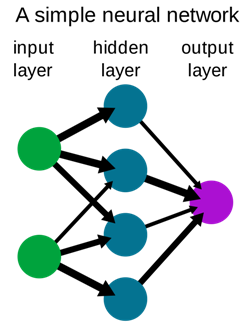

A neural network consists of three types of layers, shown in the figure above:

- Input layer

- Hidden layer

- Output layer

In practice, neural networks have more than one input node and generally more than one output node. Between these two sets of nodes lies a spider web of connections linking each layer of nodes to the next; the layers in between are the hidden layers. When we build a neural network, one of the first things to decide is how many hidden layers we want.
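To make the layered structure concrete, here is a minimal sketch in plain Python of values flowing from an input layer through one hidden layer to an output layer. The layer sizes, the weight values, and the `layer_forward` helper are illustrative assumptions, not taken from the figure; biases and activation functions, which the article covers later, are deliberately left out.

```python
def layer_forward(inputs, weights):
    """Return one weighted sum per node; `weights` holds one row per node."""
    return [
        sum(w * x for w, x in zip(node_weights, inputs))
        for node_weights in weights
    ]

# Hypothetical sizes: 2 input nodes -> 3 hidden nodes -> 1 output node.
inputs = [1.0, 2.0]
hidden_weights = [[0.5, -0.5], [1.0, 0.0], [0.0, 1.0]]  # 3 rows, 2 weights each
output_weights = [[1.0, 2.0, -1.0]]                     # 1 row, 3 weights

hidden_values = layer_forward(inputs, hidden_weights)
output_values = layer_forward(hidden_values, output_weights)
print(hidden_values, output_values)
```

Each row of weights corresponds to one node in the receiving layer, so adding a hidden layer in this sketch simply means adding another weight table and another call to `layer_forward`.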

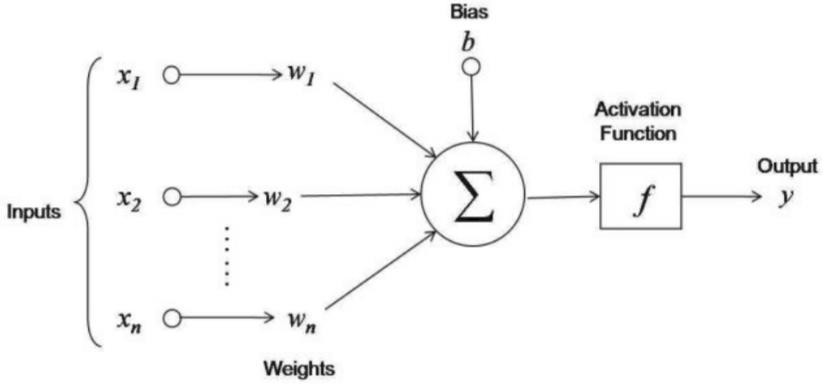

A neuron is an information-processing unit that is fundamental to the operation of a neural network. The neuron model has three basic elements:

- Synaptic weights

- Combination (addition) function

- Activation function

In addition, an external input, the bias, raises or lowers the net input to the activation function.

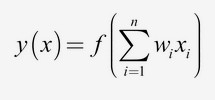

Evaluation of neuron

- w – weights

- n – number of inputs

- xi – input

- f(x) – activation function

- y(x) – output

This evaluation can be understood through a simple example. Say the input node holds a value x1. We pass this value from the input node to the hidden layer through the synapses of the network, and each synapse, or connection, has a synaptic weight w. The input value x1 is multiplied by its synaptic weight and fed into the hidden-layer node, where it is added to the other weighted inputs and the bias. The activation function f(x) is then applied to this sum to produce the node's output.
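The evaluation of a single neuron can be sketched in a few lines of Python: multiply each input by its weight, add the results together with the bias, and apply the activation function. The function name `neuron_output` and the numbers below are hypothetical, and the sigmoid used here is only one possible choice of activation.

```python
import math

def sigmoid(z):
    """One possible activation function; squashes z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation):
    """Compute y = f(w1*x1 + ... + wn*xn + bias) for one neuron."""
    net_input = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(net_input)

# Illustrative values only: two inputs, two weights, zero bias.
y = neuron_output(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0,
                  activation=sigmoid)
print(y)
```

Passing the activation in as an argument keeps the weighted-sum step separate from the choice of activation, which mirrors the three elements of the neuron model.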

Activation Function

Once the inputs have been combined into a linear combination, we apply a function to that sum to obtain the node's output. This function is called the activation function, and it is what lets the network model non-linear relationships. When we build a neural network, we have to decide which activation function to use. Several types of activation function can be used:

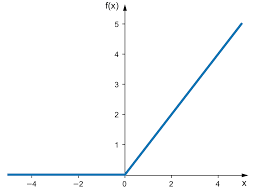

- ReLU (Rectified Linear Unit): Returns zero for negative inputs and passes positive inputs through unchanged, using the function y = max(z, 0), where z is the weighted input received by the hidden-layer node.

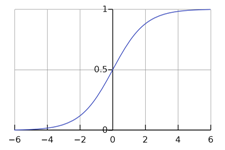

- Sigmoid function: The output values range between zero and one. Evaluated by f(z) = 1 / (1 + e^(-z)).

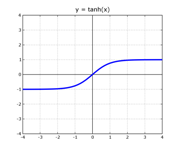

- Hyperbolic tangent function: Also called the tanh function, it is a scaled and shifted version of the sigmoid function with a wider range of outputs, from -1 to +1.

Among these activation functions, ReLU is the most widely used. The main purpose of any of them is to transform a unit's inputs into a useful output.
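The three activation functions described above can be written directly in Python. This is a minimal sketch using only the standard library; the function names are chosen here for illustration.

```python
import math

def relu(z):
    """Zero for negative inputs, the input itself for positive inputs."""
    return max(z, 0.0)

def sigmoid(z):
    """Maps any real z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Maps any real z into the open interval (-1, 1)."""
    return math.tanh(z)

# Compare the three functions on a few sample inputs.
for z in (-2.0, 0.0, 2.0):
    print(z, relu(z), sigmoid(z), tanh(z))
```

The relationship between tanh and sigmoid can be checked numerically: `tanh(z)` equals `2 * sigmoid(2 * z) - 1`, which is the sense in which tanh is a rescaled and shifted sigmoid.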

Back Propagation

Neural networks start with identical activation functions, but the different weights and biases on the connections flip, stretch, and shift those activation functions into new shapes that together fit the data. Backpropagation estimates these weights and biases in two steps:

- Use the chain rule to calculate the derivatives of the error with respect to each weight and bias, then

- Plug those derivatives into gradient descent to optimize the parameters.
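The two steps can be illustrated on the smallest possible case: one neuron with one weight and one bias. The sketch below makes several assumptions that are not in the article: a squared-error loss, a sigmoid activation, a single training example, and made-up values for the learning rate and step count. `train_single_neuron` is a hypothetical helper, not a library function.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_single_neuron(x, target, w=0.0, b=0.0, learning_rate=0.5, steps=2000):
    """Fit y = sigmoid(w*x + b) to one (x, target) pair with gradient descent."""
    for _ in range(steps):
        # Forward pass: weighted input, then activation.
        z = w * x + b
        y = sigmoid(z)

        # Step 1: chain rule. loss = (y - target)**2
        dloss_dy = 2.0 * (y - target)   # derivative of the loss w.r.t. the output
        dy_dz = y * (1.0 - y)           # derivative of sigmoid w.r.t. its input
        dloss_dz = dloss_dy * dy_dz
        dloss_dw = dloss_dz * x         # because dz/dw = x
        dloss_db = dloss_dz             # because dz/db = 1

        # Step 2: gradient descent. Move each parameter against its derivative.
        w -= learning_rate * dloss_dw
        b -= learning_rate * dloss_db
    return w, b

w, b = train_single_neuron(x=1.0, target=0.8)
prediction = sigmoid(w * 1.0 + b)
print(w, b, prediction)
```

In a full network the same chain-rule products are extended backwards through every layer, which is where the name backpropagation comes from; this sketch only shows the idea for a single node.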

Uses of Neural Networks

- Voice recognition

- Facial recognition

- Fraud detection

- Sentiment Analysis

- Image search in social media

Conclusion

This article covered the concepts behind an artificial neural network, its activation function types, its uses, and how it estimates its parameter values. It also explained briefly how it resembles the neural system of the human brain.

Thanks for reading!


Deep Dive Into Neural Networks was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI
